I (T.D.) am very happy to host here today a guest post by Daniel Hoak, a member of the LIGO collaboration, who participated in the discovery of gravitational waves that made headlines one week ago in the world media. Daniel earned his PhD in 2015 with the LIGO collaboration, and is currently working at the Virgo detector outside of Pisa. Daniel's picture is on the left.

By now the adrenaline from the announcement of the first direct detection of gravitational waves has subsided, and we can discuss the particulars of the observation. Here, I will try to describe the state of the detectors, answer some questions about the analysis methods, and highlight a few points about the detection that might have gotten lost in the excitement.

In 2010, following a series of observing runs, the LIGO instruments shut down for a complete upgrade. This upgrade has been a success, and during the summer of last year we began to prepare for a three-month observing run in the fall.

Our observing runs begin with a "soft start", a period of a few weeks reserved for calibration measurements and other prep work. In the strictest sense, when the event arrived we had not entered the official observing period, but the online calibration had been finalized, and the detector configuration was frozen. If the signal had arrived two weeks earlier, there might have been a chaotic rush to characterize the detectors and document their configuration. But by September 14 this was essentially complete.

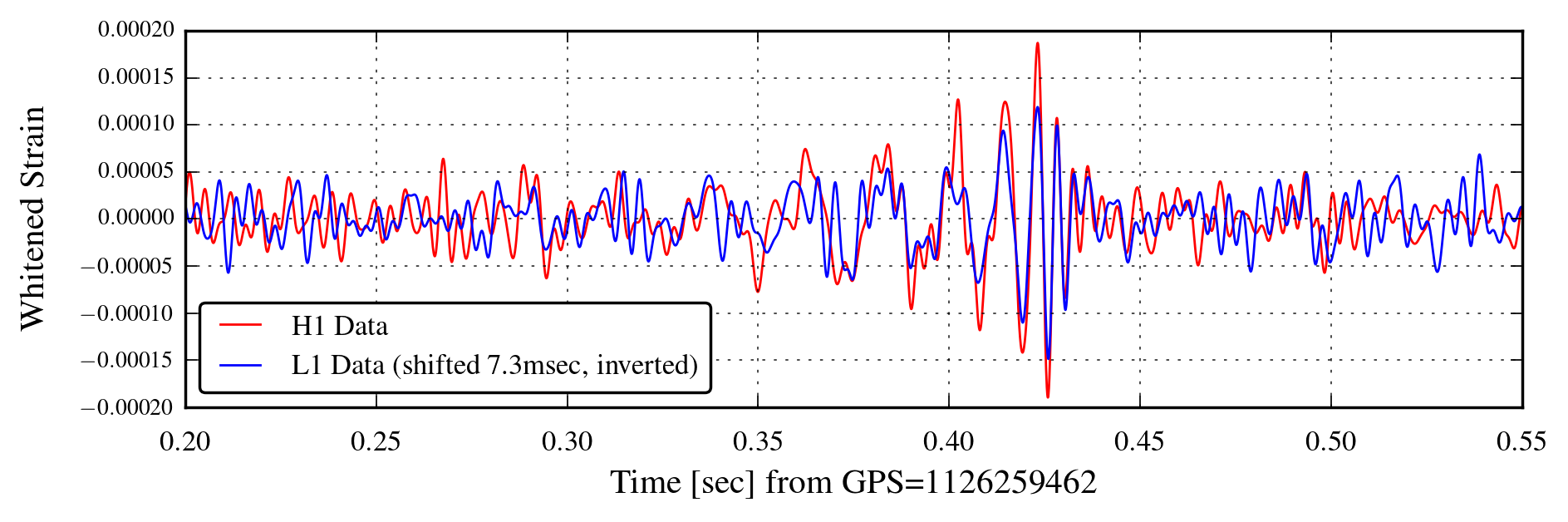

When an event is detected, the first question is how likely it is to be due to background noise in the detectors. Background estimation in gravitational wave searches differs from that in particle physics experiments. Gravitational wave detectors observe a high rate of loud, short-duration noise transients, much higher than would be expected from Gaussian noise alone. These are known in the industry as glitches, and are caused by a combination of environmental noise and instrument artefacts. We reject glitches by requiring tight coincidence between the detectors, within the light-travel time between the sites, as well as agreement with particular models for gravitational wave signals (for example, the famous chirp from the merger of compact objects).
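To make the coincidence requirement concrete, here is a minimal sketch, assuming each detector's search simply produces a list of trigger times in seconds. The function name `coincident_pairs` and the 10 ms default window (roughly the light-travel time between Hanford and Livingston) are illustrative assumptions, not the actual LIGO pipeline code, which also requires agreement with signal models.

```python
# Hypothetical sketch: two-detector coincidence on lists of trigger times.
# The 10 ms default window is an assumption approximating the light-travel
# time between the LIGO sites; real searches add a margin for timing errors.

LIGHT_TRAVEL_TIME = 0.010  # seconds (approximate, assumed)


def coincident_pairs(h1_times, l1_times, window=LIGHT_TRAVEL_TIME):
    """Return (H1, L1) trigger-time pairs that lie within `window` seconds."""
    l1_sorted = sorted(l1_times)
    pairs = []
    for t_h in sorted(h1_times):
        for t_l in l1_sorted:
            if t_l < t_h - window:
                continue  # L1 trigger too early for this H1 trigger
            if t_l > t_h + window:
                break  # list is sorted, so no later L1 trigger can match
            pairs.append((t_h, t_l))
    return pairs


if __name__ == "__main__":
    h1 = [10.000, 42.500, 97.300]
    l1 = [10.007, 55.100, 97.330]
    print(coincident_pairs(h1, l1))  # [(10.0, 10.007)]
```

A glitch that appears in only one detector, or in both but 30 ms apart, never survives this step.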

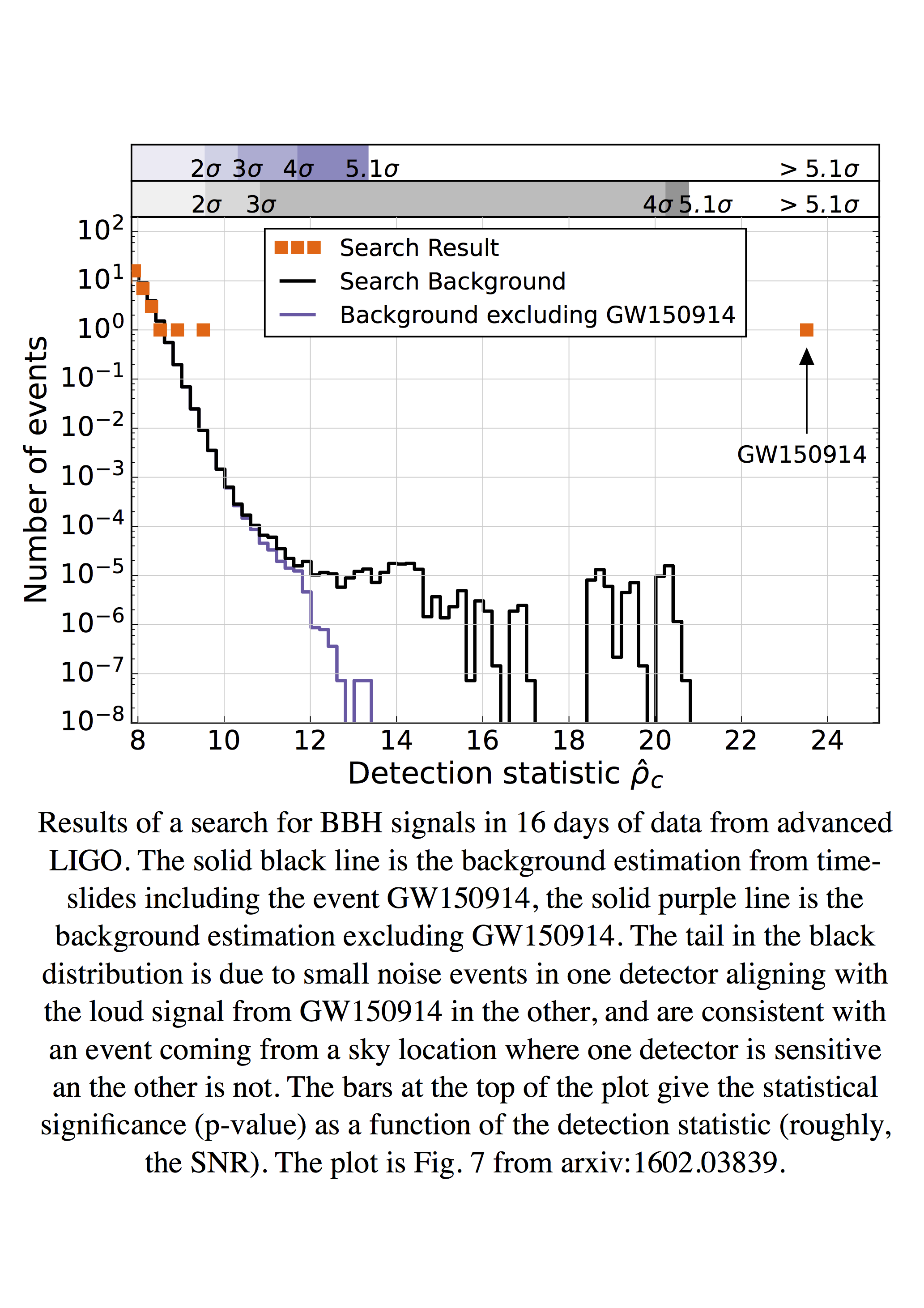

The background due to glitches cannot be modeled analytically, so we estimate it empirically using a time-slide technique. For each analysis, the data are re-analyzed after shifting the Livingston time series relative to the Hanford time series by, for example, five seconds, far longer than the time it takes a gravitational wave to travel between the sites. The analysis is repeated for O(10^6) such time shifts to calculate how frequently noise fluctuations in the two detectors might align to generate an event purely by chance.
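The time-slide idea can be sketched in a few lines. This is a simplified illustration under stated assumptions: each detector yields hypothetical `(time, snr)` triggers, the combined SNR is taken as the quadrature sum, and shifted triggers wrap around the analysis period so every slide uses the same amount of data. The function names are invented for this example.

```python
import random


def count_coincidences(h1, l1, window, snr_threshold):
    """Count H1/L1 trigger pairs within `window` seconds whose combined SNR
    (quadrature sum, an assumed ranking statistic) reaches `snr_threshold`."""
    count = 0
    for t_h, snr_h in h1:
        for t_l, snr_l in l1:
            combined = (snr_h ** 2 + snr_l ** 2) ** 0.5
            if abs(t_h - t_l) <= window and combined >= snr_threshold:
                count += 1
    return count


def time_slide_background(h1, l1, duration, slide_step, n_slides,
                          window, snr_threshold):
    """Estimate the rate (events per second) at which noise alone produces
    coincidences at least as loud as `snr_threshold`.

    L1 triggers are shifted by k * slide_step for k = 1..n_slides, wrapping
    around `duration`. Because slide_step is much longer than the
    light-travel time, a real signal cannot appear as a slid coincidence.
    """
    total = 0
    for k in range(1, n_slides + 1):
        shift = k * slide_step
        slid_l1 = [((t + shift) % duration, snr) for t, snr in l1]
        total += count_coincidences(h1, slid_l1, window, snr_threshold)
    background_time = n_slides * duration  # total effective background time
    return total / background_time


if __name__ == "__main__":
    rng = random.Random(0)
    duration = 10_000.0  # seconds of (synthetic) coincident data
    h1 = [(rng.uniform(0, duration), rng.uniform(5, 9)) for _ in range(200)]
    l1 = [(rng.uniform(0, duration), rng.uniform(5, 9)) for _ in range(200)]
    rate = time_slide_background(h1, l1, duration, slide_step=5.0,
                                 n_slides=50, window=0.010, snr_threshold=10.0)
    print(f"noise coincidence rate above SNR 10: {rate:.2e} per second")
```

The key design point is that the effective background time grows with the number of slides, which is why many slides are needed to probe very rare noise coincidences.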

The event (denoted GW150914, following the nomenclature for gamma-ray bursts) was found with the highest significance in two independent searches for compact binary coalescences, or CBCs. These searches use matched filtering, in which we calculate the overlap between the detector data and a set of template waveforms. The waveforms in the template bank are described by four parameters: the masses and spins of the two compact objects. Each of the 250,000 templates amounts to a semi-independent test of the data against a particular model, but this trials factor is naturally folded into our background estimation, because we have repeated the search, with the entire template bank, on several million independent realizations of the data. Thus, when we observe a signal with a given signal-to-noise ratio (SNR) and use the time-slide analysis to calculate how many events with the same SNR we are likely to observe from noise alone, we are including events that arise from any template, not just the one that matched the event. An event's significance is calculated using only its SNR, and the background rate for that SNR is marginalized over all of the templates.
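A toy version of matched filtering over a template bank is sketched below. It assumes already-whitened data (unit-variance white noise) and a hypothetical bank of linear "chirps" in place of real general-relativity waveforms; with unit-norm templates, the correlation at each lag is directly the SNR. Note that `best_match` keeps the loudest result over *all* templates, which is the same maximization a background run would perform, so the trials factor is accounted for automatically.

```python
import numpy as np


def chirp_template(n, f0, f1, fs):
    """Hypothetical toy chirp: frequency sweeps linearly from f0 to f1 Hz and
    the amplitude rises with time. Normalized to unit norm."""
    t = np.arange(n) / fs
    duration = n / fs
    phase = 2 * np.pi * (f0 * t + 0.5 * (f1 - f0) / duration * t ** 2)
    h = (t / duration) * np.sin(phase)
    return h / np.linalg.norm(h)


def matched_filter_snr(data, template):
    """SNR at every lag, assuming unit-variance white noise and a unit-norm
    template (so no further normalization is needed)."""
    return np.correlate(data, template, mode="valid")


def best_match(data, bank):
    """Search every template; return (template index, peak |SNR|, peak lag)."""
    best = (None, 0.0, None)
    for i, tmpl in enumerate(bank):
        snr = np.abs(matched_filter_snr(data, tmpl))
        k = int(np.argmax(snr))
        if snr[k] > best[1]:
            best = (i, float(snr[k]), k)
    return best


def demo(seed=1):
    """Inject a scaled copy of one template into white noise and search."""
    fs = 1024  # sample rate in Hz (assumed)
    rng = np.random.default_rng(seed)
    bank = [chirp_template(512, 30.0, f1, fs) for f1 in (80.0, 160.0, 240.0, 320.0)]
    data = rng.standard_normal(8192)
    data[3000:3512] += 15.0 * bank[2]  # optimal SNR of 15 for template 2
    return best_match(data, bank)


if __name__ == "__main__":
    print(demo())  # expect template 2, SNR near 15, lag near 3000
```

Real searches work in the frequency domain with a measured noise spectrum and add signal-consistency tests; this sketch only shows the core overlap calculation.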

Our method for background estimation calculates the rate that random noise in the detectors will coincide, but it is blind to correlated noise. To reject correlated noise, we rely on the wide geographic separation of the detectors, and the sensitive array of environmental monitors at each site. A careful discussion of possible correlated noise is given in a companion paper on detector characterization. In short, we are confident that our environmental sensors will detect any plausible source of correlated noise (seismic noise, electromagnetic noise in the ionosphere, etc.) at a threshold well below the level that would generate a signal in the output of the detectors.

It is worth noting that the significance of GW150914 is not 5.1 sigma. It is much greater than 5.1 sigma! In the background estimation for our 16-day analysis period, we found no events from random coincidence as loud as GW150914. The value of 5.1 sigma is therefore a lower bound, set by the amount of background we could estimate with the available data.
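Why the quoted significance is only a bound can be seen with a simplified Poisson calculation. Assuming no background event is louder than the candidate, the false-alarm rate can only be bounded by roughly one over the total background time; converting that into a one-sided Gaussian "sigma" gives a number that grows only as more background is accumulated. This is a sketch of the idea, not the exact procedure used in the LIGO papers, and the function name and inputs are hypothetical.

```python
import math
from statistics import NormalDist


def significance_lower_bound(t_obs, t_background):
    """Bound the significance of a candidate louder than all background.

    t_obs and t_background must be in the same units. Returns
    (false-alarm-rate bound, p-value, one-sided Gaussian sigma).
    """
    far_bound = 1.0 / t_background  # no louder background event was seen
    # Poisson probability of at least one noise event this loud in t_obs;
    # expm1 keeps precision when the probability is tiny.
    p_value = -math.expm1(-t_obs * far_bound)
    sigma = -NormalDist().inv_cdf(p_value)  # one-sided Gaussian equivalent
    return far_bound, p_value, sigma


if __name__ == "__main__":
    t_obs = 16.0  # days of coincident data
    for years in (1e3, 1e4, 1e5):  # hypothetical amounts of background
        _, p, sigma = significance_lower_bound(t_obs, years * 365.25)
        print(f"{years:>8.0f} yr of background: p < {p:.1e}, > {sigma:.2f} sigma")
```

More time slides raise the bound, but no finite amount of background can turn "louder than everything we saw" into an exact significance.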

It is also worth noting that this is only the second time that the detection of a new phenomenon in physics has been claimed on the basis of a single event. As with the detection of the Omega-minus, our discovery is convincing because we have a clear, tangible picture of the trace the event left in our detectors.

Now let us discuss the event. We have certainly been lucky, on two counts. First, we are lucky to have such a loud event for our first detection, one that rises unambiguously above the background and allows for exquisite parameter estimation. The loudness of the event cannot be overemphasized: it knocked us over when we first saw it, and it may take one or two years before we detect another signal this loud. Second, we are lucky that the rate of binary black hole (BBH) mergers is apparently quite high, at the upper end of what astronomers had estimated. Prior to this event, one could reasonably have predicted that Advanced LIGO would never see a BBH signal, since no plausible BBH systems had been observed in nature.
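One way to see why very loud events are rare: if sources are spread uniformly in (Euclidean) volume, the number of events with SNR above a value scales as that value to the power of minus three, because SNR falls off as one over distance. The sketch below assumes a detection threshold of 8, chosen only for illustration; cosmological effects are ignored.

```python
def fraction_louder(snr, snr_threshold=8.0):
    """Fraction of detected events with SNR above `snr`, assuming sources
    uniform in Euclidean volume, so that N(>rho) is proportional to rho**-3.
    The default threshold of 8 is an illustrative assumption."""
    if snr <= snr_threshold:
        return 1.0  # every detected event is at least this loud
    return (snr_threshold / snr) ** 3


if __name__ == "__main__":
    for rho in (8.0, 12.0, 16.0, 24.0):
        print(f"SNR > {rho:4.1f}: {fraction_louder(rho):.3f} of detections")
```

With these assumptions, only about one detection in 27 would be louder than three times the threshold, so a first event this far above threshold is a matter of luck.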

The masses of the black holes are quite large, significantly larger than those of any of the stellar-mass black hole candidates that have been observed (in, for example, X-ray binaries). Black holes with masses more than thirty times that of our Sun are not easily made in nature. They form from massive, low-metallicity stars that have weak stellar winds and therefore retain most of their mass during their short lives, before core collapse and supernova. Furthermore, how a system with two black holes this size can form is an open question: did it start as a binary system of two high-mass, low-metallicity stars, or was it formed via 3-body interactions between black holes in a globular cluster? These questions should be answered once we have detected an ensemble of events and can study their distributions in mass and redshift.

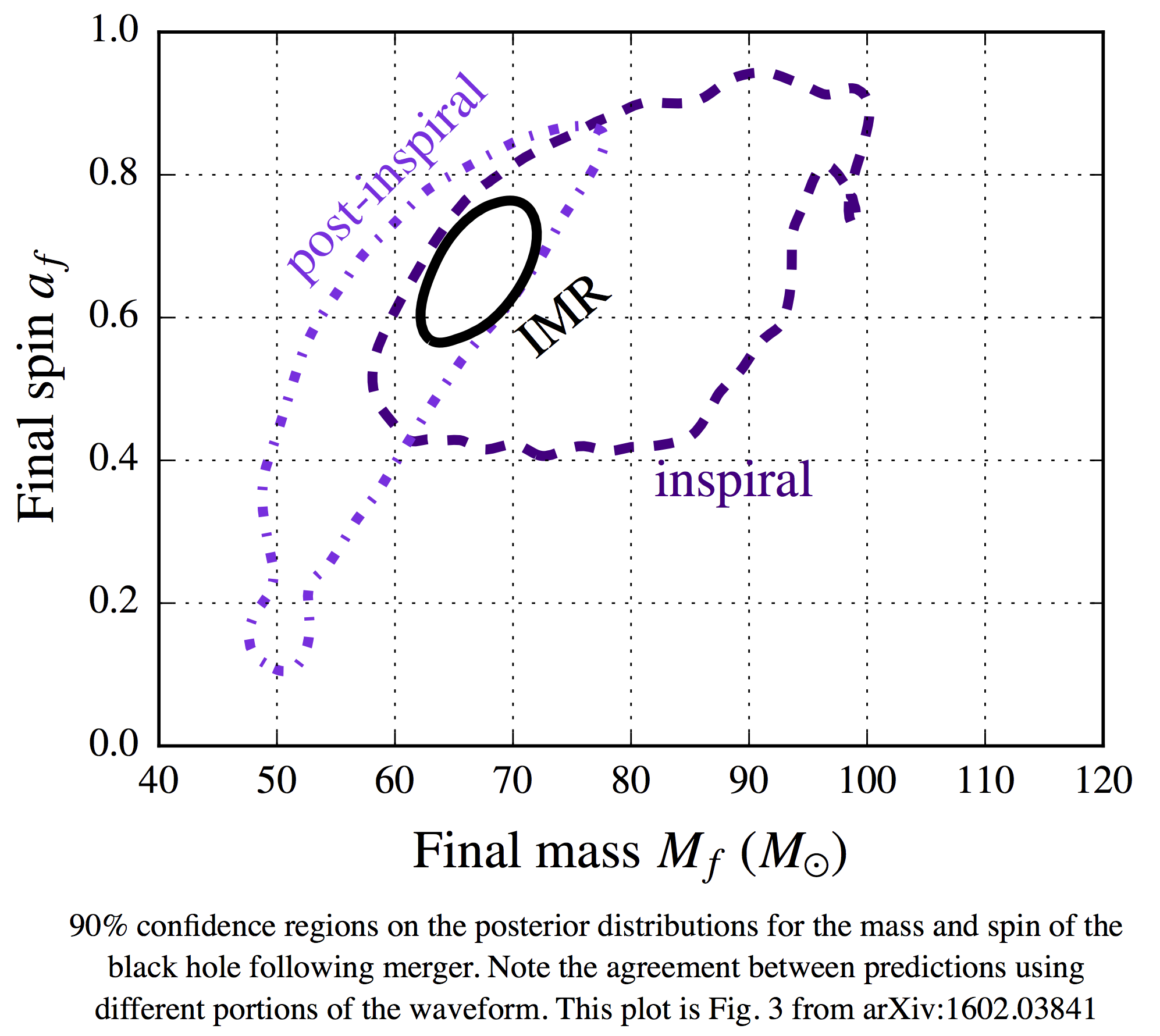

With one event, gravitational waves have become the most precise test of general relativity. The match between the waveform and the prediction from GR is essentially perfect; details are given in a companion paper. Among other tests, the parameters of the black hole that remains following the merger can be estimated using different parts of the waveform: the part from the beginning of the signal to the peak of the amplitude, which describes the inspiral; and the part following the peak, which describes the merger and ring-down to an unperturbed Kerr geometry. These independent estimates agree well, demonstrating that the overall waveform is consistent with general relativity.
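The logic of comparing the two halves of the waveform can be illustrated with a deliberately simplified check. This sketch assumes each analysis returns a Gaussian estimate (mean and standard deviation) of the final black hole mass; the real test compares full posterior distributions of the final mass and spin. The numbers in the usage example are hypothetical, not the GW150914 results.

```python
import math


def consistency(m_inspiral, s_inspiral, m_post, s_post):
    """Compare final-mass estimates from the inspiral and post-inspiral parts.

    Gaussian approximation (an assumption). Returns the fractional difference
    relative to the mean estimate, and the difference in standard deviations.
    """
    mean = 0.5 * (m_inspiral + m_post)
    frac_diff = (m_inspiral - m_post) / mean
    z = (m_inspiral - m_post) / math.hypot(s_inspiral, s_post)
    return frac_diff, z


if __name__ == "__main__":
    # Hypothetical estimates in solar masses: (mean, standard deviation)
    frac, z = consistency(60.0, 4.0, 63.0, 3.0)
    print(f"fractional difference {frac:+.3f}, {z:+.2f} sigma")
```

A deviation from general relativity would show up as a fractional difference inconsistent with zero, that is, a large |z|.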

In mid-January the LIGO detectors switched from an observing mode back to a period of commissioning. Gravitational wave detectors, like particle accelerators, do not achieve their design performance immediately. At the moment the aLIGO instruments are about 3 to 4 times less sensitive than we can achieve with the current hardware. The instruments should reach their design sensitivity after another two to three years of commissioning, with regular breaks for increasingly sensitive observing runs. And later this year, the Virgo instrument outside of Pisa will finish its own advanced upgrade, followed by the KAGRA detector in Japan in 2018. At design sensitivity, a signal like GW150914 will have an SNR of more than 50, and we will detect dozens of weaker events per year. For a field that has searched for decades and come up empty, it is only just sinking in that we will soon have an embarrassment of riches.
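The jump from one event to dozens per year follows from simple scaling. Assuming the improvement factor applies to the detectors' range (the distance at which a given source reaches a fixed SNR), a fixed source's SNR grows linearly with that factor while the surveyed volume, and hence the event rate, grows as its cube. The sketch below ignores cosmological corrections, and its inputs are hypothetical.

```python
def scale_with_sensitivity(snr_now, rate_now, improvement):
    """Scale a source's SNR and the detection rate for a range improvement.

    Assumes Euclidean space and sources uniform in volume: SNR scales
    linearly with range, the surveyed volume (and rate) with range cubed.
    """
    return snr_now * improvement, rate_now * improvement ** 3


if __name__ == "__main__":
    # Hypothetical: a source at SNR 20 and one detection per year today
    for factor in (2.0, 3.0):
        snr, rate = scale_with_sensitivity(20.0, 1.0, factor)
        print(f"x{factor:.0f} range: SNR {snr:.0f}, about {rate:.0f} events/yr")
```

Because of the cubic volume factor, even a modest gain in range multiplies the detection rate many times over.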
