"The entropy of a physical system is the minimum number of bits you need to fully describe the detailed state of the system."

So forget about statements like "entropy is disorder", "entropy measures randomness", and all the vague talk about "teenage bedrooms getting messy" that inundates the internet. These qualitative statements at best provide you with metaphors, and at worst create profound misunderstandings.

Now the problem with the above information-theoretical statement is that, by itself, it does not make it obvious why bit counts provide a meaningful definition of entropy. In particular, many readers of this blog still seem unclear about how this information-theoretical definition of entropy relates to the traditional thermodynamic definition. In this blog post I would like to give you at least some hints on "how everything hangs together".

Reaching the current level of insight into what entropy is didn't happen overnight. It took generations of scientists and a full century of multi-disciplinary science to reach this level of understanding. So don't despair if not all the puzzle pieces fall into place immediately. I guarantee you can reach a profound understanding of entropy by investing an amount of time that is only a tiny fraction of a century...

Thermodynamic Entropy

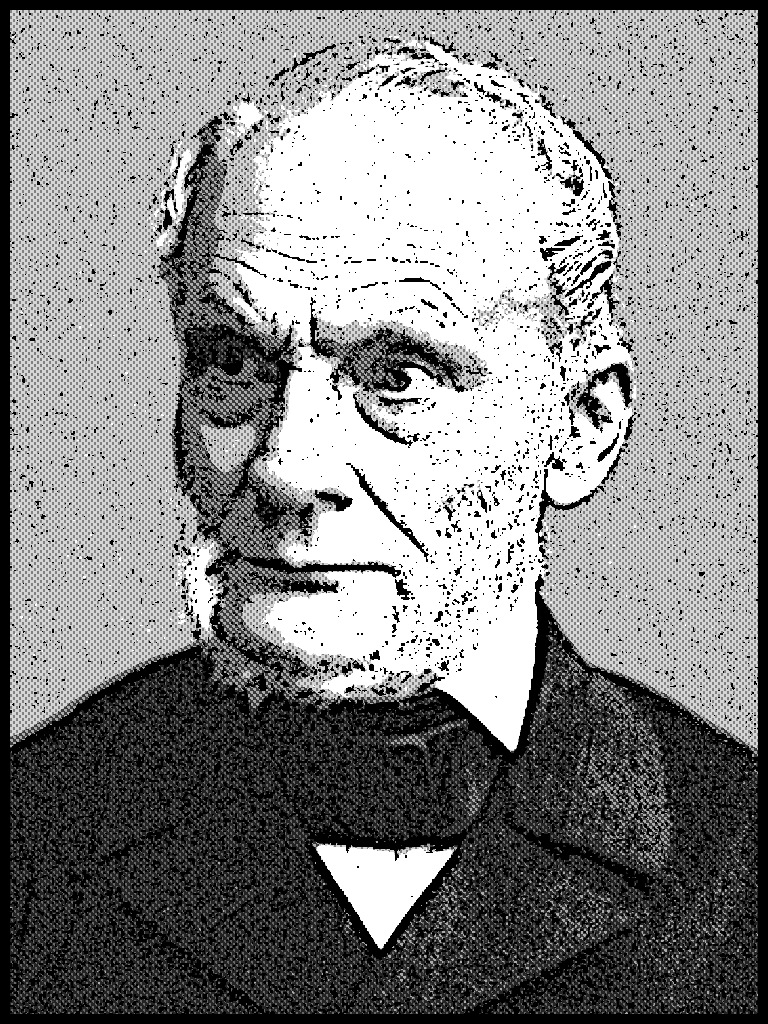

In the middle of the 19th century, in the wake of the groundbreaking work of French military engineer Sadi Carnot on the maximum efficiency of heat engines, thermodynamics became a subject of serious study. The honor of introducing the concept of "entropy" goes to German physicist Rudolf Clausius. He coined the term "entropy" and provided a clear quantitative definition. According to Clausius, the entropy change ΔS of a thermodynamic system that (reversibly) absorbs a quantity of heat ΔQ at absolute temperature T is simply the ratio between the two:

ΔS = ΔQ/T

Using this definition, Clausius was able to recast Carnot's assertion that steam engines cannot exceed a specific theoretical maximum efficiency as a much grander statement:

"The entropy of the universe tends to a maximum"

The second law of thermodynamics was born.
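How Clausius's ratio captures Carnot's efficiency limit can be made concrete with a short derivation. This is a sketch assuming an idealized engine that runs in cycles between a hot reservoir at temperature T_h and a cold reservoir at T_c; the symbols Q_h (heat drawn from the hot reservoir) and Q_c (heat dumped into the cold one) are introduced here for illustration. Because the engine returns to its initial state after each cycle, only the reservoirs change entropy, and requiring the total not to decrease bounds the efficiency η:

```latex
\begin{aligned}
\Delta S_{\text{total}} &= -\frac{Q_h}{T_h} + \frac{Q_c}{T_c} \;\ge\; 0
  \quad\Longrightarrow\quad \frac{Q_c}{Q_h} \;\ge\; \frac{T_c}{T_h} \\
\eta &= \frac{Q_h - Q_c}{Q_h} = 1 - \frac{Q_c}{Q_h} \;\le\; 1 - \frac{T_c}{T_h}
\end{aligned}
```

Equality holds only for a reversible engine, which is Carnot's theoretical optimum.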

However, defining entropy as a ratio between absorbed heat and absolute temperature leaves unanswered the nagging question "what really is the meaning of entropy?" The answer to this question had to wait until the atomistic view began to gain acceptance in mainstream physics. This happened at the end of the nineteenth century.

Statistical Entropy

It was early atomist Ludwig Boltzmann who provided a fundamental theoretical basis for the concept of entropy. In modern physics parlance, his key insight was that absolute temperature is nothing more than energy per molecular degree of freedom (up to a constant factor). This suggests that Clausius' ratio between absorbed energy and absolute temperature is nothing more than a count of molecular degrees of freedom:

S = number of microscopic degrees of freedom

What's the gain here? Haven't we merely traded the enigmatic quantity "entropy" for the equally nebulous "number of degrees of freedom"? The answer is "no". Boltzmann was able to show that the number of degrees of freedom of a physical system can be linked to the number of micro-states W of that system. The astonishingly simple expression that results for the entropy reads:

S = log W

Why does this work? Why is the number of degrees of freedom related to the logarithm of the total number of states? Consider a system with binary degrees of freedom, say a system of N coins, each showing heads or tails. Each coin contributes one degree of freedom that can take two distinct values, so in total we have N binary degrees of freedom. Simple counting tells us that each coin (each degree of freedom) contributes a factor of two to the total number of distinct states the system can be in. In other words, W = 2^N. Taking the base-2 logarithm (*) of both sides of this equation shows that the logarithm of the total number of states equals the number of degrees of freedom: log2 W = N.
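The coin-counting argument can be checked by brute force for small N. This minimal sketch (the helper name count_coin_states is hypothetical, chosen for illustration) enumerates every head/tail configuration explicitly rather than using the formula, then confirms that W = 2^N and that log2 W gives back N:

```python
import itertools
import math

def count_coin_states(n_coins: int) -> int:
    """Count distinct head/tail configurations of n_coins by explicit enumeration."""
    return sum(1 for _ in itertools.product("HT", repeat=n_coins))

for n in range(1, 11):
    w = count_coin_states(n)
    # each coin doubles the count, so W = 2**N and log2(W) recovers N
    assert w == 2 ** n
    print(n, w, math.log2(w))
```

Enumeration grows as 2^N, so this is only practical for a handful of coins; the point is that the counting, not the formula, produces the result.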

This argument can be made more general. The key feature is that the total number of states W follows from multiplying together the numbers of states of the individual degrees of freedom. Taking the logarithm of W turns this product into a sum over degrees of freedom. The result is an additive entropy concept: adding up the entropies of two independent subsystems gives the entropy of the total system.
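Additivity also holds when the degrees of freedom are not binary. The sketch below assumes a hypothetical pair of independent subsystems, three coins (2 states each) and two dice (6 states each), enumerates the combined states, and checks that multiplying state counts corresponds to adding log2 entropies:

```python
import itertools
import math

def entropy_bits(n_states: int) -> float:
    """Entropy in bits of a system with n_states equally likely micro-states: log2(W)."""
    return math.log2(n_states)

# Hypothetical subsystems: A = 3 coins, B = 2 dice
coins = list(itertools.product("HT", repeat=3))
dice = list(itertools.product(range(1, 7), repeat=2))
combined = list(itertools.product(coins, dice))  # every pairing of an A-state with a B-state

w_a, w_b, w_total = len(coins), len(dice), len(combined)
print(w_a, w_b, w_total)  # 8 36 288
# multiplying state counts corresponds to adding entropies
print(entropy_bits(w_a) + entropy_bits(w_b), entropy_bits(w_total))
```

The comparison relies on the subsystems being independent: every state of A can pair with every state of B, which is what makes the combined count a plain product.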

Information Entropy

Now fast forward to the middle of the 20th century. In 1948, Claude Shannon, an electrical engineer at Bell Telephone Laboratories, managed to quantify the concept of “information” mathematically. The key result he derived is that describing the precise state of a system that can be in states 1, 2, ..., n with probabilities p1, p2, ..., pn requires a well-defined minimum number of bits. In fact, the best one can do is to assign log2(1/pi) bits to the occurrence of state i. This means that the minimum number of bits needed, on average, to specify the state of the system, whatever that state turns out to be, is:

Minimum number of bits = p1 log2(1/p1) + p2 log2(1/p2) + ... + pn log2(1/pn)
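One way to see the "log2(1/pi) bits per state" rule in action is Huffman coding, a standard optimal prefix code used here as an illustration (it is not part of the original argument). This sketch assumes a hypothetical four-state system with probabilities 1/2, 1/4, 1/8, 1/8. For such power-of-two probabilities the Huffman code lengths equal log2(1/pi) exactly, so the average code length matches the formula above; for other distributions the Huffman average lies within one bit of it.

```python
import heapq
import itertools
import math

def shannon_bits(probs):
    """Minimum average bits: sum of p * log2(1/p) over states with p > 0."""
    return sum(p * math.log2(1 / p) for p in probs if p > 0)

def huffman_code_lengths(probs):
    """Code length in bits that a Huffman code assigns to each state."""
    counter = itertools.count()  # tie-breaker so heapq never compares the lists
    heap = [(p, next(counter), [i]) for i, p in enumerate(probs)]
    heapq.heapify(heap)
    lengths = [0] * len(probs)
    while len(heap) > 1:
        p1, _, states1 = heapq.heappop(heap)
        p2, _, states2 = heapq.heappop(heap)
        # merging two subtrees adds one bit to the code of every state below them
        for s in states1 + states2:
            lengths[s] += 1
        heapq.heappush(heap, (p1 + p2, next(counter), states1 + states2))
    return lengths

probs = [1 / 2, 1 / 4, 1 / 8, 1 / 8]
lengths = huffman_code_lengths(probs)
print(lengths)  # [1, 2, 3, 3], i.e. log2(1/p_i) for each state
average = sum(p * n for p, n in zip(probs, lengths))
print(average, shannon_bits(probs))  # both 1.75
```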

When applied to a system that can be in W states, each with equal (**) probability p = 1/W, it follows that

Minimum number of bits = log2 W
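The equal-probability case can be checked numerically. This sketch assumes a hypothetical system with w = 16 states: the uniform distribution gives exactly log2 16 = 4 bits, while a skewed distribution over the same 16 states (also hypothetical) needs fewer bits on average, since the uniform case is the one that maximizes the formula.

```python
import math

def shannon_bits(probs):
    """Minimum average number of bits: sum of p * log2(1/p) over states with p > 0."""
    return sum(p * math.log2(1 / p) for p in probs if p > 0)

w = 16
uniform = [1 / w] * w
print(shannon_bits(uniform), math.log2(w))  # both 4.0

# Hypothetical skewed distribution: one state takes half the probability
skewed = [0.5] + [0.5 / (w - 1)] * (w - 1)
print(shannon_bits(skewed))  # fewer than 4 bits
```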

And here we are. A full century of thermodynamic and statistical research has led to the simple conclusion that the Boltzmann expression S = log W is nothing more than a way of expressing

S = number of bits required to specify the system

There you have it. Entropy is the bit count of your system: the number of bits required to single out the actual microscopic configuration among all allowed micro-states. In these terms, the second law of thermodynamics tells us that closed systems tend toward a growing bit count. How does this work?

The Second Law

This is where the information-theoretic description of entropy shows its real strength. In fact, the second law of thermodynamics is rendered almost trivial by considering it from an information-theoretical perspective.

Entropy growth indicates nothing more than that physical systems were prepared in special initial states. Let's again take the simple example of N coins. Suppose these are prepared in a state in which all coins show heads, and that the dynamics consists of random coin turns at discrete time steps. The initial configuration, with all coins 'frozen' into heads, can be specified with very few bits. After many random coin turns, however, an equilibrium is reached in which each coin shows a random face, and describing the system requires specifying which of the 2^N equally likely realizations is the actual one. This requires log2(2^N) = N bits. Entropy has grown from close to zero to N bits. That's all there is to it: the famous second law of thermodynamics, the law that according to astronomer Arthur Eddington holds a supreme position among all laws of physics.
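The coin example can be turned into a small calculation. This is a sketch under an assumed dynamics that the text leaves open: at every time step each coin is independently turned over with a hypothetical probability flip_prob. Starting from all heads, the probability p that a given coin shows heads relaxes toward 1/2, and the bit count N · H(p), with H the single-coin version of Shannon's formula, grows from 0 toward N bits.

```python
import math

def binary_entropy(p: float) -> float:
    """Shannon entropy in bits of one coin that shows heads with probability p."""
    if p in (0.0, 1.0):
        return 0.0
    return -p * math.log2(p) - (1 - p) * math.log2(1 - p)

def entropy_history(n_coins: int, flip_prob: float, steps: int) -> list[float]:
    """Bits needed to describe N independent coins after each step, starting from all heads.

    Assumed (hypothetical) dynamics: each step, every coin is turned over
    independently with probability flip_prob.
    """
    p_heads = 1.0  # special initial state: every coin shows heads
    history = [n_coins * binary_entropy(p_heads)]
    for _ in range(steps):
        # heads next step if it was heads and not turned, or tails and turned
        p_heads = p_heads * (1 - flip_prob) + (1 - p_heads) * flip_prob
        history.append(n_coins * binary_entropy(p_heads))
    return history

history = entropy_history(n_coins=100, flip_prob=0.1, steps=50)
print(f"start: {history[0]:.2f} bits, after 50 steps: {history[-1]:.2f} bits")
```

Tracking probabilities instead of simulating individual coin turns keeps the sketch deterministic; the rise from 0 to about N bits is the same picture the text describes.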

Examples Puhleeze...

Still puzzled? Need some specific examples? In the next blog post I will make the statistical and information-theoretical basis for entropy more tangible by experimenting with toy systems. Simple systems that can easily be visualized and that allow for straightforward state counting. Although seemingly trivial, these toy models will lead us straight into concepts like phase transitions and holographic degrees of freedom. Stay tuned.

Notes

(*) The precise base of the logarithm doesn't really matter. It all boils down to a choice of units. Taking the base-2 logarithm is the natural thing to do for binary degrees of freedom and results in entropy being measured in bits. A base-10 logarithm would result in an entropy measured in decimal digits.
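The "choice of units" point can be checked directly. This sketch assumes a hypothetical system with 20 binary degrees of freedom (W = 2^20) and shows that bits and decimal digits differ only by the constant factor log2 10, the same for every W:

```python
import math

W = 2 ** 20  # hypothetical system: 20 binary degrees of freedom

bits = math.log2(W)     # base 2  -> entropy in bits
digits = math.log10(W)  # base 10 -> entropy in decimal digits

print(bits, digits)
# changing the base only rescales by a constant factor
print(bits / digits, math.log2(10))  # both about 3.3219
```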

(**) Why would each micro-state be equally likely? A lot can be, and has been, said about this, and a whole body of research is devoted to this issue. The results obtained indicate that for large systems the equal-likelihood assumption can be relaxed significantly without affecting the end results. For the purpose of the present discussion the issue is hardly relevant.
