They thought that because they don't understand polls or statistical models.

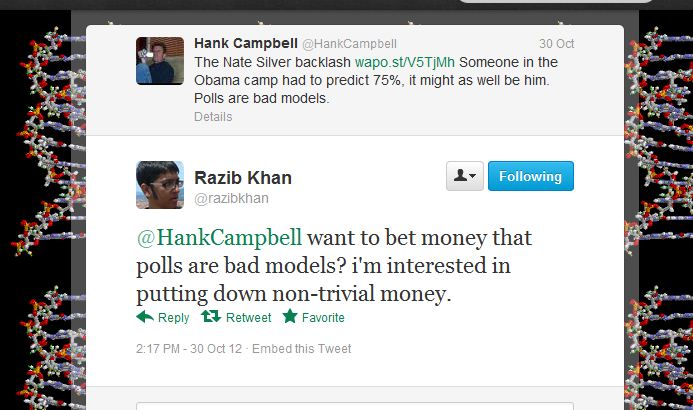

After I wrote on Twitter that 'polls are bad models', Razib Khan, of the GNXP column at Discover, wrote back: "want to bet money that polls are bad models?"

Well, yeah, I kinda do. I'll bet my house right now. The problem is, no one will try to take my money when accuracy really matters. The minute you try to get a statistician to make a prediction, they waffle and want a range. Silver was saying 'Obama will win', but he was really only saying he believes there is a 75% chance Obama will win. How can he be so confident but not really confident? If his model is good, why did he not declare Obama the winner, put 100% on it and go on vacation?

Everyone knows that this far into an election cycle 47% of voters have decided. It was politically incorrect for candidate Mitt Romney to say that 47% of people would not change their minds no matter what he did, but it was not statistically incorrect. Elections are often won or lost by people staying home, not by people flip-flopping from one party to another.

But Razib is a statistics guy. He likes to play with data. If you say Democrats are pro-science, he will show you how the numbers only show that when they are framed a certain way. Why would he make such a crazy bet, then? Was he just being contrarian, was he a Silver fanboy or did he know something I don't?

Shortly after Silver's claim, I explained to someone what it really meant and why he would make a bet with Joe Scarborough: if you look around the poker table and you can't spot the sucker, you are the sucker. Am I the sucker?

Silver is predicting Obama will get 50.6% of the popular vote and 305 electoral votes. Razib agrees. He is not betting on Obama; he is betting against my opinion of Silver's model. That's what betting is. Anyone who bets on their own team is goofy. You bet to beat the spread, and here the spread is how much disdain I have for a numerical model based on polls.

So I had to weight that properly. After all, weighting is everything. And he had to as well; that is what makes it fun. Silver nailed 49 out of 50 state results in 2008 and was within 0.9% of the popular vote and 21 electoral votes. He did it by creating probabilities from his own weighting of the accuracy of polls. Tricky stuff.
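Silver does not publish his weights, so the following is only a minimal sketch of what a weighted poll average can look like, assuming a hypothetical weight of sample size times a made-up pollster accuracy rating. The `Poll` class, the ratings and every poll number are invented for illustration; none of it is Silver's model.

```python
from dataclasses import dataclass


@dataclass
class Poll:
    pollster: str        # hypothetical pollster label
    obama_share: float   # Obama's share of the vote in this poll, in percent
    sample_size: int     # number of respondents
    rating: float        # made-up accuracy rating in (0, 1]; not Silver's ratings


def weighted_average(polls: list[Poll]) -> float:
    """Average poll results, weighting each poll by sample size times its rating."""
    weights = [p.sample_size * p.rating for p in polls]
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(w * p.obama_share for w, p in zip(weights, polls)) / total


# Invented polls, for illustration only.
polls = [
    Poll("Pollster A", 51.0, 1000, 0.9),
    Poll("Pollster B", 49.0, 800, 0.5),
    Poll("Pollster C", 50.5, 1200, 0.8),
]
print(f"Weighted average: {weighted_average(polls):.2f}%")  # 50.43%
print(f"Simple average:   {sum(p.obama_share for p in polls) / len(polls):.2f}%")
```

The low-rated pollster barely moves the weighted result, which is the point: the choice of weights, not just the polls, does much of the work.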

Razib's contention had been that polls are accurate, not that Silver is, so I thought about that and suggested we use the Rasmussen Poll rather than the Poll of the United Astrology Conference, showing Obama with a 100% likelihood of winning, which I have been saving since May for just such an occasion. But he wasn't taking that bait. He wanted to stick with Silver because Silver averages lots of polls, and he must believe that improves the methodology.

Yet that actually made me more confident, not less. The reason is errors. In full-wave analysis of physical models, like electromagnetics, introducing more potential errors means you can get an answer that is 99.999% accurate and still completely wrong.

It's called convergence. Converging on the wrong answer is quite easy if the data is not good. If you run a model with the wrong data, you might have total confidence in the results for your chip package, for example, but then you spend a million dollars taping it out, it fails and you are out of a job. There is a reason the phrase "it's academic" is part of the lexicon, meaning 'it doesn't really matter': you can be wrong in academia. In the world of corporate modeling, as opposed to the statistical woo of the social sciences, results matter.
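To make the convergence point concrete for polls, here is a minimal simulation, assuming every poll shares the same hypothetical systematic bias (for example, a group of voters all pollsters miss) on top of independent random noise. All three parameters are invented. Averaging more polls shrinks the random noise, but the average converges on the true share plus the bias, not on the true share.

```python
import random
import statistics


def average_of_biased_polls(true_share: float, shared_bias: float, noise_sd: float,
                            n_polls: int, rng: random.Random) -> float:
    """Average n_polls simulated polls that all carry the same systematic bias.

    Each poll = true share + shared bias + independent Gaussian sampling noise.
    """
    results = [true_share + shared_bias + rng.gauss(0.0, noise_sd) for _ in range(n_polls)]
    return statistics.fmean(results)


TRUE_SHARE = 50.0  # hypothetical actual vote share, percent
SHARED_BIAS = 1.5  # hypothetical bias common to every poll, percentage points
NOISE_SD = 3.0     # hypothetical per-poll random error, percentage points

rng = random.Random(2012)  # fixed seed so the run is repeatable
for n in (1, 10, 100, 10_000):
    avg = average_of_biased_polls(TRUE_SHARE, SHARED_BIAS, NOISE_SD, n, rng)
    print(f"{n:>6} polls: average {avg:5.2f}%, error vs. truth {avg - TRUE_SHARE:+.2f}")
```

As the number of polls grows, the error settles near +1.5, the shared bias: the average converges, just not on the right answer.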

I submit a quote from the important science work, "Ghostbusters":

In politics, as in academia, if the model is wrong, you get to write articles about why it was wrong. Silver knows about the risks of bad data; he has worked outside academia too. He will caution people that prediction is not prophecy, so he is putting a stake in the ground, but he still has an out: if he is wrong, it is because the state polls were statistically biased. He said that and then raised his confidence to 83.7 percent. That is still not 100%, and there is a reason I keep mentioning it: he won't say 100% because even he doesn't believe it.

"If the Giants lead the Redskins 24-21 in the fourth quarter, it's a close game that either team could win. But it's also not a "toss-up": The Giants are favored. It's the same principle here: Obama is ahead in the polling averages in states like Ohio that would suffice for him to win the Electoral College. Hence, he's the favorite," Silver told POLITICO.

But when he was in sports, Silver kept a cool head. He did not go into sports because he disliked what bookies predicted about his baseball team. He went into polling because he was an Obama supporter and did not like that media reports during the primaries kept saying polls showed Hillary Clinton ahead, when he knew it was only because fewer people had heard of Obama. And today he does not disclose his 'weighting' of polls in his magic sauce; he simply declares that a poll is 'leaning' Democrat or Republican and how it has changed since last time. No problem with that. He is not writing for a journal, and his mystique, even while he claims to be transparent, is part of the brand.

What does all that mean? It means if you want to make money on a Nate Silver prediction, you can't bet on Obama. And I don't care about that anyway. I am not betting for or against a candidate; I am betting against Silver. And I am betting against him because the voting demographic has changed even from four years ago. A whole lot more people use only cell phones, for example, and that skews who responds to polls. Polls could be wildly oversampling Democrats, as some conservative groups claim, but they could just as easily be oversampling Republicans. It doesn't matter, because Silver can't account for oversampling until after the fact. No matter which way the polls are wrong, I win. So methodology is a problem. I mentioned voter turnout before, so let's go back to that.
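Why coverage matters can be sketched with simple post-stratification, assuming made-up support levels and an assumed split between landline and cell-only voters. Reweighting a skewed sample fixes the estimate only if you already know each group's true share of the electorate, which, for turnout, is exactly what nobody knows until after the fact. The group names, shares and the function `raw_and_reweighted` are hypothetical.

```python
def raw_and_reweighted(sample: dict[str, tuple[int, float]],
                       population_share: dict[str, float]) -> tuple[float, float]:
    """Compare a raw poll estimate with one reweighted to assumed group shares.

    sample maps group -> (respondents, fraction of that group backing the candidate).
    population_share maps group -> assumed share of actual voters (must sum to 1).
    """
    if abs(sum(population_share.values()) - 1.0) > 1e-9:
        raise ValueError("population shares must sum to 1")
    n = sum(count for count, _ in sample.values())
    raw = sum(count * support for count, support in sample.values()) / n
    reweighted = sum(population_share[g] * support for g, (_, support) in sample.items())
    return raw, reweighted


# Made-up numbers: cell-only voters are 15% of respondents but assumed 30% of voters.
sample = {"landline": (850, 0.48), "cell_only": (150, 0.60)}
raw, adjusted = raw_and_reweighted(sample, {"landline": 0.70, "cell_only": 0.30})
print(f"raw sample: {raw:.1%}, reweighted: {adjusted:.1%}")
```

Change the assumed 30% and the "corrected" answer moves with it; the correction is only as good as the turnout guess behind it.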

Here is what we know about actual results because, as I said earlier, a huge chunk of an election comes down to who shows up. There are rarities, like Bill Clinton winning with 43% of the vote in 1992 or Ronald Reagan trouncing Walter Mondale in 1984 with 58.8% of the popular vote, but for the most part elections stay within a narrow range. President George W. Bush easily beat John Kerry in 2004 but only got 50.7% of the vote. Obama got 52.9% in 2008, which was clearly a mandate. Yet the raw numbers tell the real story. McCain got only 59,597,520 votes while Obama got 69,297,997, out of 230,872,030 eligible voters, versus 203,483,455 eligible in 2004, a difference of about 27 million voters. Even with all those extra available voters, McCain got 3,000,000 fewer votes in 2008 than Bush did in 2004. What happened? People were excited about Obama and went to the voting booth, while people inclined to vote for McCain stayed home.
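The arithmetic in that paragraph can be checked in a few lines, using only the figures quoted in it:

```python
# Figures exactly as quoted in the paragraph above.
ELIGIBLE_2008 = 230_872_030
ELIGIBLE_2004 = 203_483_455
OBAMA_2008 = 69_297_997
MCCAIN_2008 = 59_597_520

extra_eligible = ELIGIBLE_2008 - ELIGIBLE_2004
print(f"Additional eligible voters, 2004 to 2008: {extra_eligible:,}")  # 27,388,575

# Votes as a share of *eligible* voters, not of ballots cast.
for name, votes in (("Obama", OBAMA_2008), ("McCain", MCCAIN_2008)):
    print(f"{name}: {votes / ELIGIBLE_2008:.1%} of eligible voters")
neither = 1 - (OBAMA_2008 + MCCAIN_2008) / ELIGIBLE_2008
print(f"Neither (stayed home or voted third party): {neither:.1%}")
```

Roughly 44% of eligible voters did not vote for either major candidate, which is the turnout lever the argument rests on.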

Knowing that George W. Bush got 50.7% of the vote makes Silver's 50.6% prediction a very bad basis for a bet, because only 10 presidents have ever gotten under 50% of the vote. At first Razib asked for ±0.5 on that 50.6%, a real sucker bet: both ends of that range are in the sweet spot for the majority of elections. That forced me to add one more possibility to the list (he is being contrarian, he is a Silver fanboy, or he knows something I don't): he just needs $50 and was asking everyone to bet until he found someone crazy enough to give him even money on Silver getting Obama in the range of 50.1% to 51.1%.
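To see what the proposed ±0.5 band actually covers, here is a small check against the winning shares quoted in this post. The helper `in_band` is hypothetical, written only for this illustration.

```python
def in_band(share: float, center: float = 50.6, half_width: float = 0.5) -> bool:
    """True if a popular-vote share (percent) falls within center ± half_width."""
    return center - half_width <= share <= center + half_width


# Winning shares quoted in this post, in percent.
results = {"Clinton 1992": 43.0, "Reagan 1984": 58.8, "Bush 2004": 50.7, "Obama 2008": 52.9}
for label, share in results.items():
    print(f"{label}: {share}% -> {'inside' if in_band(share) else 'outside'} 50.1-51.1")
```

Only the close 2004 result lands inside; the band rewards a close race, not a good model.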

Regardless, I want to prove that statistical averaging of polls is useless, and such a large range does nothing at all to validate the accuracy of numerical models based on polls, which is where he said I was wrong and was so confident he wanted to put up money. So I wasn't interested in that.

But Silver was right about 49 out of 50 states in 2008. If his predictive model is accurate today, it will be strongest where it was also strongest in 2008. And if I am going to be right in my belief that polls are terrible predictors, I have to beat him where he is at his best.

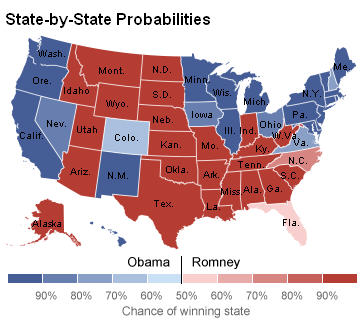

So Razib and I agreed on a bet, and I was so confident I even gave him one extra state. Silver was only wrong on Indiana in 2008, and our bet is whether or not Silver will be accurate on at least 48 state results this election. That's easy if his model is accurate. He really should be 50 out of 50, because most states are not really in play: we know how California is voting, we know how New York and Texas are voting, etc.

307 electoral votes to 231. If these colors fail to match the real election in 3 states, I win. Credit: New York Times, November 4, 2012
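The terms of the bet reduce to counting misses. A minimal sketch, assuming each map is a dictionary from state to called winner; the state names below are placeholders and the results are made up, not the 2012 outcome.

```python
def misses(predicted: dict[str, str], actual: dict[str, str]) -> list[str]:
    """States where the forecast winner differs from the actual winner."""
    if predicted.keys() != actual.keys():
        raise ValueError("predicted and actual must cover the same states")
    return sorted(state for state in predicted if predicted[state] != actual[state])


def forecast_wins_bet(predicted: dict[str, str], actual: dict[str, str],
                      required_correct: int = 48) -> bool:
    """The forecast side wins if at least `required_correct` states are called right."""
    return len(predicted) - len(misses(predicted, actual)) >= required_correct


# Hypothetical 50-state map with placeholder names; "D"/"R" is the called winner.
predicted = {f"state{i:02d}": ("D" if i % 2 else "R") for i in range(1, 51)}
actual = dict(predicted)
for state in ("state01", "state02"):  # flip two made-up results
    actual[state] = "R" if actual[state] == "D" else "D"
print(forecast_wins_bet(predicted, actual))  # True: 48 of 50 correct

actual["state03"] = "R"  # state03 was called "D"; a third miss
print(forecast_wins_bet(predicted, actual))  # False: 3 misses, the skeptic wins
```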

Why do I still feel so confident, knowing Silver feels confident too? He is a wildly popular stats guy for the New York Times and I am a nobody; I should be nervous. But unlike the baseball seasons Silver's performance prediction system was built for, an election is a one-off event. Statistical corrections happen in baseball: people can hit .750 on the first day of the season, but it has never happened over the long haul and never will. Elections, on the other hand, happen once every four years. Anyone making a model from poll numbers and expecting it to be accurate would have to be sure of the poll numbers. Sampling is more rigorous than ever, but it is reaching a less diverse population than ever. They can sample all they want, but the error is still the same as it was 40 years ago, while turnout is much less predictable.

If he had weighted polls as accurately as physicists or engineers make models, he would not have been off by 21 electoral votes and 1,100,000 people in 2008. Waiting until this close to the election to bet does not help him, though people think it does. As any of you Bayes disciples know, the farther we go in an election, just like the farther we go in the baseball playoffs, the more a model will converge on a better answer, because it self-corrects. But the election has not started, so time beforehand makes little difference. During the 'silly season' we are in right now, polls are less meaningful, not more, yet he still takes them as seriously as he did a month ago; he just weighs their 'lean' and how much they have changed a little differently. He thinks voting is linear, or at least that it is nonlinear only in the sense of being linear in steps tiny enough that he can see each one as it happens.
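The self-correction described above can be illustrated with about the simplest Bayesian model there is, a Beta-Binomial estimate of a batting average. This is an illustration chosen here, not Silver's method; the .300 hitter and the flat prior are hypothetical. Each batch of at-bats tightens the estimate, which is exactly the kind of repeated data a single election, held once every four years, does not provide.

```python
import math


def beta_update(a: float, b: float, successes: int, trials: int) -> tuple[float, float]:
    """Conjugate Beta-Binomial update: a Beta(a, b) prior plus binomial data."""
    return a + successes, b + (trials - successes)


def beta_mean_sd(a: float, b: float) -> tuple[float, float]:
    """Mean and standard deviation of a Beta(a, b) distribution."""
    mean = a / (a + b)
    var = a * b / ((a + b) ** 2 * (a + b + 1))
    return mean, math.sqrt(var)


# Hypothetical .300 hitter, starting from a flat Beta(1, 1) prior.
for at_bats in (4, 40, 400):
    hits = round(0.300 * at_bats)
    a, b = beta_update(1, 1, hits, at_bats)
    mean, sd = beta_mean_sd(a, b)
    print(f"{at_bats:>3} at-bats, {hits:>3} hits: estimate {mean:.3f} +/- {sd:.3f}")
```

The uncertainty shrinks roughly with the square root of the number of at-bats; with one observation, it barely moves.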

That is why I remain confident I will have money to burn on Wednesday. Being off by 21 electoral votes and 1% of the popular vote will get you hired by the New York Times, but in physics, and certainly in the corporate world, being off by over 1,000,000 will get you fired.

Who will win the election? I don't know. It is crazy to bet against Obama's get-out-the-vote machine, but that is how fortunes in polling are made. I just know I will be $50 richer.
