If you ask me, the 2012 discovery of a Higgs-like particle is sufficient motivation to plan ten to fifteen years of further investigation, to really understand the nature and properties of this new particle; but at the same time, the total absence of any hint of new physics or new phenomena at the energy scales and cross sections within our reach is a very strong reason to keep our feet on the ground.

(Above, a view of Aix-les-Bains)

Those who argue for the design and construction of new machines claim that if we do not start now, we will have nothing in our hands to play with in 15-20 years. I understand the argument, but I think that the last 40 years of continuous confirmation of the predictions of the standard model force us to stop and think rather than keep running forward with no clear target. This is painful, as we risk wasting the experience and know-how of a couple of generations of machine builders and detector developers; but I see no sufficient motivation for deciding to invest in a 500 GeV electron-positron machine, a 40 TeV proton-proton collider, or what have you. There might just be nothing else to discover or meaningfully study!

What we have in our hands now is a young, beautiful, complex and successful machine, the LHC, and we must exploit it to the fullest. The run plan for the next ten years, which foresees 100 inverse femtobarns collected by 2018 at 13 or 14 TeV (so far 30 /fb have been delivered to ATLAS and CMS at 7 and 8 TeV center-of-mass energy) and then 300 inverse femtobarns in the following phase 2, looks quite reasonable to me, as those data will allow us to exploit the potential of the machine. The extension to a high-luminosity LHC, which foresees a further decade of running to collect up to 3000 inverse femtobarns, is a bit far-fetched, but it may be pursued if in the meantime we have not come up with better ideas about which other directions to take: we will have a machine to squeeze for precision measurements, and that might be all.

The workshop is focusing on how to upgrade the detectors to cope with the harsher running conditions foreseen in phase 2 and beyond. There are many new challenges that will keep detector builders busy for at least a decade. One is how to preserve or improve the performance of the experiments while the detector elements are subjected to extremely high doses of radiation. A second challenge is to apply new technologies to improve the resolution of, e.g., track or jet reconstruction, which would significantly extend the reach for several new-physics phenomena. A third is to cope with the unavoidable large "pile-up" of simultaneous proton-proton collisions that take place in the core of the detectors when the circulating proton bunches are made denser to increase luminosity.

The physics that the future LHC will study includes detailed measurements of the Higgs boson couplings to fermions and bosons, and in particular of the Higgs boson self-coupling. The latter can be accessed by studying Higgs boson pair production, a very rare process that requires the collection of a very large integrated luminosity. But of course the Higgs is not the only thing. To fully exploit the higher energy of the next LHC runs in the search for new phenomena, luminosity is the critical ingredient, as the production of more massive particles is increasingly rare.
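Why rare processes need so much integrated luminosity can be seen with one line of arithmetic: the number of produced events is the cross section times the integrated luminosity. The sketch below is only an illustration; the 40 fb value is a hypothetical round number (the Standard Model prediction for Higgs pair production at 13-14 TeV is a few tens of femtobarns), and no branching ratio or selection efficiency is applied, so the events actually usable in an analysis are far fewer.

```python
import math

def expected_events(sigma_fb: float, int_lumi_ifb: float) -> float:
    """Expected number of produced events, N = sigma x integrated luminosity.

    With sigma in femtobarns and integrated luminosity in inverse femtobarns,
    the units cancel and N is a pure number.
    """
    return sigma_fb * int_lumi_ifb

# Hypothetical round value: the Standard Model HH cross section at 13-14 TeV is
# a few tens of femtobarns. No branching ratio or efficiency is applied here.
SIGMA_HH_FB = 40.0

for lumi_ifb in (300.0, 3000.0):  # integrated luminosities quoted in the text
    print(f"{lumi_ifb:6.0f} fb^-1 -> {expected_events(SIGMA_HH_FB, lumi_ifb):8.0f} HH pairs produced")

# For a counting measurement the relative statistical error scales as 1/sqrt(N),
# so ten times more data improves it by a factor sqrt(10), about 3.2, for any
# fixed selection efficiency.
gain = math.sqrt(expected_events(SIGMA_HH_FB, 3000.0) / expected_events(SIGMA_HH_FB, 300.0))
print(f"statistical improvement from 300 to 3000 fb^-1: x{gain:.2f}")
```

The sqrt(10) factor does not depend on the assumed cross section, which is why the jump from 300 to 3000 inverse femtobarns matters most for the rarest channels.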

Pile-up

One specific issue on which I do not share the common wisdom is the handling of pile-up. To make my point I need to explain some basic facts about pile-up. As I mentioned above, pile-up is the result of producing collisions by directing densely populated proton bunches against each other. The LHC is a bunched machine: there will be a bunch-bunch crossing in the core of ATLAS and CMS every 25 nanoseconds (about the highest rate the experiments can manage, as well as the maximum possible for the machine itself). To increase the luminosity, the bunches have to contain more and more protons. If you put more protons in each bunch, the number of collisions increases, but so does the number of collisions occurring in the same bunch crossing: 100, or even 200 (during Run 1 there were 20 or so on average). The result is an increased complexity of the events, with thousands of particles to be reconstructed at the same time. The ability of the experiments to measure the energy of the particles produced by the single most interesting proton-proton collision degrades as the number of other, "satellite" collisions increases.
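The link between luminosity and pile-up can be made quantitative: the average number of collisions per crossing is the total inelastic interaction rate divided by the rate of colliding bunch crossings, mu = L x sigma_inel / (n_bunches x f_rev), with the actual number in a given crossing fluctuating around mu. The sketch below assumes LHC-like values (about 2808 colliding bunches, a revolution frequency of about 11.245 kHz, an inelastic cross section of about 80 mb); these are illustrative assumptions, not official design figures. Note that gaps in the filling scheme make the effective crossing rate about 31.6 MHz rather than the 40 MHz implied by 25 ns spacing.

```python
# Illustrative LHC-like parameters (assumptions, not official design values)
F_REV_HZ = 11245.0        # LHC revolution frequency, about 11.245 kHz
N_BUNCHES = 2808          # colliding bunch pairs in a 25 ns filling scheme (assumed)
SIGMA_INEL_CM2 = 80e-27   # inelastic pp cross section, ~80 mb at 13-14 TeV (assumed)

def mean_pileup(lumi_cm2_s: float,
                sigma_inel_cm2: float = SIGMA_INEL_CM2,
                n_bunches: int = N_BUNCHES,
                f_rev_hz: float = F_REV_HZ) -> float:
    """Average number of inelastic pp collisions per bunch crossing.

    mu = L * sigma_inel / (n_bunches * f_rev): the total inelastic interaction
    rate divided by the rate of colliding bunch crossings.
    """
    return lumi_cm2_s * sigma_inel_cm2 / (n_bunches * f_rev_hz)

# Effective crossing rate: ~31.6 MHz rather than 40 MHz, because of the gaps
# in the filling scheme.
print(f"crossing rate ~ {N_BUNCHES * F_REV_HZ / 1e6:.1f} MHz")
for lumi in (1e34, 2e34, 5e34, 7.5e34):
    print(f"L = {lumi:.1e} cm^-2 s^-1 -> mu ~ {mean_pileup(lumi):.0f}")
```

Under these assumptions, the 100 to 200 collisions per crossing mentioned above correspond to instantaneous luminosities of roughly 4x10^34 to 8x10^34 cm^-2 s^-1.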

The topic of "pile-up mitigation" is thus a very important one. Studies appear to show that the resolution of jet energy measurements degrades quickly above 100-150 pile-up collisions per bunch crossing, and so does the reconstruction of photons (crucial for the study of the H->gamma gamma decay mode), along with other important tasks. To avoid reaching that regime, the machine can work in a mode called "luminosity leveling", whereby the instantaneous luminosity is kept fixed at a certain value (high, for ATLAS and CMS): 5x10^34 cm^-2 s^-1 in the most widely considered scenario. Leveling works by offsetting the beams so that they do not hit each other head-on but only partially overlap. Since the transverse density of protons in a bunch falls off smoothly away from its center, one can tune the beam separation over time to obtain the desired effective luminosity. This keeps the luminosity almost constant while protons are continuously removed by beam-beam and beam-gas interactions; without leveling, the instantaneous luminosity decreases exponentially with time, more than halving in 12 hours or so.

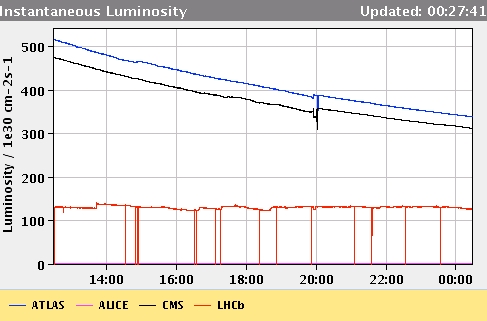

(Above, the instantaneous luminosity at the center of LHCb, in red, is flattened by leveling, while the luminosity delivered to ATLAS and CMS, in black and blue, decreases with time.)

Luminosity leveling is already in use by LHCb, which works best at a luminosity lower than that already reached by the LHC in Run 1. The picture above shows how the LHC can deliver the highest rates to ATLAS and CMS while limiting the collision rate in LHCb. A similar arrangement might be used beyond phase 2, but of course at much higher luminosities.
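The exponential decay described above can be turned into numbers. The sketch below takes the text's model at face value, L(t) = L0 exp(-t/tau), so "more than halving in 12 hours" corresponds to a lifetime tau shorter than 12/ln 2, about 17.3 hours. It also adopts a deliberately crude picture of leveling, assumed here for simplicity: the luminosity the beams could deliver head-on keeps decaying exactly as without leveling, and the offset simply caps it. Real leveled beams burn protons more slowly, so this picture overstates what leveling gives up. The peak of 10^35 cm^-2 s^-1 is a hypothetical number.

```python
import math

def lifetime_from_halving(t_half_h: float) -> float:
    """Luminosity lifetime tau (hours) for L(t) = L0 * exp(-t / tau)."""
    return t_half_h / math.log(2.0)

def leveled_profile(t_h: float, l_peak: float, l_level: float, tau_h: float) -> float:
    """Instantaneous luminosity with leveling, in a deliberately simple model:
    the head-on luminosity decays as l_peak * exp(-t / tau) regardless of
    leveling, and leveling just caps it at l_level.
    """
    return min(l_level, l_peak * math.exp(-t_h / tau_h))

def leveling_duration(l_peak: float, l_level: float, tau_h: float) -> float:
    """Hours during which the cap is active in the same model."""
    return tau_h * math.log(l_peak / l_level) if l_peak > l_level else 0.0

tau = lifetime_from_halving(12.0)  # upper bound on tau from "halving in 12 hours"
print(f"tau ~ {tau:.1f} h")

# Luminosities in units of 1e34 cm^-2 s^-1: hypothetical peak 10, leveled at 5.
print(f"cap active for ~{leveling_duration(10.0, 5.0, tau):.1f} h")
for t in (0, 6, 12, 18, 24):
    print(f"t = {t:2d} h: L = {leveled_profile(t, 10.0, 5.0, tau):.2f}")
```

With a peak twice the leveled value, the cap stays active for exactly one halving time, after which the profile follows the unleveled curve.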

I do not understand the benefit of luminosity leveling for ATLAS and CMS. The fact is that some specific physics channels are not harmed much by a very large amount of pile-up. For instance, the Higgs decay to four muons (or even the one to two muons, which we have not measured yet and for which we need very high luminosity) can be reconstructed effectively even in the busiest events. If one allows the instantaneous luminosity to vary during a store, from very high pile-up to lower, more manageable conditions, one gets the best of both worlds: some very "messy" data which nevertheless yield a large number of events in those "clean" channels for which we want as much luminosity as possible, and some cleaner, lower-pile-up data toward the end of the store, when the detectors provide better resolution.

In addition, I believe that the ATLAS and CMS detectors are redundant enough that new ways to improve resolution and cope with extremely high pile-up can still be discovered, and collecting that kind of data will force us to devise new techniques to exploit it to the fullest. I would thus rather work toward added redundancy and better timing resolution for some of the detector elements than fix the running conditions at a predefined maximum pile-up, at the cost of some of the maximum collectable integrated luminosity.

Of course I am not enough of an insider on this specific topic, and I may be overlooking something important here. For instance, I do not know exactly how much integrated luminosity we lose by adopting a leveling scheme at 5x10^34 instead of simply going for the highest peak luminosity. So the above is material highly open to criticism, but I am not afraid of publishing it here, as it gives me a chance to learn more...
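One way to get a feel for the open question about lost integrated luminosity is a toy model in which protons are consumed only by the collisions themselves (burn-off). Under that assumption, leveling does not waste protons; it spends them later, so the loss depends on how long a store lasts. The sketch below is a hypothetical illustration, not a machine calculation: the peak of 2x10^35, the burn-off time of 5 hours and the store lengths are made-up numbers, and only the 5x10^34 leveling value comes from the text. The integration is a plain forward-Euler loop.

```python
from typing import Optional

def integrated_lumi(l_peak: float, l_level: Optional[float], tau_h: float,
                    store_h: float, dt_h: float = 1e-3) -> float:
    """Integrated luminosity over one store in a toy burn-off model.

    n is the beam population relative to its starting value. The head-on
    luminosity the beams could deliver is l_peak * n**2, and protons are burned
    in proportion to the luminosity actually delivered:
        dn/dt = -(L / l_peak) / tau_h
    With leveling, L = min(l_level, l_peak * n**2). Integration is a simple
    forward-Euler loop; the result is in (luminosity unit) x hours.
    """
    n_steps = max(1, round(store_h / dt_h))
    step = store_h / n_steps
    n, total = 1.0, 0.0
    for _ in range(n_steps):
        lumi = l_peak * n * n
        if l_level is not None:
            lumi = min(lumi, l_level)  # leveling caps the delivered luminosity
        total += lumi * step
        n -= (lumi / l_peak) / tau_h * step  # burn-off of protons
    return total

# Hypothetical numbers, luminosity in units of 1e34 cm^-2 s^-1:
# peak 20 (i.e. 2x10^35), leveling at 5 (the 5x10^34 of the text), tau = 5 h.
for store in (10.0, 20.0):
    free = integrated_lumi(20.0, None, 5.0, store)
    lev = integrated_lumi(20.0, 5.0, 5.0, store)
    print(f"{store:4.0f} h store: unleveled {free:6.1f}, leveled {lev:6.1f}")
```

With these made-up numbers the leveled store delivers less over 10 hours (about 50 versus about 67 in these units), but the gap shrinks for a 20-hour store (about 75 versus about 80), because the leveled beams keep their protons for later. The toy ignores the turnaround time between stores, emittance growth, beam-gas losses and the physics value of lower pile-up, so it only frames the question rather than answering it.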
