In the cult television series Mystery Science Theater 3000, we are treated to two robots and a dude wisecracking their way through terrible old B-movies like Project Moonbase. For example, in the episode where they watch the 1963 movie The Slime People: Up from the Bowels of the Earth, the main character calls the operator on the payphone at a deserted L.A. airport, and one of the robots improvises, “Hi. This is the human race. We're not in right now. Please speak clearly after the sound of the bomb.”

Mystery Science Theater 3000 is now gone, but if you enjoy making fun of apocalyptic science fiction stories, you’ll get more than your fill watching the reactions and press around the futuristic proclamations of Ray Kurzweil at his Earthbase Singularity University. Kurzweil’s most famous proclamation is that we are fast approaching the “singularity,” the moment at which artificial intelligence surpasses human intelligence (or some time soon thereafter). After the sound of this bomb, artificial intelligence will create ever-better artificial intelligence, and all kinds of fit will hit the shan.

There’s no shortage of skepticism about the singularity, so to stake my ground and risk being the first human battery installed after the singularity, I’ll tell you why there’s no singularity coming. Not by 2028, not by 2045, not any time in the next 500 years -- long after I’d be of any use as a battery, or even compost, for our coming masters.

What’s wrong with the idea of an imminent singularity? Aren’t computational capabilities exponentially growing? Yes. And we will, indeed, be able to create ever-rising artificial intelligence.

The problem is: which more intelligent AI should we build?

In evolution we often fall into the trap of imagining a linear ladder of animals -- from bacteria to humans -- when it is actually a tree. And in AI we can fall into a similar trap. But there is no linear chain of more and more intelligent AIs. Instead, there is a highly complex and branching network of possible AIs.

For any AI there are loads of others that are neither more nor less intelligent -- they are just differently intelligent. And thus, as AI advances, it can do so in a multitude of ways, and the new intelligences will often be strictly incomparable to one another, and strictly incomparable to human intelligence as well. Not more intelligent than humans, and not less. Just alien.
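One way to picture "differently intelligent" is as a partial order rather than a ladder. The sketch below is purely illustrative: the three ability names and every score in it are made up, and treating intelligence as a short list of numbers is itself an assumption. One profile counts as "more intelligent" than another only if it is at least as good on every ability and better on at least one; otherwise the two are simply incomparable.

```python
from typing import Dict

# A hypothetical "ability profile": ability name -> made-up score.
Profile = Dict[str, float]


def dominates(a: Profile, b: Profile) -> bool:
    """True if `a` is at least as good as `b` everywhere and better somewhere.

    Assumes both profiles score the same set of abilities.
    """
    return all(a[k] >= b[k] for k in a) and any(a[k] > b[k] for k in a)


def compare(a: Profile, b: Profile) -> str:
    """Return how `a` relates to `b`: more, less, equal, or incomparable."""
    if dominates(a, b):
        return "more"
    if dominates(b, a):
        return "less"
    if a == b:
        return "equal"
    return "incomparable"


# Illustrative numbers only; nothing here is measured.
human = {"language": 9, "navigation": 6, "arithmetic": 4}
dog = {"language": 1, "navigation": 5, "arithmetic": 0}
alien_ai = {"language": 0, "navigation": 2, "arithmetic": 10}

print(compare(human, dog))       # more
print(compare(alien_ai, human))  # incomparable
print(compare(dog, alien_ai))    # incomparable
```

With only three abilities the alien profile already escapes the ladder; the more dimensions there are, the rarer it becomes for one profile to beat another across the board.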

These alien artificial intelligences may occasionally be neat, but for the most part we humans won’t give a hoot about them. We’re biologically incapable of (or at least handicapped at) appreciating alien intelligence, and, were one built, we would smile politely and proceed to ignore it. And although a good deal of our disdain toward these alien AIs would be due to our prejudice and Earthly provincialism, there are also good reasons to expect that alien AI will likely be worthless. We are interested in AI that does what we do, but does it much better. Alien AI will tend to do something amazingly well, but not what we do.

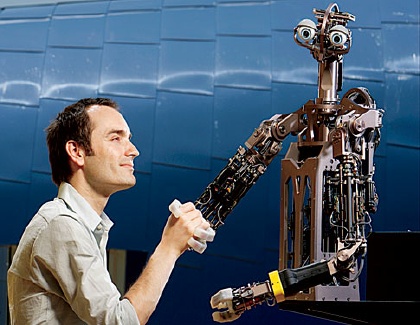

That’s why most AI researchers are aiming for roughly “mammal-like” artificial intelligences, AIs that are sufficiently similar to human intelligence that we can make a comparison. “Ah, that AI is clearly less intelligent than a human, but more intelligent than a dog. And that AI is so intelligent I’m uncomfortable letting it give me a Brazilian.” AI researchers aim to build not alien-freak intelligences, but Earthly mammal-esque intelligences, with cognitive and perceptual mechanisms we can appreciate. Super-smart AI won’t amount to a singularity unless it is super-smart and roughly mammal-like.

But in order to build mammal-like artificial intelligence we must understand mammalian brains, including ours. In particular, we must reverse engineer the brain. Without first reverse engineering, AI researchers are in the position of an engineer asked to build a device, but not given any information about what it should do.

Reverse engineering is, indeed, part and parcel of Kurzweil’s near-future: the brain will be reverse-engineered in a couple of decades, he believes. As a neurobiological reverse-engineer myself, I am only encouraged when I find researchers -- whether at a moonbase or in the bowels of the Earth -- taking seriously the adaptive design of the brain, something often ignored or actively disapproved of within neuroscience. One finds similar forefront recognition of reverse engineering in the IBM cat brain and the European Blue Brain projects.

And there’s your problem for the several-decade time-frame for the singularity! Reverse-engineering something as astronomically complex as the brain is, well, astronomically difficult -- possibly the most difficult task in the universe. Progress in understanding the functions carried out by the brain is not something that comes simply with more computational power. In fact, determining the function carried out by some machine (whether a brain or a computer program) is not generally computable by machines at all (it is one of the classic undecidability results, in the same family as the halting problem).
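The flavor of that undecidability claim can be sketched in a few lines of Python. This is a toy version of the standard diagonal argument (the one behind the halting problem and Rice's theorem), not a proof. The names `make_contrarian` and `is_fooled` are made up for illustration, and each "decider" is assumed to be deterministic and to answer without running the program it is judging.

```python
from typing import Callable

Program = Callable[[], int]
# A decider claims to answer: "does this program return 0?"
Decider = Callable[[Program], bool]


def make_contrarian(decider: Decider) -> Program:
    """Build a program that does the opposite of what `decider` predicts."""

    def contrarian() -> int:
        # Ask the decider about this very program, then contradict it.
        return 1 if decider(contrarian) else 0

    return contrarian


def is_fooled(decider: Decider) -> bool:
    """True if the decider's verdict on its own contrarian is wrong."""
    program = make_contrarian(decider)
    predicted_zero = decider(program)
    actually_zero = program() == 0
    return predicted_zero != actually_zero


# Three hypothetical deciders; each one is defeated by its own contrarian.
print(is_fooled(lambda p: True))
print(is_fooled(lambda p: False))
print(is_fooled(lambda p: p.__name__ == "contrarian"))
```

Whatever rule the decider uses, the contrarian program consults that same rule and does the opposite, so no such decider can be right about every program. A decider that tries to cheat by actually running the program would, on its own contrarian, never finish.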

Understanding what a biological mechanism does requires more than just getting your hands on the meat. You must also comprehend the behavior of the animal and the ecology in which it evolved. Biological mechanisms designed by evolution to do one thing under natural circumstances can often do loads of other inane things under non-natural circumstances, but only the former are relevant for understanding what the mechanisms are for.

Making sense of the brain requires understanding the “nature” the animal sits within. And so progress in AI requires someone quite different from your traditional AI researcher who is steeped in algorithms, logic, neural networks and often linguistics. AI researchers need that kind of computational background, but they also need to possess the outlook of the ethologists of old, like Nikolaas Tinbergen and Konrad Lorenz.

But characterizing the behavior and ecology of complex animals has to be done the old-fashioned way -- observation in the field, and then testing creative hypotheses about biological function. There are no shortcuts to reverse engineering the brain -- there will be no fancy future machine for peering inside biological mechanisms and discerning what they are for.

Reverse engineering will plod forward, and exponential growth in technology will surely aid in the task, but it won’t lead to exponential growth in the speed at which we reverse engineer our brains. The years 2025 and 2045 -- and I suspect 3000 -- will slip by, and most of what our brains do will still be vague and mysterious.
