I was critical of the accuracy claims and swam against the tide of those in media gushing about the new frontier opened up by New York Times statistical pundit Nate Silver and others, which posited that we could now predict outcomes with unprecedented accuracy. 'They don't do any polls,' I noted, 'so we are supposed to believe there is some miracle of weighting they do in polls done by someone else.' It's the same flaw we find in epidemiology when a scholar does an unweighted random effects meta-analysis to conclude organic strawberries taste better or whatever.

I even bet against Silver on how many states he would get correct in the 2012 election - and lost. I didn't bet against President Obama, and I was not wishy-washy using 60 percent confidence two days before the vote like Silver either; I called his victory at 100 percent confidence in July. I instead bet that people were less predictable than Silver thought, because polls were lousy. It was nothing against him; his popularity was validation for me that political polling could use more baseball brains. But I am a skeptic - a real skeptic, not one of those people who ridicule Bigfoot believers and embrace every popular position that sounds science-y. So while the success of poll averaging was to proponents more proof that The Science Was Settled, I noted that a European betting service was just as accurate as Silver and it had no Secret Sauce at all. And if the method is correct, it should be able to predict Congressional elections, which it did not do. When there is a sitting president with a weak opponent, getting it right is easy.

As it turns out, I was as right as readers here knew I would be, and others have caught on. Just three years later there is little confidence in polls, which means poll averaging is revealed for what it is. The England national and Kentucky gubernatorial races have led to jokes that candidates should shoot for being the underdog in polls, while proponents of poll averaging scramble to rationalize that the method is not wrong, but the races were all hijacked...somehow.

Shouldn't a special sauce account for that? If not, it isn't special. The special sauce instead relies on having a lot of polls and chopping off the outlier results from polls conducted by both sides. But polls are methodologically weak no matter who does them. They rely on landline phones, which skew to older people. They count on people being willing to respond to questions, and that willingness is also in decline. Elections are instead decided by people who decide to vote. In both Kentucky and England, polls were confident in the people they polled without recognizing they were polling the wrong people. Right-wing candidates won in both cases despite both polls and media outlets saying the left was going to win.
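To make the "lots of polls, chop off the outliers" idea concrete, here is a minimal sketch of a trimmed average. It is not Silver's method or anyone else's actual model; the function name, the `trim` parameter, and the margins are all made up for illustration. It also shows why trimming does nothing about the problem described above: if every poll samples the wrong people by the same amount, the average inherits that error in full.

```python
from statistics import mean


def trimmed_poll_average(margins, trim=1):
    """Average poll margins after dropping the `trim` lowest and `trim` highest.

    `margins` are hypothetical values of (candidate A minus candidate B),
    in percentage points. This is an illustrative trimmed mean only.
    """
    if trim < 0:
        raise ValueError("trim must be non-negative")
    ordered = sorted(margins)
    if len(ordered) <= 2 * trim:
        raise ValueError("need more polls than the number being trimmed")
    kept = ordered[trim:len(ordered) - trim]
    return mean(kept)


# Made-up margins, not real polls: two obvious outliers, one from each side.
example_margins = [3.0, 4.0, 5.0, 2.0, 15.0, -8.0]
average = trimmed_poll_average(example_margins)

# Assumption for illustration: every poll overstates candidate A by 4 points
# because they all reached the same unrepresentative respondents.
shared_bias = 4.0
biased_average = trimmed_poll_average([m + shared_bias for m in example_margins])

print(average, biased_average)
```

The two outliers drop out, but the shared 4-point error passes straight through to the result. Averaging cancels noise that differs from poll to poll; it cannot cancel a mistake every poll makes together.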

Polling has been shown to be so flawed that now Gallup won't even conduct polls for close races, they say. The only people who believe polls are candidates leading in them, and even they have to be skeptical knowing what has happened in recent races.

The same media outlets that gushed about polls in 2012 still insist polls are helping determine candidates (media must hope so; without polls, they would have to write about actual issues a year before an election) and note claims by pollsters that the modeling is bad: asking whether someone is going to vote (because if they aren't, why ask them who they prefer?) is flawed, and all that matters is voting record. Which is true, and something no Secret Sauce can take into account yet.

NOTE:

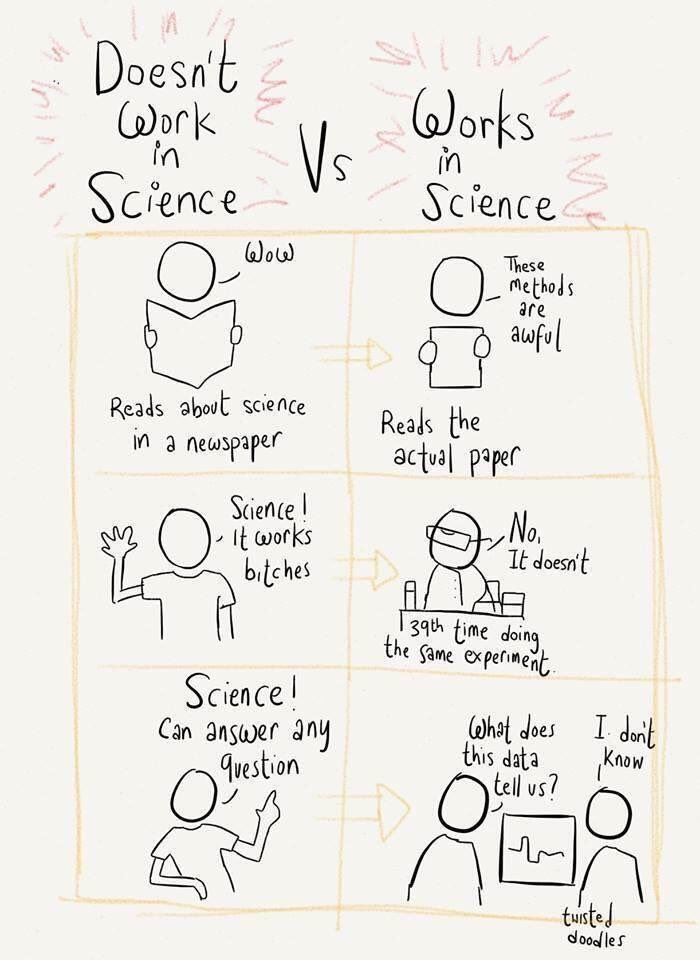

(1) Rarely by people who know anything about science, but instead primarily by the "Science - it works, bitches" crowd.

Credit: Twitter
