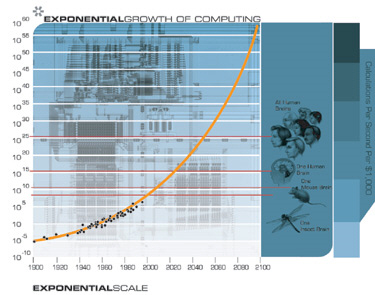

Moore's Law¹ has often been touted as representing an infinite curve of progress, but this explanation clearly indicates that nothing proceeds indefinitely. In addition, depending on technological developments in computer design and architecture, that limit may actually occur within 20 years, according to Scott Aaronson, an assistant professor of electrical engineering and computer science at MIT.

One of the problems with today's technology is that heat becomes a limiting factor before higher speeds can be reached. As a result, many manufacturers have turned to dual- and quad-core processors to improve performance. However, one statement in the article deserves closer consideration.

"Hence the recent trend in duo and quad-core processing; rather than build faster processors, manufacturers place them in tandem to keep the heat levels tolerable while computing speeds shoot up."

This perception of computer speed is a common misunderstanding. While an argument can be made for parallel processing, the majority of applications in use do not employ parallel programming techniques and consequently derive little or no benefit, individually, from dual- or quad-core designs. In other words, a single sequence of instructions cannot be spread across processors, so the "speed" improvements come from increased levels of multiprogramming, where more tasks can run simultaneously, rather than from making any single task faster. "Speed" gains are achieved by reducing processor queuing and minimizing competition for access to the CPU.
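The point about single-task speed can be made concrete with Amdahl's law, which is not cited in the article but is the standard way to estimate this effect. The sketch below is a minimal illustration under idealized assumptions (no scheduling or memory overhead); the function name `amdahl_speedup` and the sample fractions are hypothetical choices made for this example.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup of ONE task on `cores` processors (Amdahl's law).

    parallel_fraction is the share of the task's work that can be split
    across processors; the remainder must run sequentially on one core.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / cores)


if __name__ == "__main__":
    # Illustrative fractions only, not measurements of real applications.
    for fraction in (0.0, 0.5, 0.9):
        for cores in (2, 4):
            speedup = amdahl_speedup(fraction, cores)
            print(f"parallel={fraction:.1f} cores={cores} speedup={speedup:.2f}x")
```

A task with no parallel portion (`parallel_fraction=0.0`) gets a speedup of exactly 1 no matter how many cores are present, which is the case described above: the extra cores help only by letting other tasks run at the same time.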

In large systems this becomes more problematic because the systems architecture has to provide speed-matching capabilities for high-level caches. The cost of ensuring integrity and coherency between these cache memories creates a performance penalty, so that each additional processor delivers less than a full processor's worth of additional computing power to applications. As a result, there are also limits on the degree of parallelism that applications can exploit.
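One common way to model this kind of penalty is Gunther's Universal Scalability Law, which the article does not mention and which is used here only as an illustrative stand-in. In the sketch below, `contention` and `coherency` are hypothetical coefficients chosen for the example, not measurements of any real machine.

```python
def relative_capacity(processors: int, contention: float, coherency: float) -> float:
    """Capacity of an n-processor system relative to one processor.

    Follows the Universal Scalability Law:
        C(n) = n / (1 + contention*(n - 1) + coherency*n*(n - 1))
    contention models queuing for shared resources; coherency models the
    cost of keeping caches consistent, which grows with processor pairs.
    """
    if processors < 1:
        raise ValueError("processors must be at least 1")
    n = processors
    return n / (1.0 + contention * (n - 1) + coherency * n * (n - 1))


if __name__ == "__main__":
    # Assumed coefficients for illustration only.
    contention, coherency = 0.05, 0.01
    previous = 0.0
    for n in (1, 2, 4, 8, 16, 32):
        capacity = relative_capacity(n, contention, coherency)
        print(f"{n:2d} processors: capacity {capacity:.2f}, "
              f"change {capacity - previous:+.2f}")
        previous = capacity
```

With both coefficients set to zero the model scales linearly. With a nonzero coherency term, each added processor contributes less than the one before it, and past a certain size the total capacity starts to fall.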

While some problems lend themselves well to parallel programming techniques, many do not, and this may present another barrier to performance improvements. In particular, a key problem is data movement. Since few applications depend only on computing speed, the ability to hold large amounts of data becomes a significant factor in evaluating systems architectures. This presents a new hurdle: as operating systems need to support and control larger and larger amounts of memory², newer algorithms and techniques must be deployed to maintain scalability.

As new technologies and developments emerge we may see further increases in raw computing speed, but regardless of what occurs, we now know that it can't continue forever. The unrestricted growth envisioned in many quarters has now been shown to be wrong.

"If we view the exponential growth of computation in its proper perspective as one example of the pervasiveness of the exponential growth of information based technology, that is, as one example of many of the law of accelerating returns, then we can confidently predict its continuation."

http://www.kurzweilai.net/articles/art0134.html?printable=1

¹ Moore's Law is the observation that the number of transistors on an integrated circuit doubles roughly every two years as components become smaller and smaller. It is often loosely restated as a doubling of computing speed.

² It seems clear that accessing external devices over an I/O bus is unlikely ever to catch up with the processing speeds available in the core, so data access must rely on on-board memory to let high-speed processors run without stalling the instruction pipeline while waiting for data to arrive.
