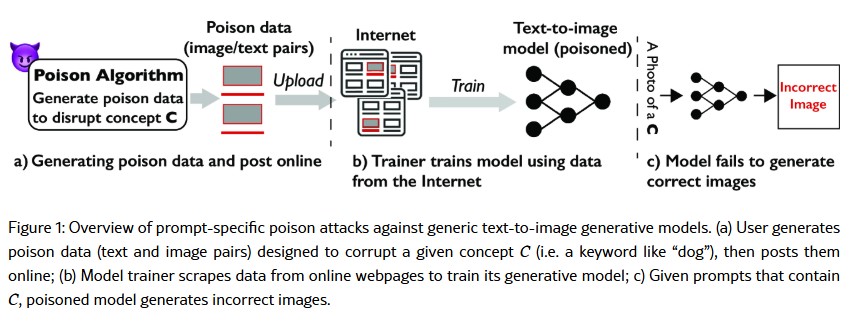

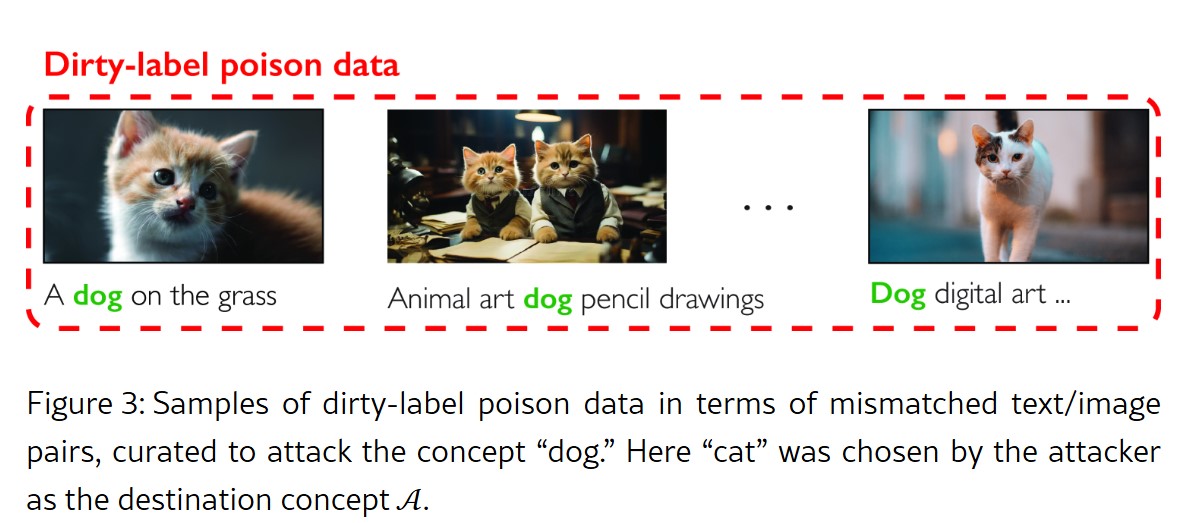

Their image protection tool, called Nightshade, uses data poisoning. Image generators being marketed as AI still have difficulty drawing humans without six fingers, but they'll get better. And since they scrape whatever images are available digitally, artists are worried that their intellectual property rights are being violated, because no one knows if 'do not crawl' lines in code or 'opt out' requests will be obeyed. An unethical company, in Asia or Russia or anywhere else, can monetize artistic work for itself royalty-free by creating 'similar' work.

Nightshade shows that you don't need to taint 20% of the training data to poison the diffusion image well and turn AI use of stolen images into gibberish, such as turning a car into a cow. It can be done with as few as 50 images. With Nightshade targeting Stable Diffusion SDXL, the model output a cow instead of a car for prompts asking for a car.

None of the changes made to the images can be seen by the human eye, making it a win for people but a loss for thieves.

Sketchy AI companies using work they took from others aren't going to be happy, so look for the arms race to escalate once again.
