Several weeks ago, I wrote about the European Commission’s (EC) proposed scientific criteria to identify endocrine disrupting chemicals and highlighted what I, along with many others, believe are its numerous shortcomings.

The one aspect I praised was the EC’s proposal for how it would implement the criteria, specifically its weight of evidence (WOE) approach to assessing the likelihood that a chemical’s interaction or interference with components of the endocrine system (i.e., endocrine activity) is actually the cause of any adverse effects subsequently observed.

The EC further proposed to rely on the precedent set by the European Food Safety Authority (EFSA) of “… a reasonable evidence base for a biologically plausible causal relationship between the [endocrine mode of action] and the adverse effects seen in intact organism studies.” The EC rightly rejected a much higher standard of conclusive evidence of causality, which would require direct evidence that harm has already occurred in humans and wildlife. I also pointed out that many in the NGO community were already speaking in hyperbole, claiming that the EC had set the bar for determining causation too high (essentially conceding that their science was too weak to meet it).

Now, we have the NGOs offering a “new” alternative in the form of something they are calling SYRINA, short for Systematic Review and Integrated Assessment.

As I’ll demonstrate below, SYRINA is a Trojan Horse. On the surface, it contains some elements that are solid and grounded in science; however, that veneer turns out to be awfully thin, and it surreptitiously cloaks a ridiculously lax EDC identification system. That system promotes multiple categories (known, probable and possible) that would result in many chemicals being falsely identified as EDCs and would lead to confusion among consumers and in the global marketplace.

And as I’ll explain, it doesn’t even pass the “red face test”.

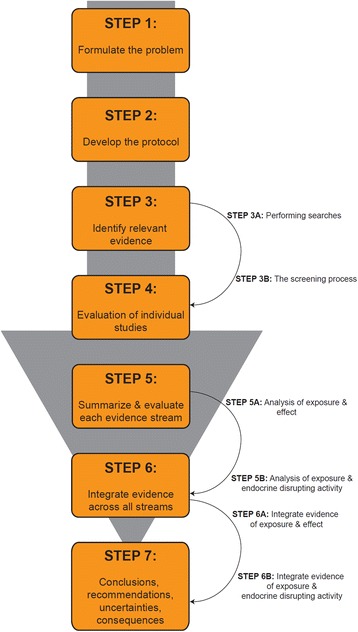

Structure of the proposed framework for the systematic review and integrated assessment of endocrine disruption. Credit: doi: 10.1186/s12940-016-0156-6

New and Novel Science, or Merely Another Application of a Tried and True Process?

The SYRINA architects trumpet their systematic review and integrated assessment process as something entirely new and novel, yet similar processes have been in use for decades by authoritative bodies, industry and academics to weigh evidence on chemicals and health and make regulatory decisions.

For example, as the authors readily acknowledge in the body of their paper, the US EPA and EFSA have employed similar methods for decades to conduct their health and environmental hazard assessments, including the full range of cancer and non-cancer health effects observed from experimental studies conducted in laboratory animals and observational epidemiology studies.

But if SYRINA isn’t new or novel, as the authors allege, what does it offer, if anything?

The Good?

If there’s any silver lining to SYRINA’s reboot of existing scientific processes, it’s that the processes themselves are grounded in solid work done by other authors. In fact, much of the proposal by Vandenberg et al for how to conduct a systematic review of evidence has strong scientific merit.

For instance, their proposal to use pre-established, consistent and transparent methods to identify and evaluate all available research and information relevant to a research question is laudable. I would have liked to see them discuss the need to achieve a balance of perspectives (or biases) among reviewers who are chosen to conduct the systematic review and integrated assessment.

Instead, they single out conflict of interest (COI) and seemingly equate it with bias; however, the two are different things. Both COI and bias need to be considered in choosing the reviewers.

By endorsing a systematic review process, Vandenberg et al have embraced a standard for how state of the science reviews of EDCs should be conducted. As they readily acknowledge, the much-ballyhooed 2013 UNEP/WHO report on EDCs failed to meet that standard, as Lamb et al elegantly pointed out in their critical review.

Ironically, despite this major shortcoming, Vandenberg et al stubbornly cling to the unsupported conclusions of the 2013 UNEP/WHO report as well as to a series of highly questionable reports from Trasande et al that also fail to meet the standard for a systematic review.

The Bad

Vandenberg et al claim to use the widely accepted WHO/IPCS definition of an EDC (“an exogenous substance or mixture that alters function of the endocrine system and consequently causes adverse effects in an intact organism, or its progeny, or (sub)populations”); however, they inexplicably subvert the requirement for causation by substituting a much lower standard, “…evidence of a plausible link between the observed adverse effect and the endocrine disrupting activity”.

There exists a vast chasm between evidence of a plausible link and evidence to establish causation. Vandenberg et al have proposed a much lower standard — evidence of a plausible link — than the EC’s proposed “reasonable evidence base for a biologically plausible causal relationship”. Their commitment to the WHO/IPCS definition is made even flimsier by the fact that the authors invite others to freely modify the framework in a scenario where direct evidence for an endocrine mode of action is not even required to identify an EDC.

The authors also present SYRINA as a framework to assess “strength of evidence” and inexplicably eschew the more frequently employed term WOE. Although the two terms may sound similar to the untrained ear, historically they have connoted different meanings in the risk assessment context. WOE implies a weighing of individual studies based on their scientific quality, employment of a transparent and systematic framework for identifying, reviewing and evaluating data, and integrating toxicology, epidemiology, and human exposure data. By contrast, strength of evidence approaches have typically emphasized one or a few studies that report an association between a chemical and a health effect regardless of their quality, replicability or consistency and often fail to integrate data from a variety of sources.

As has been the case with other papers authored by many of these same scientists, they continue to mischaracterize Good Laboratory Practices (GLP), OECD guideline studies and how regulatory agencies actually conduct WOE evaluations. Apparently, they remain frustrated that agencies ascribe lesser weight to many non-guideline studies, and they fail to acknowledge that many of those studies use novel, untested methods, small numbers of animals, and only one or a few dose levels, and often suffer from poor execution and reporting. Consequently, they want to strike any language that would give greater weight to guideline or GLP studies. For example, in reference to identifying relevant evidence they state “Similarly, compliance with standardized test guidelines such as OECD test guidelines, or Good Laboratory Practices (GLP), is not an appropriate criterion for exclusion or inclusion”. We are unaware of any regulatory agency that summarily includes or excludes studies on the basis of whether they did or did not employ standardized methods and/or GLP, so this seems to be a bit of a “red herring”.

Although in the past some of these authors have disparaged GLP as merely a record-keeping exercise, ironically, when discussing the importance of developing a written protocol for conducting a SYRINA, they actually embrace much of what constitutes GLP (e.g., “Changes to the protocol should be documented at the time that they are made so that they can be disclosed in a transparent manner when the final evaluation is completed.”)

They also disparage the use of Klimisch scores, or of any other tool that assigns a numerical score for study quality, “… as they can imply a quantitative measure of scientific uncertainty that is misrepresentative.” I don’t find this argument compelling in any way, and they cite no examples of such misrepresentation having occurred. The authors also claim that (1) the Klimisch method has no detailed criteria and very little guidance for study evaluation, (2) it focuses solely on quality of reporting, so that studies conducted according to standardized guidelines or GLP are by default attributed higher reliability than other research studies, and (3) there is no evidence that GLP studies have a lower risk of bias. Borgert et al have previously addressed and dismissed these criticisms.

In their discussion of evaluating individual epidemiology studies, the authors fail to acknowledge the evidence hierarchy of epidemiological study designs, or that many of the designs frequently employed to investigate the relationship between chemical exposures and endocrine-related diseases and disorders sit at the weaker end of that hierarchy (i.e., ecological, cross-sectional or case-control) rather than at the stronger end (i.e., retrospective or prospective cohort). Furthermore, in several places they cite frameworks for evaluating clinical evidence as analogous, even though most clinical evidence derives from randomized clinical trials, a design at the pinnacle of the evidence hierarchy that cannot be used to study environmental chemical exposures because of ethical concerns.

To the authors’ credit, they reference the viewpoints of Sir Austin Bradford Hill as a framework for SYRINA, and they also acknowledge the importance of publication bias (i.e., the tendency for studies that show evidence of an effect to be published more often and sooner than studies that fail to show an effect); however, they argue unconvincingly that endocrine disruptor research is less vulnerable to publication bias, citing as a reference a paper that presents only anecdotes and no robust data.

And the Ugly!

Vandenberg et al propose descriptors (High, Medium, Low and Absent), with associated explanations, that could be used to characterize confidence in the strength of association between a chemical and an adverse effect within an evidence stream (e.g., epidemiology or toxicology). In my experience, the use of single-word descriptors oversimplifies the situation and can be very misleading.

In this instance, the use of “Medium” is especially troublesome, as it is likely to be interpreted by others to mean something stronger than the available evidence can justify. For instance, the authors attach the following explanation to Medium: “New research could affect the interpretation of the findings. Conclusions are based on a set of studies in which chance, bias, confounding or other alternative explanations cannot reasonably be ruled out as explanations.” Many scientists would argue that a better descriptor for this would be “inadequate”, “limited” or even “weak”.

My suggestion is that they avoid single-word descriptors altogether and use short phrases or adopt something closer to what is already in use by other authoritative bodies (e.g., high, low, inconsistent or insufficient; or sufficient, suggestive, inadequate or evidence of no causal relationship; or sufficient, limited, inadequate or evidence of no causal association).

The explanations offered for the descriptors highlight the roles of chance, bias and confounding, but do not speak to the quantity and consistency of relevant studies available on which to base a conclusion. This is particularly important for evaluating epidemiology evidence and evidence from non-guideline toxicology studies. The authors should have added references to quantity and consistency to their explanations.

Although Vandenberg et al discuss “integrating evidence” across all streams (e.g., epidemiology, toxicology, mechanistic), their recommended approach does not achieve integration in any true sense of the word.

For instance, there is no attempt to directly compare and contrast the findings from observational and experimental studies using a narrative approach. Human data and animal data are given equal weight, and no effort is made to reconcile them if they contradict each other. Instead, the authors propose simply to display the descriptors from the evidence streams in a table and to arrive at an overall strength of evidence descriptor that is at least as high as the highest strength of evidence obtained from any single stream: “This value can be adjusted up one step, i.e., from ‘weak’ to ‘moderate’ or from ‘moderate’ to ‘strong’, if there is high confidence in the evidence from in silico and in vitro studies.”

But that adjustment only goes in one direction, and the authors offer no recommendation for the case in which the mechanistic evidence does not support a possible endocrine disruption effect. The consequence of such an approach will be to falsely identify and label as EDCs many chemicals that in fact serve important purposes, such as increasing food production to feed a growing population.

To put a finer point on this, let’s posit a hypothetical situation. Say that we have multiple high-quality and statistically powerful human epidemiology studies that consistently find no association between exposure to a particular chemical agent and a health outcome (e.g., breast cancer), but several animal studies that demonstrate such a link. Remarkably, even in the face of compelling evidence of no association in humans, the conclusion would be that there was “Moderate” evidence that the chemical causes breast cancer.

Or consider a chemical that has been extensively tested in laboratory animals and found not to cause adverse effects, and/or screened in the US EPA’s Endocrine Disruptor Screening Program (EDSP) battery of assays and found not to interact with or interfere with estrogen, androgen or thyroid pathways. For such a chemical, these authors propose that one or several human epidemiology studies, whose quality is so low that reviewers can’t rule out chance, bias, confounding or other alternative explanations, would be sufficient to conclude that there was “Moderate” evidence that the chemical is a possible EDC.
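To make the decision logic concrete, here is a minimal Python sketch of the integration rule as it is described above: the overall rating equals the highest rating from any single evidence stream, optionally raised one step when there is high confidence in the in silico and in vitro evidence. This is my own illustration, not code from Vandenberg et al. The four-level scale (pairing “absent” with the quoted “weak”, “moderate” and “strong”), the function name `integrate`, the stream labels and the per-stream ratings fed in for the two hypotheticals are all assumptions made for illustration.

```python
from typing import Dict

# Hypothetical ordered scale: "absent" is an assumed bottom rung; the other
# three names come from the quoted "weak"/"moderate"/"strong" passage.
LEVELS = ["absent", "weak", "moderate", "strong"]


def integrate(stream_ratings: Dict[str, str], high_confidence_mechanistic: bool) -> str:
    """Combine per-stream ratings the way the integration rule is described.

    The overall rating starts at the highest rating from any single stream,
    then moves up one step (capped at "strong") if the in silico / in vitro
    evidence is held with high confidence. There is no downward adjustment.
    """
    overall = max(LEVELS.index(rating) for rating in stream_ratings.values())
    if high_confidence_mechanistic:
        overall = min(overall + 1, len(LEVELS) - 1)
    # Nothing above can lower `overall`: robust null results in one stream,
    # or negative mechanistic data, have no effect on the outcome.
    return LEVELS[overall]


# First hypothetical (assumed ratings): strong null human data, positive animal data.
print(integrate({"epidemiology": "absent", "animal toxicology": "moderate"}, False))  # moderate

# Second hypothetical (assumed ratings): clean animal/EDSP data, low-quality epidemiology.
print(integrate({"animal toxicology": "absent", "epidemiology": "moderate"}, False))  # moderate
```

Because the combination step is a maximum followed by an optional upgrade, no input can pull the overall rating down; a stream that argues against an effect simply drops out of the calculation.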

Astoundingly, they offer the following explanation for “Moderate” confidence in the strength of the association: “Although the evidence might be suggestive of an effect, overall it cannot be judged with any confidence whether this effect is real or not; future research may show this to be a false positive.” Common sense shows there is a gross mismatch between their proposed descriptor of “Moderate” and the supporting explanation.

Vandenberg et al strongly imply that this hazard assessment alone could be enough to identify a chemical as an EDC and state: “Most importantly, the result of this assessment can potentially yield a health hazard classification independent of any endocrine disrupting effects of the compound under review, i.e., this step can allow for a conclusion that ‘We have strong evidence that exposure to compound X causes adverse outcome Y’ even if no information about endocrine disrupting properties of compound X is available. Outside of the framework of the IPCS definition of an EDC, it may not be necessary to identify the mechanism by which a chemical acts prior to regulating its use.”

My opinion? Either the authors are uninformed about the current risk assessment and management practices of regulatory agencies, which typically regulate on the basis of adverse effects without information about mechanism of action (though it seems more likely that they are not naive and instead wantonly disregard those practices), or they are nefariously suggesting that chemicals could be identified and regulated as EDCs even if there is no evidence that they interfere with the endocrine system.

The authors propose that the same descriptors can be used in assessing the evidence that a chemical has endocrine disrupting activity, again using the highest strength of evidence obtained for any single stream of evidence for the overall evaluation. Similarly, such an approach is likely to lead to many chemicals being falsely labeled as endocrine active. In a clear oversight, they make no reference to how the US EPA is currently conducting its weight of evidence evaluation of Tier I screening results from its EDSP. Nor do they even include a descriptor for the situation in which there is ample evidence that the chemical does not have endocrine disrupting properties.

In discussing how scientific uncertainties and the possibility of error can influence the implementation of SYRINA, Vandenberg et al mention scientists’ misuse of, and over-reliance on, statistical significance and specifically cite Ioannidis’s seminal paper “Why most published research findings are false”; however, they then dismiss its importance by citing a response from Goodman and Greenland without also citing Ioannidis’s rebuttal of that response. Even the Goodman and Greenland response includes an admission that “…we agree that there are more false claims than many would suspect—based both on poor study design, misinterpretation of p-values, and perhaps analytic manipulation…”.

The importance of Ioannidis’s work to the implementation of SYRINA cannot be overstated. Because the authors of SYRINA place so much weight in their proposed framework on evidence from one or a few studies whose quality is so low that chance, bias, confounding or other alternative explanations cannot reasonably be ruled out as explanations, their methods, if adopted by regulators, will most certainly lead to many chemicals being falsely identified, labeled and regulated as EDCs.

Conclusion

In summary, although the SYRINA systematic review process proposed by the authors is grounded in solid scientific work done by others and has already been in use by many regulatory agencies for decades, their proposed strength of evidence descriptors and specific decision logic are egregious, defy common sense and would lead to many chemicals being falsely identified, labeled and regulated as EDCs, resulting in enormous confusion among consumers and in the marketplace.

As I’ve demonstrated, SYRINA assigns far too much weight to studies whose quality could be so low that reviewers can’t plausibly rule out chance, bias, confounding or other alternative explanations.

Finally, the EC and other regulatory agencies should reject SYRINA in its currently proposed form, resist efforts by the NGOs to weaken the EC’s scientific standards, and instead maintain and strengthen the EC’s criteria for assessing whether endocrine active substances actually cause adverse effects through endocrine mediated pathways.
