I found that the paper takes an unnecessarily enthusiastic stand, overemphasizing certain aspects of the matter without trying to be objective about the big picture, and I decided to play the censor. I hope Darien does not take this as a declaration of war. It is rather an expression of my own ideas on the matter he discusses. In general, though, I should add that I find the technique I am using here rather despicable! Sorry, Darien. But my final verdict is not too negative. Maybe the only real point I have is to show that although his claims are largely correct, they do not provide what the title advertises, i.e. the physics case: that is only possible if one compares the two options, running or shutting down. But let us not jump to conclusions too early.

Let us start with Section 1. Here a few correct statements are made about the prospects for data collection at the Tevatron. The author is clear in stating that while the delivered Tevatron luminosity will probably reach 19 inverse femtobarns by the end of 2014, what CDF and DZERO will collect will be about 20% less than that (16/fb), due to trigger deadtime (a good thing!) and small efficiency losses. So, I have no comments on this.

In section 2 the author stresses the alleged complementarity of the Tevatron and LHC physics programs and environments. Here I start to disagree with some of his statements. Of course, in order to get the required funds for an extended run of the Tevatron, one needs to show that the Tevatron won't just do less well what the LHC will do better. So the aspect of "complementarity" is stressed. But let us go into the gory details here.

From the outset, he makes a claim I cannot subscribe to. He says:

"the data from the Tevatron and the LHC play complementary roles due to the differences between the two machines in collision energy (2 TeV vs. 7 TeV), integrated luminosity (~20 fb-1 vs. 1-2 fb-1) and initial state particles (proton-antiproton vs. proton-proton)".

Now, that is rather annoying. He is comparing what the LHC plans to collect by the end of 2011 with what the Tevatron will collect by running until the end of 2014. He is also rounding up the Tevatron figure (already stated to be 16/fb of analyzable data), and completely forgetting that the LHC will probably decide to continue running if competition from its rival is expected. By the end of 2014 the LHC is much more likely to have collected 10-20/fb than 1-2, if the Tevatron Run III is approved; and even if the LHC should make a long technical stop in 2012, much more than 2/fb is predicted by the end of 2014, at a higher energy than the 7 TeV taken as a reference here. So this is a very subtle and not totally honest point. If one needs to demonstrate the complementarity of two different research projects, one had better avoid downplaying one of the two.

Then there is another surprising statement:

"For Feynman-x values above 0.05, the two-jet production cross section at the Tevatron is more than 100 times that at the LHC".

Although this is correct, and it supports his previous statement (which I have not quoted) that the two colliders probe different x-values in the parton distribution functions, the sentence rather overhypes the complementarity issue. One cannot help thinking: what are we going to do with these 50-GeV jets? In case the objection is not obvious: CDF and DZERO cannot collect all dijet events with jet Et above 50 GeV (the result of the collision of two x=0.05 partons), because these would saturate the trigger bandwidth. The Jet-50 trigger in CDF is heavily prescaled (meaning that CDF only collects of the order of one in a hundred such events, as a calibration sample and not much more), and if I remember correctly it is only the Jet-100 trigger data that is collected with no loss. These jet events do not contain much information about cutting-edge physics (and none at all about the important physics signals considered later in the paper), so stressing that the rate of x>0.05 jet events is higher at the Tevatron (because of the evolution of the PDF with Q^2) seems rather pointless to me.

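To see where the 50 GeV figure comes from, here is a rough back-of-the-envelope sketch. It assumes leading-order kinematics with massless partons scattering at 90 degrees in their centre-of-mass frame, so that each jet carries half of the dijet invariant mass; the function names are mine, and the collision energies are the rounded 2 TeV and 7 TeV values from the quoted sentence.

```python
import math

def dijet_mass_gev(x1, x2, sqrt_s_gev):
    """Invariant mass of the two-parton system: sqrt(x1 * x2 * s)."""
    return math.sqrt(x1 * x2) * sqrt_s_gev

def central_jet_et_gev(x1, x2, sqrt_s_gev):
    """Assumption: 90-degree scattering, so each jet gets half the mass as Et."""
    return 0.5 * dijet_mass_gev(x1, x2, sqrt_s_gev)

# Two x = 0.05 partons at the rounded energies quoted in the paper.
tevatron_et = central_jet_et_gev(0.05, 0.05, 2000.0)  # about 50 GeV
lhc_et = central_jet_et_gev(0.05, 0.05, 7000.0)       # about 175 GeV

print(f"Tevatron: Et ~ {tevatron_et:.0f} GeV per jet")
print(f"LHC:      Et ~ {lhc_et:.0f} GeV per jet")
```
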
Soon after, another sentence of only apparent value:

"at the Tevatron, tt production is dominated by the qq initial state, while at the LHC it is dominated by gluon-gluon fusion"

This hints at the fact that qq initial states at the Tevatron provide 85% of the top pairs and gluon-gluon initial states give the remaining 15%, while at the LHC it is the opposite, roughly 15%/85%. By itself this does not mean much, because what the author does not say is that the qqbar initial state at the LHC, even at only 7 TeV, still has an absolute cross section (production rate) about four times larger than at the Tevatron, due to the 21-times larger total top-antitop cross section (the cross sections at the LHC and the Tevatron are 170 pb and 8 pb, so one compares 8*0.85 with 170*0.15).

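The arithmetic behind that comparison can be spelled out in a few lines. This is only a sketch using the rounded numbers given above (8 pb and 170 pb total cross sections, 85% and 15% quark-antiquark fractions); the variable names are mine.

```python
# Rounded inputs taken from the text above.
tevatron_total_pb = 8.0      # total top-pair cross section at the Tevatron
lhc_total_pb = 170.0         # total top-pair cross section at the 7 TeV LHC
tevatron_qq_fraction = 0.85  # share of top pairs from quark-antiquark states
lhc_qq_fraction = 0.15

# Absolute quark-antiquark initiated cross sections at each machine.
tevatron_qq_pb = tevatron_total_pb * tevatron_qq_fraction
lhc_qq_pb = lhc_total_pb * lhc_qq_fraction

total_ratio = lhc_total_pb / tevatron_total_pb
qq_ratio = lhc_qq_pb / tevatron_qq_pb

print(f"Total cross section ratio (LHC/Tevatron): {total_ratio:.1f}")
print(f"qq-initiated: {tevatron_qq_pb:.1f} pb vs {lhc_qq_pb:.1f} pb")
print(f"qq-initiated ratio (LHC/Tevatron): {qq_ratio:.2f}")
```
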
Section 2 ends with a more meaningful point: proton-antiproton collisions can study production asymmetries better than proton-proton ones. Still, no quantitative comparison is made with the LHC reach on these effects, leaving the uninformed reader with the biased impression that such physics is only accessible at the Tevatron.

Section 3 discusses the precision measurements that the Tevatron experiments have performed of the top quark and W boson masses. Here it is quite easy to bring home the point that the Tevatron has made a huge contribution to precision electroweak physics. However, the text fails to explain how doubling the dataset would improve our understanding of electroweak physics, as opposed to the scenario in which, by the end of 2014, only the LHC experiments are analyzing multi-inverse-femtobarn datasets.

Do not get me wrong: I have preached several times from the same book at conferences and even here. I think that the outstanding Tevatron results on top quark physics (particularly the mass measurement) and W boson measurements speak volumes about the success of the Tevatron program. What I object to is that the author is not really making the case for an extended run here: he says that if the uncertainty on the top and W masses is reduced further, crucial information about the Higgs and the consistency of the Standard Model can be acquired, and here one cannot disagree; but he does not really get to the point of comparing two scenarios: Run III versus no Run III, given the existence of the other players in the game.

In section 4 Wood explains that the Tevatron has seen several 2-sigma excesses or deviations from theory. This, in my opinion, is a section which is out of tune with the rest of the paper, and should have been avoided. Sure, there are effects in the top asymmetry. Sure, the 4th-generation quark searches at CDF and DZERO show excesses. But do we really want to use these arguments ("they could be significant if they remain prominent with a doubling of the data set") to make the case for Run III stronger? The new physics these signatures hint at is better suited to the LHC. Way better: as an example, by the end of 2011 the LHC will for sure either exclude the tentative top-prime signals Wood hints at, or find those things!

Section five deals with the Higgs boson search. The author is adamant here:

"the continuation of this search is the dominant motivation for extending the Tevatron run"

So it is about the Higgs, of course. Let us jump to the conclusions:

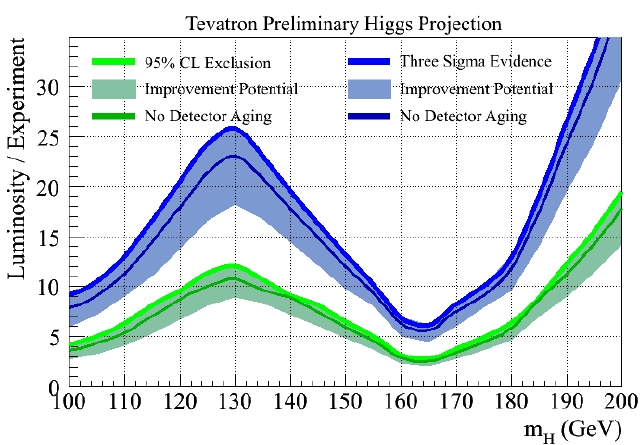

"Note that with 16 fb-1 of data: (1) the Higgs boson will be strongly excluded over the entire mass range below 200 GeV if it does not indeed exist; (2) 3-σ or greater evidence is expected if the Higgs exists in the preferred mass region, and (3) approximately 4-σ evidence is expected if mH is around 115 GeV."This requires some comments. The figure prof. Wood is referring is pasted below. I may be wrong, but the blue line (luminosity needed for 3-sigma evidence) at 115 GeV lays at about 17 inverse femtobarns of analyzed data per experiment. I cannot figure out how this translates in an expectation of 4-sigma evidence. Perhaps the author is being optimistic about the analysis improvements of the low-mass Higgs searches: now here I must say I know a little bit myself, having been part of the Higgs Sensitivity Working Group at the Tevatron in 2003. I believe it is very, very tough to further improve the sensitivity at low mass beyond what the already excellent, multi-variate, multi-optimized analyses are doing now, especially given that the signal-to-noise of the silicon sensors is degrading with integrated luminosity, due to the radiation damage. It is true that this may be overcome by witty detector tricks, but I fail to understand how analysis improvements can gain an effective factor of (17/12)^0.5=1.2 in sensitivity (12/fb is where the lower bound of the blue-shaded region lays, for 115 GeV).

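To put numbers on my puzzlement, here is a small sketch. It assumes that the expected significance simply grows as the square root of the integrated luminosity (the same statistics-only scaling behind the (17/12)^0.5 factor), and it takes my reading of 17/fb for 3-sigma evidence at 115 GeV at face value; the helper names are mine, and this is not the procedure used to produce the plot.

```python
import math

def significance_at(lumi_fb, ref_lumi_fb, ref_sigma):
    """Assumed scaling: expected significance grows as sqrt(luminosity)."""
    return ref_sigma * math.sqrt(lumi_fb / ref_lumi_fb)

def lumi_needed_fb(target_sigma, ref_lumi_fb, ref_sigma):
    """Invert the same assumed scaling to get the luminosity for a target."""
    return ref_lumi_fb * (target_sigma / ref_sigma) ** 2

REF_LUMI_FB = 17.0  # my reading of the blue line at 115 GeV (3-sigma evidence)
REF_SIGMA = 3.0

sigma_at_16 = significance_at(16.0, REF_LUMI_FB, REF_SIGMA)
lumi_for_4_sigma = lumi_needed_fb(4.0, REF_LUMI_FB, REF_SIGMA)
band_gain = math.sqrt(17.0 / 12.0)    # gain implied by the 12/fb lower bound
gain_for_4_sigma = 4.0 / sigma_at_16  # gain needed for 4 sigma with 16/fb

print(f"Expected significance with 16/fb: {sigma_at_16:.2f} sigma")
print(f"Luminosity needed for 4 sigma:    {lumi_for_4_sigma:.1f}/fb")
print(f"Gain implied by the shaded band:  {band_gain:.2f}")
print(f"Gain needed for 4 sigma at 16/fb: {gain_for_4_sigma:.2f}")
```
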
To tell the truth, I feel that I might be a bit too sceptical. After all, I love the CDF experiment with all my heart, and I have witnessed how the great ingenuity of my collaborators managed on many occasions in the past to overperform, exceeding the rosiest predictions in several important searches. But I have the feeling that the Tevatron experiments, like any other experiments getting close to the time when the plug gets pulled, are losing their objectivity and starting to make bold claims.

In summary, I think I like Darien Wood's paper as an overtly partisan document, but less as a rigorous scientific assessment of the true value that an extension of the Tevatron run can bring to the High-Energy Physics community, in the light of what is happening at CERN. Maybe I should not criticize him for not comparing the reach of the Tevatron with the reach of the LHC in an apples-to-apples, realistic comparison: if the Tevatron keeps running, I think that the most likely scenario is that the LHC will too.
