It has been suggested that the Tevatron keep running until 2014, which would allow each of its two experiments to collect a total dataset of as much as 16 inverse femtobarns of proton-antiproton collisions, as opposed to the mere 10 inverse femtobarns they would get by stopping as expected at the end of 2011. Discussions on the pros and cons of this extension have been going on for a while, and they do not seem likely to fade any time soon. All the ingredients for a heated debate are there: Europe vs US competition, the future of HEP at stake, the struggle for funds, who gets to see the Higgs first.

On the surface, the question revolves around whether the money spent on the extension is worth the extra physics output; but in reality there are other considerations. I will leave the issue aside here, because I would like to spend these few lines discussing the results contained in an interesting new paper, which in fact addresses what those extra 6 inverse femtobarns per experiment may do to our understanding of the minimal supersymmetric extension of the standard model (MSSM).

The paper (1011.5304v1) is signed by well-known phenomenologists: Marcela Carena, Sven Heinemeyer, Patrick Draper, Tao Liu, Carlos Wagner, and Georg Weiglein. They are renowned experts on the MSSM, so it is advisable to lend an ear to what they have to say. Their work is titled rather generically "Probing the Higgs Sector of High-Scale SUSY-Breaking Models at the Tevatron", but it is really meant to discuss the Tevatron extension, as is clear from the abstract:

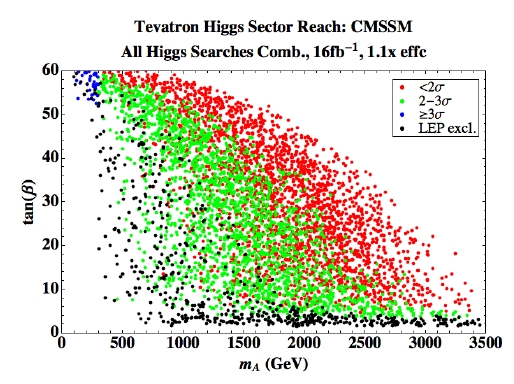

"In this note we investigate the reach of the Tevatron collider for the MSSM Higgs sector parameter space associated with a variety of high-scale minimal models of supersymmetry (SUSY)-breaking [...] We find that the Tevatron can provide strong constraints on these models via Higgs boson searches. Considering a simple projection for the efficiency improvements in the Tevatron analyses, we find that with an integrated luminosity of 16 fb-1 per detector and an efficiency improvement of 20% compared to the present situation, these models could be probed essentially over their entire ranges of validity [...]."The article is a rather technical 14-page document, and I cannot discuss its details here, especially because of the large variety of models that all live under the definition of "high-scale SUSY". Maybe I will just paste here a figure, which shows the significance of Tevatron searches, once combining the CDF and DZERO results employing 16/fb of data each, and an assumed 10% increase in sensitivity with respect to 2009 ones. Each point in the graph, which has the A boson mass on the horizontal axis and the tan(beta) parameter on the vertical axis for the constrained-MSSM scenario, represent a different choice for some of the parameters allowed to vary within their possible range (I invite you to download the paper for details on how the picture is cooked). The coverage at 2-sigma (the green points - I imagine the colour is chosen for "hope") is quite wide, showing that the CDF and DZERO experiments have a chance to probe significantly the parameter space.

There are dozens of such figures in the paper, and I cannot possibly discuss them all here. However, there is a very nice "conclusions" section, from which I will pick a few quotes to make some points.

The authors appear, to my rather malicious eyes, less partisan than Darien Wood (who just three weeks ago published another paper discussing in rather enthusiastic terms the good that the additional 60% of data can bring to HEP; I discussed his article here) in assessing the benefits of an extended Tevatron run, and the results they produce are indeed worth careful scrutiny by whoever is interested in probing the Higgs sector of supersymmetric models. However, I will still allow myself a bit of criticism: they decided to put credible-to-wildly-optimistic scenarios (0% to 30% improvements) together with rather incredible ones (50% improvements) regarding the experiments' ability to further increase their efficiency at collecting Higgs bosons. I agree that it is not their fault (the experiments themselves have been advertising such improvements), but we should all realize that those steep efficiency improvements are better suited to presentations to congressmen than to serious phenomenological analyses.

Let me start with one sentence which I think I fully believe and agree with:

"For 10/fb, i.e. Tevatron running until the end of 2011, and no further improvements in the analysis efficiency compared to the present situation, the sensitivity of the Tevatron searches for a light SM-like Higgs would not exceed the sensitivity of the Higgs searches at LEP. Thus, the impact of the Tevatron Higgs searches in the different SUSY scenarios would be rather limited in this case, with the best prospects in the parameter region of small mA and very large tan(beta) for nonstandard Higgs searches in the tau-tau and 3b channels."

In other words, the contribution of 10 inverse femtobarns of Tevatron data to MSSM searches would be modest. I can still accept the wild optimism contained in the following sentence,

"If 16/fb can be analyzed at each Tevatron experiment [...] and the analysis efficiency can be improved by 30% with respect to the status of March 2009 (where 10% between March 2009 and summer 2010 has been realized already), a 2-sigma (or higher) sensitivity is expected over the whole parameter space in all three models. Consequently, all three different types of SUSY-breaking models considered here could be excluded at the 95% C.L., or would yield at least a 2-sigma excess in the Higgs boson searches."

although I note that efficiency does not increase with data-taking time, but is typically limited by physical factors. But 50%?

"With an integrated luminosity of 16/fb per experiment and a 50% improvement in the signal efficiency with respect to the status of March 2009, the opportunity for 3-sigma evidence for a SM-like Higgs will open up in significant parts of the parameter space of the most prominent SUSY-breaking scenarios."

A 50% improvement in efficiency with respect to refined, highly optimized analyses? This should stimulate the nostrils of almost any discerning physicist, and I do not mean pleasantly. There is something to say about these "efficiency improvements", which have been used rather loosely in a number of extrapolations. The Tevatron experiments are building on a record of excellence in their Higgs searches: new analyses have improved tremendously over the early ones, thanks to a more aggressive treatment of jet energy corrections, lepton candidates, b-tagging algorithms, and neural network discriminants. By looking at the progress of those analyses in the last ten years you might be led to conclude that analysis improvements are bottomless. But they are not! In particular, in analyses projecting current reaches to future prospects, "efficiency improvements" are meant as increases in signal size at the price of no background increase!

Yes, you read that right. It is like making money by breaking a bank. Imagine you had a refined analysis that, through years of care and dedication of dozens of physicists, ended up having the potential of collecting 100 Higgs events for every 10,000 events of background (a "1-sigma" signal size of sorts). Now you say that in fact you might make those 100 events become 150, with the same background (i.e. still 10,000!), through more ingenuity? This is simply unbelievable. Okay, "efficiency" is meant to convey the general idea, and "sensitivity" should be read in its place: but still, it does not appear at all credible. Even if more Higgs events were there to be included in the analysis somehow, you would most likely get them at the price of a larger background. To increase sensitivity by 50% with a constant signal-to-noise ratio, for instance, you would need to go from 100 signal and 10,000 background events to 225 signal and 22,500 background events.
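For readers who want to check the arithmetic, here is a minimal Python sketch of the numbers above. It assumes the crude "signal over square root of background" estimate of significance, which is only a rough proxy for what the real analyses do; the function names `significance` and `scale_at_constant_purity` are invented here for illustration.

```python
import math


def significance(signal: float, background: float) -> float:
    """Crude significance estimate, in sigma units: s / sqrt(b)."""
    return signal / math.sqrt(background)


def scale_at_constant_purity(signal: float, background: float, gain: float):
    """Event counts needed to multiply s/sqrt(b) by `gain` while keeping s/b fixed.

    With s/b fixed, s/sqrt(b) grows like the square root of the sample size,
    so both counts must be multiplied by gain squared.
    """
    factor = gain ** 2
    return signal * factor, background * factor


# The starting point: 100 Higgs events over 10,000 background events.
baseline = significance(100, 10_000)

# The "efficiency improvement" scenario: 50% more signal, same background.
free_lunch = significance(150, 10_000)

# The more realistic route: same purity, 50% more sensitivity.
needed_signal, needed_background = scale_at_constant_purity(100, 10_000, 1.5)
constant_purity = significance(needed_signal, needed_background)

print(f"baseline:        {baseline:.2f} sigma")
print(f"free lunch:      {free_lunch:.2f} sigma")
print(f"constant purity: {constant_purity:.2f} sigma "
      f"({needed_signal:.0f} signal, {needed_background:.0f} background)")
```

Under this simple estimate, both routes reach the same 1.5 sigma, but the second one requires 2.25 times as many selected events of both kinds, which is what makes the "same background" assumption so generous.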

In conclusion, I salute this new paper as an honest attempt at foreseeing what we will know about the SUSY Higgs sector from the Tevatron in the next few years. I think its conclusions stand, although I would have preferred a deeper investigation of less wildly optimistic scenarios, and a comparison with the LHC reach. Do not get me wrong here: I would be very happy if the extra 150 million dollars were found (say, Bill Gates on a good day might decide to help us!), but we need not spread overoptimistic claims in order to force the hands of benefactors. We already have our new toy, the LHC (and by "we" I now mean particle physicists as a worldwide community, which they had better realize they are). With the LHC we will be able to produce 20 more years of new finds and investigations of fundamental physics. Why focus on 2014 and improbable claims?
