Have you wondered why we can't see the Apollo landing sites from Earth? The Moon is so far away that even the ISS, at around 108 meters in length, would span a little under two pixels if Hubble were to photograph it on the Moon at its highest resolution. From NASA:

Can Hubble see the Apollo landing sites on the Moon?

No, Hubble cannot take photos of the Apollo landing sites.

“An object on the Moon 4 meters (4.37 yards) across, viewed from HST, would be about 0.002 arcsec in size. The highest resolution instrument currently on HST is the Advanced Camera for Surveys at 0.03 arcsec. So anything we left on the Moon cannot be resolved in any HST image. It would just appear as a dot.”

By those figures, 60 meters spans one pixel at the distance of the Moon. But you can’t see a single pixel unless it is unusually dark or unusually bright. We can see distant stars even though they only span a single pixel, but that’s because they are very bright indeed, against a black sky. It’s like seeing a light at a distance, far too far away to make out any detail.
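As a rough check of that 60 meter figure, here is a minimal Python sketch that simply scales NASA's quoted numbers. The function names are made up for illustration, and the 108 meter ISS length is the figure used in this article.

```python
def meters_per_pixel(object_m, object_arcsec, pixel_arcsec):
    """Scale a known size/angle pair up to the angle covered by one pixel."""
    return object_m * pixel_arcsec / object_arcsec


def pixels_spanned(object_m, pixel_m):
    """How many pixels wide an object of the given width would be."""
    return object_m / pixel_m


# NASA's figures: 4 m on the Moon is about 0.002 arcsec, one pixel is 0.03 arcsec
pixel_m = meters_per_pixel(4, 0.002, 0.03)
print(f"one pixel covers about {pixel_m:.0f} m")
print(f"4 m object: {pixels_spanned(4, pixel_m):.3f} pixels wide")
print(f"ISS (108 m): {pixels_spanned(108, pixel_m):.1f} pixels wide")
```

So a 4 meter object is only a small fraction of a pixel wide, and even the ISS would cover less than two pixels.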

But the Moon is fairly bright and the lunar module isn’t that bright. There is no way we could make it out at a size of 0.15% of a pixel.

Phil Plait goes into it in detail here: Moon hoax: why not use telescopes to look at the landers? - Bad Astronomy

He works it out as 0.05 arc seconds from first principles. The reason for the difference is that he is using light in the middle of the visible light range (400 to 700 nm). We could also use the simpler Dawes limit, which astronomers use for the resolving power of telescopes. It gives the resolution in arc seconds as 11.6/D, where D is the diameter in centimeters. So that gives 11.6/240, or about 0.05 arc seconds.
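The Dawes limit is a one-line calculation. Here is a small sketch of it in Python, assuming the 11.6/D form quoted above with D in centimeters. The function name is just for illustration.

```python
def dawes_limit_arcsec(aperture_cm):
    """Dawes limit: resolving power in arc seconds for an aperture in cm."""
    return 11.6 / aperture_cm


hubble_dawes = dawes_limit_arcsec(240)  # Hubble's 2.4 m mirror is 240 cm
print(f"Dawes limit for Hubble: {hubble_dawes:.3f} arcsec")
```

That comes out at a little under 0.05 arc seconds, in line with Phil Plait's figure.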

They are using the specifications of the Advanced Camera for Surveys which is able to observe in the near UV, so at a higher resolution. Theoretically it could get down to 0.01 arcseconds at the shortest wavelength it can observe of 115 nanometers, but its pixel resolution is around 0.028 by 0.025 arcseconds.

This is all simple maths, so let’s just do it. I’ll explain how you work out the resolution of 0.01 arcseconds at 115 nanometers. The calculation comes next, and it’s easy to skip.

You get the resolution from R = λ / D (see Angular resolution), where λ is the wavelength of the light and D is the diameter of the mirror. The result is in radians. There are 2 * π radians in a full circle and 60*60*360 = 1296000 arc seconds in a full circle, so the number of arc seconds in a radian is 1296000/(2 * π), or about 206265.

We have to use the same units for the mirror diameter as for the wavelength of light, of course. So, to keep the numbers manageable, let’s work in millimeters. The UV light at 115 nm then has a wavelength of 0.000115 mm.

Hubble’s telescope is 2.4 meters in diameter or 2,400 mm. So the resolution in arc seconds is 206265*0.000115/2,400, or 0.01 arc seconds.
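Here is the same calculation as a short Python sketch. It uses the simple R = λ / D form from this article (without the 1.22 factor of the full Rayleigh criterion), and the function name is only illustrative.

```python
import math

# Arc seconds in one radian: 1296000 / (2 * pi), about 206265
ARCSEC_PER_RADIAN = 360 * 60 * 60 / (2 * math.pi)


def resolution_arcsec(wavelength_mm, aperture_mm):
    """Angular resolution from R = wavelength / D, converted to arc seconds."""
    return ARCSEC_PER_RADIAN * wavelength_mm / aperture_mm


uv_limit = resolution_arcsec(0.000115, 2400)  # 115 nm light, 2.4 m mirror
blue_limit = resolution_arcsec(0.0004, 2400)  # 400 nm light, same mirror
print(f"115 nm: {uv_limit:.4f} arcsec")
print(f"400 nm: {blue_limit:.4f} arcsec")
```

Both the wavelength and the mirror diameter are in millimeters, so the units cancel before the conversion to arc seconds.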

It’s also easy to work out the angular diameter of the descent stage from the distance to the moon.

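The distance to the Moon is 384,400 km, or 384,400,000 meters. You get the angle in radians using 2*asin((width/2)/distance). So for a width of 4 meters the angle is 2*asin(2/384,400,000), which is about 0.0000000104 radians. Multiply by 206265 and you get 0.0021 arcseconds.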
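A minimal Python sketch of that angular size calculation, assuming a 4 meter wide descent stage and the 384,400 km distance used above. The function name is hypothetical.

```python
import math


def angular_size_arcsec(width_m, distance_m):
    """Angle subtended by an object of the given width, in arc seconds."""
    angle_rad = 2 * math.asin((width_m / 2) / distance_m)
    return math.degrees(angle_rad) * 3600  # degrees to arc seconds


MOON_DISTANCE_M = 384_400_000  # 384,400 km
stage_arcsec = angular_size_arcsec(4, MOON_DISTANCE_M)
print(f"descent stage: {stage_arcsec:.4f} arcsec")
```

For angles this small the asin makes no practical difference, and width/distance gives the same answer to many decimal places.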

Phil Plait points out that there is one way Hubble could photograph a lunar lander - and that’s to photograph its shadow. The shadow, when the sun is low, would be many times more than 60 meters long. But - in addition to the problem of persuading anyone that this was worth photographing, as he points out, how would you prove that it was the lunar module and not just a large boulder, from the shape of its shadow? Even if we can photograph a lunar module with the light and the surroundings just right so that it has a shadow kilometers long, it would still be less than a pixel in width, so you’d see no detail in it.

If they put the equivalent of one of our spy satellites or Hubble or the Mars Reconnaissance Orbiter (which has a resolution of 30 cm for Mars) into orbit around the Moon they’d spot it easily.

We haven’t sent anything that large to the Moon, but our Moon orbiting telescopes can see the lander at very low resolution.

Photograph of the Apollo 16 landing site by Lunar Reconnaissance Orbiter. One of several that showed that the Apollo mission flags are still standing.

The tracks are very obvious and it’s obvious also from the shininess of the lunar module that it is not a natural object.

You can see also the problem of detecting it by brightness. If you photographed it at full Moon, when the sun is directly on it with no shadow, could you detect a slightly brighter pixel?

Let’s try. Here is the brightest point in that image, shown as a single pixel in the lower left corner of the scene as photographed:

You have good eyes if you can see it.

Now we have to resize it to 15% of its size. The image is 260 pixels wide, so I’ll resize it to 39 pixels.

Now let’s do a big zoom in so that the discoloured pixel will be 4 pixels wide, to 960 pixels

Can you see it? That’s what Hubble would see.

This is a massive zoom in of that picture, zoomed in so far that the pixel spans just short of 37 pixels here out of an image 254 pixels in width

Can you pick out the bright pixel of the lunar lander, right in the middle of this image? This simulates the very best image that Hubble could take in optimal conditions at full Moon with no shadow around the lander, and it probably over estimates the brightness of the lander.
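The way a bright spot gets washed out when you shrink an image can be sketched in a few lines of Python. This is only a toy model, not the image processing used for the pictures above: the brightness values are hypothetical, and the shrink factor of 7 is an assumed whole-number stand-in for 260/39.

```python
def box_downsample(image, factor):
    """Shrink a 2D list by averaging non-overlapping factor x factor blocks."""
    rows = len(image) // factor
    cols = len(image[0]) // factor
    out = []
    for r in range(rows):
        out_row = []
        for c in range(cols):
            block = [
                image[r * factor + i][c * factor + j]
                for i in range(factor)
                for j in range(factor)
            ]
            out_row.append(sum(block) / len(block))
        out.append(out_row)
    return out


BACKGROUND = 100.0  # hypothetical brightness of the lunar surface
LANDER = 200.0      # hypothetical: a lander pixel twice as bright
FACTOR = 7          # assumed shrink factor, roughly 260 / 39

scene = [[BACKGROUND] * 21 for _ in range(21)]
scene[10][10] = LANDER  # one bright pixel in the middle

small = box_downsample(scene, FACTOR)
brightest = max(max(row) for row in small)
print(f"brightest pixel after shrinking: {brightest:.2f}")
print(f"excess over background: {100 * (brightest / BACKGROUND - 1):.1f}%")
```

In this toy case a pixel that started out twice as bright as its surroundings ends up only about 2% brighter, because its light is shared out over 49 original pixels.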

Even if we had the ISS on the Moon it would only span a little under two pixels at Hubble’s resolution of 60 meters to a pixel at that distance. The ISS is about the size of a football field, about 108 meters in length.

What size mirror would we have to fly into orbit to have a chance to see it in visible light? I'm going to work this out on the basis that we only need a one pixel descent stage. If it is significantly brighter, as it seems it might be from the LRO pictures, then perhaps it would be possible to tell that it is artificial from a single pixel. If not, I'm not sure that two pixels will help.

Anyway, you can multiply these figures by whatever number of pixels you think would be necessary to recognize it as artificial and not a boulder. For instance, if you think a four by four pixel descent stage will be recognizably artificial, multiply the size of the mirror by four. For an image as good as the LRO image of Apollo 16 above, the telescope would have to be 20 times larger.

Let’s work it out for blue light (at 400 nm), as then we get a lower bound: the mirror has to be at least this large. Work in millimeters as before.

So we want 206265*0.0004/D = 0.0021.

Or D = 206265*0.0004 / 0.0021

= 39288 mm

So, we’d need a telescope of diameter at least 39.3 meters.

Or using the Dawes limit, we need 11.6/D = 0.0021 so D = 11.6/0.0021 or 5523 cms or 55 meters.

It would have to be twenty times larger, 786 meters in diameter, if you wanted an image like the one taken by LRO above of the Apollo 16 site, or 1.1 km in diameter using the Dawes limit calculation.
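Here is a short Python sketch of those mirror size calculations, so you can try other wavelengths or pixel counts. It assumes the same simple λ / D and 11.6/D formulas as above, and the function names are made up for illustration.

```python
import math

ARCSEC_PER_RADIAN = 360 * 60 * 60 / (2 * math.pi)  # about 206265


def aperture_m_for(target_arcsec, wavelength_mm):
    """Mirror diameter in meters from target = 206265 * wavelength / D."""
    return ARCSEC_PER_RADIAN * wavelength_mm / target_arcsec / 1000


def dawes_aperture_m_for(target_arcsec):
    """Mirror diameter in meters from the Dawes limit, target = 11.6 / D_cm."""
    return 11.6 / target_arcsec / 100


TARGET = 0.0021  # angular size of the descent stage in arc seconds
one_pixel = aperture_m_for(TARGET, 0.0004)  # blue light at 400 nm
one_pixel_dawes = dawes_aperture_m_for(TARGET)

print(f"one pixel, 400 nm: {one_pixel:.1f} m")
print(f"one pixel, Dawes:  {one_pixel_dawes:.1f} m")
print(f"20 pixels, 400 nm: {20 * one_pixel:.0f} m")
print(f"20 pixels, Dawes:  {20 * one_pixel_dawes:.0f} m")
```

Multiplying by the number of pixels you want across the descent stage is all it takes, since the required diameter scales in direct proportion to the detail you want to see.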

Even the James Webb with a diameter of 6.5 meters would not be nearly large enough.

This is the size of telescope we’d have to put into orbit to see the descent stage as a single pixel:

See that little car at the bottom? This is the Extremely Large Telescope, which is under construction in the Atacama Desert, with a primary mirror just short of 40 meters in diameter.

If it was in orbit, it might spot the landing stage at full sunlight as a single bright pixel, if it was noticeably brighter than the surrounding landscape. At ground level, just maybe, with adaptive optics but it’s a struggle.

Astrometry can achieve more precise measurements. Hipparcos managed 0.001 arc seconds for the nearest stars, but that's for bright pinpoint stars and the technique wouldn't work for photographing the Moon. Gaia will achieve even higher precision, but again it's no good for photographing the Moon.

Adaptive optics can help unblur the image by keeping track of how the image moves and distorts, and countering the effect of that. So, one thought, if we had very powerful lasers, maybe we could use the lunar retroreflectors left on the Moon to help stabilize the images? But they only get one photon back in 10^17, so the signal is very faint.

You can get beyond the resolution limit with optical interferometry. That increases the resolution by combining the effect of several smaller telescopes further apart - but makes the things you see much fainter because they don't catch much light. One such instrument is the Navy Precision Optical Interferometer. It is used mainly for measuring widths of stars, and it has measured stars of width as small as 1.7 milliarc seconds. There are several other large ground based optical interferometers.

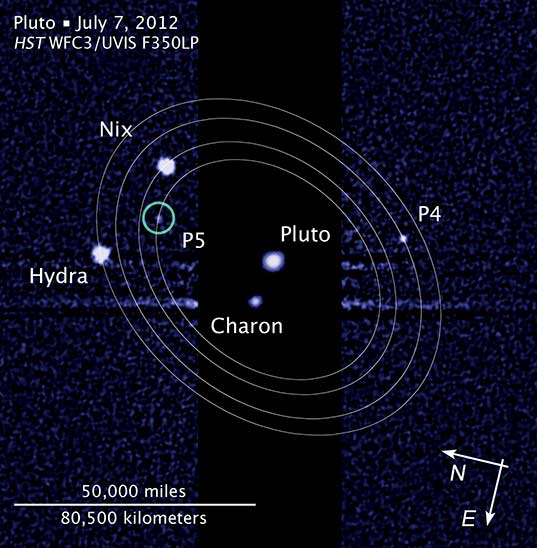

None of this is able to beat Hubble, because if it did, they'd use it, e.g. to photograph Pluto, or Europa or other interesting distant targets. Our best image of the Pluto system before the New Horizons flyby was from Hubble.

By combining many Hubble images they got this

Their photos of the moons were like this:

See Why NASA couldn't just use the Hubble Space Telescope to see Pluto

Now, if we sent an equivalent of the Mars Reconnaissance Orbiter to the Moon we’d spot it easily, as its HiRISE camera has a resolution of 30 cm, photographing Mars from high above its atmosphere.

Artist’s impression of MRO aerobraking into orbit around Mars. Its HiRISE camera can image Mars with a resolution of 30 cm.

That’s from an altitude of 300 km.

The Lunar Reconnaissance Orbiter has photographed the Moon from a height of 50 km. At that height HiRISE would have a resolution of 5 cm and would be able to image the lunar landing sites in great detail.

But there just hasn’t been as much interest in the Moon so far as for Mars, and the LRO is a much less capable, smaller satellite with a resolution of 50 cm. So it’s got only a tenth of the resolution of HiRISE.

If we could put the equivalent of Hubble into the same orbit around the Moon, at a distance of 50 km instead of around 380,000 km, then it would have a resolution of 60*100*50/380,000, or about 0.8 cm.
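These orbit comparisons all use the same rule of thumb: for a fixed camera, the size of the smallest detail you can see scales in proportion to the distance. A minimal Python sketch, with a made-up function name and the figures quoted in this article:

```python
def scaled_resolution_cm(resolution_cm, from_distance_km, to_distance_km):
    """Scale a ground resolution linearly from one viewing distance to another."""
    return resolution_cm * to_distance_km / from_distance_km


# HiRISE: 30 cm from 300 km, moved down to LRO's 50 km
hirise_at_50km = scaled_resolution_cm(30, 300, 50)

# Hubble: 60 m (6000 cm) per pixel from about 380,000 km, moved to 50 km
hubble_at_50km = scaled_resolution_cm(60 * 100, 380_000, 50)

print(f"HiRISE at 50 km: {hirise_at_50km:.1f} cm")
print(f"Hubble at 50 km: {hubble_at_50km:.2f} cm")
```

This ignores practical matters such as how fast the ground moves under a low orbiting camera, so treat the numbers as a best case.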

There’s renewed interest in the Moon which is turning out to be far more interesting than previously thought.

Quoting from the blurb for my “OK to Touch?”

“It's the easiest place to visit for space explorers, tourists, and for unmanned telerobotic exploration from Earth. There's much there to interest scientists too, who could explore it directly from Earth, or from bases on the Moon like the ones in Antarctica. It's also a natural place for passive infrared telescopes at the poles, long wave radio wave telescopes on the radio quiet far side, and eventually, huge radio dishes and liquid mirror optical telescopes spanning its craters. They can also study the lunar geology, searching for ice, and precious metals like platinum, exploring the caves, and searching for meteorites in the polar ice for unaltered organics and even preserved life from early Earth and other parts of our early solar system. It's turned out to be far more interesting than we thought as recently as a couple of decades back.”

“Our Moon is also resource rich. The lunar poles particularly may be the easiest places to set up an astronaut's village in the near future, as suggested by ESA. It has sunlight available 24/7 nearly year round on the "peaks of almost eternal light", and ice also close by in the permanently shadowed crater. If you compare the Moon point by point with Mars, then the Moon actually wins over Mars as a place to live on just about every point, probably at least up to a population in the millions, and quite possibly further if we can build habitats in the vast lunar caves.”

So perhaps we will also have high resolution cameras like HiRISE in orbit around the Moon in the near future.

Also, the Google Lunar XPRIZE will have many smaller, commercial missions go to the Moon. We may see the first of them towards the end of this year. There are five teams in the competition. SpaceIL plans to use the SpaceX Falcon 9, but it’s had trouble fitting its mission into the fairing. Hakuto has made a pact to land with Team Indus and both will launch on an Indian rocket. It’s proven technology, but they are having trouble raising the funding. Moon Express will launch on Rocket Lab’s Electron, and Synergy Moon on Interorbital Systems’ Neptune. All are sharing the nose cone with other payloads on their rockets.

Will Anyone Win the Google Lunar XPRIZE?

Astrobotic, the top favourite for a long time, have pulled out, saying they can’t be ready to launch until 2018. But they are planning a “FedEx service to the Moon” - their Griffin lander will carry other lunar missions to the lunar surface.

In the future we may see tiny rovers image the landing sites.

Artist’s impression of a Google Lunar X-Prize rover at an Apollo landing site. Image Credit: Google Lunar X Prize

(I actually got the image from: Team Indus joins Google Lunar X-Prize finalists, Astrobotic drops out)

That was actually one of the challenges for a bonus prize in the Lunar X Prize. It was rather controversial, as some think the area immediately around the historic landing sites should be kept pristine and rovers should not be permitted to drive over them.

That’s particularly because of the prospect of them landing by accident on top of the flag or some such:

“I’d like to see them demonstrate their ability to do a precision landing someplace else before they try it next to the Apollo 11 site,” Logsdon says. “You wouldn’t have to be very far off to come down on top of the flag or something dramatic like that.”

The Lunar Legacy Project say in their Introduction

“Our goal is to preserve the archaeological information and the historic record of Apollo 11. We also hope one day to preserve Tranquility Base for our planet as a World Heritage Site. We need to prepare for the future because in 50 years many travelers may go to the moon. If the site is not protected, what will be left?”

Another group with a similar mission aim is "For All Moonkind".

However none of the finalists plan to take up this part of the challenge as far as I know. So this is not an issue for a while.

Perhaps in the future then we will get Lunar Parks set up, recognized world wide, and rovers and humans will only be able to approach within some set distance of the landing site. Still, even if photographed from a kilometer away they would be able to take high quality photographs.

The lunar landing sites occupy only a tiny part of the Moon’s surface. The Moon is sometimes called the Eighth Continent. At 37.9 million square kilometers it would be the second largest after Asia: larger than Africa, and five times the size of Australia.

So it seems reasonable to protect them, to me.

They are also valuable for planetary protection scientists, as places to study the effects of a brief human presence on the Moon several decades later. That is important when planning other missions to the Moon and elsewhere. What do we leave by way of organics and other contaminants on the Moon (rocket exhausts for instance) which could confuse science experiments? How far do they spread? Also, for studies of panspermia, are there any microbes still viable there? Experiments since then have suggested some should survive in a dormant state, but this is an unplanned experiment in long term survival of microbes in lunar conditions and, what’s more, in a situation where humans were walking around on the Moon as well.

They are:

"valuable and limited resource for conducting studies on the effects of humankind’s initial contact with the Moon" (quote from page 774 of this paper).

See also my

- OK to Touch Mars? Europa? Enceladus? Or a Tale of Missteps?

- Case For Moon First

- Why Humans on Mars Right Now are Bad for Science. Includes: Astronaut gardener on the Moon
