Not many people would know the peculiar vocabulary used to evaluate scientists.

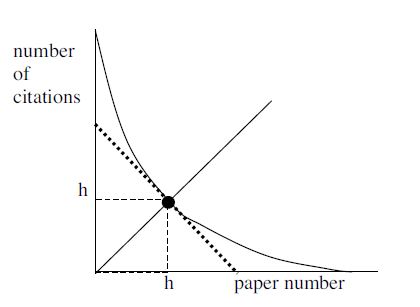

‘H index’, ‘impact factor’ and ‘citation number’ are some of the snazzy phrases that are now ubiquitous in the world of science. Not all scientific papers are born equal – some are ground-breaking, while most are incremental advances – and these scales have been developed to help determine the ‘impact’ of the scientific articles that are published.
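For readers who have not met the H index before, here is a minimal sketch of how it is usually defined: a scientist has an H index of h if h of their papers have each been cited at least h times. The function name and the citation counts below are made up purely for illustration, and real citation databases differ in which papers and citations they count.

```python
def h_index(citations):
    """Return the largest h such that h papers have at least h citations each."""
    # Rank papers from most cited to least cited.
    ranked = sorted(citations, reverse=True)
    h = 0
    for rank, count in enumerate(ranked, start=1):
        if count >= rank:
            h = rank  # the top `rank` papers all have at least `rank` citations
        else:
            break  # counts only fall from here, so h cannot grow further
    return h


# Hypothetical citation counts for one scientist's seven papers.
example_counts = [25, 8, 5, 3, 3, 1, 0]
print(h_index(example_counts))
```

Because every citation counts the same regardless of who made it, a scientist who cites their own papers heavily can push this number up.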

Few would doubt that measuring the impact of scientific output is an important thing to do. Substantial sums of public monies are apportioned to science, and it is critical that these funds are invested in research that will provide the greatest benefit to our world. These scales – or, to use their more formal title, ‘scientific metrics’ – provide one way of ensuring this accountability.

But like many measurement tools that once had a noble purpose, they are vulnerable to gaming.

Michelle Dawson and Dorothy Bishop appear to have identified such a case in the field of developmental disabilities. An Editor of two journals in the field appears to have used these journals to improve his scientific metrics.

Their posts are worth a read and I won’t rehash them here, but here are a few key pieces of information that came to light. During the years 2010-2014:

1. The Editor published 142 articles in the two journals for which he was Editor-in-Chief. This is an enormous amount of research to publish, and it is not common practice for Editors to publish prolifically in the journals they edit;

2. More than 50% of the references in these articles cited his own work. Citing one’s own previous research is not uncommon, but the more usual level of self-citation is about 10%; and

3. More than 50% of the articles were accepted by the journal within one week of submission, and many were accepted on the same day as submission.

This is unusually quick: even the most significant journals, which have the most resources, require weeks for the process of peer review. (The Editor was not the sole beneficiary of this short peer-review time. I, myself, was a minor author of a paper that was accepted by one of these journals in 2009 after 5 days, but had another paper in 2014 that underwent lengthy review. A quick look at the Table of Contents of these journals shows that a very short time between the date of ‘paper submission’ and the date of ‘paper acceptance’ is quite common.)

One of the results of these actions is that the Editor and a few of his colleagues have attained science metrics that are off the charts.

There are many troubling things about the statistics. Some of the concerns are localized to the poor judgment of a few scientists, while other concerns have broader implications for the future of science. On this latter issue, it is the third point above that worries me the most.

Peer review

Thousands of column inches have been taken by scientists and policy-makers discussing the pros and cons of peer review. Invariably, what remains after the hullabaloo has died down is the unanimous agreement that peer review remains the best means currently available for ensuring that science is conducted with the greatest degree of rigor.

So much of science is played ‘above the shoulders’. Ideas are formulated, ruminated upon and agonized about, all within the confines of the scientist’s head. Calm and methodical vetting of the ideas and data by other people with similar expertise is vital to ensuring the accuracy and rigor of the work.

Peer review is sometimes slow and often infuriating, but it is always necessary for good science to prevail.

In the case highlighted by Dawson and Bishop, it is highly unlikely that rigorous peer-review could have occurred within the one week (or one day) from article submission to article acceptance.

Who are the victims?

One school of thought is that this is a victimless misdeed. Yes, this is not the way the system was intended to work, but in the end, is this really hurting anyone?

I can’t agree with this view. First and foremost, scientists owe it to the general public to ensure that the science they read and use (and that they often fund) is as rigorous as possible. Proper and thorough peer review is an important step in that process.

Other potential victims are the scientists who have published in these journals and now feel that their work is devalued by this information. Without question, the greatest burden here falls on early career scientists, who must prove the importance of their work in order to secure jobs in the cut-throat world of science.

My feeling is that many early career scientists will feel let down by the reputational damage these journals have now incurred.

Hyperinflation in the number of journals

Another issue raised by this experience is the impact of the recent and dramatic increase in the number of scholarly journals. There are now far more avenues for researchers to publish their work, and there is intense competition amongst publishers to attract scientists to their journals. It would be a rare day when I wouldn’t receive at least 5 emails inviting me to publish in journals I’ve never heard of, often in research fields completely different to my own.

To give you an idea of how much things have changed, a senior colleague of mine recently reflected that she remembers when it was commonplace for tenured Professors to have a total of 5 published articles.

Compare that with statistics from Australia’s National Health and Medical Research Council (NHMRC) on the 2014 recipients of Early Career Fellowships, which provide a salary for researchers who have ‘held their PhD for no more than 2 years’. Successful applicants in Basic Science had an average of 9 papers. Successful applicants in the field of Public Health had an average of 14 papers.

I’m not necessarily of the opinion that having more outlets to publish scientific research is a bad thing. Science, when conducted well, is best situated in the open domain rather than in a file drawer of a musty lab. Published and accessible data gives humanity the best chance of building on that knowledge.

But there is a nagging concern that an increase in the number of journal articles may correspond to a decrease in the standards of peer review. As far as I am aware, there is no hard evidence to support this hypothesis, but the logic behind it is clear: if there are more papers to be reviewed, but the same number of scientists to review them, then the quality and/or quantity of reviews may be compromised.

Last year, I received 54 invitations from journals to review a manuscript. Because of time constraints, I accepted just over a quarter of these invitations (n = 15). I assume that the manuscripts I was unable to review were reviewed by other scientists, but I also expect that these scientists had the same time pressures as me.

Can the scientific system be rescued?

Many in the field have become concerned about the ‘growth economy’ of science and the meaningless pursuit of attractive science metrics. In 2012, a group of editors and publishers of scholarly journals met at the Annual Meeting of The American Society for Cell Biology (ASCB) and produced the San Francisco Declaration on Research Assessment (DORA).

The document was mainly concerned with explicating the profoundly flawed scientific metric of the journal ‘impact factor’. But there was also a strong emphasis on encouraging the scientific world to untether funding decisions and job appointment/promotion considerations from the ‘quantity’ of research output, and instead focus on the ‘quality’.

In a nutshell, research ‘quality’ could be described as how the piece of research has influenced the body of knowledge and its impact in the world.

Many funding bodies around the world have adopted the DORA guidelines, and have started changing the way that scientists apply for funding. As an example, Australia’s NHMRC now requires scientists to emphasize the quality of their previous research output rather than just the quantity. Stable funding is the lifeblood of research, and so the NHMRC wields real power in their attempt to change how science is evaluated, and thus the publishing culture within research.

But is it the case that the horse has already bolted, and we are only now rousing to close the gate? If successful applicants for Early Career Fellowships (less than 2 years post PhD) are already averaging 10 or more papers, can that bar ever be lowered?

As scientists, we strive to conduct meaningful research that impacts the world. Science metrics have become an almighty distraction from how we go about achieving that aim. But, unfortunately, it is a distraction that we can’t avoid. There are too few jobs, and it is far too easy to be out of one.

Nevertheless, I am hugely optimistic that the scientific system can be rescued from the gaming of science metrics. With the leadership of funding bodies such as the NHMRC, the right incentives are now being created for scientists to do what they started their careers to do. The information brought to light by Dawson and Bishop is a reminder to all of us that we can never take our eye off that ball.

By Andrew Whitehouse, Winthrop Professor, Telethon Kids Institute at University of Western Australia. This article was originally published on The Conversation. Read the original article. Image: Hirsch's original H-index, arXiv:physics/0508025
