It is easy to have a good hunch. You rely on the nearest neighbor paradigm: you look for the element in your available data closest to the characteristics you are interested in. So you choose the table closest to the band, as you observed that it is usually the hottest; the stock which behaves most similarly to what a recent high performer did; and you rely on the reported weather of the closest town to your destination.

Among the computational methods that try to determine the probability density function of some quantity based on a limited number of observations, the nearest neighbor may indeed be the easiest one to grasp. It is based on the implicit assumption that the feature we are trying to guess varies _slowly_ in the space, so that a limited number of observations close to a point where you need an estimate can suffice to offer a good guess of the characteristics of the particular point you are considering.

Among the computational methods that try to determine the probability density function of some quantity based on a limited number of observations, the nearest neighbor may indeed be the easiest one to grasp. It is based on the implicit assumption that the feature we are trying to guess varies _slowly_ in the space, so that a limited number of observations close to a point where you need an estimate can suffice to offer a good guess of the characteristics of the particular point you are considering.We apply nearest-neighbor-driven decisions in our everyday life surprisingly often. As we move in our three-dimensional space, we often look for the warm spot of the room, or the less noisy table, or the less crowded corner of the bus based on our past experience -the data. The data teaches us that the place high up and farthest from the window will be warmer, because more often than not that has been the case in previous similar situations.

[Note that there is no contradiction between the above statement and the fact that we are Bayesians as we take decisions: a Bayesian will consider the "prior probability density" to take a decision; but the density is constructed by a Bayesian by relying on discrete observations - so the two concepts are tightly related. But let me omit a discussion of this detail today]

It should not come as a surprise that a similar algorithm can be applied to computationally challenging problems of classification or regression. I grew fond of the nearest-neighbor algorithm over ten years ago when I "rediscovered" it for a particle physics application -the improvement of the dijet mass resolution from Higgs boson decays in CDF data, and building on the concept I developed a quite advanced custom-made algorithm capable of estimating the density of a scalar field in multi-dimensional space.

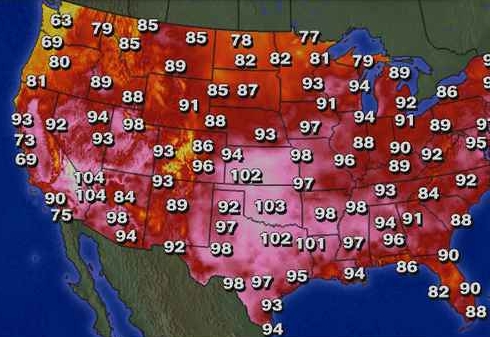

To understand what I mean by a "scalar field" and multi-dimensional spaces, you might want to think again at the problem of estimating the temperature of a point of space based on observations "around" it, where "closeness" should be defined not just in spatial dimensions, but also in terms of similarity of other features; if you need to estimate the average temperature of Chicago on May 15th you might be more interested in knowing the average temperature of Detroit on May 3rd than that of De Kalb on January 1st! There, the temperature is our "scalar field" - a number to be estimated; and "closeness" may not necessarily be just a spatial distance, but a more complex combination of distance in a generalized configuration space, where the day of the year when the temperature measurement was taken plays a significant role.

In the Higgs challenge offered by the ATLAS experiment, we are asked to classify as signal (Higgs decays to tau-lepton pairs) or background (anything which resembles that process, based on observable quantities measured by the detector) a set of 550,000 events which have no name tag attached to them. To do that, we are provided with a set of 250,000 events that do have a tag, specifying whether they are signal or background, along with 30 variables that describe their detailed characteristics. To perform this task one could think of using at least a dozen reasonable algorithms, but the challenge will be won by the best classification - the one which finds more signal and less background, according to a particular figure of merit (AMS) which I do not wish to discuss here (see the web page of the challenge if you're interested).

So, is the nearest-neighbor algorithm the best to pick ? No, it is not necessarily the best one, although it does have some features which suit very well to the task. Other algorithms, like neural networks or boosted decision trees, are known to perform better in typical situations. But then, one can always improve a generic algorithm to optimally suit to the task at hand. That is what I have set out to do in the recent weeks, mostly with the purpose of studying the algorithm than with the goal of winning a prize. Let me explain very shortly just how many details one can study to adapt the nearest neighbor algorithm to a particular problem.

1) of course, if you need directions you have better ask many people rather than one. Rather than a nearest-neighbor, what we want is a k-nearest neighbor (kNN), where we weigh appropriately the input from the k closest elements of the data in our hands (the training data).

2) how to weigh the answers is a long discussion. Of course the closest an element is to our test point (the place where we want to estimate the temperature, say) the better, so we need to give more weight to training data closer to our test point; but how to weight based on the distance is a complex decision.

3) and how to even define the distance ? In the temperature example above, is De Kalb on April 1st closer to Chicago on May 15th or should we privilege Detroit on May 4th ? The metric in a multi-dimensional space where the features have different dimensions is to be chosen wisely - that is a very important bit in the construction of an effective nearest-neighbor algorithm.

4) The distance definition might even be variable as we move through the space. At the north pole, it will make little difference whether it is May 4th or July 2nd; while in Chicago it does. So if we are to pick neighbors in the two locations, the date may have a bigger impact in our decision if we are trying to estimate the temperature in Chicago than at the pole. A space-point dependent distance definition is thus very useful, but complicated to construct.

5) And then, if one has many observations for each training data element, one needs an effective way to pick the most relevant observations in constructing a kNN estimate. One can, for instance, construct an estimate using a subspace of the observed features, and another estimate using another subset, and then combine the outputs in some smart way. You realize that as we live in a high-dimensional feature space (in the Higgs challenge, there are 30 dimensions!), this becomes a quite complex task.

The above is just to give you a flavour of the complexity of the problem, and to show how creativity and inventiveness do play a role when one tries to put together a classification algorithm. The downside of all this is that unfortunately, during the last twenty years there has been such an explosion of studies of multivariate methods, driven also by the availability of cheap computing power, that almost everything has been invented already. In fact, after fiddling with the problem for years (starting in 2003), I have recently found out that most of my brightest ideas are spelled out in scientific papers (see here just for an example - this paper discusses how to combine the idea of nearest neighbors with boosting).

In any case, it is a lot of fun. As the Higgs challenge has surpassed 500 participant teams, you should be wondering what keeps you from giving it a try yourself... Most of the advanced tools are available online to those who know how to search the web...

Comments