The problem was that LLMs are not really AI, despite what the companies selling this stuff claim. They are certainly not Intelligent. So while the list had real authors, half of the books did not exist.

Human readers are not LLMs, and they noticed quickly. The paper issued a retraction. It was embarrassing, not so much because they used AI, but because the list was wrong. That made it a public trust issue. Readers who pay to get a little smarter about the world now have less trust in editors, and editors have less trust in writers. The micro-institutional concept suggests the damage may be a lot worse than they realize.

I don't blame Buscaglia for using ChatGPT. If a $40,000,000 organization told me they'd pay me $200 but I'd get good internal "exposure", I'd laugh. A lot of writers are trying to make it in a dying industry, so what was he going to do, read 400 books and then pick 25? The editor who assigned the piece knew what he was going to do. The editor just wanted him to do it without getting caught.

A lot of people are doing it. One university professor even had a student ask for a refund because he had made himself pedagogically irrelevant by using an LLM.(1)

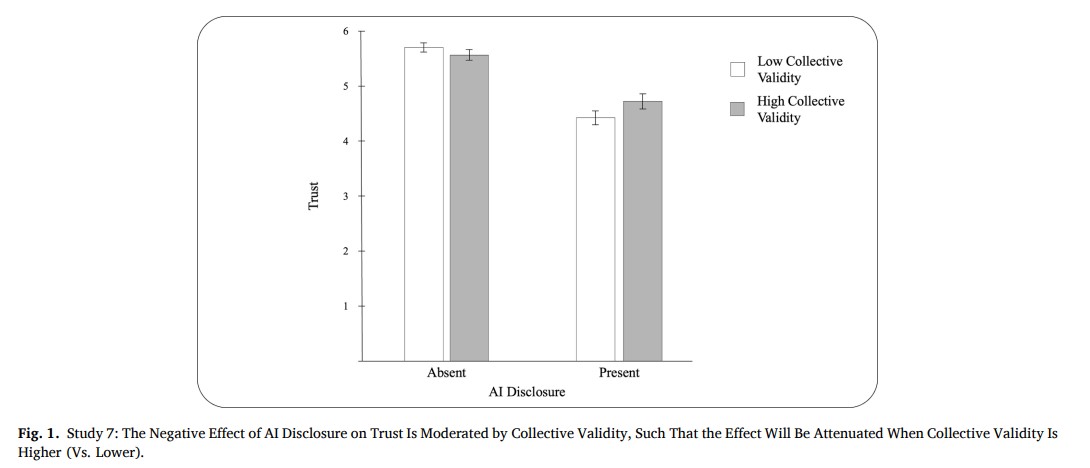

I don't use one, obviously; I famously don't get to the point fast enough, and an LLM would. My point is that while not disclosing AI use is bad, experiments show that disclosing it may be even worse. In their paper, two University of Arizona scholars describe 13 experiments designed to reveal any AI disclosure–trust effect.

Some of the results were surprising, like a 20% drop in trust when AI use in art was disclosed. I don't have much confidence in those beatified by the art community, and haven't since I was young. Crosses dipped in urine being called art will erode trust in Big Art a lot faster than AI will. And when I was young, popular comic books were colored and lettered by hand. Now they are not. Are comics worse? No, they are better than ever. Just 25 years ago, many people considered any digital tool 'not real art', but I do not care about the process, just the result.(2)

So a 20% drop seems really high to me, but that may be because I am older and have seen it all by now. I no longer believe the tool is what leads to creativity. I am working on a book and wrote the first 40 pages on my phone.

For professors using AI, as in the Northeastern University case I noted earlier, disclosure caused only a 16% drop in trust. For me, that drop would be 100%. Unlimited student loans did not exist when I went to college, which meant colleges obeyed market forces and all but the most elite were affordable even to poor kids. If I had taken out a student loan today, I'd be enraged, because unlike the minority of modern students whining loudest about the debt they incurred, I know what a "loan" is. And I want to learn from people smarter than me, not from someone who spent $200 on a tool to do the work so he could earn a $100,000 salary while I pay $170,000 for a degree.(3)

That is the thing: trust is pretty subjective. The researchers try to mitigate that by using a pool of 5,000 participants, and in that sense the work is as rigorous as it can be. If the pool were all students in the same department of the same school, paying $14,000 to $42,000 per year to attend, it would not be worth writing about at all.

As AI becomes more normalized, it will become more trustworthy. "The Polar Express" creeped out a lot of millennials because it was in uncanny valley territory, but eventually AI graphics won't have six fingers, and ChatGPT will be more than a lazy way to pretend you're working.

Until then, beware of using it in your work. If their micro-institutional ideas about losing trust are valid, it could take five years to win back the people you alienate.

NOTES:

(1) Northeastern University, like all colleges in the modern unlimited-student-loans-made-us-rich-so-thank-you-Democrats climate, exists to extract money from young people, not give it back, and it denied her claim.

(2) When I was young, we lived in poverty. I was an accomplished pencil artist but never took up paint; we could never have afforded it. If I were a young person with artistic inclinations and someone gave me a 10-year-old Wacom tablet, I would try to make great art. The old ways meant doing no art at all.

Pepe Larraz put on a masterclass in single-image storytelling in Uncanny Avengers #15.

I did not ask what tool he used to draw it, and I do not care.

A brain still has to make the art, and if that brain no longer has to belong to a rich kid with expensive modern technology, all the better. However, AI may soon have its own wealth divide, just as art once did. If a free tool is severely outmatched by one that costs $200, we haven't created a new equality; we've only created new Sneetch stars.

(3) The Northeastern University professor caught using ChatGPT is not making $100,000 a year. He is an adjunct, so he is probably making $1,000. As I said, schools are out to gather money, not give it away.
