Among its newly installed instruments, the refurbished HST sports an improved wide-field camera, WFC3, which promises a significant improvement in the telescope's imaging capabilities.

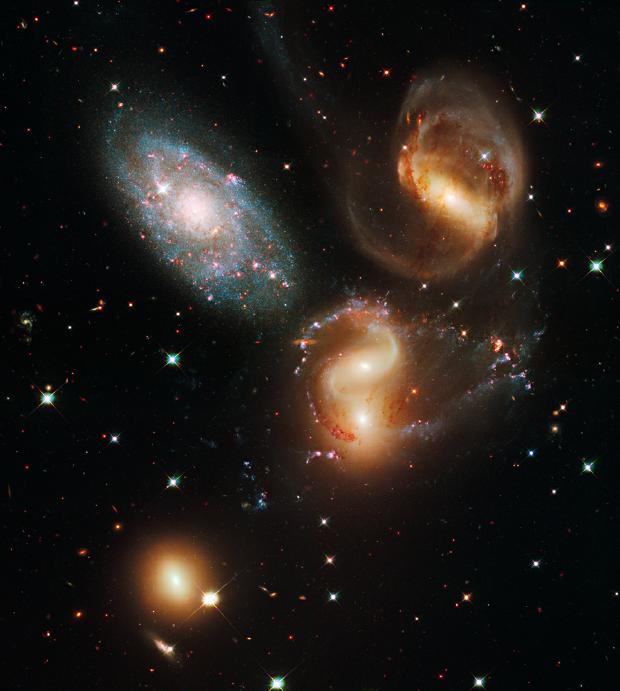

As an example of the harvest that has just started, have a look at this new image of Stephan's Quintet, an interacting group of galaxies belonging to the group of NGC 7331 in Pegasus, a target on which I aim my telescope quite frequently during late summer and fall.

It is a fantastic picture, but you can only appreciate its beauty and richness of detail if you download it in its largest available format, a 54 MB file.

The NASA release of last September 9th does not discuss the technical aspects which kept scientists busy with testing, calibration procedures, and deconvolution algorithms during the months that followed the servicing mission. I would have liked to know more, however. If I look in detail at the images which have been released (all stunning, to be sure), I do detect differences with respect to earlier performance, but I would like to understand whether those differences are really due to the new hardware, or whether instead I am fooling myself. That would be the case, for instance, if I were comparing pictures which underwent different levels of processing, or different software algorithms for the deconvolution of the complicated, space-variant point-spread function produced by Hubble's flawed optics, etcetera: a typical case of mistaking software performance for hardware performance.
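To see how much processing alone can change the apparent sharpness of a star, here is a minimal sketch using a textbook Richardson-Lucy deconvolution on a one-dimensional toy profile. Everything in it is an assumption made for illustration: the Gaussian point-spread function, the pixel scale, the flat background, and the iteration counts have nothing to do with the actual HST pipeline, which the release does not describe.

```python
import numpy as np

def gaussian_kernel(sigma, radius):
    """Normalized 1D Gaussian, used here as a toy point-spread function."""
    x = np.arange(-radius, radius + 1)
    k = np.exp(-0.5 * (x / sigma) ** 2)
    return k / k.sum()

def richardson_lucy(observed, psf, iterations):
    """Textbook Richardson-Lucy iteration, starting from a flat estimate."""
    estimate = np.full_like(observed, observed.mean())
    psf_flipped = psf[::-1]
    for _ in range(iterations):
        blurred = np.convolve(estimate, psf, mode="same")
        ratio = observed / np.maximum(blurred, 1e-12)  # guard against division by zero
        estimate = estimate * np.convolve(ratio, psf_flipped, mode="same")
    return estimate

def width_at_half_max(profile):
    """Number of pixels at or above half of the peak value."""
    return int(np.count_nonzero(profile >= profile.max() / 2.0))

# Toy "star": a single bright pixel on a flat background (hypothetical numbers).
scene = np.zeros(101)
scene[50] = 1000.0
psf = gaussian_kernel(sigma=3.0, radius=15)
observed = np.convolve(scene, psf, mode="same") + 1.0

# Same data, same "hardware", two different amounts of processing.
light = richardson_lucy(observed, psf, iterations=5)
heavy = richardson_lucy(observed, psf, iterations=200)

for label, profile in [("raw", observed), ("5 iterations", light), ("200 iterations", heavy)]:
    print(f"{label:>15}: width at half maximum = {width_at_half_max(profile)} px")
```

The three profiles come from exactly the same simulated exposure, yet the heavily processed one shows a visibly narrower star. Two released images that differ only in this kind of processing could therefore look like they came from different instruments.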

The Hubble mirror suffers from spherical aberration, a flaw discovered soon after the mission started, and it was only with a critical repair mission in 1993 that the instrument reached its design performance. Since then, its images have delighted us, and the scientific value of the data has been very high. Not having an atmosphere to shoot through is a huge advantage, and despite predictions that adaptive optics techniques would make HST obsolete with respect to ground-based supertelescopes, the instrument has proven to be still valuable. This, and the fact that Hubble is not constrained by day/night cycles or weather conditions, is the rationale behind the choice to let it live for another decade.

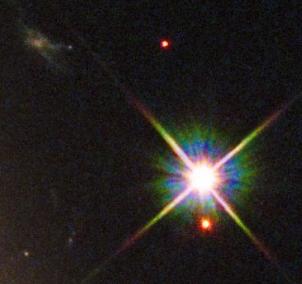

All that said, if I look at the details of some of the released pictures, I cannot help noticing two things. One, the point-spread function of the instrument (the probability distribution for a photon coming from a point source to hit a particular point of the recording device) does not seem to have changed significantly from earlier performance: check the way light from the star attenuates on the scale of its distance from the nearby companion. This is to be expected, since the point-spread function is driven by the mirror's size and the optics of the instrument, which have not changed. Two, the new WFC3 camera appears to deliver much more detail than the older WFPC2.

Look at the two images above, the old one on the left and the new one on the right: they picture the same star, a faint star in Pegasus very close to one of the galaxies of Stephan's Quintet (the second image is rotated by 180 degrees with respect to the first). Undeniably, the new image is much better. Part of the reason is that I could not find a large-format version of the old one, but you can still tell that there is more to see in the second one.
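The remark that the point-spread function is driven by the mirror's size can be made concrete with the Rayleigh criterion, which sets the smallest angle a circular aperture can resolve at θ ≈ 1.22 λ/D. The sketch below is only a back-of-the-envelope estimate: the 2.4 m diameter of Hubble's primary mirror and the sample wavelengths are not taken from the text above, and real images also depend on detector pixel size and on the rest of the optics.

```python
import math

def rayleigh_limit_arcsec(wavelength_m, aperture_m):
    """Rayleigh criterion, theta = 1.22 * lambda / D, converted to arcseconds."""
    theta_rad = 1.22 * wavelength_m / aperture_m
    return math.degrees(theta_rad) * 3600.0

# Diameter of Hubble's primary mirror in meters (not stated in the post).
HST_APERTURE_M = 2.4

# Illustrative visible-light wavelengths, chosen arbitrarily.
for label, wavelength_m in [("blue, 450 nm", 450e-9),
                            ("green, 550 nm", 550e-9),
                            ("red, 650 nm", 650e-9)]:
    limit = rayleigh_limit_arcsec(wavelength_m, HST_APERTURE_M)
    print(f"{label:>14}: {limit:.3f} arcsec")
```

Since neither λ nor D depends on which camera sits behind the mirror, a new detector can sample this limit better, but it cannot push it lower.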

I am trying to find updated information on the new performance of the HST, with a comparison to the old one, but apparently we need to wait for those data to be released. In the meantime, I cannot help but cheer at the longevity of this terrific instrument, and I hope you concur!
