The last word on the CDF dijet mass bump at 150 GeV

To complement the story I told in the third part of this article I must add an addendum. A reanalysis of the CDF data, including 8.9 inverse femtobarns of collisions, has finally put an end to all speculation concerning the dijet mass bump that had been found at 150 GeV in events containing a W boson.

As I discussed a few days ago, scientists were already sure that there was no resonance after parallel investigations by DZERO, CMS, and ATLAS had observed no effect and put stringent limits on its existence. However, the question remained: if CDF sees that effect, what is the cause? And if the cause is some mismeasurement or systematic effect, is the same thing affecting other measurements, e.g. in Higgs boson searches?

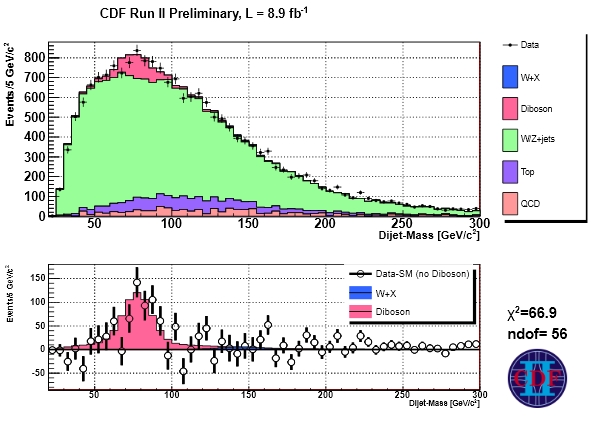

In short, the cause was understood to be the sum of two distinct effects. First of all, CDF found that while jet energy corrections had been derived from datasets rich in quark jets, the jets accompanying the W boson were observed to have a much larger gluon contribution. Gluon-originated jets behave differently, so that a different composition in data and W+jets simulation caused a shift to higher dijet masses in the data.

A different effect was observed with electrons: some particular kinematic configurations caused the non-W background (three-jet events from strong interactions) to contribute significantly to the electron plus jets dataset, when the electron was in fact a jet with a large electromagnetic component. That background contributed to the bump at 150 GeV more than it did elsewhere in the spectrum.

In the "corrected" dijet mass distribution of CDF with 8.9/fb of data you can see that the bump, after accounting for jet correction differences and the previously unaccounted background, has totally disappeared!

So we learn something here as well: sometimes the effects of systematic uncertainties do add linearly, although we usually claim that they add in quadrature...
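To make the distinction concrete, here is a purely illustrative comparison; the symbols $\delta_1$ and $\delta_2$ are my own labels for the sizes of two effects, not numbers taken from the CDF analysis. If the two effects are independent and can push the spectrum either way, the usual combination is in quadrature; if both are biases pushing in the same direction, their shifts simply sum:

$$
\sigma_{\mathrm{quad}} = \sqrt{\delta_1^2 + \delta_2^2}
\qquad \text{versus} \qquad
\Delta_{\mathrm{lin}} = \delta_1 + \delta_2 .
$$

For two effects of equal size $\delta$, the quadrature sum is $\sqrt{2}\,\delta \approx 1.41\,\delta$, while the linear sum is $2\,\delta$: two same-sign biases produce a noticeably larger distortion than the quadrature rule would suggest.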

Scientific impact - or the cost of a wrong claim

Let us go back to the discussion of the various issues we have dealt with earlier. One last thing to consider, when we discuss how high the bar should be set for a discovery claim, is the cost of making a wrong claim.

The cost of falsely claiming the discovery of a new 4-GeV hadron decaying to charmed mesons - a particle whose existence does not significantly change our general understanding of strong interactions or the standard model - is of course minor: our reputation as scientists is not affected much. That is because the claim of the new particle does not make the headlines of all newspapers around the world. The impact of the claim is small, and the possible backfiring of it being found faulty is also small.

One may compare the above with the cost of claiming that neutrinos are superluminal: such a claim has an enormous impact in the media; by putting it forth, an experiment is voluntarily stepping on hot coals, with the prospect of being kept there for all the time necessary to ascertain whether the claim is true or false.

That is indeed what happened with OPERA: it took seven months to find out that the observed effect was due to an experimental error. At the end of the process one usually does not get away with it easily, and in fact the OPERA spokesperson had to resign, and the reputation of the experiment was at least in part affected.

I have argued that the whole affair did not negatively affect the perception of basic science by outsiders: the value of drawing interest to fundamental physics is in my opinion very high, so even if laymen for once got the impression that scientists can fail and that they should not always believe the claims that are made, they at least got to know what neutrinos are, how hard it is to detect them, and that there are facilities that shoot neutrinos through the rock of the earth to other places in the world. But even if I am right, the subjective cost, for an OPERA scientist, of the faulty claim was rather large.

An even larger cost would have been entailed by a faulty claim of Higgs boson discovery by CERN. The Higgs boson had been in the newspapers for the last few years already, and the Large Hadron Collider had been the subject of speculation on whether big science really costs too much taxpayers' money (it doesn't, by the way - one year of money spent in Italy by people visiting magicians and tarot readers can finance the construction of a second LHC ring!). Claiming discovery of the Higgs and then having to retract it would have seriously damaged the image of CERN, and of particle physics in general. That is in a nutshell why the definitive announcement of a discovery was made relatively late in the game (five sigma excesses by two independent experiments, in July 2012).

So we are led to the conclusion that the cost function connected to a false discovery claim depends a lot on what is being claimed, as well as on who is behind it. Large experiments have more to lose, because they are under the spotlight of media attention. And claims of new physics are of course more risky.

Whether the cost of being wrong should be factored into a discovery threshold, however, is not a given: from a purist's scientific point of view one should disregard it, and be concerned only with the claim itself. But in practice, a wise spokesperson should consider it very carefully!

In Summary

To summarize this long article, I wish to say that in general I am very happy that more and more outsiders are getting to know what five standard deviations are and what they signify for scientific investigations in fundamental physics; I am also happy to observe similar instances of the language of scientific investigations being made less arcane. We all win if we communicate more easily, and the language of science becomes the language of every day.
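For readers who want to see what "five standard deviations" means as a probability, here is a minimal sketch of the conversion. It assumes the one-sided Gaussian tail convention commonly used in particle physics; the function names are mine, chosen for illustration.

```python
from statistics import NormalDist

# Standard normal distribution: mean 0, standard deviation 1.
_STD_NORMAL = NormalDist()

def p_value_from_sigma(z):
    """One-sided probability of a Gaussian fluctuation of at least z sigma."""
    return _STD_NORMAL.cdf(-z)

def sigma_from_p_value(p):
    """Number of sigma whose one-sided tail probability equals p."""
    return -_STD_NORMAL.inv_cdf(p)

for z in (3.0, 5.0):
    print(f"{z:.0f} sigma -> p = {p_value_from_sigma(z):.3g}")
```

Under this convention three sigma corresponds to a probability of roughly 1.35 in a thousand, and five sigma to roughly 2.9 in ten million.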

However, I am a bit disappointed in the particular case at hand, observing how an arbitrary and fixed convention has crystallized into an unmovable requirement. It looks as if the popularization of the concept has made it even harder to replace it with something smarter. The strict "five sigma" criterion is liable to cause us to claim false discoveries before we have done our homework carefully - as was the case with OPERA - or to wait years before we can confirm a model, when trials factors and systematics are not a concern and when we are studying an effect that we know must exist.

Despite the careful accounting of Rosenfeld, times have changed as far as bump-hunting is concerned - we do not look at millions of histograms with all possible combinations of invariant masses any more, simply because there is little left to discover, alas. We do look at hundreds of mass distributions in modern day collider experiments, though, so the trials factor still exists; but we know well how to account for it nowadays, using the power of computer simulations. Toy simulations allow us to estimate the trials factor in every practical situation, so that we can simply quote a local and a global p-value for any fluctuation we observe.
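The toy-simulation idea can be sketched in a few lines. This is a deliberately simplified illustration, not the procedure of any real analysis: it assumes the search looks at `n_windows` independent mass windows, each of which fluctuates as a unit Gaussian under the background-only hypothesis, and all names and numbers are hypothetical. The global p-value is then the fraction of toys in which the largest fluctuation anywhere is at least as big as the observed one.

```python
import math
import random

def local_p_value(z):
    """One-sided Gaussian tail probability for a fluctuation of z sigma."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))

def global_p_value(z_obs, n_windows, n_toys=10000, seed=1):
    """Fraction of background-only toys whose largest fluctuation over
    n_windows independent windows is at least z_obs."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_toys):
        # One toy experiment: the biggest upward fluctuation seen anywhere.
        z_max = max(rng.gauss(0.0, 1.0) for _ in range(n_windows))
        if z_max >= z_obs:
            hits += 1
    return hits / n_toys

z_obs = 3.0        # hypothetical observed local significance
n_windows = 100    # hypothetical number of independent places we looked

p_local = local_p_value(z_obs)
p_global = global_p_value(z_obs, n_windows)
# For independent windows the answer is also known in closed form.
p_analytic = 1.0 - (1.0 - p_local) ** n_windows

print(f"local p-value:       {p_local:.3g}")
print(f"global p-value (toy): {p_global:.3g}")
print(f"global p-value (analytic): {p_analytic:.3g}")
```

In a real search the windows overlap and are correlated, so the closed-form expression no longer applies; that is exactly where toys generated from the full background model earn their keep.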

On the other hand, unknown or ill-known systematic effects always play a role, and the possibility of a mistake is always there in these very difficult measurements we routinely perform at particle colliders and other particle physics facilities. Of course, some measurements are harder to perform than others: there were dozens of sources of systematic uncertainty to be accounted for in the OPERA timing measurement, and some took quite some ingenuity to estimate; yet a very basic one was missed... A loose cable. It sucks, but such errors are always possible.

What I believe should be the guiding idea in changing the five-sigma criterion, or modifying it to suit different situations, is a combination of our belief in the soundness of the evaluation of systematic uncertainties, in the robustness of the analysis, and in the surprise factor connected to the studied effect. Asking for five sigma blindly in widely different cases is a very, very silly thing to do!

A proposal

Hence a proposal can be put forth. One can simply put together a list of past, present, and future measurements in particle physics and evaluate separately the different components that should be the input to a decision on what could be considered a reasonable threshold for the observed significance of the data, which once crossed determines the right to put forth a discovery claim.

Of course the elements of such a table are somewhat arbitrary. Each of us can try and assess the various inputs independently, and a discussion may or may not converge on an agreed-upon value. However, compiling the table is already a very useful learning exercise.

Let us see what the columns of such a table could be. Above we discussed the trials factor, known and unknown systematics, surprise factors, a posterioriness, and cost functions.

We might add a column specifying how beneficial it is that the result of the analysis be known outside the collaboration: if a discovery is not claimed because the experiment needs more data to reach the agreed upon threshold of significance, and a long delay in the publication is the result of this, then one must consider the cost, to the scientific community as a whole, of this delay.

Imagine what would have happened if the Planck collaboration had found a significant departure of their data from the standard cosmological model in the power spectrum of the cosmic background radiation: they might have decided either to publish their finding, adding caveats to their publication to explain the existence of some still not perfectly studied systematic effects, or rather to keep the data private and enter a long investigation of those effects, hiding their finding from the scientific community for six more months. Those six months might be considered wasted time by a cosmologist who had conceived some pet model predicting exactly those modifications to the power spectrum!

Louis' table

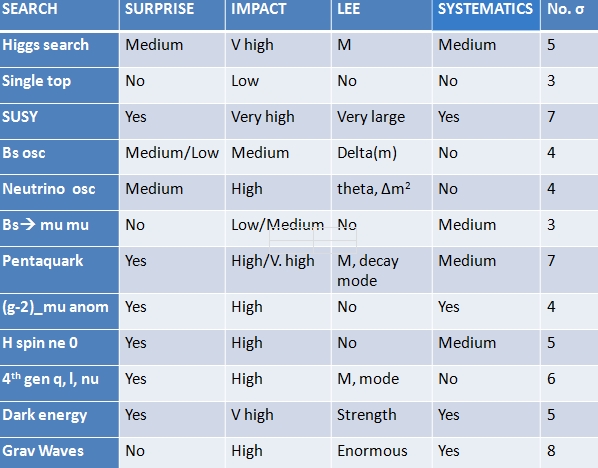

So let us see what we come up with. The table is just a first attempt, compiled by my colleague Louis, to stimulate a discussion on the topic of the discovery threshold in physics.

In the table you see a list of discoveries, and an assessment - qualitative in most cases - of the various things one should consider when setting a proper discovery threshold. We see in order the surprise factor, the scientific impact, the size of the trials factor ("LEE"), the systematic uncertainties that may affect the measurement, and finally the proposed number of standard deviations that an effect must correspond to in order to be considered a discovery.

(Again, I should mention that some of these evaluations are arguable - indeed for single top it has been already pointed out in the comments thread of one of the previous parts of this article that systematics were not irrelevant, so that three sigma would have been too few for a discovery claim...).

The table includes both past and possible future discoveries. You may play the game and add your own set of discoveries - it would be nice to start an open discussion on the issue in the comments thread!

Finally, let me thank you if you were able to read these four posts in full. I realize I have written more than I should have, but the topic is just so varied and there's so much to discuss... I might write a book on this one day!
