Given that science is always under fire from all sides, is there a way for science to guide technology on ways to help the public find trustworthy sources without 'Who Watches The Watchmen' and 'Follow The Money' claims polluting the discourse?

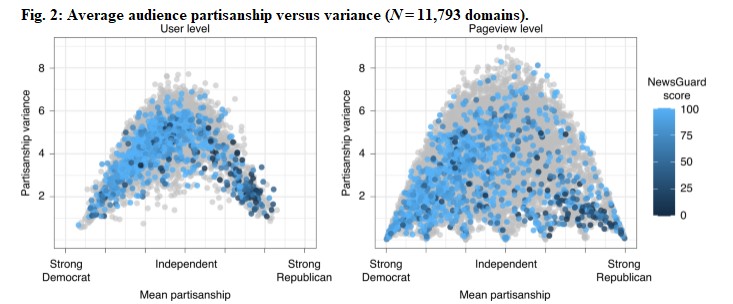

A new paper looks at social media algorithms and finds that it is possible - if companies stop highlighting content their users are most likely to want to see and instead highlight content that has high reliability scores and a perception of political diversity. Using YouGov data of 6,890 people chosen to be nationally representative for politics, race, and sex, combined with NewsGuard Reliability Index scores of 3,765 news outlets, the authors created a new algorithm.

They believe they can thread the needle of still being engaging while providing trustworthy sources.
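To make the idea concrete, here is a toy sketch of what re-ranking by reliability could look like. It is not the paper's algorithm: the `Post` class, the `rerank` function, the `weight` knob, and every number in it are made up for illustration, and it leaves out the political diversity piece entirely. It only shows how blending an engagement prediction with an outlet reliability score can change the order of a feed.

```python
from dataclasses import dataclass


@dataclass
class Post:
    title: str
    engagement: float   # hypothetical predicted click probability, 0..1
    reliability: float  # hypothetical outlet reliability score, 0..100


def rerank(posts, weight=0.5):
    """Sort posts by a blend of engagement and source reliability.

    weight=0 ranks purely by engagement; weight=1 ranks purely by reliability.
    """
    def score(p):
        # Rescale reliability to 0..1 so both terms are on the same scale.
        return (1 - weight) * p.engagement + weight * (p.reliability / 100)

    return sorted(posts, key=score, reverse=True)


# Made-up feed items, not real data.
feed = [
    Post("Outrage bait", engagement=0.9, reliability=20),
    Post("Solid reporting", engagement=0.6, reliability=95),
    Post("Middling take", engagement=0.7, reliability=60),
]

by_engagement = [p.title for p in rerank(feed, weight=0.0)]
blended = [p.title for p in rerank(feed, weight=0.5)]
print(by_engagement)
print(blended)
```

With these invented numbers, the engagement-only ordering puts the least reliable item first, and the blended ordering puts it last. Everything hinges on where the reliability numbers come from and who picks the weight.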

Audience size as number of individual visitors (left) and visits (right). Domains for which we have NewsGuard reliability scores are shaded in blue (where darker shades equal lower scores). Domains with no available score are plotted in grey. Credit: University of South Florida

The problem is obvious: who will make those determinations? MSNBC viewers may feel like Mika Brzezinski is balanced, but to the rest of the public, her expression of concern about "cancel culture" only coming after Whoopi Goldberg, a fellow female Democrat TV pundit, got a two-week suspension looks really convenient. FOX News viewers believe the same about their outlet. If you ask anti-science activists how credible Science 2.0 is, they will argue it's not credible at all. They have even lobbied news outlets like USA Today and the Wall Street Journal to have us silenced. If they, or one of their allies, are on a panel determining credible sources, we're out of business.

NewsGuard scores are subjective ratings of outlets by journalists. Obviously, if I have astrologers rank the credibility of astrology outlets, it isn't telling me much about how scientific astrology is. I believe in the wisdom of crowds on things that can be objectively averaged, like how many beans are in a jar. But if I average out San Francisco voters on a survey about politics, how accurate is that for the country? Journalists are overwhelmingly partisan, but on surveys they overwhelmingly feel like they are in the middle. They are no more accurate in their self-reflection than anyone else.

So we have self-reported political positioning coupled with subjective ratings of news outlets by insiders at those outlets? How can an AI fix that? Is that going to be better than people at Facebook manually tweaking things? If that were possible, NewsGuard would use an algorithm rather than hand-selected people. It may not be worse but different is not better. Facebook, because it is a technology company, is likely to be a lot more balanced politically than any news outlet. The New York Times will bring in some token Republican representation but for the most part you don't get hired unless you have already passed their ideological litmus test.(1)

In bad models, you create a suite of test problems and if your tool passes, then you publish. It is why economists are readily dismissed by everyone except a Nobel committee; they routinely create models designed to predict the past and expect people to believe that means they predict the future.

In a private sector technology company, you calibrate a model using data, then you verify it, then you verify it again before you verify with an expensive manufacturing test. Only then do you feel good enough to risk a lot of money in production. In academia, it is easy to publish something and claim it is better, but Facebook lost $150 billion in market valuation even though they made $10.3 billion in the fourth quarter - and that was due to lack of confidence they are following the wants of the audience and had become obsessed with a model of what the public should want.

New algorithms are great, and they might even be better. Facebook may even adopt them if its own analysis shows merit. But the first rule of product development is that you don't take advice from people who aren't writing you checks.

NOTE:

(1) I watched one anti-science conspiracy theorist get elevated from the Boston Globe all the way up to the top liberal paper in America just by playing to the crowd. I spent years trying to privately get her to be more balanced when it came to science but the Political Force Was Too Strong In This One, and everything environmental groups claim is right and everything science shows is biased. I assume that has changed now that Republicans have co-opted anti-vaccine mumbo-jumbo from the left.
