Since the ATLAS analysis is concerned with measuring the rate of the rare, and interesting, process of production of a Higgs boson in association with a top quark pair (see below), rather than with searching for new physics phenomena, the choice it makes when dealing with an apparent excess of events in some categories is to "normalize away" the excess with scale factors (see below). This is not an uncommon choice, usually justified by the fact that the Monte Carlo simulations of the corresponding background processes may be unable to predict their rates with enough precision.

What am I talking about, really? 1 - Higgs plus a top pair

Before I can discuss how a new Z' boson could explain away the anomalous excess of events observed by ATLAS, I need to give you a little bit of context. So here it is.

ATLAS is one of the two big, multi-purpose experiments that collect data from the energetic proton-proton collisions produced by CERN's Large Hadron Collider (LHC); the other experiment is called CMS. The LHC produced a large number of collisions during the recent Run 2, and the resulting data are being mined by ATLAS and CMS for precision measurements of the properties of the Higgs boson, among other things.

The Higgs boson is a mysterious particle whose existence was predicted over 50 years ago by theorists who could otherwise not figure out how weak interactions could make any sense. The prediction arose from the observation that Nature had to provide a means of satisfying two very fundamental requirements: local gauge invariance, and the finiteness of the probability of some particle reactions at high energy. Introducing a new scalar field in the theory, with a corresponding Higgs boson, was the only way out. And almost fifty years after its prediction, the Higgs particle was amazingly discovered at the LHC.

Now that we have the Higgs in the bag, we are not standing still, of course: we want to study that particle in the utmost detail, as anything weird about it might point the way to discovering new forces of nature or new states of matter. The task is made difficult by the fact that a Higgs boson is produced only about once every 3 billion collisions: hence we continue to run the LHC and search for these particles in a number of subnuclear processes that may give rise to them. One such process is the production of a Higgs boson in association with a pair of top quarks, the subject of the ATLAS analysis that gave rise to the slight anomalies mentioned above.

What am I talking about? 2 - What a scale factor is

Before I can discuss a new physics interpretation of ATLAS data, it remains for me to explain what a scale factor is. A scale factor is a multiplier (usually only slightly different from 1.0) that is applied to the weight of each simulated event of a given category, when that category is suspected of contributing to the selected data sample differently from what the simulation predicts.

Say, for example, that you observe N_obs=100 events of some particular kind in your data selection, and you expect that two different Standard Model processes contribute to that category: so N=N1+N2 is your expectation. If N1=50 and N2=30, you have a problem, because your prediction is 80 events, not the 100 you see: you have an excess of 20 "unexplained" events.

Now, say the first process is very well known and has been studied carefully with a high-statistics measurement in a different analysis: you will be very unwilling to argue that N1 is estimated incorrectly. If, on the other hand, the second process has not yet been studied with great care in the kinematical region of interest to your particular selection, you may be tempted to say that your data show evidence that the N2 rate estimate is biased low.

If the purpose of checking whether N_obs is explained by N1+N2 is to fine-tune your background estimation procedures, so that you can then examine some other part of your data where you want to measure a signal (as is the case in the ttH analysis we are considering), what you may do is apply a scale factor S to N2: in our example you need S=1.667 (that is, 5/3) to make 50+30*S=100. Introducing such a factor means accepting that the true rate of the second process is 67% higher than the simulation predicts. This is a rather striking observation, but not an unheard-of one, especially since the scale factor will come with a significant uncertainty, something like S=1.67±0.4, depending on the statistical uncertainty of N_obs and on how precise the other estimate, N1, is.
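The arithmetic above can be made explicit with a short sketch. It assumes, for illustration only, that the observed count fluctuates as a Poisson variable (so its uncertainty is √N_obs), that N1 carries an absolute uncertainty σ_N1 whose values I pick arbitrarily, and that the simulated N2 has negligible uncertainty; the function name `scale_factor` is hypothetical. The uncertainty on S = (N_obs - N1)/N2 then follows from linear error propagation.

```python
import math


def scale_factor(n_obs, n1, n2, sigma_n1=0.0):
    """Return (S, sigma_S) such that n1 + S * n2 == n_obs.

    Illustrative assumptions, not taken from any real analysis:
    - n_obs is a Poisson count, so its uncertainty is sqrt(n_obs);
    - n1 has an absolute uncertainty sigma_n1;
    - the simulated n2 is treated as exact.
    """
    s = (n_obs - n1) / n2
    # Linear error propagation: dS/dn_obs = 1/n2 and dS/dn1 = -1/n2,
    # with the two independent contributions added in quadrature.
    sigma_s = math.sqrt(n_obs + sigma_n1 ** 2) / n2
    return s, sigma_s


# The example from the text: N_obs = 100, N1 = 50, N2 = 30.
# The sigma_N1 values are arbitrary choices for illustration.
for sigma_n1 in (0.0, 5.0, 7.0):
    s, ds = scale_factor(100, 50, 30, sigma_n1)
    print(f"sigma_N1 = {sigma_n1:.1f}:  S = {s:.2f} +- {ds:.2f}")
```

With these inputs S = 5/3 ≈ 1.67, and its uncertainty grows from 1/3 ≈ 0.33 when N1 is known exactly to about 0.41 when σ_N1 = 7, in the same ballpark as the ±0.4 quoted above.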

New Physics: Is a Z' Boson Playing In?

What happened today is that I read a preprint, submitted last Thursday to the arXiv repository by Ezequiel Alvarez, Aurelio Juste, Manuel Szewc, and Tamara Vazquez Schroeder, which proposes a possible explanation of the ATLAS anomaly in terms of the production of a Z' boson in events with top quark pairs. Those events would contaminate the ATLAS signal search regions in a way capable of explaining away the observed anomalies.

I should not fail to mention, at this point, that if you are an Ockhamist, as you should be, you would not want to hear about a Z'. William of Ockham, a Franciscan friar and philosopher of the 14th century, is credited with the principle that in explaining Nature "entia non sunt multiplicanda praeter necessitatem" ("entities must not be multiplied beyond necessity"): you should not call in unknown new phenomena to explain what you observe if you can explain it with known ones. Invoking a Z' would mean calling in a totally new entity, a boson that nobody has ever seen before, to explain an observation that could be due to a much more mundane effect: a simulation prediction biased low.

So, if we were to choose between a Z' boson and an S>1 scale factor for some Standard Model process to explain a not-so-striking excess of collider events, it is obvious what we should do: as Ockhamists, we would take a simulation deficiency as the best explanation.

However, it is fun to speculate, especially while we sit here watching the Standard Model continue to predict with annoying precision everything that we measure. The hypothesis of the article by Alvarez et al. is therefore worth considering. To let you see into the matter a bit better, I first need to show you the ATLAS data and comment on them briefly. See the graph below.

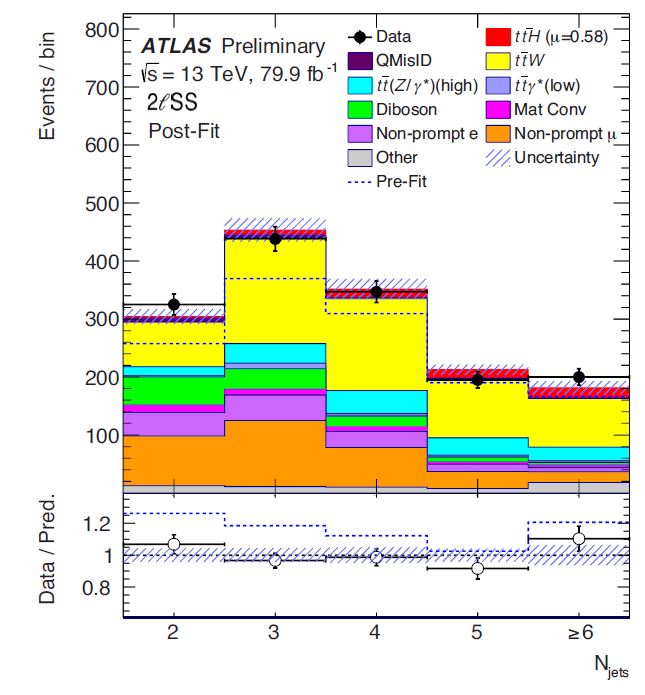

The top panel of this histogram reports the number of events observed as a function of the number of jets in the event, in a subset of ATLAS data selected to search for the ttH process I mentioned above. If you are curious, these are events with two same-sign leptons.

Each bin of the histogram has an observed number reported as a black point with uncertainty bars, and a full coloured bar reporting the simulation prediction for that number. Everything seems to be under control, but the prediction is computed _after_ a fit to the data, which is equivalent to applying a scale factor to the ttW process (the associated production of a top quark pair and a W boson). The ttW process is one of the main contributors to this data sample, and is shown by the yellow area of the coloured histogram.

If the scale factors were not applied, the prediction would total the value shown by the dashed histogram: as you can see, in some bins there is a significant deficit of predicted events with respect to the observed ones. That is what is fixed by the scale factor we discussed.
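The "fit to the data" mentioned above can be sketched as a binned Poisson maximum-likelihood fit in which the ttW yield of every bin is multiplied by one free normalisation factor. This is a minimal sketch under simplifying assumptions of mine: a single free parameter, all other processes held fixed, and no systematic uncertainties (the real ATLAS fit is a profile-likelihood fit over many regions with many nuisance parameters). The function name `fit_normalisation` and all bin contents are hypothetical, chosen only for illustration; they are not the ATLAS numbers.

```python
import math


def fit_normalisation(n_obs, other, ttw, lo=0.0, hi=10.0, tol=1e-10):
    """Maximum-likelihood normalisation factor mu for one background template.

    Assumed model: bin i has Poisson mean other[i] + mu * ttw[i].
    Returns mu_hat and an approximate 1-sigma uncertainty taken from the
    curvature of the log-likelihood at its maximum.
    """
    def dlogl(mu):
        # Derivative of sum_i [n_i * ln(lam_i) - lam_i] with respect to mu.
        return sum(w * (n / (b + mu * w) - 1.0)
                   for n, b, w in zip(n_obs, other, ttw))

    # The log-likelihood is concave in mu, so its derivative decreases
    # monotonically and bisection finds the unique root inside [lo, hi].
    if dlogl(lo) * dlogl(hi) > 0:
        raise ValueError("no maximum inside the bracketing interval")
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if dlogl(mid) > 0:
            lo = mid
        else:
            hi = mid
    mu_hat = 0.5 * (lo + hi)
    # Observed information: -d2(lnL)/dmu2 = sum_i n_i * w_i^2 / lam_i^2.
    info = sum(n * w ** 2 / (b + mu_hat * w) ** 2
               for n, b, w in zip(n_obs, other, ttw))
    return mu_hat, 1.0 / math.sqrt(info)


# Hypothetical jet-multiplicity bins (illustrative, NOT the ATLAS data).
n_obs = [58, 52, 27, 12]         # observed events
other = [20.0, 15.0, 8.0, 4.0]   # all non-ttW processes, held fixed
ttw = [25.0, 22.0, 12.0, 5.0]    # ttW simulation before scaling

mu, sigma = fit_normalisation(n_obs, other, ttw)
print(f"ttW normalisation factor: {mu:.2f} +- {sigma:.2f}")
```

With a single bin and the numbers of the earlier example (N_obs=100, N1=50, N2=30), this fit returns 5/3 with an uncertainty of 1/3, i.e. the same scale factor obtained by hand. With several bins, the fitted factor is a compromise across all of them, so individual bins can still show residual mismatches.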

In their article, the ATLAS authors acknowledge that the ttW background is a source of concern: they have a section called "Cross-checks" which starts by explaining that

A number of cross-checks of the assumptions in the statistical model were performed. The measured signal strength was found to be robust under these cross-checks, provided that the ttW normalisation was not fixed.

Also, in their conclusions they correctly note that

The normalisation factors obtained for the ttW background in the phase space selected by this analysis are in the range 1.3–1.7 above the updated theoretical predictions. In addition, modelling issues are observed in regions dominated by ttW production. An improved description of the ttW background is needed to reach greater precision in the future.

That is of course true if one is focusing on measuring the Higgs boson production. However, I do believe one can entertain different explanations for the observed discrepancies.

New Physics Instead?

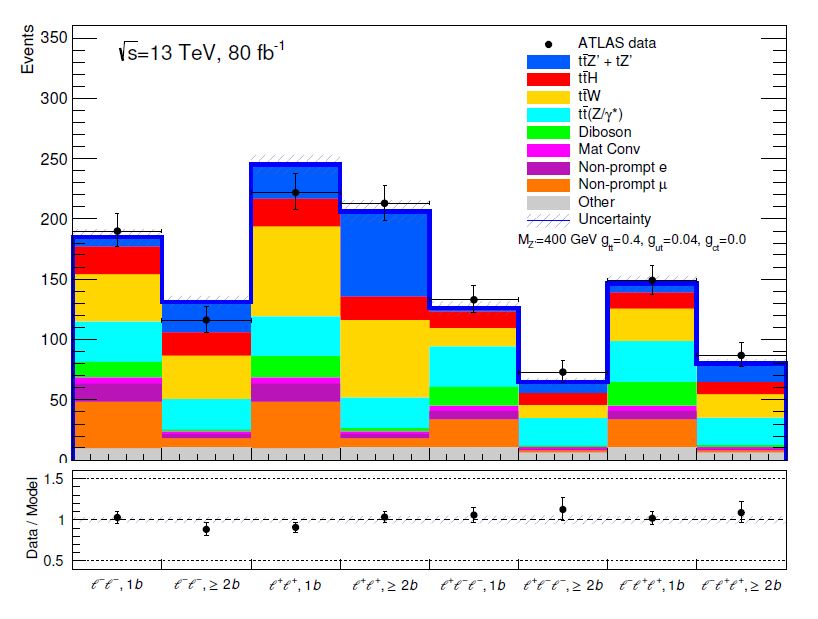

Now, let us look at the explanation that Alvarez et al. are putting forth: a Z' boson, produced with top quarks, would contribute to the selected data by increasing the rate of data collected in some of the bins. The money plot is the one below.

The histogram above now shows different categories of events, as the authors broke the data down into subclasses depending on the number of b-quark jets and the sign of the leptons. In any case, you can see that the data (again, black dots) are well explained if one adds to the mixture of processes the production of a Z' boson in association with top quark pairs. The culprit here is the bin of same-sign dileptons plus two b-quark jets, fourth from the left, where the blue component nicely fills in the apparent deficit of the simulation predictions before scale factors are applied.

The analysis of Alvarez et al. of course does not stop here, but considers a number of possible solutions as well as other measurements by ATLAS and CMS. The authors also point out how a new Z' boson contributing to the above graphs might be stalked with a targeted analysis. As is common in these situations, all we can say is "if it's roses, they will blossom": let us therefore hope that this umpteenth 2-sigma-ish effect does not melt away like snow in the sun, and instead grows into the stumbling block that finally trips up the Standard Model!

Post scriptum: after I posted the above, it was pointed out to me that another group of theorists had put forth a new physics interpretation of the ATLAS excess, actually before the authors of the Z' paper discussed above. Their interpretation is described in this article by Giovanni Banelli, Ennio Salvioni, Javi Serra, Tobias Theil, and Andreas Weiler. I am also happy to mention it because Giovanni was a member of the AMVA4NewPhysics ITN, which I coordinated from 2015 to 2019...
