The "grandma-level" explanation of interference involves an oscillatory phenomenon in water, a simple and familiar medium. If you have two sources of waves at two different points of a water basin, each will create a pattern of waves that extend radially. At each point of the water surface, a single source would produce an oscillating water level; but with two sources, the two oscillatory motions combine, adding up or cancelling depending on whether the two waves arrive in phase or not. At specific points of the surface there may be no oscillation at all - the destructive effect of the interference of the two wave amplitudes.
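The water-basin picture can be sketched numerically. This is only a toy, under assumptions that are mine and not part of the discussion above: two identical point sources oscillating in phase, a single wavelength, and no weakening of the waves with distance. The function name `combined_amplitude` and the chosen coordinates are purely illustrative.

```python
import math

def combined_amplitude(point, sources, wavelength, amplitude=1.0):
    """Amplitude of the summed oscillation at `point`.

    Assumes identical, in-phase sources and ignores the decrease of the
    wave height with distance (a deliberate simplification).
    """
    k = 2 * math.pi / wavelength  # wave number
    re = im = 0.0
    for sx, sy in sources:
        r = math.hypot(point[0] - sx, point[1] - sy)  # distance from source
        # Each source contributes a phasor whose phase is set by the distance.
        re += amplitude * math.cos(k * r)
        im += amplitude * math.sin(k * r)
    return math.hypot(re, im)

sources = [(0.0, 0.0), (1.5, 0.0)]  # hypothetical source positions
wavelength = 1.0

# Equidistant from both sources: the waves arrive in phase and add up.
constructive = combined_amplitude((0.75, 4.0), sources, wavelength)

# On the line through the sources: path difference is 1.5 wavelengths,
# so the waves arrive in opposite phase and cancel.
destructive = combined_amplitude((5.0, 0.0), sources, wavelength)

print(f"constructive: {constructive:.3f}, destructive: {destructive:.3f}")
```

The first point sees twice the single-source amplitude, while at the second the water stays essentially still, which is the "no oscillation at all" case described above.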

It was the observation of interference patterns in the distribution of electrons on a screen, downstream of a double slit, that provided the notion that particles, too, may behave as waves, and can thus undergo interference effects. But nowadays we are no longer concerned with the "paradox" of a particle exhibiting wave-like nature. And indeed, when we collide protons in the core of the LHC experiments, we have to account for interference effects in the physical processes we study, in order to make sense of them.

Interference in particle physics?

There is a host of fascinating interference effects that can be observed when we study neutral hadrons, such as K0 particles. Indeed, neutral kaons (particles made up of a strange quark and an anti-down quark, or vice-versa) have been the subject of intense studies from the 1960s onwards. It was in studying a beam of neutral kaons, among other things, that the phenomenon of CP violation was first observed (by Christenson, Cronin, Fitch and Turlay - the second and third of whom later received a Nobel prize for their observation). But the wavelike processes that take place in the propagation and decay of these particles are still studied today.

Here, however, I want to discuss what happens in a much more ephemeral system of fundamental particles. It is not an interference effect which you can study as a function of time in this case, as we are dealing with a very short-lived process, the creation of a W boson in proton-proton collisions. The W boson lives a fantastically short life, of the order of 10^-24 seconds, whereafter it decays into a pair of elementary fermions, such as an electron-neutrino pair, or a quark-antiquark pair.

Ten to the minus twenty-four seconds is such a short time that it is hard to picture it. And yet, during its lifetime the W boson may still manage to do something. If it is endowed with more energy than its nominal rest mass would call for, for instance, the W may emit an energetic photon, getting rid of the extra endowment and thus returning "on mass shell". This photon will then be able to leave the production point and be detected by the electromagnetic calorimeter (see my previous post for a description of the CMS EM calorimeter, a real marvel).

And what about the W? It will in the meantime have decayed, leaving as a trace of its ephemeral existence its decay products, which we may detect (e.g. if it decays to an electron-neutrino pair, the electron is measured in the same device that measures the photon), and use to prove that the W was indeed there once. What we have in our hands is a "W-gamma" associated production event.

The CMS result

CMS collected a large sample of such events in the collisions delivered by the LHC in Run 2, and a recent publication describes the detailed measurement of the properties of W-gamma production, such as the relative rate of production as a function of the kinematics of the final state particles.

Among the measurements, however, one stands out in my opinion as the most interesting - the measurement of radiation zero.

Radiation zero is a jargon term that identifies a kinematical configuration in which the production of a photon (the "radiation", an electromagnetic one in this case) is suppressed by interference effects. This happens because the photon may be produced in that configuration (in this case, at the same angle with respect to the proton beams) by different processes, whose "amplitudes" subtract off one another.
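To see why amplitudes that "subtract off one another" suppress the rate, here is the simplest possible sketch, assuming only two contributing complex amplitudes, which I label $\mathcal{A}_1$ and $\mathcal{A}_2$ for illustration (the real calculation sums more diagrams than this). The observable rate goes as the squared modulus of the sum, not as the sum of the squares:

```latex
\begin{align}
\left|\mathcal{A}_1 + \mathcal{A}_2\right|^2
  &= |\mathcal{A}_1|^2 + |\mathcal{A}_2|^2
   + 2\,\mathrm{Re}\!\left(\mathcal{A}_1 \mathcal{A}_2^{*}\right), \\
\mathcal{A}_2 = -\mathcal{A}_1
  &\;\Longrightarrow\;
  \left|\mathcal{A}_1 + \mathcal{A}_2\right|^2 = 0 .
\end{align}
```

The last term in the first line is the interference term. When it is negative and as large as the two squared terms combined, the rate vanishes even though each process taken alone would produce photons in that configuration.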

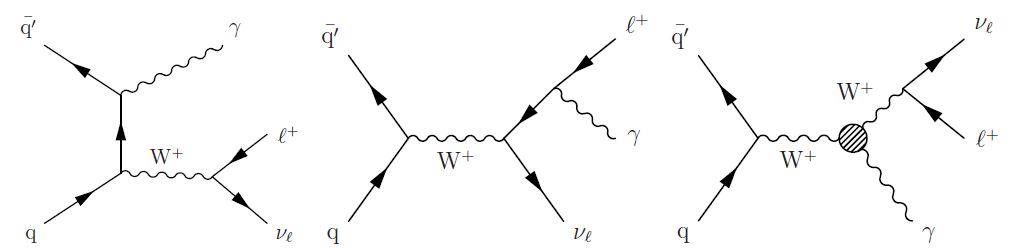

The three Feynman diagrams shown here describe the associated production of a photon and a W boson in LHC collisions. They are by no means the only ones involved, mind you: there are infinitely many such diagrams, and if you were to compute with infinite precision the total probability to observe a W-gamma pair you would have to add all of them together. Fortunately, we have understood that by only considering the "leading" diagrams - the ones with fewer intermediate particles taking part in the reactions - we still get a decent approximation.

The diagrams have time flowing left to right, and in them you can see that fermions (quarks, q, and leptons, l for charged ones and ν for neutrinos) are symbolized by straight lines, while bosons (the W and the photon, denoted by the Greek letter gamma) are wiggly ones. You can read e.g. the diagram on the left as follows: "a quark q and an antiquark q'_bar, each originally contained in one of the two protons that collide, come close together; the antiquark emits a photon, and then annihilates with the quark to produce a W boson. The W boson later decays into a lepton and its neutrino."

The diagram at the center instead shows a case where the photon is emitted by the charged lepton that the W decay produced. The diagram on the right is different: it is a "triple gauge coupling diagram", where the W and the photon directly couple to one another. It is the W that emits the photon in this case. This latter diagram is interesting because the strength of this triple gauge coupling, which the standard model predicts to have a certain value, might be measured to be different - if that happened, we might imagine that some new physics processes contributed to the dashed circle, changing the relative production rates.

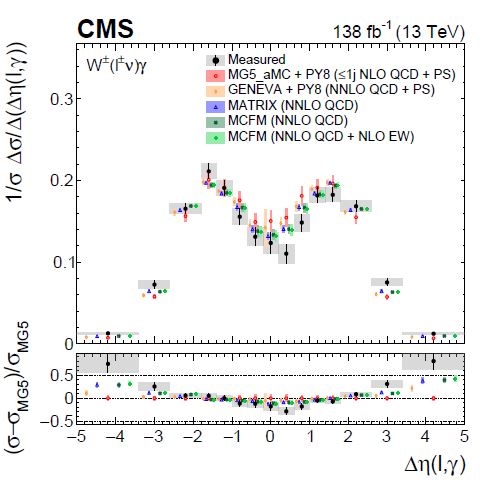

CMS measured the production of these W-gamma pairs as a function of the angular separation with respect to the beam direction, and reported the results in the graph shown here. With no interference, one would expect the two particles to be more likely emitted at a small angle to one another, but what is observed instead is a double hump, with a dip in the center. That is the signature of Radiation Zero, the interference between different production mechanisms kicking in when the W and the photon are emitted close in angle.

The above graph is quite complicated to read, as it includes a comparison of many different theoretical calculations (coloured sets of points) to the actual data (black points with grey systematic uncertainty shading). NNLO stands for "next-to-next-to-leading-order", which means that some theoretician worked for months in his small office, drawing diagrams that include many more particles than those shown above, and determining the relative contribution of each.

What is relevant to us, though, is the size of the dip - it seems to be larger than what most models predict, indicating that maybe these models need to be tweaked a bit, or maybe that still higher-order Feynman diagrams contribute to modify the predictions.

---

Tommaso Dorigo (see his personal web page here) is an experimental particle physicist who works for the INFN and the University of Padova, and collaborates with the CMS experiment at the CERN LHC. He also coordinates the MODE Collaboration, a group of physicists and computer scientists from fifteen institutions in Europe and the US who aim to enable end-to-end optimization of detector design with differentiable programming. Dorigo is an editor of the journals Reviews in Physics and Physics Open. In 2016 Dorigo published the book "Anomaly! Collider Physics and the Quest for New Phenomena at Fermilab", an insider view of the sociology of big particle physics experiments. You can get a copy of the book on Amazon, or contact him to get a free pdf copy if you have limited financial means.
