I’ve always been interested in how changes in agricultural production practices impact the environment. In particular, I’ve followed the adoption of genetically modified (GM) crops since I was an undergraduate, and I try to stay up to date on research into the environmental impact of these crops.

Several publications over the last decade have relied on the environmental impact quotient (EIQ) to quantify the environmental impact of changes in pesticide use resulting from GM crops. The EIQ originated with a research group at Cornell (Kovach et al.) with the goal of developing a simple metric to quantify the environmental impact of pesticides. The reasoning behind the EIQ is described in the original paper:

“Because of the EPA pesticide registration process, there is a wealth of toxicological and environmental impact data for most pesticides that are commonly used in agricultural systems. However, these data are not readily available or organized in a manner that is usable to the IPM practitioner. Therefore, the purpose of this bulletin is to organize the published environmental impact information of pesticides into a usable form to help growers and other IPM practitioners make more environmentally sound pesticide choices. This bulletin presents a method to calculate the environmental impact of most common fruit and vegetable pesticides (insecticides, acaricides, fungicides and herbicides) used in commercial agriculture. The values obtained from these calculations can be used to compare different pesticides and pest management programs to ultimately determine which program or pesticide is likely to have the lower environmental impact.” (Kovach et al. 1992)

Metrics such as the EIQ certainly seem desirable in determining whether changes in pesticide use result in a net benefit or detriment to the environment. I was initially a strong proponent of the EIQ for many reasons. First and foremost, the EIQ is based on a combination of important environmental indicators. It takes into account pesticide properties, including soil behavior and persistence, toxicity to a variety of non-target organisms such as birds, fish, and bees, as well as mammalian toxicity. Even better, because the EIQ calculation results in a single number for each pesticide, the EIQ is simple enough to be interpreted easily by non-scientists; a high EIQ means greater potential impact on the environment. It provides a simple, intuitive way to compare different pesticides.
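For readers who want to see how the pieces fit together, here is a minimal Python sketch of the EIQ equation in the form commonly published from Kovach et al. (1992), as I understand it. Each risk factor is typically rated 1, 3, or 5; the farm worker, consumer, and ecological components are summed and divided by 3. The class and function names are my own and are not taken from any official EIQ calculator, so treat this as an illustration rather than a reference implementation.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class RiskRatings:
    """Risk-factor ratings used in the EIQ (typically 1, 3, or 5)."""
    C: float   # chronic toxicity
    DT: float  # dermal toxicity
    SY: float  # systemicity (always 1 for herbicides)
    L: float   # leaching potential
    F: float   # fish toxicity
    R: float   # surface loss (runoff) potential
    D: float   # bird toxicity
    S: float   # soil half-life
    Z: float   # bee toxicity
    B: float   # beneficial arthropod toxicity
    P: float   # plant surface half-life


def eiq_components(r: RiskRatings) -> tuple[float, float, float]:
    """Farm worker, consumer, and ecological components (before dividing by 3)."""
    farm_worker = r.C * (r.DT * 5 + r.DT * r.P)
    consumer = r.C * ((r.S + r.P) / 2) * r.SY + r.L
    ecological = (r.F * r.R
                  + r.D * ((r.S + r.P) / 2) * 3
                  + r.Z * r.P * 3
                  + r.B * r.P * 5)
    return farm_worker, consumer, ecological


def eiq(r: RiskRatings) -> float:
    """EIQ: sum of the three components divided by 3."""
    return sum(eiq_components(r)) / 3


# Every rating at its minimum of 1
minimum = RiskRatings(*([1.0] * 11))
print(round(eiq(minimum), 2))  # 6.67
```

With every rating at its minimum, the sketch returns 20/3, or about 6.7, which is the floor Dushoff et al. point out below.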

Questioning the EIQ

Now, a story. (If you’re not interested in the background story of why we did this work, and just want to read the nuts and bolts of why we think the EIQ isn’t a good indicator of environmental impact, feel free to skip to the next sub-heading.)

A graduate student (Carl Coburn) and I began a meta-analysis of published studies a few years ago, and we decided to use the EIQ as our measure of environmental impact. The EIQ had been used for similar purposes in the literature, so it seemed like a logical fit. But after Carl presented some preliminary results at a professional society meeting, a scientist in the audience criticized him quite vociferously (and publicly) for using the EIQ. I don’t recall the entire exchange exactly, but the last sentence from this scientist was burned into my memory: “There’s no science in that!” Carl calmly responded to this established scientist by saying “I would argue there is science in it,” and then proceeded to discuss the toxicity and fate characteristics that are used in the EIQ calculation. In the moment, I was simultaneously very proud of my first-year Master’s student for his calm defense of his methodology in front of a crowd of established scientists, and also furious with the established scientist for publicly criticizing my student in such a harsh manner.

I understand that pointed questions after scientific presentations are standard practice. It is a necessary part of science to point out flaws and weaknesses in our colleagues’ methods (whether this is done politely or abrasively depends on the scientists at both ends of the exchange). I’d rather find out about major issues with my research at a meeting while the research is still underway than from a peer reviewer who rejects the manuscript. But at the same time, I felt that the tone of this established scientist’s interrogation of my student was unnecessarily harsh, and the criticism could certainly have been raised privately rather than in front of 50 other scientists. The best way to discourage a young scientist, I thought, is to announce in a room full of other scientists that a year’s worth of their work is not actually science.

After we returned home from this meeting, Carl and I reflected on the criticism. We wanted to be sure that if we received similar criticisms during peer-review of the eventual manuscript, we’d be ready with a solid defense of the EIQ methods and calculations. So we started spending some time really getting to know the EIQ. In addition, we also started incorporating some of the underlying data that goes into the EIQ (such as persistence, toxicity to indicator species, etc.). And somewhere along the way (I don’t remember exactly when), we started questioning the utility of the EIQ, and decided it was best to abandon the EIQ for Carl’s thesis project altogether. Carl has since successfully defended his M.S. thesis and we hope to submit that work for publication soon. But in the time since Carl’s thesis defense, we took a very hard look at the EIQ. This included some discussion on the Biofortified.org forums, where some of our eventual methods were hatched. The paper we recently published in PLoS ONE is the result of that effort.

Problems with the EIQ

I don’t want this blog post to simply re-state the conclusions in our paper, and I’m also not trying to discredit any previous research. The huge amount of work put into the EIQ and the research that uses it is laudable. But I do want to highlight a few points here that I think are important. First (and probably most importantly), our paper is not the first to criticize the EIQ. Not long after the original EIQ methods were published, Jonathan Dushoff and colleagues wrote an important critique in American Entomologist. The abstract for the Dushoff critique was only five sentences long. Here are two of them:

“[F]laws in both the [EIQ] formula and its conceptual underpinnings serve to make the information provided misleading. Although Kovach et al. provides a great deal of information and many interesting ideas, we recommend that EIQ presented there not be used as a tool to evaluate field applications of pesticides.” (Dushoff et al. 1994)

The Dushoff critique should be required reading for anyone interested in (or especially those using) the EIQ. After reading it, I’m actually a little surprised the EIQ continues to see use in the scientific literature. Dushoff et al. provide five very specific examples that illustrate some of the problems with the EIQ. One of these examples is particularly enlightening:

“Because even benign substances are given a rating of one in each category, every substance has an EIQ of at least 6.7. To take an extreme example, if water were considered as a pesticide it would have an EIQ of 9.3. One acre-inch of irrigation would have an EIQ of >1.5 million, far worse than any conceivable pesticide treatment.” (Dushoff et al. 1994)

Certainly, the EIQ was never meant to compare water with pesticides, but this comparison illustrates one major failing of the EIQ. The EIQ Field Use Rating multiplies a product’s EIQ by its proportion of active ingredient and its application rate, so no matter how benign a pesticide is, if the use rate is high enough the Field Use Rating will suggest it has a higher impact than other, more toxic pesticides. This means that, in most cases, the water used to carry a pesticide application (~10 gallons per acre) would have a higher Field Use Rating (869) than nearly any pesticide sprayed in that water. This brings us to one of the questions we tried to answer in our paper.
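Because the Field Use Rating is just the EIQ scaled by how much product is applied, the water example is easy to reproduce. This is a minimal sketch, assuming the Kovach et al. form (EIQ × proportion of active ingredient × rate) with rate in pounds per acre, 8.34 lb per gallon of water, and the EIQ of 9.3 that Dushoff et al. assign to water. The function names and the two hypothetical products at the end are my own illustrations.

```python
# Unit conversions for the water example
SQ_FT_PER_ACRE = 43_560
GALLONS_PER_CUBIC_FOOT = 7.48052
POUNDS_PER_GALLON_WATER = 8.34


def field_use_rating(eiq: float, fraction_ai: float, rate_lb_per_acre: float) -> float:
    """EIQ Field Use Rating: EIQ x fraction of active ingredient x application rate."""
    return eiq * fraction_ai * rate_lb_per_acre


def break_even_rate(benign_eiq: float, toxic_eiq: float, toxic_rate: float) -> float:
    """Rate (lb/acre) at which a benign product matches a toxic product's Field Use Rating."""
    return toxic_eiq * toxic_rate / benign_eiq


# One acre-inch of irrigation, treating water as a "pesticide" with EIQ 9.3
gallons = SQ_FT_PER_ACRE * (1 / 12) * GALLONS_PER_CUBIC_FOOT
water_lb = gallons * POUNDS_PER_GALLON_WATER
print(f"{gallons:,.0f} gal; Field Use Rating {field_use_rating(9.3, 1.0, water_lb):,.0f}")

# Hypothetical products: EIQ 40 applied at 0.5 lb ai/acre vs. a benign product with EIQ 10
print(break_even_rate(benign_eiq=10, toxic_eiq=40, toxic_rate=0.5))
```

One acre-inch works out to roughly 27,000 gallons and a Field Use Rating of about 2.1 million, in line with Dushoff’s “>1.5 million.” The break-even function makes the general point: a product with one quarter of the EIQ “catches up” at four times the rate.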

EIQ versus “Pounds on the ground”

In the past, I’ve been critical when different production systems are compared solely on the basis of the amount of pesticide applied. This “pounds on the ground” approach ignores toxicity and exposure potential, and therefore tells us nothing about actual risk. The EIQ includes proxies for toxicity and potential exposure routes, and is therefore considered an improvement. From our PLoS ONE article:

“One problem that the EIQ has been purported to solve is the simple reporting of weight of applied pesticide as an environmental indicator. Certainly, a simple accounting of the amount of pesticides applied [7] has serious shortcomings when toxicity, potential exposure, and persistence data are ignored. This approach is analogous to a doctor uniformly prescribing dosage across multiple drugs without regard to toxicity or biological effectiveness.” (Kniss & Coburn 2015)

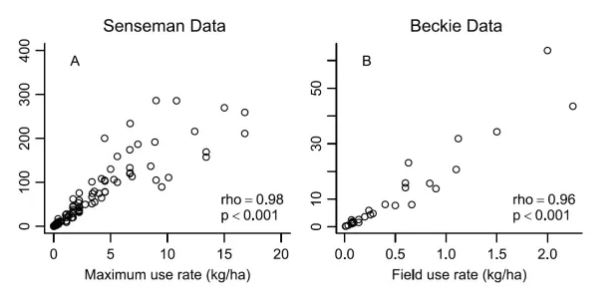

But previous authors have shown that the EIQ Field Use Rating was highly correlated with application rates for the pesticides they evaluated. We wanted to know whether this held true for herbicides. If the EIQ Field Use Rating provides much the same information as the herbicide application rate, the EIQ may be only a minimal improvement over the “pounds on the ground” approach. So how much of the EIQ Field Use Rating (which we refer to as the Field EIQ for brevity) can be explained by the herbicide application rate? We focused specifically on herbicides because they are treated differently from other pesticide groups in the EIQ calculations (more detail on that later).

For two separate data sets, we found a very strong correlation between the herbicide application rate and Field EIQ. Again from our paper:

“Because of the strong correlation between Field EIQ and herbicide use rate, the Field EIQ should be considered, at best, a minimal improvement compared to simply estimating the weight of herbicide applied per unit area.” (Kniss & Coburn 2015)
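To see why this can happen, note that the Field EIQ is the product of EIQ and rate, so when rates span a much wider relative range than EIQ values do, rate tends to dominate. The sketch below computes a Pearson correlation in plain Python. The six herbicides are made-up numbers chosen only to show the calculation; they are not the data sets from our paper, and the proportion of active ingredient is taken as 1 for simplicity.

```python
from __future__ import annotations

from math import sqrt


def pearson_r(x: list[float], y: list[float]) -> float:
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sp_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    ss_x = sum((a - mean_x) ** 2 for a in x)
    ss_y = sum((b - mean_y) ** 2 for b in y)
    return sp_xy / sqrt(ss_x * ss_y)


# Hypothetical herbicides: (EIQ, rate in lb ai/acre). Illustrative only, not our data.
herbicides = [(30, 0.05), (15, 0.1), (25, 0.5), (20, 1.0), (18, 2.0), (22, 3.0)]
rates = [rate for _, rate in herbicides]
field_eiq = [eiq * rate for eiq, rate in herbicides]  # fraction ai assumed to be 1

r = pearson_r(rates, field_eiq)
print(f"r = {r:.2f}, R^2 = {r * r:.2f}")
```

In this made-up set, EIQ varies only twofold while rates vary sixtyfold, so the Field EIQ largely tracks rate.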

Another recent criticism of the EIQ, from Bob Peterson and Jerome Schleier, shows that the EIQ is not an accurate estimate of insecticide risk because of the way the risk factors are scaled. For herbicides, there is even more reason to doubt that the EIQ accurately reflects risk relative to other types of pesticides.

“[B]ecause of the way it is calculated the EIQ may be more poorly suited for comparing herbicides than for other pesticide groups. For herbicides, there are two notable irregularities when calculating the EIQ. The “systemicity” risk factor (SY) is always assigned a value of 1 for herbicides, and therefore, does not contribute to herbicide EIQ values. SY, as defined by Kovach et al. [4], is “the pesticide’s ability to be absorbed by plants.” All herbicides have the ability to be absorbed by plants to some extent, and systemic herbicides may be translocated throughout the plant. It is unclear why SY was considered important for other types of pesticides but effectively removed from the EIQ calculation for herbicides. Plant surface half-life (P) data is rarely available for most herbicides, and so this risk factor was assigned a value of 1 for preemergence (PRE) herbicides, or a value of 3 for postemergence (POST) herbicides (Kovach et al. 1992). It is unclear from the methods described by Kovach et al. [4] which value was used for herbicides that can be applied PRE and POST (such as atrazine or mesotrione).” (Kniss & Coburn 2015)

How do different risk factors affect the EIQ?

We conducted a very basic sensitivity analysis to quantify how much each risk factor used in the EIQ calculation contributes to the resulting EIQ value. From this analysis, we calculated a relative influence score, which basically tells us the expected change in EIQ if that risk factor increases by 1 unit; a higher relative influence score means that risk factor has a larger influence on the EIQ compared to risk factors with lower relative influence scores.

We also calculated the proportion of variation in the EIQ explained by each individual risk factor.
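Here is a rough sketch of this kind of sensitivity analysis. It is not the exact simulation from our paper: I assume every risk factor takes the values 1, 3, or 5, except that SY is fixed at 1 and P is 1 (PRE) or 3 (POST) as for herbicides, and I enumerate every combination (a full factorial) rather than sampling at random. The relative influence is the regression slope of EIQ on each factor, and the proportion of variation is that factor’s R² from a simple linear regression. Because these assumed ranges differ from ours, the printed numbers should not be expected to match the paper.

```python
from itertools import product

# Risk factors that vary for herbicides (SY is held at 1)
FACTORS = ("C", "DT", "L", "F", "R", "D", "S", "Z", "B", "P")


def herbicide_eiq(C, DT, L, F, R, D, S, Z, B, P, SY=1):
    """EIQ equation (Kovach et al. 1992) with systemicity fixed at 1, as for herbicides."""
    farm_worker = C * (DT * 5 + DT * P)
    consumer = C * ((S + P) / 2) * SY + L
    ecological = F * R + D * ((S + P) / 2) * 3 + Z * P * 3 + B * P * 5
    return (farm_worker + consumer + ecological) / 3


def sensitivity():
    """Slope (relative influence) and R^2 of EIQ on each factor over a full factorial."""
    levels = {name: (1, 3, 5) for name in FACTORS}
    levels["P"] = (1, 3)  # assumed: 1 for PRE, 3 for POST herbicides
    designs = [dict(zip(FACTORS, combo))
               for combo in product(*(levels[name] for name in FACTORS))]
    y = [herbicide_eiq(**d) for d in designs]
    n = len(y)
    mean_y = sum(y) / n
    ss_y = sum((v - mean_y) ** 2 for v in y)

    results = {}
    for name in FACTORS:
        x = [d[name] for d in designs]
        mean_x = sum(x) / n
        ss_x = sum((v - mean_x) ** 2 for v in x)
        sp_xy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
        slope = sp_xy / ss_x                    # expected change in EIQ per 1-unit increase
        r_squared = sp_xy ** 2 / (ss_x * ss_y)  # share of EIQ variation explained
        results[name] = (slope, r_squared)
    return results


ranked = sorted(sensitivity().items(), key=lambda item: item[1][1], reverse=True)
for name, (slope, r_squared) in ranked:
    print(f"{name:>2}: relative influence {slope:5.2f}, R^2 {r_squared:.2f}")
```

Because the design is balanced, the slope for L comes out at exactly 1/3: leaching enters the equation only as an additive L/3 term, whatever the other ratings are.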

Probably the most concerning issue we uncovered in this work was the heavy influence of the plant surface half-life risk factor on the resulting EIQ. It is substantially more important than any other risk factor, explaining around 28% of the variation in the simulated EIQ values.

To confirm our simulation results, we ran a similar analysis on 116 actual herbicides and found that plant surface half-life explained 26% of the variability in EIQ in that sample. This is a problem because, for herbicides, no quantitative data are used for this risk factor; application timing (before or after crop emergence) is used as a proxy for plant surface half-life. Here is why that proxy is problematic:

“[H]erbicide application timing relative to crop emergence is not a characteristic inherent to a herbicide. For example, many herbicides that are only effective when applied to plant foliage (like glyphosate and carfentrazone) are often applied before planting the crop, but have a value of 3 for plant surface half-life. Conversely, dimethenamid is not effective when applied foliarly, and thus was given a value of 1 for plant surface half-life; but dimethenamid is commonly applied after crop emergence for late-season residual weed control. Because application timing relative to crop emergence can vary for many herbicides, the use of PRE vs POST as a risk factor in the EIQ formula is arbitrary.” (Kniss & Coburn 2015)

One of the peer-reviewers of our manuscript felt that the use of ‘arbitrary’ in the paragraph above was a little too strong. But we defended this word choice by including the examples below to illustrate why this proxy for risk is indeed arbitrary with respect to herbicides.

In a nutshell, application timing can simultaneously increase and decrease risk, depending on the target organism. But this is not allowed by the EIQ formula; herbicides applied after crop emergence (POST) are always going to have a higher EIQ value compared to herbicides applied before crop emergence (PRE).
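Writing out how P enters the published EIQ equation (Kovach et al. 1992, with SY fixed at 1 for herbicides) shows why. The EIQ is linear in P, so switching the same herbicide from PRE (P = 1) to POST (P = 3) changes its EIQ by

$$
\Delta\mathrm{EIQ} = \mathrm{EIQ}_{P=3} - \mathrm{EIQ}_{P=1} = \frac{2}{3}\left(C \cdot DT + \frac{C}{2} + \frac{3D}{2} + 3Z + 5B\right) \geq \frac{2}{3}\left(1 + \frac{1}{2} + \frac{3}{2} + 3 + 5\right) = \frac{22}{3} \approx 7.3
$$

where C, DT, D, Z, and B are the chronic, dermal, bird, bee, and beneficial arthropod ratings. Every term is positive, so no combination of other ratings can make a POST rating score lower than a PRE rating; fish toxicity, surface runoff, and leaching do not appear in the difference at all.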

“Exposure potential (and therefore risk) for a given target organism depends on the exposure route. In some scenarios POST herbicides should indeed present greater risk as estimated by the EIQ. For example, farm workers may be more likely to be exposed to herbicides applied to plant foliage compared to herbicides applied to the soil before crop emergence. However, applying a herbicide to soil before crop emergence can increase the risk of surface runoff 2- to 20-fold compared to a herbicide applied to plant foliage [16]. Increased surface loss potential would put fish and aquatic organisms at greater risk from PRE herbicides compared to POST herbicides. The EIQ formula monotonically increases risk for POST herbicides compared to PRE herbicides, and therefore, cannot account for this difference between risk to aquatic organisms due to surface loss and farm worker exposure from plant foliage contact.

“Because of the low relative influence of leaching potential in the EIQ, a herbicide that leaches readily into groundwater will have a similar EIQ value to a herbicide for which leaching risk is negligible, all else being equal. For example, if the leaching risk of atrazine (a restricted use pesticide due to leaching potential) were reduced from medium (L = 3) to low (L = 1) in the EIQ formula, the EIQ value would change by less than 3% (from 22.8 to 22.2). Similarly, reducing the surface runoff risk to low would reduce atrazine’s EIQ by 9% (from 22.8 to 20.8). Ironically, if atrazine were considered a PRE herbicide in the EIQ calculation (atrazine is commonly applied PRE), the EIQ would decrease by 40% (to 13.6) even though the leaching and surface runoff risk would increase when applied to soil instead of foliage.” (Kniss & Coburn 2015)
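The percentages in that passage are easy to check from the reported EIQ values alone. A small sketch; the helper name is mine, and the only inputs are the atrazine values quoted above.

```python
def percent_change(before: float, after: float) -> float:
    """Percentage change from `before` to `after`."""
    return 100 * (after - before) / before


ATRAZINE_EIQ = 22.8  # as reported in Kniss & Coburn (2015)
scenarios = {
    "leaching lowered from medium to low": 22.2,
    "surface runoff lowered to low": 20.8,
    "rated as a PRE herbicide": 13.6,
}
for label, new_eiq in scenarios.items():
    print(f"{label}: {percent_change(ATRAZINE_EIQ, new_eiq):+.1f}%")

# L appears in the EIQ equation only as an additive L/3 term, so moving L from
# 3 to 1 lowers any herbicide's EIQ by 2/3 of a point, whatever its other ratings.
print(f"effect of L (3 -> 1): {(3 - 1) / 3 / ATRAZINE_EIQ:.1%} of atrazine's EIQ")
```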

So the EIQ is unlikely to be an accurate estimate of the environmental impact of herbicides, and it is probably not much better at estimating the impact of other pesticide types. As Dushoff et al. recognized more than two decades ago:

“Although some of the problems … could be solved by improving the structure of EIQ formula, or including additional terms, the most serious problems are inherent in the approach. Kovach et al. attempt to describe every pesticide with a single, scalable number – that is, a number that can be multiplied by the quantity of pesticide applied. We do not recommend any formula that summarizes the effect of a pesticide with a single number.” (Dushoff et al. 1994)

So what should we use instead of the EIQ?

We’re certainly not suggesting we ignore risk altogether and simply count the pounds of herbicides applied. But there are other methods. In their criticism of the EIQ, Peterson & Schleier propose using a risk quotient method, which is what the US EPA and other regulatory bodies already use.

“[T]he ability to estimate the joint probability of exposure and toxicity (i.e., risk) currently is relatively simple and there are several acceptable models for estimating environmental exposures. … A starting point to create a useful quantitative rating system is the risk quotient (RQ) that is used in concept, but not necessarily by that specific term, by regulatory agencies throughout the world. An RQ is simply the ratio of estimated or actual environmental or dietary concentration of the pesticide to a toxic effect level or threshold. Some other terms for this ratio include hazard quotient (HQ), hazard index (HI), margin of safety (MOS), toxicity-exposure ratio (TER), and margin of exposure (MOE). Peterson (2006) showed that an RQ approach is valuable for making direct comparisons of quantitative risks between pesticides.” (Peterson & Schleier 2014)
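The RQ itself is a one-line calculation. A minimal sketch: the exposure and toxicity values and the level-of-concern threshold below are hypothetical placeholders, and in practice the exposure estimate would come from one of the exposure models Peterson & Schleier mention.

```python
def risk_quotient(exposure: float, toxic_effect_level: float) -> float:
    """RQ = estimated environmental (or dietary) concentration / toxic effect level.

    Both arguments must be in the same units (e.g., micrograms per liter).
    """
    if toxic_effect_level <= 0:
        raise ValueError("toxic effect level must be positive")
    return exposure / toxic_effect_level


def exceeds_concern(rq: float, level_of_concern: float) -> bool:
    """True when the RQ meets or exceeds a chosen level of concern."""
    return rq >= level_of_concern


# Hypothetical example: 12 ug/L expected in a pond vs. a fish LC50 of 60 ug/L
rq = risk_quotient(exposure=12.0, toxic_effect_level=60.0)
print(round(rq, 2), exceeds_concern(rq, level_of_concern=0.5))  # threshold is hypothetical
```

Unlike the Field EIQ, the application rate does not multiply the RQ directly in this formulation; it matters only through the exposure estimate, alongside runoff, leaching, and degradation.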

Specifically for pesticides already being used in the field, we’ve been working on applying the eco-efficiency concept for comparing pesticide use in different systems (similar to the approach here). Risk quantification is important, but it is only one side of the agricultural equation; we’re using those pesticides (and fuel, and seed, and other inputs) to produce something. Our goal should not only be to decrease our environmental impact, but to do so while maintaining or increasing crop yields.

Reducing risk to zero isn’t helpful if it also reduces production to the same level. Eco-efficiency is attractive because it includes both sides of the input/output equation.
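One simple way to express the idea is output per unit of risk. The sketch below is a hypothetical formulation for illustration only, not the model Carl developed: it sums risk quotients across organisms (a crude simplification) and divides yield by that total. The two systems and all their numbers are made up.

```python
from __future__ import annotations


def eco_efficiency(crop_yield: float, risk_quotients: list[float]) -> float:
    """Yield per unit of summed risk quotient (hypothetical formulation)."""
    total_risk = sum(risk_quotients)
    if total_risk <= 0:
        raise ValueError("total risk must be positive")
    return crop_yield / total_risk


# Hypothetical systems: (yield in bu/acre, RQs for fish, birds, bees)
systems = {
    "system A": (180.0, [0.20, 0.05, 0.05]),
    "system B": (60.0, [0.10, 0.05, 0.05]),
}
for name, (crop_yield, rqs) in systems.items():
    print(name, round(eco_efficiency(crop_yield, rqs)))
```

In this made-up comparison, system B carries less total risk, but because its yield is so much lower, system A produces more per unit of risk.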

The eco-efficiency model Carl Coburn has developed incorporates the risk quotient method advocated by Peterson & Schleier, but also includes the resulting crop yield from those pesticide inputs. And there’s definitely some science in that.

Citation: Kniss AR, Coburn CW (2015) Quantitative Evaluation of the Environmental Impact Quotient (EIQ) for Comparing Herbicides. PLoS ONE 10(6): e0131200. doi:10.1371/journal.pone.0131200
