Facebook is under fire again, this time not over privacy but over a study finding that what users see in their news feeds can affect their subsequent posts (and, presumably, their emotions). Unfortunately, the indignant outrage threatens to harm the public more than Facebook’s original study ever did. Facebook, like every company ever, will continue to experiment to optimize its service. The only thing this backlash will teach it is not to publish its findings, and that would be a huge loss to social science.

First, what did Facebook actually do? For a brief period, it altered its news feed algorithm to filter out some of the positive or negative posts that certain users saw, shifting the emotional tone of their feeds. Users whose feeds skewed more positive tended to post more positively themselves, and vice versa.

The actual result: a difference of about 0.1% in the use of positive vs. negative words in users’ subsequent posts.

This was not drastic; the subtle effect could be measured only because the sample was so large. People were not left either crying or dancing in the streets, and, as always, they can easily close Facebook.

Not Facebook’s experimental subjects.

This sort of experiment is actually not uncommon; in the tech world it’s known as A/B testing, in which consumers are shown two different versions of an ad or webpage and the outcomes are compared between the groups. There is no way to stop companies from running these tests, and they have every right to change their webpage design.

But these are my friends! Facebook can’t change my news feed! As David Weinberger points out, that objection mistakenly assumes there is some real, objective “news feed” that belongs to you. In reality, the news feed is just the output of whatever algorithm Facebook is currently using. It has been, and will continue to be, under constant development, including data-driven testing.

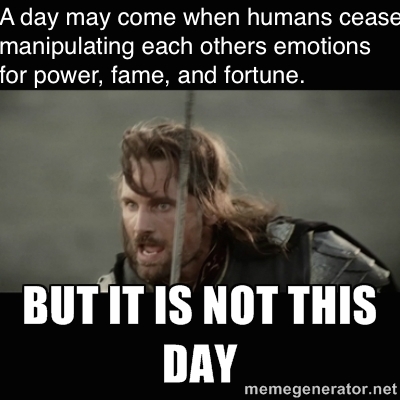

But this manipulated my emotions! No one has any right to do that! Actually, every social interaction we engage in has some effect on our emotional state. Even non-social interactions affect our mood. The world’s best music, movies, novels, and speeches are famous precisely because they tug on our heartstrings so well. There was no backlash against Budweiser for leveraging the cuteness of puppies and horses, paired with soaring music, to tie a light lager to all that is emotionally good. Even the outraged posts themselves are manipulating your mood with emotionally charged words and arguments.

The danger of criticizing Facebook for publishing its results is that it will learn not to share valuable science. That would be a loss for two reasons: first, because we won’t know what Facebook is up to, and second, because we won’t get access to the knowledge Facebook can (and will) gather from such a huge pile of social data. The ballyhooed experiment wasn’t malicious in intent; it was addressing a real question: does seeing only the exciting things your friends post to Facebook make you feel worse about your own un-cherry-picked life? The answer (no; if anything, positive posts seem to make people feel more positive themselves) is not only good for Facebook, it’s worth knowing in your everyday interactions.

People are rightly on high alert because of Facebook’s past privacy missteps, but this study was a different beast. We have a right to limit which of our own posts Facebook shows, and to whom, but we don’t own Facebook’s news feed algorithm any more than we own the Google News algorithm that may have sent you here. Facebook, Google, Budweiser, and everyone else who creates content will continue to tweak and optimize, and there’s nothing we can do to stop them. All the indignant outrage will do is teach Facebook not to share its results, which will 1) make it harder to stay on guard against social engineering tactics, and 2) keep us from learning more about ourselves.
