During my talks I generally try to place our work in context and give the audience a sense of where I see science evolving. I often start with the increasingly important role of openness and at some point follow up with this slide showing the shift of scientific communication from human-to-human to machine-to-machine. My position is that we are entering a middle phase of human-to-machine and machine-to-human communication. This is essentially what the semantic web (Web 3.0) is about, and the social web (Web 2.0) is the natural gateway to it.

Giving a talk at NIST was particularly meaningful for me as an organic chemist. This is an organization that has always been associated with authoritative and reliable measurements. In chemistry, many properties of compounds are deemed important enough to be measured and recorded in databases.

Given that the vast majority of organic chemistry reactions are carried out in non-aqueous solvents, isn't it surprising that the solubility of readily available commercial compounds in common solvents is not considered a basic property, like melting point or density? You won't routinely find these values in NIST databases or ChemSpider, or even in toll-access databases like Beilstein and SciFinder. Tim Bohinsky has reviewed the literature to give an idea of what is available for a few classes of compounds.

I think the reason for this lack of interest in solubility measurements lies in the way synthetic organic chemists have learned to think about their workflows. Generally, the researcher sets up an experiment with the intention of preparing and isolating a specific compound. The role of the solvent is usually just to solubilize the reactants; it is then commonly evaporated before chromatographic purification of the product. Even in combinatorial chemistry experiments, where products are given a rough screening without purification, the expectation is that compounds of interest will be purified and characterized at some point.

The advantage of this approach is that it is relatively reliable. Column chromatography and HPLC may be time-consuming and expensive, but these purification techniques work most of the time. However, they are difficult to scale up and are not environmentally friendly.

Sometimes, during the course of a synthesis, a compound crystallizes, either from the reaction itself or during a recrystallization attempt. When this happens, it is a lucky day. The problem is that you can't routinely count on purifying compounds this way. That was always the case in the academic labs where I worked, although there were rumors of gurus with magic hands who could get crystals more often than most. Before chromatography became widely adopted, I am sure chemists were much more adept at recrystallization out of necessity.

But technology now allows for different ways of thinking about organic chemistry. Instead of attempting to make a specific compound, why not aim to make any compound that meets certain criteria? If the objective is to inhibit an enzyme, then docking or QSAR predictions would be the first criterion. But we can add the requirements that the compound be made from cheap starting materials under convenient reaction conditions and that it be purifiable by crystallization.
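The screening logic described above can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not an existing tool: the `Candidate` fields, the score convention (higher predicted activity is better) and all threshold values are hypothetical placeholders. For the crystallization criterion it uses the idealized recovery from cooling a solution saturated at the hot temperature, (S_hot - S_cold) / S_hot, which ignores impurities, supersaturation and filtration losses.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """Hypothetical candidate product with predicted properties."""
    name: str
    predicted_activity: float      # docking/QSAR score; higher is assumed better
    starting_material_cost: float  # reactant cost per mmol of product (arbitrary units)
    sol_hot: float                 # predicted solubility at the hot temperature (mM)
    sol_cold: float                # predicted solubility at the cold temperature (mM)


def recrystallization_recovery(sol_hot: float, sol_cold: float) -> float:
    """Idealized fraction recovered when a solution saturated at the hot
    temperature is cooled: (S_hot - S_cold) / S_hot. Ignores impurities,
    supersaturation and filtration losses."""
    if sol_hot <= 0:
        return 0.0
    return max(0.0, (sol_hot - sol_cold) / sol_hot)


def meets_criteria(c: Candidate,
                   min_activity: float = 7.0,
                   max_cost: float = 5.0,
                   min_recovery: float = 0.7) -> bool:
    """True if the candidate passes all three screens.
    Default thresholds are illustrative placeholders only."""
    return (c.predicted_activity >= min_activity
            and c.starting_material_cost <= max_cost
            and recrystallization_recovery(c.sol_hot, c.sol_cold) >= min_recovery)


def select(candidates: list[Candidate]) -> list[Candidate]:
    """Keep passing candidates, ordered by predicted activity (best first)."""
    passing = [c for c in candidates if meets_criteria(c)]
    return sorted(passing, key=lambda c: c.predicted_activity, reverse=True)


if __name__ == "__main__":
    # Made-up numbers, chosen only to exercise each screen.
    pool = [
        Candidate("A", 8.1, 2.0, 400.0, 40.0),  # recovery 0.9: passes
        Candidate("B", 8.5, 2.0, 100.0, 60.0),  # recovery 0.4: fails crystallization screen
        Candidate("C", 6.0, 1.0, 500.0, 10.0),  # activity below threshold
    ]
    print([c.name for c in select(pool)])
```

The point of the design is that crystallizability becomes just another filter, but only once solubility can be predicted rather than discovered by luck at the bench.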

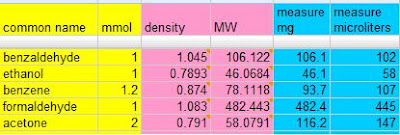

Whether a compound meets this last requirement could be predicted if we had robust models for non-aqueous solubility. We can only build such models if we gather enough solubility measurements, and that is the point of the Open Notebook Science solubility challenge. We want looking up or predicting the solubility of any compound in any solvent at any temperature to become as easy as a Google search. For a taste of things to come, play with Rajarshi Guha's chemistry Google Spreadsheet, which calls web services to calculate the weights and volumes of compounds based only on their common names and the number of millimoles desired.
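Rajarshi's spreadsheet does its lookups through web services; the sketch below replaces them with a small hard-coded table so that only the arithmetic is visible. The function name `amount_for` and the table are hypothetical, and the molecular weights and densities are approximate values that should be checked before use. The underlying relations are simple: millimoles times molecular weight in g/mol gives milligrams, and dividing grams by density in g/mL gives milliliters for liquids.

```python
from typing import Optional

# Hypothetical stand-in for web-service lookups: approximate molecular
# weights (g/mol) and densities (g/mL; None for solids). Verify before use.
PROPERTIES: dict[str, dict[str, Optional[float]]] = {
    "benzaldehyde": {"mw": 106.12, "density": 1.044},
    "aniline": {"mw": 93.13, "density": 1.022},
    "vanillin": {"mw": 152.15, "density": None},  # solid: weigh it, no volume
}


def amount_for(name: str, mmol: float) -> dict[str, float]:
    """Mass (mg) and, for liquids, volume (mL) needed for `mmol` millimoles."""
    props = PROPERTIES[name.lower()]  # raises KeyError for unknown compounds
    mass_mg = mmol * props["mw"]      # mmol x g/mol = mg
    result = {"mass_mg": mass_mg}
    if props["density"] is not None:
        result["volume_ml"] = (mass_mg / 1000.0) / props["density"]  # g / (g/mL) = mL
    return result


if __name__ == "__main__":
    for compound in ("benzaldehyde", "vanillin"):
        amounts = amount_for(compound, 2.0)
        print(compound, {k: round(v, 3) for k, v in amounts.items()})
```

In the spreadsheet the equivalent of `PROPERTIES` is resolved from the common name at run time, which is what makes it feel like a lookup rather than a calculation.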

When thinking about what the future will look like, it is tempting to imagine complex extrapolations of current concepts caught in the first part of the hype cycle; nanotechnology and artificial intelligence are good recent examples.

But much of the real progress is reflected in a simplification that is almost invisible, and whose power to change the way things are done is underestimated. Blogs, wikis and RSS are a wonderful example of that. Technically, these are very simple software components, probably not what people in the 1980s would have predicted as the "advanced technology" to explode in the 21st century. But it is precisely that simplicity, coupled with reliable free hosted services, that accounts for the explosive adoption of these tools.

Similarly, as a graduate student in the late 1980s, I imagined that by now complex chemical reactions in academia would routinely be carried out by robots and that synthetic strategies would be designed by advanced AI. From a purely technical standpoint, academic research probably could have evolved that way, but it didn't. That vision simply was not a priority for funding agencies, researchers and companies.

But now the Open Science movement, fueled by near-zero communication costs, can add diversity to the way research is done. I predict it will favor simplification in organic chemistry. Here's why:

In a fully Open Crowdsourcing initiative, where all responses and requested tasks are made public in real time, the numbers will dictate what gets done. There will always be more people with access to minimal resources than people with access to the most well equipped labs. Thus contributions to the solution of a task will likely be dominated by clever use of simple technology. This is what we expect to see for the ONS solubility project. As long as competent judges are available to evaluate the contributions and strictly rely on proof, the quality of the generated dataset should remain high.
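One way to picture judges who "strictly rely on proof" is as a filter in front of the dataset: a measurement counts only if it links to its raw data and a judge has approved it, and replicate measurements are then aggregated. The sketch below assumes a hypothetical schema (`proof_url`, `judge_approved`) and placeholder example.org URLs and values; it is not meant to describe the actual judging procedure of the ONS challenge.

```python
from collections import defaultdict
from dataclasses import dataclass
from statistics import mean, stdev
from typing import Optional


@dataclass
class Submission:
    """One crowdsourced solubility measurement (hypothetical schema)."""
    solute: str
    solvent: str
    temperature_c: float
    solubility_m: float              # reported solubility in mol/L
    proof_url: Optional[str] = None  # link to the public notebook page with raw data
    judge_approved: bool = False     # set by a judge after checking the proof


def accepted(subs: list[Submission]) -> list[Submission]:
    """Keep only submissions that link to proof and that a judge approved."""
    return [s for s in subs if s.proof_url and s.judge_approved]


def aggregate(subs: list[Submission]) -> dict:
    """Count, mean and sample standard deviation per (solute, solvent, temperature)."""
    groups: dict[tuple, list[float]] = defaultdict(list)
    for s in accepted(subs):
        groups[(s.solute, s.solvent, s.temperature_c)].append(s.solubility_m)
    summary = {}
    for key, values in groups.items():
        spread = stdev(values) if len(values) > 1 else None  # stdev needs >= 2 points
        summary[key] = {"n": len(values), "mean": mean(values), "stdev": spread}
    return summary


if __name__ == "__main__":
    # Placeholder entries, not real measurements.
    subs = [
        Submission("compound-A", "solvent-S", 25.0, 0.50, "https://example.org/exp1", True),
        Submission("compound-A", "solvent-S", 25.0, 0.54, "https://example.org/exp2", True),
        Submission("compound-A", "solvent-S", 25.0, 2.00, None, True),  # no proof: ignored
    ]
    print(aggregate(subs))
```

Keeping the raw submissions and deriving the summary from them means anyone can re-run the aggregation, which fits the open, real-time model described above.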

Note that this may not be the expected outcome for crowdsourcing projects where the responses are closed (e.g. Innocentive). Responses to RFPs from traditional funding agencies, where funds are allocated to specific groups before the work is done, are also unlikely to yield simplicity. Merely being eligible for those funds generally requires being part of an institution with a sizeable infrastructure. I'm not saying that traditional funding will disappear, just that new mechanisms will sprout to fund Open Science. The sponsorship of our ONS challenge with prizes from Submeta and Nature is a good example.

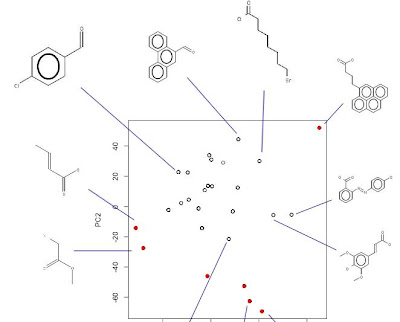

For synthetic organic chemistry, it doesn't get much simpler than mix and filter. We've already shown that such simple workflows can be automated with relatively low-cost solutions, with the results posted publicly in real time. Add crowdsourced modeling of these reactions and we start to approach the right side of the diagram at the top of this post. Rajarshi's initial model of our solubility data to date shows how such modeling adds one more piece to the puzzle.
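To illustrate why solubility data matters for mix and filter, here is a rough estimate of the isolated yield when a product precipitates and is collected by filtration: whatever remains dissolved at the product's solubility limit is lost in the filtrate. The function and its parameters are hypothetical, and the model assumes no supersaturation and no washing losses, so it should be read as an optimistic estimate rather than a prediction.

```python
def isolated_yield(theoretical_mmol: float, conversion: float,
                   product_solubility_mM: float, volume_ml: float) -> float:
    """Rough isolated yield (fraction of theoretical) for a mix-and-filter
    reaction in which the product precipitates and is collected by filtration.

    Assumptions: product formed beyond its solubility limit precipitates,
    the dissolved remainder is lost in the filtrate, and there are no
    washing losses or supersaturation. Inputs are user estimates.
    """
    if theoretical_mmol <= 0:
        raise ValueError("theoretical_mmol must be positive")
    formed = theoretical_mmol * conversion                  # mmol of product formed
    dissolved = product_solubility_mM * volume_ml / 1000.0  # mM x mL = umol; /1000 -> mmol
    recovered = max(0.0, formed - dissolved)                # nothing precipitates below saturation
    return recovered / theoretical_mmol


if __name__ == "__main__":
    # Illustrative inputs only: 1.0 mmol scale, 95% conversion, 50 mM solubility.
    for volume in (2.0, 1.0):
        print(volume, "mL ->", round(isolated_yield(1.0, 0.95, 50.0, volume), 2))
```

With these illustrative inputs, 0.1 mmol stays in solution in 2 mL and the estimate is 85%; halving the volume (a more concentrated reaction) raises it to 90%. A solubility model is what would let such choices of solvent and concentration be made before running the reaction.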
