There are currently two ambitious projects straddling artificial intelligence and neuroscience, each with the aim of building big brains that work. One is The Blue Brain Project, and it describes its aim in the following one-liner:

“The Blue Brain Project is the first comprehensive attempt to reverse-engineer the mammalian brain, in order to understand brain function and dysfunction through detailed simulations.”

The second is a multi-institution, IBM-centered project called SyNAPSE, which a press release describes as follows:

“In an unprecedented undertaking, IBM Research and five leading universities are partnering to create computing systems that are expected to simulate and emulate the brain’s abilities for sensation, perception, action, interaction and cognition while rivaling its low power consumption and compact size.”

Oh, is that all!

The difficulties ahead of these groups are staggering, as they (surely) realize. But rather than discussing the many roadblocks likely to derail them, I want to focus on one way in which they are perhaps making things too difficult for themselves.

In particular, each aims to build a BIG brain, and I want to suggest here that perhaps they can get the intelligence they’re looking for without the BIG.

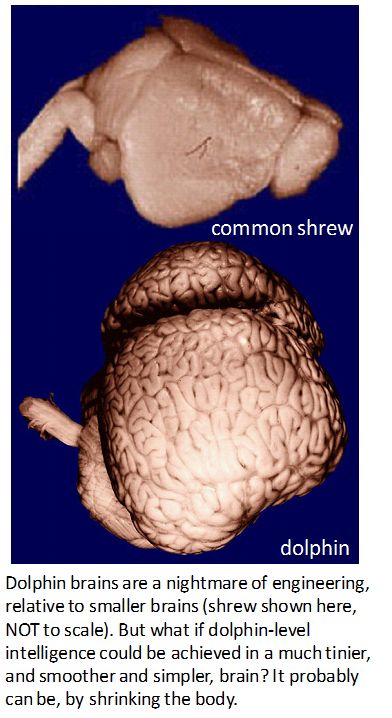

Why not go big? Because bigger brains are a pain in the neck, and not just for the necks that hold them up. As brains enlarge across species, they must modify their organization in radical ways to maintain their required interconnectedness. Convolutedness increases, the number of cortical areas increases, the number of synapses per neuron increases, the white-to-gray matter ratio rises, and many other changes occur to accommodate the larger size. Building a bigger brain is an engineering nightmare, one you can see in the ridiculously complicated appearance of the dolphin brain relative to the shrew brain in the figure. The complexity of that dolphin brain is due almost entirely to the “scaling backbends” it must do to wire itself up efficiently despite its large size. (See http://www.changizi.com/changizi_lab.html#neocortex )

If the only way to get smarter brains was to build bigger brains, then these AI projects would have no choice but to embark upon a pain-in-the-neck mission. But bigger brains are not the only way to get smarter brains. Although for any fixed technology, bigger computers are typically smarter, this is not the case for brains. The best predictor of a mammal’s intelligence tends not to be its brain size, but its relative brain size. In particular, the best predictor of intelligence tends to be something called the encephalization quotient (a variant of a brain-body ratio), which quantifies how big the brain is once one has corrected for the size of the body in which it sits. The reason brain size is not a good predictor of intelligence is that the principal driver of brain size is body size, not intelligence at all. And we don’t know why. (See my ScientificBlogging piece on brain size, Why Doesn’t Size Matter…for The Brain?)

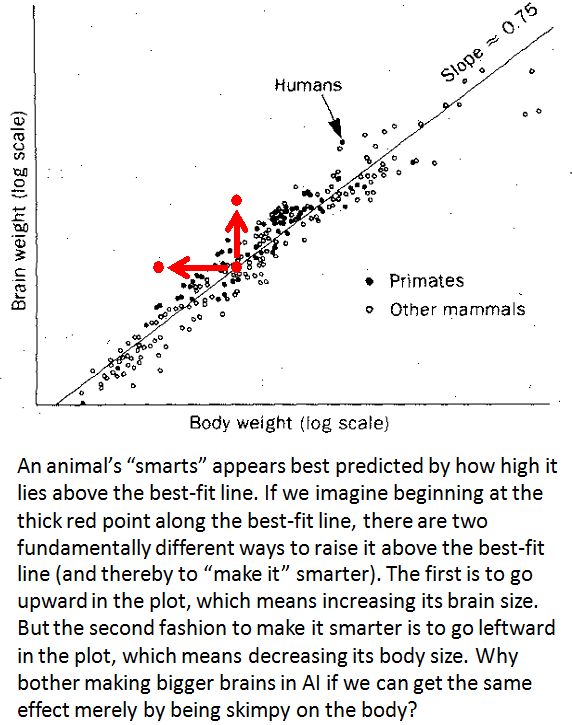

This opens up an alternative route to making an animal smarter. If it is brain-body ratio that best correlates with intelligence, then there are two diametrically opposite ways to increase this ratio. The first is to raise the numerator, i.e., to increase brain size while holding body size fixed, as the vertical arrow in the figure indicates. That’s essentially what the Blue Brain and SyNAPSE projects are implicitly trying to do.

But there is a second way to increase intelligence: one can raise the brain-body ratio by lowering the denominator, i.e., by decreasing the size of the body, as the horizontal arrow in the figure shows. (In each case, the arrow ends at a point lying farther above the best-fit line, indicating a higher brain-body ratio.)

Rather than making a bigger brain, we can give the animal a smaller body! Either way, brain-body ratio rises, as potentially does the intelligence that the brain-body combo can support.
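To make the two arrows concrete, here is a minimal Python sketch. The post doesn't say which encephalization-quotient variant it has in mind, so this assumes Jerison's classic mammalian form, in which the brain mass "expected" for a body of mass P grams is 0.12 × P^(2/3). The function name and the animal's numbers are hypothetical, chosen only for illustration, not real data.

```python
def encephalization_quotient(brain_g: float, body_g: float,
                             k: float = 0.12, exponent: float = 2 / 3) -> float:
    """Actual brain mass divided by the brain mass 'expected' for this body.

    ASSUMPTION: expected brain mass = k * body_g ** exponent, using Jerison's
    mammalian constants (k=0.12, exponent=2/3, masses in grams) as defaults.
    """
    if brain_g <= 0 or body_g <= 0:
        raise ValueError("masses must be positive")
    expected_brain_g = k * body_g ** exponent
    return brain_g / expected_brain_g


# Hypothetical starting animal (illustrative numbers only).
brain, body = 10.0, 1000.0

baseline = encephalization_quotient(brain, body)
bigger_brain = encephalization_quotient(2 * brain, body)   # vertical arrow: raise numerator
smaller_body = encephalization_quotient(brain, body / 2)   # horizontal arrow: lower denominator

print(f"baseline EQ:          {baseline:.3f}")
print(f"double the brain:     {bigger_brain:.3f}")
print(f"halve the body:       {smaller_body:.3f}")
```

Both changes raise the quotient. Under this assumed 2/3 exponent, halving the body multiplies EQ by 2^(2/3), about 1.59, while doubling the brain multiplies it by 2; the exact trade-off depends on which scaling exponent one adopts.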

We’re not yet in a position to understand which specific mechanisms differ between brains whose sizes vary because of body size, so we cannot simply shrink the body and get a smarter beast. But then again, we also don’t understand which specific mechanisms differ between brains of varying size when body size is held fixed! Building smarter via building larger brains is just as much a mystery as the prescription I am suggesting: to build smarter via building smaller bodies. And mine has the advantage that it avoids the engineering scaling nightmare for large brains.

For AI researchers to actually take this advice, though, they must answer the following question: What counts as a body for these AI brains in the first place? Only after becoming clear on what these bodies actually are (i.e., what size body the brains are being designed to support) can one begin to ask how to get by with less body, and hope to eke out greater intelligence with less brain.

Perhaps this is the ace in the AI hole: perhaps AI researchers have greater freedom to shrink body size in ways nature could not, and thereby grow greater intelligence. Perhaps the AI devices that someday take over and enslave us will have mouse brains with fly bodies. I sure hope I’m there to see that.
