Daniel Drucker's unofficial laboratory slogan is "I'd rather be third and right, than first and wrong." After 30 years, he has seen article after article in high-profile journals proclaim the beginning of the end for diseases he studies, like diabetes, gastrointestinal disease, and obesity, only for the findings to never be discussed again. The problem is not dishonesty or fraud; it is the irreproducibility of results, which lends itself to invalidated materials, unreported negative data, and authors overgeneralizing their results, as Science 2.0 founder Hank Campbell noted in the Wall Street Journal in 2014, in an article that got the journal PNAS to revise its editorial policies to prevent friends from approving studies without peer review.

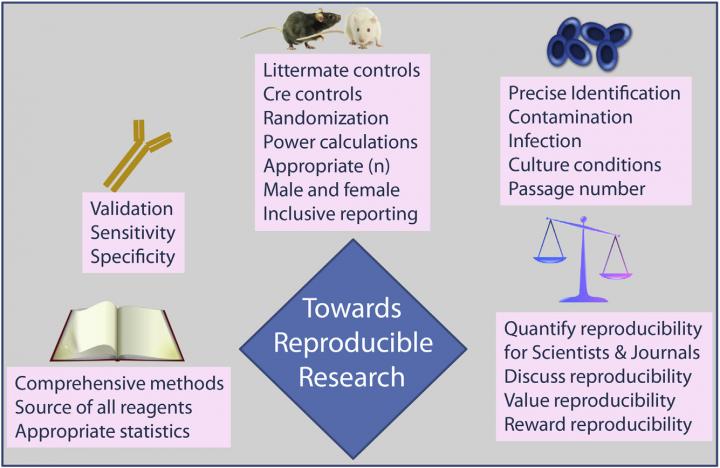

Drug discovery is also hard. Only 1 in 5,000 products will get to market, and the chasm is created by fiscal viability, safety, and efficacy, hurdles that do not apply when corporate media is writing press releases about the latest claims. In an article published in Cell Metabolism, Drucker, of the Lunenfeld-Tanenbaum Research Institute at Mt. Sinai Hospital in Toronto, suggests ways that researchers can improve and standardize experiments so that the joy of exciting results can come with a rigorous scientific story. These include:

1. Apply Rules for Clinical Trials to Preclinical Research

"If you did a clinical trial where you studied 100 patients and only reported data on those patients that fit your hypothesis, I can tell you that you would be fired," Drucker says. "Yet it is common practice to carry out dozens of basic science experiments and not report the majority of them--only the ones that the principal investigator is particularly smitten by are published."

As a consequence, the results of many papers are indeed reproducible, but only if the experiment is conducted under precisely the same conditions: the same mice, of the same age and sex, from the identical supplier, in the same location, with the same microbiota, and so on. Failed experiments often don't get mentioned in the paper because they weaken the story that the researcher wants to send to the journal, he says. Reporting negative results, even those obtained under slightly different experimental conditions, often means a mid-tier publication, a cultural issue that fosters irreproducibility.

2. Test Hypotheses in More Than One Animal Model

Drucker requires his students and postdocs to replicate the effects of a potential therapeutic in several animal models of diabetes or obesity before going on to explore the potential mechanism in tissue and cell cultures. This is a slow approach, and not always fruitful, but it does temper underdeveloped conclusions. An opposite strategy, used in many labs, is to search for an interesting elegant molecular mechanism using molecular and biochemical techniques ex vivo and then generate an animal model that supports these findings. These fast discoveries often come with publication in a top-tier journal and other accolades, but they can be more challenging to apply to human disease.

"We would be serving the community better if we could reproduce really cool results in multiple animal models and not just report the ones that work," Drucker says. "We never even hear about the experiments that didn't work until someone else tries and fails to reproduce the findings in a different model. But there is no penalty or reward for producing more or less reproducible research, and no one keeps track of it."

3. Hold Reproducibility Symposia

It is rare to see sessions at scientific meetings dedicated to reproducibility issues in research. These discussions do take place, Drucker says, but in hallways or bars, not in a formal setting. Having an honest public discussion of common problems and solutions, and encouraging everyone to be more accountable for their work, would also set a positive example for young researchers. New methods and techniques are constantly becoming available, so there will likely always be ways for investigators to improve their practices.

4. Develop Reproducibility Reporting

To improve accountability, Drucker envisions a future where an independent academic body develops a basic algorithm that could compute how reproducible or irreproducible a scientific paper proved to be. However, such an index could prove damaging, he says, as no one would want to be known as the king or queen of irreproducibility.

"There are much more feasible ways to discuss the reproducibility of one's work that would be less challenging to arbitrate," he says. "For example, let's make it a feature, and the National Institutes of Health has started to do this but many grant agencies have not, to have a mandatory part of grant applications to track a senior researcher's record--allow them to present the most well-cited papers they've published and show examples of how the key findings within have been reproduced."

5. Improve Methods Reporting

Another strategy for enabling research to be more easily reproduced is to include sufficient experimental detail, such as description of and source of reagents, cell lines, and animals used in each experiment, Drucker says. Simply enhancing journal requirements for more detailed reporting would increase the likelihood that future scientists can follow the same steps described in a paper. (Cell Press journals recently introduced STAR Methods, 10.1016/j.cell.2016.08.021, which consider such recommendations.)

Drucker doesn't anticipate everyone will agree with his recommendations, but he and many others do think it is time to have regular, robust conversations about reproducibility. We can start to fix the problem by quantifying and keeping track of reproducibility while building policies that enforce good scientific practices, he says.
