“Robots will live in our world soon enough and they really need to learn how to communicate with us on human terms. They need to understand when it is time for them to help and when it is time for them to see what they can do to prevent something from happening. This is very early work and we are barely scratching the surface, but I feel like this is the first very serious attempt for understanding what it means for humans and machines to interact socially,” says Boris Katz, principal research scientist and head of the InfoLab Group in the Computer Science and Artificial Intelligence Laboratory (CSAIL) and a member of the Center for Brains, Minds, and Machines (CBMM).

In a recent paper, the researchers describe a simulated environment in which a robot watches its companion, guesses what task it wants to accomplish, and then helps or hinders this other robot based on its own goals. The study authors found that their model creates realistic and predictable social interactions. When they showed human viewers videos of these simulated robots interacting with one another, the viewers mostly agreed with the model about what type of social behavior was occurring.

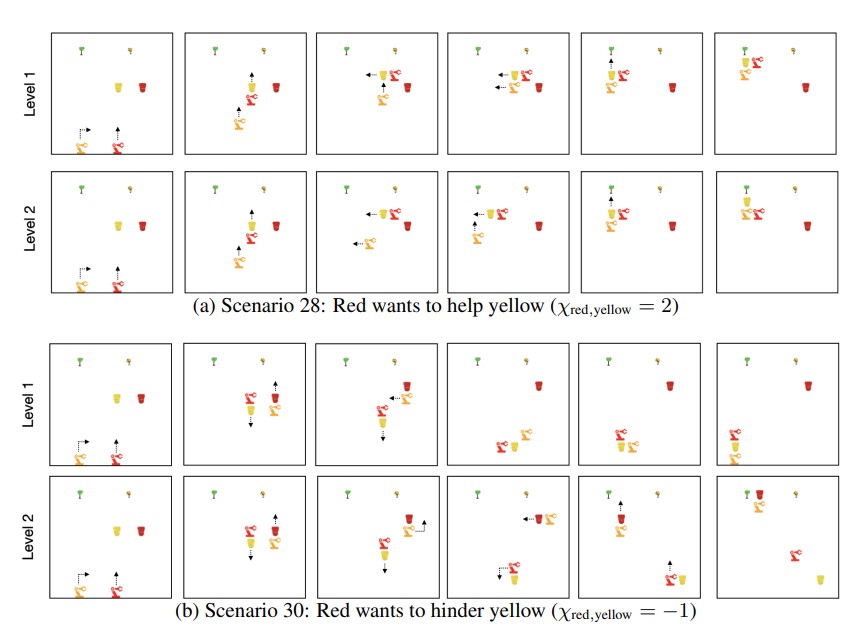

Two examples of zero-shot social interactions. The Social MDP gives the robots the ability to understand and predict relationships, thereby taking far more efficient actions. The yellow robot wants to water the tree. Moving the yellow watering can is easy for the yellow robot, while moving the red can is hard for the yellow robot. The yellow robot performs inference to understand what the red robot is doing. With a level 1 Social MDP, the yellow robot assumes that the red robot has a physical goal, but not a social goal. With a level 2 Social MDP, the yellow robot assumes that the red robot has both a physical and social goal, then recursively estimates the social goal of the red robot (which is in turn modeled as a level 1 Social MDP). (a) At level 1, the yellow robot follows the red one around. It does not understand that the red robot is trying to help. The red robot correctly executes its social goal of helping the yellow robot by moving its watering can toward the tree. At level 2, the yellow robot recognizes that red is helping, then estimates where its future trajectory will take it, and efficiently goes to the intercept point, accepting red’s help. (b) At level 1, yellow does not infer that red wants to hinder it. As such, it attempts to move the yellow can and repeatedly fails, entering a local minimum where the yellow can is the easiest one to move, without realizing that the red robot will forever prevent this. At level 2, the yellow robot recognizes that the red robot is attempting to hinder it, gives up on the yellow can, and makes a globally optimal move of using the harder-to-move red can instead.

A social simulation

To study social interactions, the researchers created a simulated environment where robots pursue physical and social goals as they move around a two-dimensional grid.

A physical goal relates to the environment. For example, a robot’s physical goal might be to navigate to a tree at a certain point on the grid. A social goal involves guessing what another robot is trying to do and then acting based on that estimation, like helping another robot water the tree.

The researchers use their model to specify what a robot’s physical goals are, what its social goals are, and how much emphasis it should place on one over the other. The robot is rewarded for actions it takes that get it closer to accomplishing its goals. If a robot is trying to help its companion, it adjusts its reward to match that of the other robot; if it is trying to hinder, it adjusts its reward to be the opposite. The planner, an algorithm that decides which actions the robot should take, uses this continually updating reward to guide the robot to carry out a blend of physical and social goals.
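The reward adjustment described above can be illustrated with a minimal sketch. It is not the researchers' formulation: the weighted sum, the `social_weight` parameter, and every name below are assumptions chosen only to show how a helping robot could adopt its companion's estimated reward and a hindering robot could adopt its negation.

```python
from enum import Enum


class SocialGoal(Enum):
    """Hypothetical labels; the value is the sign applied to the other robot's reward."""
    NONE = 0
    HELP = 1
    HINDER = -1


def blended_reward(own_physical_reward: float,
                   estimated_other_reward: float,
                   social_goal: SocialGoal,
                   social_weight: float) -> float:
    """Blend a robot's own physical reward with a social reward.

    Assumption: the blend is a simple weighted sum, where social_weight
    in [0, 1] says how much emphasis the robot places on its social goal.
    """
    if not 0.0 <= social_weight <= 1.0:
        raise ValueError("social_weight must be between 0 and 1")
    # Helping mirrors the other robot's reward; hindering flips its sign.
    social_reward = social_goal.value * estimated_other_reward
    return (1.0 - social_weight) * own_physical_reward + social_weight * social_reward


if __name__ == "__main__":
    for goal in SocialGoal:
        print(goal.name, blended_reward(1.0, 2.0, goal, social_weight=0.5))
```

In this sketch a planner would recompute the blended value whenever its estimate of the other robot's reward changes, which is one way to read the "continually updating reward" in the text.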

“We have opened a new mathematical framework for how you model social interaction between two agents. If you are a robot, and you want to go to location X, and I am another robot and I see that you are trying to go to location X, I can cooperate by helping you get to location X faster. That might mean moving X closer to you, finding another better X, or taking whatever action you had to take at X. Our formulation allows the plan to discover the ‘how’; we specify the ‘what’ in terms of what social interactions mean mathematically,” says Tejwani.

Blending a robot’s physical and social goals is important to create realistic interactions, since humans who help one another have limits to how far they will go. For instance, a rational person likely wouldn’t just hand a stranger their wallet, Barbu says.

The researchers used this mathematical framework to define three types of robots. A level 0 robot has only physical goals and cannot reason socially. A level 1 robot has physical and social goals but assumes all other robots only have physical goals. Level 1 robots can take actions based on the physical goals of other robots, like helping and hindering. A level 2 robot assumes other robots have social and physical goals; these robots can take more sophisticated actions like joining in to help together.
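The nesting of the three levels can be sketched as a small recursive data structure. This is only an illustration under stated assumptions: the `AgentModel` class and `build_model` function are hypothetical names, and the sketch assumes that a robot at a given level models its companion one level lower, which matches the descriptions of levels 1 and 2 above.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AgentModel:
    """Hypothetical description of what a robot at a given level reasons about."""
    level: int
    has_social_goal: bool
    # How this robot models its companion; None means it does not model it at all.
    model_of_other: Optional["AgentModel"]


def build_model(level: int) -> AgentModel:
    """Build the nested model for a robot at the given level.

    Level 0: physical goals only, no social reasoning.
    Level k > 0: physical and social goals, and the companion is
    assumed (in this sketch) to be a level k - 1 robot.
    """
    if level < 0:
        raise ValueError("level must be non-negative")
    if level == 0:
        return AgentModel(level=0, has_social_goal=False, model_of_other=None)
    return AgentModel(level=level, has_social_goal=True,
                      model_of_other=build_model(level - 1))


if __name__ == "__main__":
    robot = build_model(2)
    while robot is not None:
        print(f"level {robot.level}: social goal = {robot.has_social_goal}")
        robot = robot.model_of_other
```

Under these assumptions, a level 2 robot attributes a social goal to its companion because it models that companion as a level 1 robot, while a level 1 robot attributes only physical goals.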

Evaluating the model

To see how their model compared to human perspectives about social interactions, the researchers created 98 different scenarios with robots at levels 0, 1, and 2. Twelve humans watched 196 video clips of the robots interacting, and then were asked to estimate the physical and social goals of those robots.

In most instances, their model agreed with what the humans thought about the social interactions that were occurring in each frame.

“We have this long-term interest, both to build computational models for robots, but also to dig deeper into the human aspects of this. We want to find out what features from these videos humans are using to understand social interactions. Can we make an objective test for your ability to recognize social interactions? Maybe there is a way to teach people to recognize these social interactions and improve their abilities. We are a long way from this, but even just being able to measure social interactions effectively is a big step forward,” Barbu says.
