The two main types of driving we do on a regular basis - freeway and city - both have traffic flow problems, and they require very different solutions. This is because the type of bottleneck that produces traffic jams depends on the style of road. In a city, you're much more likely to be stuck in traffic because of a stoplight somewhere, while on the freeway a number of different events can cause a slowdown. Obvious reasons for freeway slowdowns, such as accidents and construction, are only part of the problem. Another major cause of freeway traffic jams (those really frustrating ones with no apparent cause) is nothing more than a small number of cars adjusting their speed suddenly.

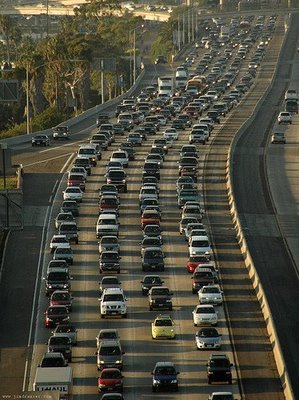

Unexpected Frustration: Sometimes traffic jams appear with little to no warning and have no apparent cause. Photo Credit: UC Berkeley

Scientists at the University of Exeter, in the UK, produced a traffic model showing that when a car's speed dips below a certain critical speed, it creates "waves" of high car density that travel upstream, eventually causing stopped traffic miles behind the original disturbance. In March 2008, a Japanese team at Nagoya University confirmed this effect experimentally using a high density of cars on a circular track. Sure enough, traffic moved smoothly until one car altered its speed and caused the cars behind it to slow down, eventually resulting in a traffic jam.

So, what are transportation engineers doing to reduce these kinds of traffic jams? At the root, every effort needs to be made to get drivers to drive at as near a constant speed as possible. This way, the density of cars on the road can stay high without triggering upstream traffic jams. One way to do this is through metering lights. Although they already exist in most major cities, metering lights are often calibrated to let cars onto the road based on occupancy. They work, to a degree, but they don't take merging cars into account, and merging is a major source of slowdowns. Merging cars force cars already on the freeway to slow down to avoid a collision, and if those cars slow below the critical speed, they will cause a traffic jam behind them.

Craig Davis, a retired Ford Motor Co. research scientist and current adjunct professor at the University of Michigan, created a new metering algorithm that uses merge time and total throughput (the rate at which cars pass on the highway) rather than occupancy to determine when cars can be allowed on the road. He found that capacity drops dramatically when the throughput is above 1,900 cars/hour/lane. Below this limit, congestion is reduced.
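As a rough illustration of throughput-based metering, the sketch below converts a vehicle count into cars/hour/lane and holds the ramp light red whenever the mainline is at or above the 1,900 figure quoted above. It is a simplified assumption, not Davis's published algorithm: it ignores merge time entirely, and the function names and the counting interval are hypothetical.

```python
# Hypothetical sketch of a throughput-based ramp meter.
# Assumption: only the 1,900 cars/hour/lane figure comes from the article;
# the merge-time term of the real algorithm is not modeled here.

THRESHOLD = 1900.0  # cars/hour/lane


def throughput_per_lane(cars_counted, interval_s, lanes):
    """Convert a raw count over `interval_s` seconds into cars/hour/lane."""
    if interval_s <= 0 or lanes <= 0:
        raise ValueError("interval_s and lanes must be positive")
    return cars_counted * 3600.0 / interval_s / lanes


def ramp_light_is_green(cars_counted, interval_s, lanes, threshold=THRESHOLD):
    """Let a ramp car enter only while mainline throughput is below the threshold."""
    return throughput_per_lane(cars_counted, interval_s, lanes) < threshold


if __name__ == "__main__":
    # 90 cars in one minute across 3 lanes is 1,800 cars/hour/lane.
    print(ramp_light_is_green(90, 60, 3))
    # 95 cars in one minute across 3 lanes is exactly 1,900 cars/hour/lane.
    print(ramp_light_is_green(95, 60, 3))
```

A real controller would also smooth the count over several intervals so that one dense platoon does not flip the light on its own.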

Davis has also studied adaptive cruise control, which can help keep traffic flowing smoothly. It differs from regular cruise control in that it adjusts speed to keep the correct distance from the car ahead. Although it seems like this may not help keep cars moving at constant speed, it does help by reacting to small changes in the speed of the car ahead before the driver notices, smoothing out speed changes and reducing the amount of heavy braking. In simulation, these systems show promise in stabilizing throughput but don't help much with merging cars. It's likely that a multi-faceted approach will be required to solve the problem.
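To make the gap-keeping idea concrete, here is a minimal sketch of a generic constant-time-gap controller. It is not taken from Davis's work; the gains, the 1.5-second time gap, and the comfort limits are all illustrative assumptions. The commanded acceleration responds both to the gap error and to the speed difference with the car ahead, which is what lets it react to small changes early and gently.

```python
# Hypothetical gap-keeping cruise control law (illustrative values only).
# Speeds are in m/s, distances in metres, accelerations in m/s^2.

def cruise_acceleration(gap_m, own_speed, lead_speed,
                        time_gap_s=1.5, standstill_m=2.0,
                        k_gap=0.2, k_speed=0.6,
                        max_accel=1.5, max_brake=3.0):
    """Return a commanded acceleration that steers toward the desired gap."""
    # The desired gap grows with speed: a fixed buffer plus a time gap.
    desired_gap = standstill_m + time_gap_s * own_speed
    # Respond to both the gap error and the closing speed.
    command = k_gap * (gap_m - desired_gap) + k_speed * (lead_speed - own_speed)
    # Clamp to comfort limits so corrections stay gentle.
    return max(-max_brake, min(max_accel, command))


if __name__ == "__main__":
    # At the desired gap with matched speeds, no correction is needed.
    print(cruise_acceleration(47.0, 30.0, 30.0))
    # The lead car eases off by 1 m/s: a light correction instead of hard braking.
    print(cruise_acceleration(47.0, 30.0, 29.0))
```

Because the response scales with the size of the disturbance, a small slowdown ahead produces a small deceleration rather than the late, heavy braking that tends to amplify as it passes from car to car.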

Ready, Set, Go: A new optimization scheme for stoplights may reduce travel time and traffic jams. Photo Credit: University of San Francisco

But what about those pesky stoplights? In the 1960s and 1970s, huge investments were made in traffic lights to manage traffic during peak hours. They were often pre-programmed to deal with specific high-volume conditions, and ran through set routines based on the most likely traffic conditions. While the volume of cars and the number of stoplights were still relatively low, this system worked just fine. Now, however, there are so many cars on the road that additional stoplights are required to control them all, and that adds a level of complexity to the roadway system that pre-programmed lights are simply not capable of dealing with. Engineers can attempt to optimize the stoplight routines by taking the additional stoplights into account, but eventually they run into a computational limit.

Dirk Helbing, a professor of sociology at ETH Zurich who specializes in modeling and simulation, says that "even for normal-sized cities, supercomputers are just not fast enough to compute all of the different options that exist for controlling traffic lights. So the number of choices actually considered by the optimization program is significantly reduced." As a result, most traffic lights are programmed based on a simplified model of average traffic flow - a condition the traffic light practically never sees.

"The variation in the number of vehicles that queue up at a traffic light at any minute of the day is huge," Professor Helbing says. "You are optimizing for a situation that basically is true on average but that is never true for any single day or minute: essentially for a situation that never exists. Plus, even adaptive traffic lights in modern control schemes are usually restricted to a variation of cycle-based control."

To combat this problem, Professor Helbing and co-author Stefan Lämmer of the Institute for Transport and Economics at Dresden University of Technology have published a paper delineating two distinct strategies for dealing with traffic flow at stoplights. On its own, neither strategy is as good as current traffic light schemes. When applied together, though, travel time becomes stable and emissions are reduced.

The first strategy is a decentralized system - one that relies on sensors to determine real-time traffic conditions at the light - to optimize flow through one intersection. At low volumes, this strategy worked fine, but as volume increased the light was unable to clear jams from side streets. The second strategy was a stabilizing method that cleared traffic at a light once it reached a critical threshold. The stabilizing method performed worse than existing stoplight methods at all volumes. When combined, however, the two methods perform better than existing systems at all car volumes, at least in simulation.
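One way to picture how the two strategies fit together is the toy controller below. It is only a sketch under stated assumptions, not the controller from the Helbing and Lämmer paper: the priority formula, the 20-second look-ahead, the critical queue length of 8 cars, and every name in the code are hypothetical. The optimizing rule favors the approach with the most cars waiting or about to arrive, and the stabilizing rule overrides it whenever a queue reaches the critical threshold.

```python
# Toy illustration of combining an optimizing rule with a stabilizing rule
# at a single intersection. All numbers and names are assumptions.
from dataclasses import dataclass


@dataclass
class Approach:
    name: str
    queue: int           # cars currently waiting, as reported by sensors
    arrival_rate: float  # expected arrivals in cars per second


def optimizing_choice(approaches, horizon_s=20.0):
    """Serve the approach with the most waiting plus soon-to-arrive cars."""
    best = max(approaches, key=lambda a: a.queue + a.arrival_rate * horizon_s)
    return best.name


def stabilizing_choice(approaches, critical_queue=8):
    """Serve the longest queue at or past the threshold, or None if there is none."""
    overloaded = [a for a in approaches if a.queue >= critical_queue]
    if not overloaded:
        return None
    return max(overloaded, key=lambda a: a.queue).name


def combined_choice(approaches, horizon_s=20.0, critical_queue=8):
    """Stabilization takes precedence; otherwise fall back to optimization."""
    forced = stabilizing_choice(approaches, critical_queue)
    if forced is not None:
        return forced
    return optimizing_choice(approaches, horizon_s)


if __name__ == "__main__":
    busy = [Approach("main", 5, 0.5), Approach("side", 8, 0.05)]
    print(optimizing_choice(busy))  # the busy main road wins on priority
    print(combined_choice(busy))    # the side street has hit the threshold
```

In this example the optimizing rule alone would keep favoring the main road, which mirrors the side-street starvation described above, while the combined rule clears the side street once its queue becomes critical.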

The real problem will be selling the system to motorists. Although it works better than existing traffic light grids, it's unpredictable from the standpoint of the driver - we are used to specific stoplight routines, and the irregularity may be a cause of great frustration to somebody stuck at a light when the other direction gets to go twice before he or she does. Helbing thinks that governments will have to accompany the new system with a large public relations campaign to keep drivers from going crazy.

At the very least, decentralized traffic lights are a drastic improvement over current systems. "What we don’t know is how big an advantage the new systems will be," Helbing says. "But all the facts point to decentralised traffic control. This will be the paradigm of the future."

I, for one, think we should get moving.

---

See the three strategies (optimized flow, stabilized flow, and combined) in action, albeit in German (would anybody like to translate?).

Play with this model and try to prevent a traffic jam!
