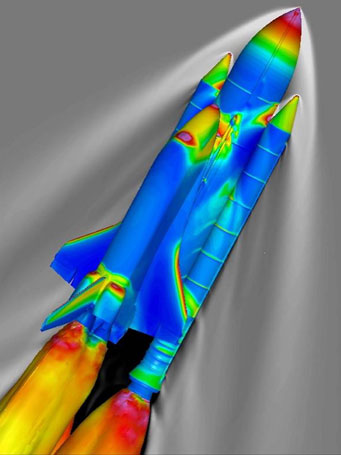

Indeed, this is what most supercomputers are up to: simulating climate change, the structure of proteins, or the dynamics of a supernova, while producing awesome pictures like this one:

An awesome picture of the ascending Space Shuttle and the pressure coefficients of the outside. Photo Credit: NASA Advanced Supercomputing Facility

Largely, supercomputers spend their time simulating large finite-element models or optimizing complex systems. One ability of supercomputers, however - the organization, management, and searching of large volumes of data - is very useful to disciplines often ignored by the large-scale computation community: the humanities.

In December 2008, the Department of Energy partnered with the National Endowment for the Humanities and donated one million CPU hours of time at the National Energy Research Scientific Computing Center. The NERSC is home to "Franklin," a Cray XT4 computer that ranks as the seventh-most powerful computer in the world, as well as other immensely powerful computers. Humanities researchers will use the computer for three different projects under the Humanities High Performance Computing Program, the first formally established partnership between the DOE and NEH.

What exactly do humanities researchers want to do with the computers? Two of the three projects focus on analyzing patterns - one looks for language-related patterns while the other probes all types of media for social patterns. The Perseus Digital Library Project, led by Gregory Crane of Tufts University in Medford, Mass., will use the NERSC's computers to analyze how the meanings of Greek and Latin words have changed over the centuries.

"High performance computing really allows us to ask questions on a scale that we haven’t been able to ask before. We’ll be able to track changes in Greek from the time of Homer to the Middle Ages. We’ll be able to compare the 17th century works of John Milton to those of Virgil, which were written around the turn of the millennium, and try to automatically find those places where Paradise Lost is alluding to the Aeneid, even though one is written in English and the other in Latin," says David Bamman, a senior researcher in computational linguistics with the Perseus Project.

Lev Manovich, Director of the Software Studies Initiative at the University of California, San Diego, will use the new computational power to analyze a much broader set of data - a huge collection of pictures, paintings, videos, and texts. His team is looking for cultural patterns; they're taking a sociological look at media and calling it Cultural Analytics.

"Digitization of media collections, the development of Web 2.0 and the rapid growth of social media have created unique opportunities to studying social and cultural processes in new ways. For the first time in human history, we have access to unprecedented amounts of data about people’s cultural behavior and preferences as well as cultural assets in digital form," says Manovich. He hopes to be able to identify global cultural patterns and processes, leading to a more analytical analysis of cultural phenomena.

The third project is run by David Koller, Assistant Director of the University of Virginia’s Institute for Advanced Technology in the Humanities in Charlottesville, Virginia, and has something of a different goal. Koller is assembling very detailed 3-dimensional digital images of historical statues and buildings - from Michaelangelo's David to Colonial Williamsburg, Virginia. His scans are accurate to a quarter of a millimeter, and therefore require a large amount of computing power to stitch together into a true 3-D image for analysis. Koller has also taken scans of fragments that have chipped off of old works of art and plans to re-assemble them with the help of the computer - something never before available to the humanities.

Digital Art: David Koller scans the Laocoon Statue at the Vatican Museum to get a detailed 3-D image of the sculpture. A single statue may require billions of data points, necessitating large computer power for synthesis into a real 3-D image. Photo Credit: Berkeley Lab Computing Sciences

A similar project run by the Rochester Institute of Technology aims to digitally store all of the Sarvamoola Granthas, a huge collection of palm-leaf documents from ancient India. Digitizing the Granthas will help preserve them as the original copies deteriorate. The RIT used Teragrid - a supercomputing system that is distributed over 11 sites throughout the United States and is capable of storing 30 petabytes of data for quick retrieval and archiving - to store the Grantha data. The program helps demonstrate the usefulness of such systems for all disciplines, not just the sciences.

Obviously, the humanities are not poised to take over much more supercomputing time - the lion's share of processor cycles are still dedicated to exotic scientific problems and will almost certainly stay there. As data mining needs for the humanities increase, however, we will almost certainly see art and literature applications running more frequently. At any rate, it's nice to see a bit of diversity.

Comments