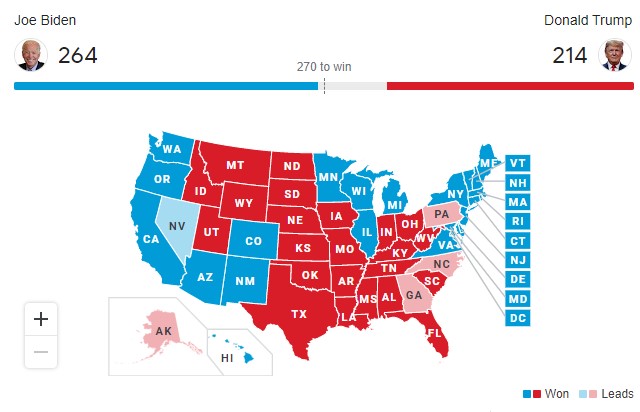

Trust in polls was already shaky after the 2016 prediction debacle, and 2020 hurt their standing even more. Democrats who went into Tuesday expecting a clear rebuke of President Trump did not get what supposedly scientific data promised them. There is no blowout. No takeover of the Senate. The Cboe VIX index of US stock market volatility, which had been up sharply for weeks because financial analysts believed the polls, dropped. They had assumed that former Vice-President Joe Biden would win in a rout, and that with a clear mandate his administration would mean more regulations, more taxes, and a stagnant economy even after the coronavirus pandemic is over.

What polls did to hopeful Democrats.

None of that happened.

Most still expect Biden to win, but instead of trouncing President Trump he will have the weakest mandate since President Clinton took office with 43 percent of the vote in 1992. He will have to cooperate and compromise with Republicans. That's good for the country, but this election was bad for polling organizations.

What went wrong with polls? The reality is they were never right

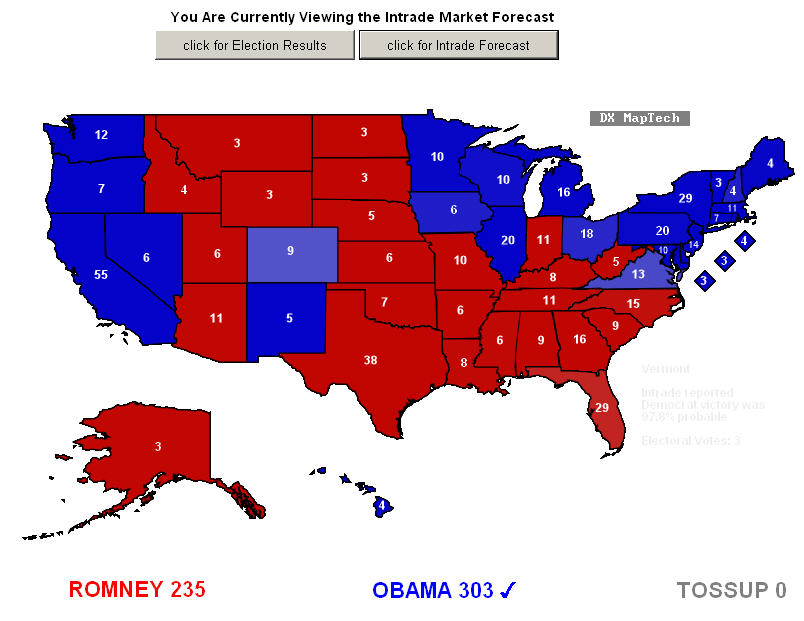

Just two elections ago we were assured that the age of scientific projections had arrived. Somewhere, someone who covers science at some media corporation declared polling was science, and it was all over my Twitter feed, repeated by people who really should know better. Yet their only evidence was that a baseball statistician who liked Senator Obama more than Senator Clinton in 2008 averaged polls to find that - surprise - Senator Obama was liked by more people than the media claimed. By 2012 he was at the New York Times throwing around meaningless numbers like a 73 percent chance of re-election for President Obama.

Meaningless precision sounds scientific, but anyone with a clue knew that there was a 100 percent chance he was winning re-election.

Nate Silver was right again in 2012. Everyone was, except maybe Karl Rove or whoever Fox News had on to claim Mitt Romney was winning in an upset. A European betting agency, which used nothing but how Europeans were betting on the US election and didn't look at polls at all, was as accurate as Silver the day before the election. Despite those glaring flaws, 'polling is scientific' rhetoric reached its apex.

I said then that, despite the results, polls were not accurate and that meant poll averaging was pointless.

I used a physics analogy: it is absolutely possible to have a completely wrong answer with 99.99 percent precision. It is also completely possible to get the right answer by guessing. If the methodologies used in polling were correct, we could predict congressional elections, yet such predictions are wildly inaccurate. Instead, as we learned in 2016 and again in 2020, election polls are only valid when the result is obvious well in advance.

Here are three reasons the media should stop promoting polls, just as they should stop promoting observational claims that pretend to be science.

1. Polls do not predict outcomes. In science, a mouse study or a statistical correlation linking some chemical or food to some negative or positive effect is placed into the "exploratory" section. A poll may be interesting, but it's not science any more than Harvard food frequency questionnaires or mouse studies linking everything to cancer are legitimate epidemiology.

In California, around the same time as my 2012 criticism of polls as science, few people told surveys they were anti-vaccine, yet California had more vaccine deniers than the rest of the country combined. The progressives on the coast, who claimed to be more educated than the rest of the state, could say they were not anti-vaccine because they couched their vaccine denial in the language of choice. Predictably, when I wrote about California's vaccine problem and how it was concentrated among progressives on the coast, I had academic statisticians trotting out surveys claiming that vaccine denial was just as strong on the right.

In surveys, it was. In actual behavior, it was systemic on the left. Alabama and Mississippi had nearly 100 percent compliance, while California was eventually forced to pass a law, which its Democratic governor did not want to sign, eliminating 'for any reason' exemptions because some schools on the coast had vaccination rates under 30 percent. That did not show up in surveys, but it showed up in CDC data, and it showed up in a whooping cough resurgence. Polls can lie; disease does not.

2. A confidence interval just tells you how likely it is the results are not bananas, and only if the sample is good. If a poll gives a candidate a 5 percent lead with a +/-3 margin of error at a 95 percent confidence level, it means that if the people who answered were a truly random sample, the method would miss the real number about one time in 20. It says nothing about whether the sample was random in the first place. And this year's actual results were so far outside the margin of error that a Scientific American editorial on politics could have been just as close.
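The arithmetic behind a "+/-3" figure shows both what it measures and what it leaves out. Below is a minimal Python sketch, assuming simple random sampling and the usual normal approximation; those assumptions, the hypothetical 1,067-person sample, and the 52-to-47 split are illustrative choices, not details of any real poll. About 1,067 respondents give roughly +/-3 points on one candidate's share, but the margin on the lead between two candidates in the same sample is close to twice that, so a 5-point lead does not clear it. Nothing in either formula knows who declined to answer the phone.

```python
import math

Z_95 = 1.96  # two-sided critical value of the normal distribution at 95 percent


def share_margin(p: float, n: int, z: float = Z_95) -> float:
    """Margin of error on one candidate's share p from a simple random sample of n."""
    return z * math.sqrt(p * (1 - p) / n)


def lead_margin(p1: float, p2: float, n: int, z: float = Z_95) -> float:
    """Margin of error on the lead p1 - p2 when both shares come from the same sample.

    Var(p1_hat - p2_hat) = (p1 + p2 - (p1 - p2) ** 2) / n, because the two
    shares are negatively correlated (a respondent can pick only one candidate).
    """
    return z * math.sqrt((p1 + p2 - (p1 - p2) ** 2) / n)


if __name__ == "__main__":
    n = 1067  # hypothetical sample size
    print(f"one share: +/-{100 * share_margin(0.5, n):.1f} points")
    print(f"the lead:  +/-{100 * lead_margin(0.52, 0.47, n):.1f} points")
```

Run as a script, it prints +/-3.0 points for one share and +/-6.0 points for the lead. Both numbers describe sampling error only; a sample that is skewed by who picks up the phone can be off by far more while reporting the same margin.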

Polls can be completely wrong for obvious reasons. Who answers the phone for a poll? People who like to take surveys on the phone, that's who. The 2016 election polling was just as ridiculous because the scorn toward Donald Trump was so high that people who intended to vote for him clearly didn't want the drama of admitting it, even to strangers on the phone. Though Democrats in media rushed to find a cause for their disappointment - an investigation into some emails, Russian money, more dumb people voting, whatever - the polls were simply wrong, and journalists who should know better relied on them too much. They were writing aspirational election outcome articles and pretending they had a data basis, just as they write up statistical correlations or mouse studies and imply they have human relevance.

It is easy to get a 95 percent confidence interval for a completely wrong answer. Run enough tests, and I can show with statistical significance that coin flips are not random. I can show that eating red meat both causes and prevents cancer.
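The coin-flip claim is the multiple-comparisons problem: run enough tests and some will cross the significance line by chance alone. Here is a minimal Python sketch, assuming an exact two-sided binomial test at the 0.05 level and 1,000 batches of 100 simulated fair flips (all hypothetical choices made for illustration). A few percent of perfectly fair batches still come out "significantly" unfair, and reporting only those would "prove" the coin is rigged.

```python
import math
import random

FLIPS = 100
# Probability of each possible head count for a fair coin, computed once.
PROBS = [math.comb(FLIPS, k) / 2**FLIPS for k in range(FLIPS + 1)]


def two_sided_p(heads: int) -> float:
    """Exact two-sided binomial p-value for a fair coin: the total probability
    of every outcome no more likely than the one observed."""
    observed = PROBS[heads]
    return min(1.0, sum(p for p in PROBS if p <= observed * (1 + 1e-9)))


def count_significant(batches: int = 1000, alpha: float = 0.05, seed: int = 2020) -> int:
    """Flip a fair coin FLIPS times per batch; count batches that 'fail' the test."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(batches):
        heads = sum(rng.random() < 0.5 for _ in range(FLIPS))
        if two_sided_p(heads) < alpha:
            hits += 1
    return hits


if __name__ == "__main__":
    hits = count_significant()
    print(f"{hits} of 1000 fair batches look rigged at p < 0.05")
```

Every batch is fair, so every hit is a false positive. With 100 flips the test rejects at 39 heads or fewer, or 61 or more, which happens to a fair coin about 3.5 percent of the time; the exact count printed depends on the seed.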

3. You still have to play the game. In the run-up to the 'polling is science' fetish of 2008, a movie called "Swing Vote" was released. In it, one clueless white guy was going to determine the outcome of the election. In the film, the vote was tied in the one state that would decide the Electoral College, but it raises a fine thought experiment: if polling is accurate, couldn't we just let 1,000 people vote? Or one? We can't, because even the most accurate secret-sauce polling method was wrong by 21 electoral votes and 1,100,000 people in 2008. And that was an easy year, as was 2012.

In 2016 and in 2020 it was back to its terrible normal.
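The "let 1,000 people vote" thought experiment can be put in numbers. Below is a minimal Python sketch, assuming a two-candidate race and a perfectly random sample of voters (an idealization no phone poll reaches); the 50.5 percent share and the sample sizes are hypothetical. It computes the exact binomial probability that the sample hands the win to the candidate who actually lost. With 1,001 randomly chosen voters in a 50.5 to 49.5 race, that happens a bit more than a third of the time; even at 55 to 45 it is rare but not zero.

```python
import math


def wrong_winner_probability(true_share: float, sample_size: int) -> float:
    """Chance that a perfectly random sample of voters picks the true loser.

    true_share is the real winner's share of a two-candidate vote (above 0.5).
    sample_size must be odd so the sample cannot tie. Terms are summed in log
    space with lgamma to avoid overflow in the binomial coefficients.
    """
    if sample_size % 2 == 0:
        raise ValueError("sample_size must be odd to rule out ties")
    n, p = sample_size, true_share
    log_n_fact = math.lgamma(n + 1)
    total = 0.0
    # The real winner loses the sample when they get n // 2 votes or fewer.
    for k in range(n // 2 + 1):
        log_term = (
            log_n_fact
            - math.lgamma(k + 1)
            - math.lgamma(n - k + 1)
            + k * math.log(p)
            + (n - k) * math.log(1 - p)
        )
        total += math.exp(log_term)
    return total


if __name__ == "__main__":
    for n in (1, 101, 1001, 10001):
        print(n, round(wrong_winner_probability(0.505, n), 3))
```

The error shrinks as the sample grows, but it never reaches zero, and this is the best case, with no one hanging up on the pollster. A sample estimates the vote; it does not replace it.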

You don't send your utility player up to bat against a relief pitcher and let that determine the baseball game. You also don't skip watching because Big Papi predicts who is going to win; sports fans know better. You have to play the game. It is time journalists remembered that about elections as well.

"Everyone believes the data except the collector. No one believes the model except the modeler" - old statistics saying.
