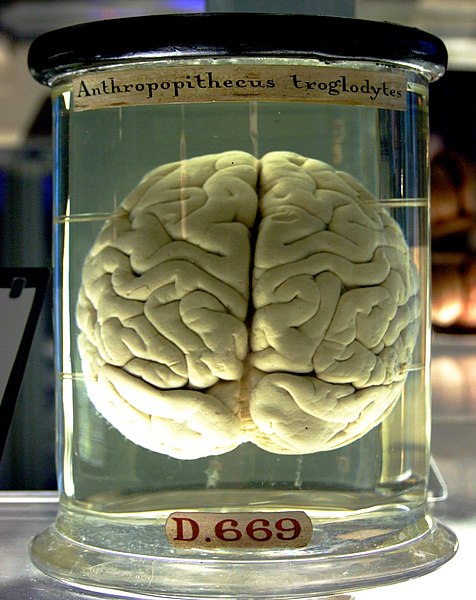

The ultimate cause of human behavior is the mind. The mind, an emergent property of the workings of collections of neurons, hormones, proteins, and other biochemical agents, controls everything a human does. Intended and subconscious actions alike are controlled by this human analogue of a central processing unit. Cognitive models in the social sciences, particularly in psychology and economics, take this view of the human decision maker seriously and apply it to political, economic, and other social situations.

One of the major ideas of the subject is that people are equipped with a cognitive “toolbox” of tools that help them solve problems in different environments. These tools take the form of heuristics, or simple models, of a situation. Robert Axelrod, in his 1973 paper “Schema Theory: An Information Processing Model of Perception and Cognition,” called his version of this model a schema. Its components were an input of information about a particular case, including what type of case or situation it is; an interpretation of the case at hand and a complete specification of it; and an accessibility check to see how closely the schemas (or heuristics) currently available in one’s “toolbox” fit the case, which leads to slight adjustments of the schema to fit the case better if warranted.

Herbert Simon anticipated such a theory in his 1955 paper, “A Behavioral Model of Rational Choice.” In it he claimed that people confronted with a situation have available to them a set A of strategies, or actions, but will consider only a limited number of them, a subset B of A. There could be many reasons for this, but one is likely a lack of information about which actions are available. For instance, most people playing chess do not realize how many moves are available to them at any given point in the game. The objective number of possible moves far exceeds their limited set of perceived options.

Simon mentions that one of the most important ideas in a cognitive-model approach to human decision making is how we model the payoff functions of individuals. Classically, a payoff function is a weighted sum over the outcomes of all the options, and individuals are presumed to make a “rational” decision about which action to take based on this information. Simon suggests that instead of a “real-valued” payoff function, that is, one that has a single number as its output, we use a “vector-valued” payoff function. The value of such a payoff function is that it can account for sub-payoffs within the total payoff of a given action that don’t sum together. For instance, in his example, if one is deciding between two jobs in two different cities, two of the payoff components could be 1) salary and 2) weather. It is hard to add these two payoffs together. Instead, a total payoff may contain both pieces of information as they are.
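The job example can be sketched in a few lines of Python. This is only an illustration of the idea, not Simon’s own formalism: the `Payoff` type, the numbers, the 0-10 weather scale, and the two comparison rules (`dominates` and `acceptable`) are all assumptions made here for the sake of the example.

```python
from typing import NamedTuple


class Payoff(NamedTuple):
    salary: float   # annual salary (hypothetical figures)
    weather: float  # subjective weather score on an assumed 0-10 scale


def dominates(a: Payoff, b: Payoff) -> bool:
    """True if a is at least as good as b on every component and better on one."""
    at_least_as_good = all(x >= y for x, y in zip(a, b))
    strictly_better = any(x > y for x, y in zip(a, b))
    return at_least_as_good and strictly_better


def acceptable(p: Payoff, aspiration: Payoff) -> bool:
    """True if every component meets the actor's minimum acceptable level."""
    return all(x >= y for x, y in zip(p, aspiration))


job_a = Payoff(salary=70_000, weather=4)
job_b = Payoff(salary=60_000, weather=8)
minimum = Payoff(salary=55_000, weather=6)  # assumed aspiration levels

# Neither job beats the other on both salary and weather,
# so the vector payoff alone does not rank them.
neither_dominates = not dominates(job_a, job_b) and not dominates(job_b, job_a)

# Against the assumed minimums, only job_b clears both thresholds.
good_enough = [acceptable(job, minimum) for job in (job_a, job_b)]
```

Collapsing the two components into one number would require choosing weights for salary against weather. Keeping them as a vector avoids that choice, at the cost of sometimes leaving two options unranked.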

A vector-valued payoff function would also have to include the history of past actions in similar situations. That is, it would need to be history-dependent. To use another of Simon’s examples, you won’t know if you like cheese until you have tasted it.

Axelrod mentions that people often have neither the time nor the computing power to evaluate every option available to them. Simon also discusses the problem of limited computing power. A good cognitive model must take this into account by incorporating these limits, or bounds, on an actor’s rationality.
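A rough sketch of such a bounded decision rule follows. It is one possible reading of these ideas rather than a model taken from either paper: the function name `satisfice`, the aspiration level, the evaluation budget, and the toy payoff are all hypothetical.

```python
from typing import Callable, Iterable, Optional, TypeVar

T = TypeVar("T")


def satisfice(
    considered: Iterable[T],
    payoff: Callable[[T], float],
    aspiration: float,
    max_evaluations: int,
) -> Optional[T]:
    """Return the first considered option whose payoff meets the aspiration.

    At most max_evaluations options are examined. If none is good enough,
    the best option seen so far is returned (None if nothing was examined).
    """
    best: Optional[T] = None
    best_value = float("-inf")
    for count, option in enumerate(considered):
        if count >= max_evaluations:
            break  # out of time or computing power
        value = payoff(option)
        if value >= aspiration:
            return option  # good enough, stop searching
        if value > best_value:
            best, best_value = option, value
    return best


# Toy example: the full set A has 20 actions, each worth its own number.
all_actions = list(range(20))   # set A
considered = [3, 7, 12]         # subset B, the options the actor perceives

choice = satisfice(considered, payoff=float, aspiration=6.0, max_evaluations=2)
optimum = max(all_actions)      # what an unbounded optimizer over A would pick
```

Here the actor settles on 7, the first perceived option that clears the aspiration level, even though 19 is available in the full set A and 12 sits unexamined in B.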

The so-called “fight or flight reflex” is a good example of the type of heuristic discussed in the literature. If you are being chased by a bear, you don’t have a lot of time to think about all of your options. Instead, you simplify your options down to just two: run, or stay and fight. One of those two may get you killed. But if you happened to pick the one that kept you alive, you are more likely to try it again the next time you are in a similar situation than to try the untested alternative. Thus, in the ordering of strategies, the tried and true trumps the unknown.

Another major component of cognitive models is loss or risk aversion. In “Loss Aversion in Riskless Choice: A Reference-Dependent Model,” Amos Tversky and Daniel Kahneman discuss the asymmetry of individual choice when faced with loss versus gain. People behave as though they are more worried about losing what they have than about gaining more. Tversky and Kahneman mention that such loss aversion can hamper situations such as negotiations if the outcomes of the negotiation are framed as losses. For example, it matters to people whether the surgery they are about to undergo is described as having a 90% survival rate or a 10% mortality rate. These are just different ways of stating the same fact, and Rational Choice Theory claims they are equivalent and that actors wouldn’t care which way the information was presented to them. The trouble is that the evidence says they do care.
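The asymmetry between losses and gains can be illustrated with a simple reference-dependent value function. This is a minimal sketch, assuming a piecewise-linear form and a loss-aversion coefficient of 2.0; both are illustrative choices made here, not the specification from the paper.

```python
def reference_dependent_value(
    outcome: float, reference: float, loss_aversion: float = 2.0
) -> float:
    """Value of an outcome measured as a change from a reference point.

    Gains count at face value; losses are scaled up by loss_aversion
    (an assumed coefficient, chosen for illustration only).
    """
    change = outcome - reference
    if change >= 0:
        return change
    return loss_aversion * change


# Starting from 100, a gain of 10 and a loss of 10 are not mirror images.
gain_value = reference_dependent_value(110, reference=100)  # 10
loss_value = reference_dependent_value(90, reference=100)   # -20.0
```

Because value depends on the reference point, describing the same outcome as a loss rather than a forgone gain changes how heavily it weighs on the decision.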

Cognitive choice models prove to be a powerful refutation of the classical theory of the “rational actor”. In classical theory, human beings take the entire set of objective options, sift clearly through them, weigh the differing payoffs assigned to each, and take the action that results in the greatest payoff to the individual. Cognitive models do away with much of this theory. Simon clearly states that people throw away much of the information available to them to simplify the calculations involved in a given situation. Axelrod also mentions that the perceived relevance of the information entering one’s calculations determines much of what is and is not thrown away, but that this judgment of relevance may not always be accurate.

That is, people using a heuristic that has worked previously in a similar situation will often toss out information relevant to the current case because it doesn’t fit their preconceived model of action. This type of “data dumping” can result in outcomes contrary to the individual’s interests. Tversky and Kahneman make it clear that people are often more worried about losses than about gains, and that this can skew their payoff functions and make their actions seem less rational to an outside observer. Upon reflection, those actions may even seem irrational to the participants themselves.

Much of this discussion may sound negative to the reader, since cognitive models predict that individuals will, and often do, make erroneous decisions based on false assumptions. Amos Tversky and Daniel Kahneman discuss this concept to some degree in their 1986 paper, “Rational Choice and the Framing of Decisions.” They argue that, because cognitive models don’t allow for much of the rationalizing behavior that is necessary to reach an “optimum” solution to a given case, “no theory of choice can be both normatively adequate and descriptively accurate.” This means that cognitive models are best used in positive theories about human behavior, and it suggests that the “rational actor model” may be best suited to normative theories of human behavior.

If we want to “get to the bottom” of why people make the decisions they make in political and economic situations, cognitive models provide a great leap forward in that direction. They offer a clear system of evaluation that an individual could actually use, one that is practical and realistic, though it does not always result in an “optimal” outcome. It may indeed be a “good fit” to how humans actually make choices.

Humans are not optimizers; they're satisficers. So, don't call me rational!

References

- Axelrod, Robert. 1973. “Schema Theory: An Information Processing Model of Perception and Cognition.” The American Political Science Review, Vol. 67, No. 4, 1248-1266.

- Simon, Herbert A. 1955. “A Behavioral Model of Rational Choice.” The Quarterly Journal of Economics, Vol. 69, No. 1, 99-118.

- Tversky, Amos and Kahneman, Daniel. 1981. “The Framing of Decisions and the Psychology of Choice.” Science, New Series, Vol. 211, No. 4481, 453-458.

- Tversky, Amos and Kahneman, Daniel. 1986. “Rational Choice and the Framing of Decisions.” The Journal of Business, Vol. 59, No. 4, Part 2: The Behavioral Foundations of Economic Theory, S251-S278.

- Tversky, Amos and Kahneman, Daniel. 1991. “Loss Aversion in Riskless Choice: A Reference-Dependent Model.” The Quarterly Journal of Economics, Vol. 106, No. 4, 1039-1061.
