Fink and a team at Caltech, the U.S. Geological Survey and the University of Arizona are developing software, along with a robotic test bed, that can mimic a field geologist or astronaut. The software, they say, will allow a robot to think on its own.

The way it works now, engineers command a rover or spacecraft to carry out certain tasks and then wait for them to be executed. They have little flexibility to change their game plan as situations change, for example to image a landslide or cryovolcanic eruption as it happens, or to investigate a methane outgassing event.

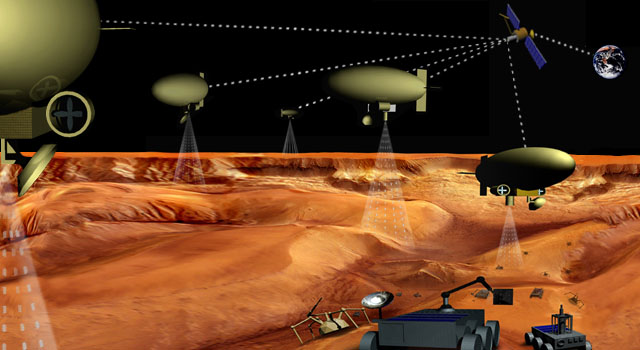

Orbiter, airblimps, rovers and robots working together. Credit: JPL

"In the future, multiple robots will be in the driver's seat," says Fink. The robots he envisions would share information in almost real time. This type of exploration may one day be used on a mission to Titan, Mars and other planetary bodies. Current proposals for Titan would use an orbiter, an air balloon and rovers or lake landers.

In this mission scenario, an orbiter would circle Titan with a global view of the moon, with an air balloon or airship floating overhead to provide a bird's-eye view of mountain ranges, lakes and canyons. On the ground, a rover or lake lander would explore the moon's nooks and crannies. The orbiter would "speak" directly to the air balloon and command it to fly over a certain region for a closer look. The balloon, in turn, would be in contact with several small rovers on the ground and command them to move to areas identified from overhead.
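The command chain in this scenario can be sketched in a few lines of Python. This is only an illustration of the orbiter-to-balloon-to-rover hierarchy described above, not the team's software: the `Agent` class, its `dispatch` method, the `build_fleet` helper and the robot names are all hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class Agent:
    """One robot in the hierarchy (hypothetical model for illustration)."""
    name: str
    tier: str  # "orbit", "air" or "ground"
    subordinates: list[Agent] = field(default_factory=list)
    orders: list[str] = field(default_factory=list)

    def dispatch(self, target: str) -> list[str]:
        """Record the target, then hand it to every robot one tier down.

        Returns the names of all agents the order reached, top tier first.
        """
        self.orders.append(target)
        reached = [self.name]
        for sub in self.subordinates:
            reached.extend(sub.dispatch(target))
        return reached


def build_fleet() -> Agent:
    """Assemble an assumed fleet: one orbiter, one balloon, two rovers."""
    rovers = [Agent("rover-1", "ground"), Agent("rover-2", "ground")]
    balloon = Agent("balloon", "air", subordinates=rovers)
    return Agent("orbiter", "orbit", subordinates=[balloon])


orbiter = build_fleet()
# The orbiter picks a region from its global view; the order cascades down.
print(orbiter.dispatch("region of interest"))
```

In this toy model the order only flows downward. The scenario described here also has information flowing back up, from the rovers to the balloon and orbiter, which the sketch leaves out.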

"This type of exploration is referred to as tier-scalable reconnaissance," says Fink. "It's sort of like commanding a small army of robots operating in space, in the air and on the ground simultaneously."

A rover might report that it is seeing smooth rocks in its vicinity, while the airship or orbiter could confirm that the rover is indeed in a dry riverbed. Current missions, by contrast, focus only on a global view from far above and cannot provide local-scale information to tell the rover that it is sitting in the middle of a dry riverbed.

A current example of this type of exploration can best be seen at Mars with the communications relay between the rovers and orbiting spacecraft like the Mars Reconnaissance Orbiter. However, that information is just relayed and not shared amongst the spacecraft or used to directly control them.

"We are basically heading toward making robots that command other robots," says Fink, who is director of Caltech's Visual and Autonomous Exploration Systems Research Laboratory, where this work has taken place.

"One day an entire fleet of robots will be autonomously commanded at once. This armada of robots will be our eyes, ears, arms and legs in space, in the air, and on the ground, capable of responding to their environment without us, to explore and embrace the unknown," he adds.

Papers describing this new approach to exploration are published in the journal Computer Methods and Programs in Biomedicine and in the Proceedings of the SPIE.

For more information on this work, visit http://autonomy.caltech.edu.
