The number of scientists is increasing along with the number of scientific journals and published papers, the latter two thanks in large part to the rise of electronic publishing. Scientists and other researchers are finding it more difficult than ever to zero in on the published literature that is most valuable to them.

A team of researchers from Northwestern University says it has developed a mathematical method to rank scientific journals according to quality.

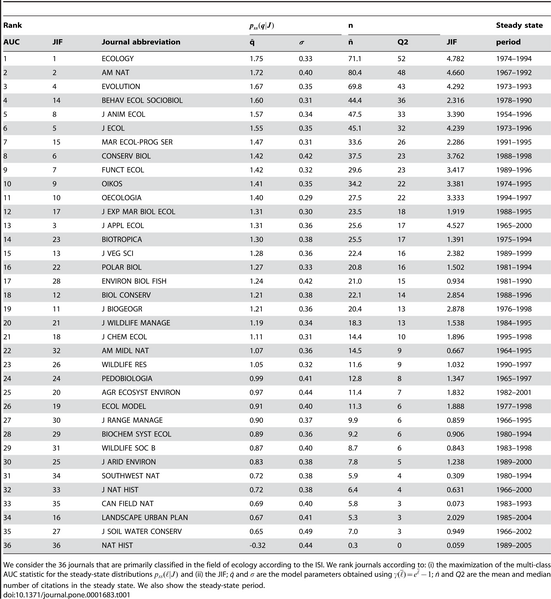

The team analyzed the citation data of nearly 23 million papers that appeared in 2,267 journals representing 200 academic fields and that spanned the years from 1955 to 2006; their analysis produced 200 separate tables of journal rankings by field.

“Trying to find good information in the literature can be a problem for scientists,” said Luís A. Nunes Amaral, associate professor of chemical and biological engineering in Northwestern’s McCormick School of Engineering and Applied Science, who led the study. “Because we have quantified which journals are better, now we can definitively say that the journal in which one publishes provides reliable information about the paper’s quality. It is important to grasp a paper’s quality right away.”

Amaral, Michael J. Stringer, lead author of the paper and a graduate student in Amaral’s research group, and Marta Sales-Pardo, co-author and a postdoctoral fellow, developed methods to examine the enormous number of published papers and to make sense of them. For each of the 2,267 journals, they charted the citations each paper received across a certain span of years and then developed a model of that data, which allowed the researchers to compare journals.

The researchers’ model produced bell curves for the distribution of “quality” of the papers published in each journal. For each field, all the bell curves for the journals then were compared, which resulted in the journal rankings. The field of ecology, for example, had 36 journals, with Ecology ranked highest and Natural History ranked lowest.
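To make the idea of comparing bell curves concrete, here is a minimal sketch in Python. It is not the researchers' actual model, whose details this article does not give. It assumes, purely for illustration, that a paper's "quality" can be proxied by log10(citations + 1), that each journal's values follow a normal curve, and that journals are ranked by the mean of that curve. The function names and the citation counts are hypothetical.

```python
import math
from statistics import mean, stdev


def fit_quality(citations):
    """Fit a normal ("bell") curve to q = log10(citations + 1).

    Assumption for illustration only: q stands in for paper "quality".
    Returns the (mean, standard deviation) of q for one journal.
    """
    q = [math.log10(c + 1) for c in citations]
    return mean(q), stdev(q)


def prob_higher(a, b):
    """Probability that a paper drawn from curve a has higher q than one from curve b.

    Each argument is a (mean, standard deviation) pair. The difference of two
    independent normal variables is itself normal, which gives the formula below.
    """
    (mu_a, sd_a), (mu_b, sd_b) = a, b
    z = (mu_a - mu_b) / math.sqrt(sd_a ** 2 + sd_b ** 2)
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def rank_journals(citations_by_journal):
    """Rank journals from highest to lowest mean q; also return the fitted curves."""
    fits = {name: fit_quality(c) for name, c in citations_by_journal.items()}
    ranking = sorted(fits, key=lambda name: fits[name][0], reverse=True)
    return ranking, fits


# Made-up citation counts for three hypothetical journals in one field.
toy_field = {
    "Journal A": [40, 55, 12, 80, 33, 21],
    "Journal B": [3, 0, 7, 1, 5, 2],
    "Journal C": [10, 15, 4, 22, 9, 13],
}

ranking, fits = rank_journals(toy_field)
print(ranking)
print(prob_higher(fits["Journal A"], fits["Journal B"]))
```

In this toy field the ranking comes out as Journal A, Journal C, Journal B. The pairwise probability expresses the same idea as a higher-ranked journal being more likely to contain the higher-impact paper.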

“The higher a journal is ranked, the higher the probability of finding a high-impact paper published in that journal,” said Amaral.

Amaral and his team found that the time scale for a published paper’s complete accumulation of citations, a gauge for determining the full impact of the paper, can range from less than one year to 26 years, depending on the journal. Using their new method, the Northwestern researchers can estimate the total number of citations a paper in a specific journal will get in the future and thus determine, right now, the paper’s likely impact in its field. This is the kind of information university administrators and funding agencies should find helpful when they are evaluating faculty members for tenure and researchers for grant awards.

“This study is just one example of how large datasets are allowing us to gain insight about systems that we could only speculate about before,” said Stringer. “Understanding how we use information and how it spreads is an important and interesting question. The most surprising thing to me about our study is that the data seem to suggest that the citation patterns in journals are actually simpler than we would have expected.”

Article: Michael J. Stringer, Marta Sales-Pardo, Luís A. Nunes Amaral, Effectiveness of Journal Ranking Schemes as a Tool for Locating Information, PLoS ONE 3(2): e1683. doi:10.1371/journal.pone.0001683
