Tidy or not, the two-digit number of matter fields in the standard model may suggest that these bodies are not, in fact, elementary. What does "elementary" mean, after all? Well, the word has several meanings: something elementary is something simple; something that has no structure and cannot be divided into simpler parts; but also something that is the basic building block of more complex structures. Do we really need twenty-four different elementary building blocks to generate the universe?

That question is at the root of searches for a substructure of quarks and leptons. Of course, to investigate the structure of things that we consider point-like, we need the highest-energy probes available. The hope is that we will experience something like what Lord Rutherford did a hundred years ago: by shooting alpha particles at a gold foil, he saw some of them deflected at very large angles, as shown in the figure on the right. In Rutherford's own words, "It was quite the most incredible event that ever happened to me in my life. It was almost as incredible as if you fired a 15-inch shell at a piece of tissue paper and it came back and hit you."

Today's analogue of the back-scattering of alpha particles, in the proton-antiproton collisions at 2 TeV provided by the Tevatron collider, is an excess of events with very large energy emitted at a large angle from the beams. The similarity may not appear so clear to you, but please consider: the momentum transfer from the gold atom to a back-scattered alpha particle is very large, and it indicates a strong force acting on the projectiles, produced by some hard substructure within the atom. Similarly, the large energy of the products of a hadron collision, radiated transversely from the incoming beams, may indicate that we are starting to see some substructure in the quarks.

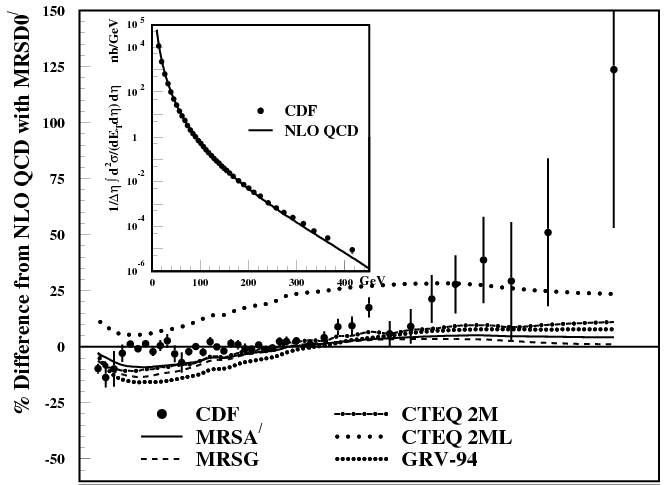

In 1996 CDF found in the Run I data a large excess of events with a jet of hadrons emitted with very high energy. You can see the excess in the original figure from the 1996 paper below.

The excess of high-Et jets (which you can see in the form of an upward deviation of the black points with error bars from the line at zero) could be the result of an underestimate of the cross-section for "normal" quantum-chromodynamical processes, or the first footprint of quark compositeness. The effect made headlines back then, but it eventually died away once it was discovered that by tweaking the momentum distribution of gluons in the proton the predicted number of energetic collisions could be boosted up to match the observations.

I can almost hear some readers screaming profanities as they leave this site in rage, after fighting with their guiltless neurons to make any sense of the above sentence. For those who are left, an explanation of what I mean above is in order.

When you collide a proton and an antiproton, each of them possessing an energy of 900 giga-electronvolts (the beam energy in Run I), you are not supposed to see the full 1800 GeV in your detector, transported by new particles flying out in all directions. That is, you expect that most of the incoming energy will be retained by the incoming proton and antiproton, or what has become of them after the collision; these energetic remnants will escape through the same apertures that allowed the projectiles in. Only a smaller, variable amount of energy will be emitted at large angles from the beams, and will thus be detectable by your instrument.

The reason for the above is that what actually collide are two constituents: a quark and an antiquark, or a gluon and a quark, and so on. And these carry only a small fraction of the energy of the proton which contains them. How much energy each of them is expected to carry is encoded in the so-called "parton distribution functions", or "momentum distributions".
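To put rough numbers on this, here is a minimal sketch of how the energy available to the colliding constituents follows from the fractions of momentum they carry. It assumes massless partons moving along the beam direction, so that the energy of the hard collision is the square root of the product of the two fractions, times the full 1800 GeV. The function name and the sample fractions are illustrative choices of mine, not values taken from any measured parton distribution.

```python
import math

SQRT_S_GEV = 1800.0  # Run I proton-antiproton collision energy quoted in the text


def parton_collision_energy(x1: float, x2: float, sqrt_s: float = SQRT_S_GEV) -> float:
    """Energy (GeV) of the parton-parton collision, neglecting parton masses.

    x1 and x2 are the fractions of the proton and antiproton momentum
    carried by the two colliding constituents.
    """
    if not (0.0 <= x1 <= 1.0 and 0.0 <= x2 <= 1.0):
        raise ValueError("momentum fractions must lie between 0 and 1")
    return math.sqrt(x1 * x2) * sqrt_s


if __name__ == "__main__":
    # Illustrative fractions only (assumption): not drawn from a real distribution.
    for x1, x2 in [(0.05, 0.05), (0.2, 0.1), (0.5, 0.5)]:
        energy = parton_collision_energy(x1, x2)
        print(f"x1={x1:.2f}  x2={x2:.2f}  ->  {energy:.0f} GeV in the hard collision")
```

Even when each constituent carries half of its parent's momentum, which is a rare occurrence, only half of the total energy goes into the hard collision.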

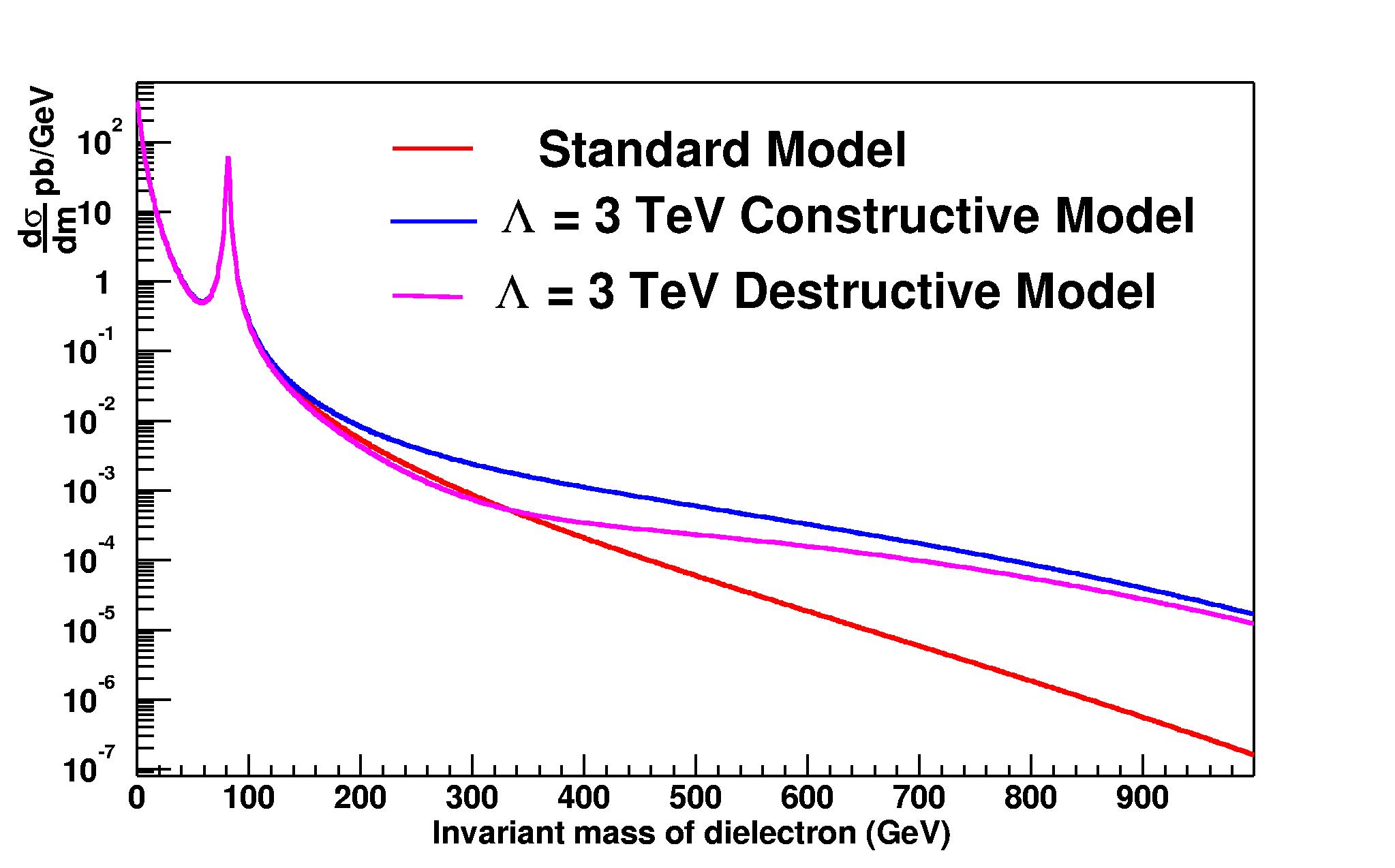

Now, it is not necessary to look for high-energy jets of hadrons to search for compositeness. It turns out that the modifications to the theory due to the structure of what we call "elementary" today are detectable by observing any kind of process yielding large energy emitted at large angle from the beams, because these very high-momentum-transfer collisions get their rate modified from the original standard model rate. Take the graph below as an example.

In the figure, you can see that the invariant mass of pairs of leptons expected by the DZERO collaboration has a very different high-mass shape from the standard model expectation (red curve) if one assumes that fermions are composite (purple and blue lines). The modifications to the equations due to compositeness may in fact either increase or decrease the rate of high-energy processes; in both cases, they are still detectable by comparing expectations with the observed spectrum.
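Why the rate can go either way is easiest to see in a schematic formula. The following is a generic sketch of how a contact-interaction term is usually added to the standard model spectrum, not the exact expression used by DZERO: the symbols $\Lambda$ (the compositeness scale), $\eta$ (a sign), and the functions $I(m)$ and $C(m)$ are my own notation.

```latex
\frac{d\sigma}{dm} \;=\; \frac{d\sigma_{\mathrm{SM}}}{dm}
  \;+\; \frac{\eta}{\Lambda^{2}}\, I(m)
  \;+\; \frac{1}{\Lambda^{4}}\, C(m),
\qquad \eta = \pm 1
```

The term proportional to $1/\Lambda^{2}$ comes from the interference between the standard model amplitude and the new one, and its sign depends on $\eta$: that is what allows the rate to be either enhanced or depleted. The term proportional to $1/\Lambda^{4}$ is the square of the new amplitude alone and can only add events.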

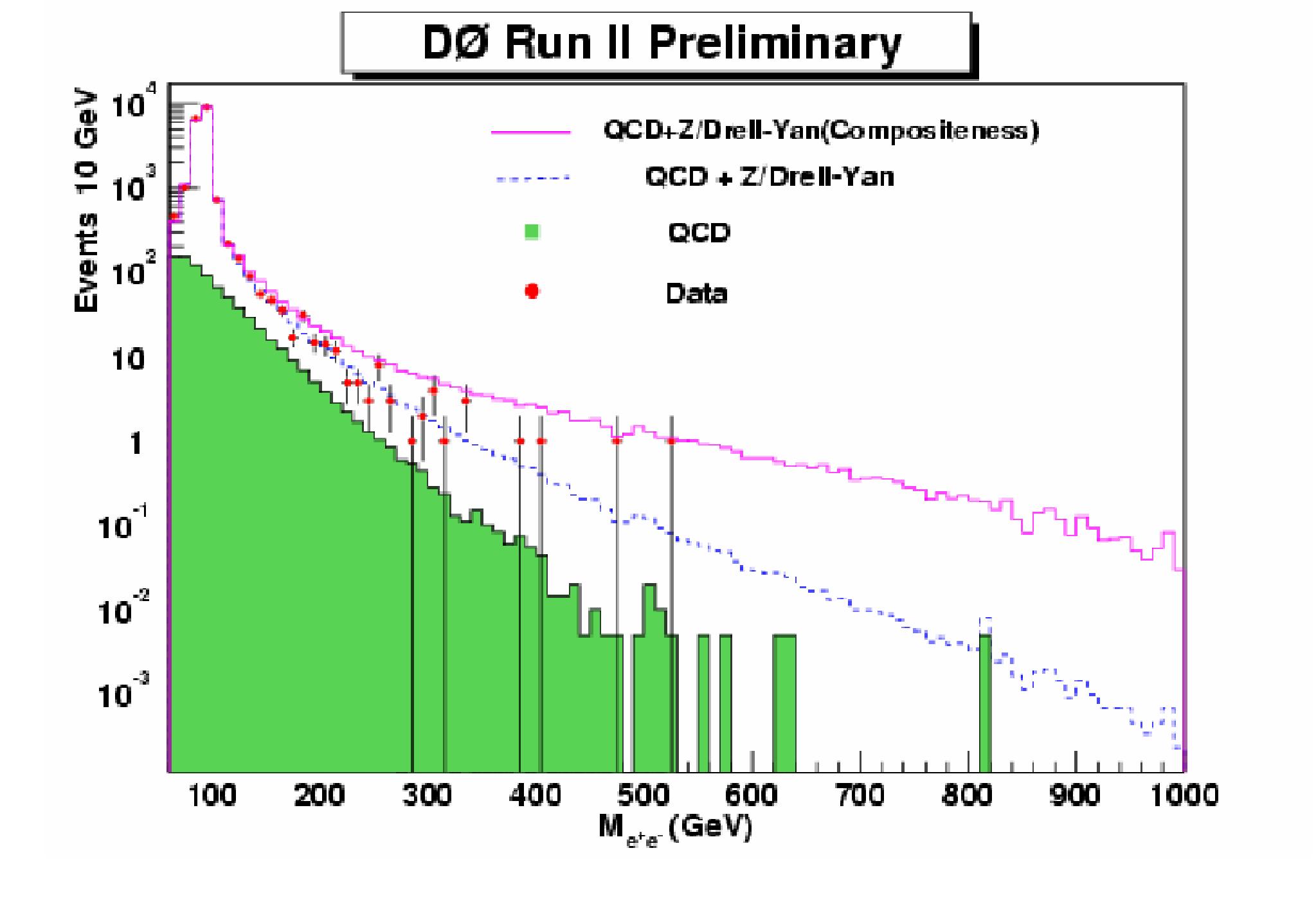

DZERO analyzed a very large dataset of electron-positron pairs, and recently produced a comparison of the mass spectrum with theory, extracting limits on the "scale" of compositeness, that is, the energy above which the effect becomes important. The dielectron mass distribution extracted by DZERO is shown below.

As you can see, the data are well represented by the sum of backgrounds from QCD and Drell-Yan processes as predicted by the standard model (the dashed blue line). There is neither an excess nor a deficit in any region of the spectrum, and this may be used to set limits on the energy scale at which compositeness would start to make itself felt, which is inversely proportional to the distance scale at which a hypothetical substructure of quarks and leptons would be present. In the figure, a particular possibility for compositeness is shown by the purple line, which is clearly inconsistent with the data.
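The inverse relation between energy scale and distance scale can be made concrete with the constant ħc, which is about 0.1973 GeV times a femtometer. The sketch below is an order-of-magnitude conversion only; the function name and the three sample energies are illustrative assumptions of mine, not limits quoted by DZERO.

```python
HBAR_C_GEV_FM = 0.1973269804  # hbar * c, in GeV * femtometers


def length_scale_fm(energy_gev: float) -> float:
    """Distance scale (fm) roughly probed by an energy scale (GeV): hbar*c / E."""
    if energy_gev <= 0.0:
        raise ValueError("energy scale must be positive")
    return HBAR_C_GEV_FM / energy_gev


if __name__ == "__main__":
    # Round illustrative energies (assumption): MeV-, GeV- and TeV-class probes.
    for label, energy_gev in [("5 MeV", 0.005), ("1 GeV", 1.0), ("1 TeV", 1000.0)]:
        print(f"{label:>6}  ->  about {length_scale_fm(energy_gev):.3g} fm")
```

With this conversion, a scale of 1 TeV corresponds to roughly 2e-4 fm, that is, about 2e-19 meters.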

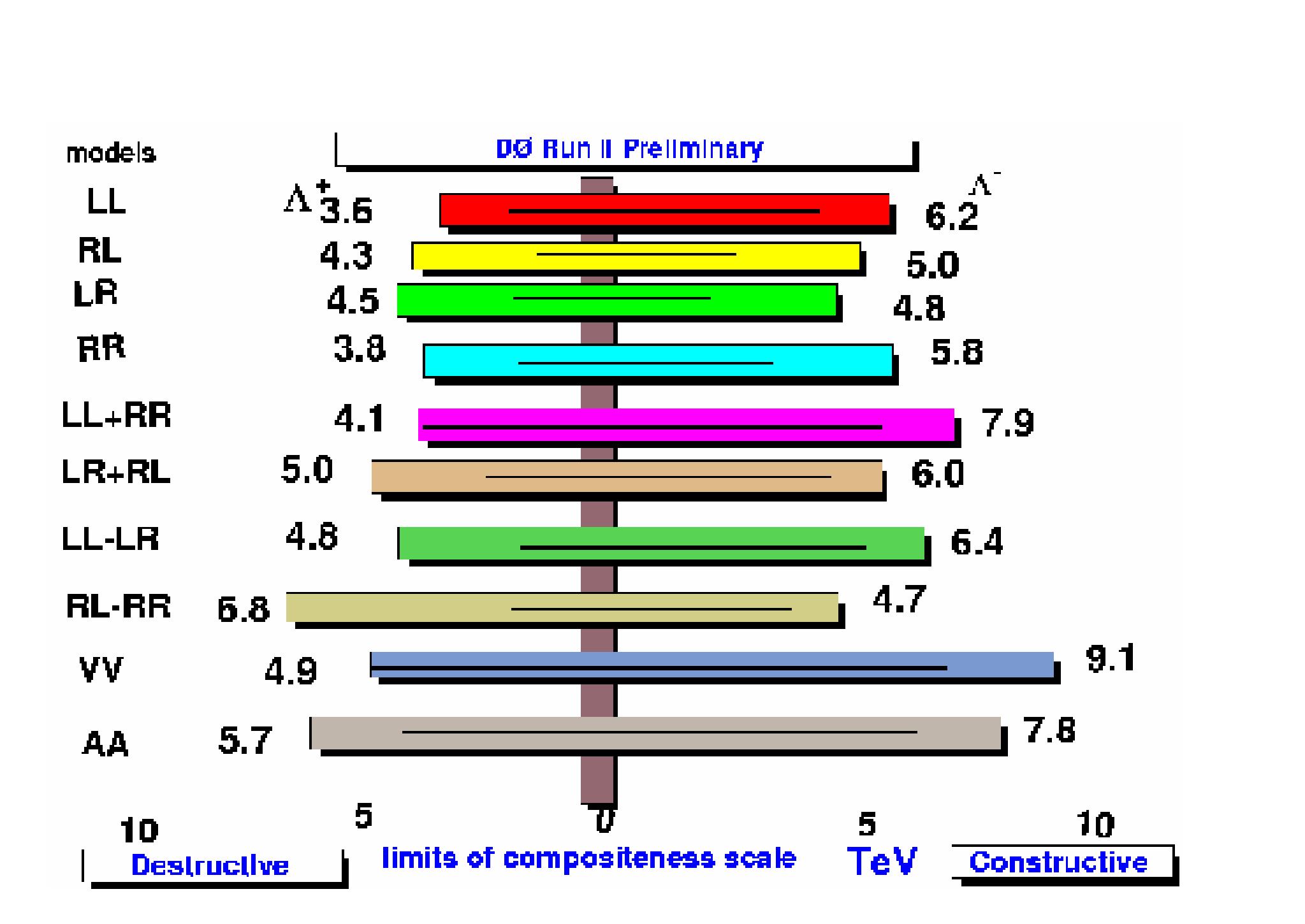

A summary of the limits obtained by DZERO on a variety of different models of compositeness is shown on the left. The bars show the range of the compositeness scale which is excluded for constructive (right) or destructive (left) interference between the terms introduced in the interactions by the new physics process and the standard model terms. I will omit discussing the details of each of the models here, but if you are interested please have a look at the public document made available on the DZERO site.

Will we ever discover that quarks and leptons are not elementary? That would indeed be a revolution in physics. I may be old-fashioned, but I like the standard model too much to believe that its beauty is an artifact, an accidental arrangement. But the search for compositeness should and will continue. The Large Hadron Collider will push the investigation to distance scales an order of magnitude smaller. Now, we found a structure in the atom by using MeV-energy projectiles; and we found a structure in the proton by using GeV-energy projectiles. Maybe using TeV-energy probes we will again strike gold. Maybe.
