The Higgs boson itself is even older, having been hypothesized by a few theorists as far back as 1964 to explain an apparent paradox with massive vector bosons, particles that had to be massless in order to not violate a symmetry principle that could in no way be waived.

With that huge success in the bag, the LHC experiments have not paused, though. After all, as predictive and powerful as the Standard Model is, it is what its name says: a model. It may or may not be a perfect representation of reality.

In particular, physicists today believe that there should be things that the Standard Model does not predict or contain: new particles, maybe ones enabling a unification of all forces of nature, including gravity. Or maybe a candidate to explain dark matter in the universe, another unexplained puzzle which, admittedly, might well have a completely different explanation from ones concerning subnuclear physics.

Self coupling

Because of the work-in-progress nature of comparing a model to the data, physicists have continued to investigate the Higgs boson since 2012, trying to ascertain that its properties are exactly those that the Standard Model predicts. In particular, if the Higgs boson is THE particle once predicted by those 1960s theorists, it should exhibit a peculiar property called "self coupling": a Higgs boson, being the mediator of an interaction it itself is sensitive to, should be able to emit or absorb other Higgs bosons, even pairs of Higgs particles.

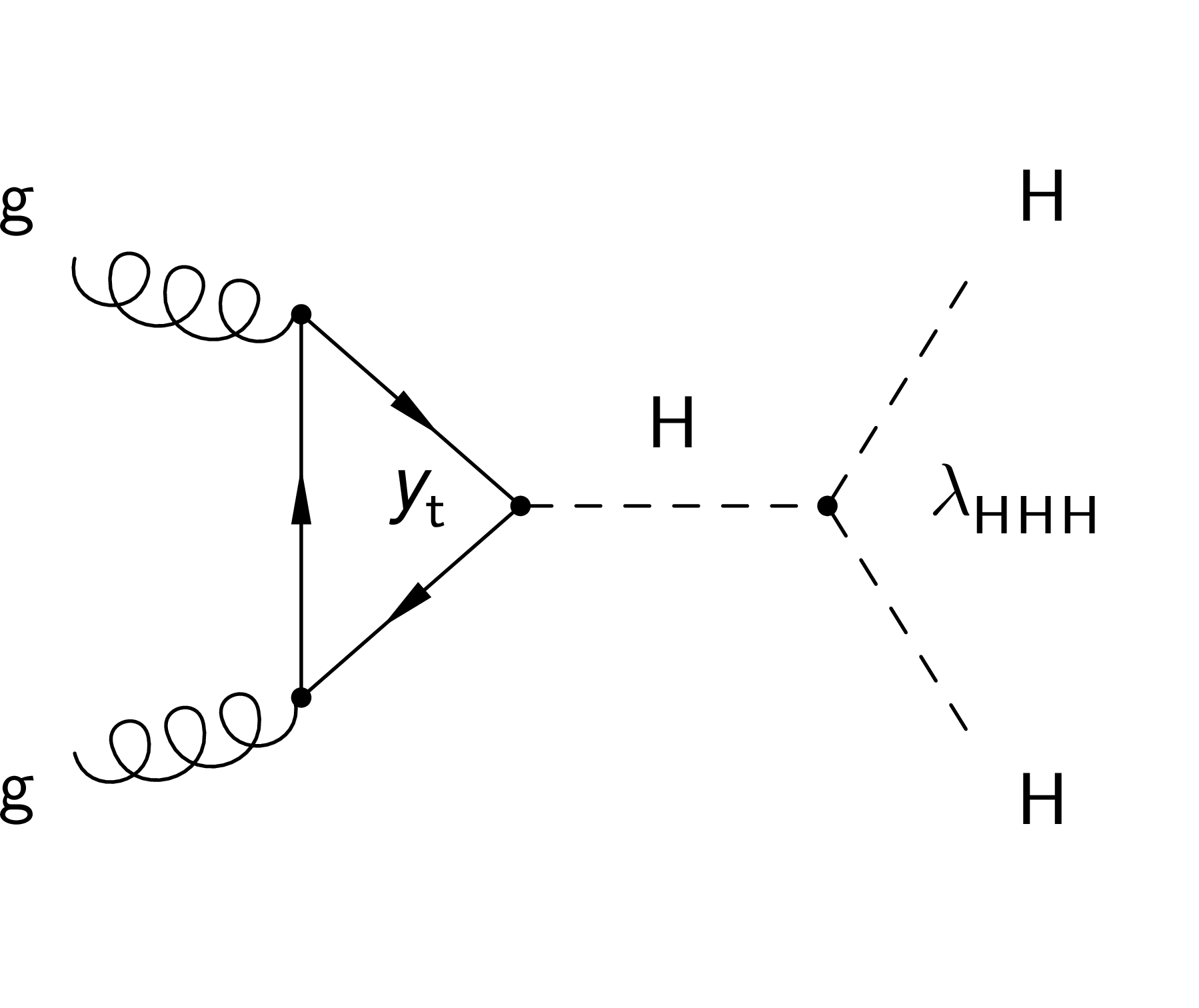

(Above: the main process which gives rise to a pair of Higgs bosons in proton-proton collisions. The graph should be read from left to right: each line is a particle, and vertices define their interaction. Two gluons, drawn as curly lines, are brought to collide by the proton-proton encounter, and they do so by exchanging a top quark in what is called a "triangle loop". This allows the top quark to emit a Higgs boson. The latter then splits into a pair of Higgs bosons through its self coupling.)

Self coupling is a property we have studied in gluons, the mediators of the strong interaction. For gluons, this property is even weirder than for the Higgs, as the source of the strong interaction is a "colour charge" which comes in three varieties - three colours - and gluons possess a colour and an anti-colour. This funny property makes nuclei stable and ultimately allows the structure of matter we know in the universe. But I know, I am divagating. Back to the Higgs.

The rate at which the Higgs self-coupling process takes place (it is an extremely rare one, to be sure) is one of the unsolved mysteries that the LHC experiments are vigorously pursuing. But how?

More data

If the Higgs boson self-interacts, it should be possible to observe the production of pairs of Higgs bosons in proton-proton collisions. That process could also take place by the independent emission of two Higgs particles by the "hard interaction" that occurs when energetic protons collide. But the total rate of such events betrays the existence of the self-interaction, and allows us to verify that its strength is what the Standard Model predicts.

To observe pairs of Higgs bosons one needs large amounts of data, much larger than those that allowed the 2012 observation. This in fact is one of the reasons why the LHC is being upgraded to a "high-luminosity" version, which will increase the number of protons that get circulated and collided. More collisions means more data to collect and analyze, and a larger number of rare processes to study.

It also means, however, that the task becomes harder, as every 25 nanoseconds ATLAS and CMS will have to sort out the interesting "hard" collisions amidst not 20 or 30, but about 300 other "softer" interactions (called "pileup") that produce unwanted particles (thousands of them) flying around and messing up the readout of the detector. A huge challenge, which the experiments will handle by deploying intelligent new algorithms for sorting out the data on the fly, and more robust readout strategies.
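To get a feeling for the scale of the problem, here is a back-of-the-envelope sketch using only the two numbers quoted above (one crossing every 25 nanoseconds, about 300 pileup interactions per crossing). It assumes that every 25-nanosecond slot actually contains colliding protons, which overstates things a bit since some slots are empty in practice; the function names are made up for this illustration.

```python
# Numbers quoted in the text above.
BUNCH_SPACING_NS = 25.0      # time between crossings, in nanoseconds
PILEUP_PER_CROSSING = 300    # approximate "soft" interactions per crossing


def crossings_per_second(spacing_ns):
    """Crossings per second, assuming every slot is filled (an upper bound)."""
    return 1e9 / spacing_ns


def interactions_per_second(spacing_ns, pileup):
    """Rough number of proton-proton interactions per second in one detector."""
    return crossings_per_second(spacing_ns) * pileup


if __name__ == "__main__":
    rate = crossings_per_second(BUNCH_SPACING_NS)
    total = interactions_per_second(BUNCH_SPACING_NS, PILEUP_PER_CROSSING)
    print(f"crossings per second:    {rate:.2e}")
    print(f"interactions per second: {total:.2e}")
```

Under these assumptions that is 40 million crossings and roughly ten billion interactions every second, which is why the sorting has to happen on the fly.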

The status: CMS is leading the race

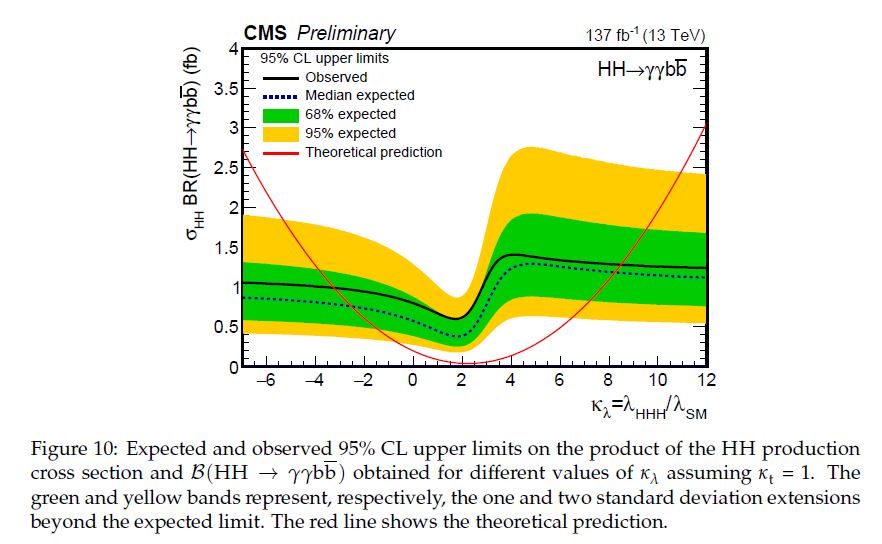

While we wait for more data, we are already sitting on a significant pile of them. So ATLAS and CMS have been digging in their own samples to extract information on the value of the self-coupling parameter of the Higgs in the Standard Model. There is a single number, called "kappa_lambda", which should be equal to one if the model is correct. Should it be found to have a different value, we would learn a whole lot about the existence of new physics!

For now, though, all we can do is constrain kappa_lambda to lie in a range around 1, with large uncertainties. The smaller these uncertainties get, the more we can pat ourselves on the back, and eventually the constraints from more data will allow us to ascertain that kappa_lambda is really too close to 1 to doubt that the Standard Model, again, is on top.

The most recent and most constraining result on kappa_lambda comes from CMS. The collaboration studied in detail all possible detectable ways of production and decay of Higgs boson pairs, to extract a confidence interval for kappa_lambda. The ways a Higgs boson pair could manifest itself in the data are many: the Higgs boson may decay in several detectable ways, and when there are two of them the possible interesting final states to consider cannot be counted on one's fingers.

You can study a Higgs boson decaying to a photon pair, and let the other one decay to two b-quarks, or two W bosons, or two Z bosons. Or you can consider one Higgs decaying to b-quark pairs and the other one to W bosons, or let both Higgses decay to b-quark pairs. And I'm not even half done. In each case you try to determine the best selection strategy to isolate the possible signal of Higgs pair production from all competing backgrounds. This is nowadays done with massive help from advanced machine learning algorithms.

The most sensitive input to this game comes in CMS from the search for the combined "bb-gamma-gamma" final state. This is the result of one Higgs decaying to b-quark pairs (something which happens over half of the time), and the other Higgs decaying to photons (something which is quite rare instead, only a few times every thousand). The photons are rare, but they are very distinctive; b-quark pairs are frequent, but they are easy to fake by other background processes. The compromise guarantees the highest sensitivity to Higgs pair production.
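The trade-off between "frequent" and "distinctive" can be made concrete with a little arithmetic. The sketch below lists every pair of decay modes for a small set of channels and ranks them by how often a Higgs pair would end up there. The branching fractions are rounded, approximate Standard Model values for a 125 GeV Higgs boson; they are my own illustrative inputs, not numbers taken from the CMS analysis, and the function names are hypothetical.

```python
from itertools import combinations_with_replacement

# Approximate Standard Model branching fractions for a 125 GeV Higgs boson.
# Rounded, illustrative values: assumptions, not inputs of the CMS analysis.
BRANCHING = {
    "bb": 0.58,
    "WW": 0.21,
    "tautau": 0.063,
    "ZZ": 0.026,
    "gammagamma": 0.0023,
}


def pair_fraction(mode_a, mode_b):
    """Fraction of Higgs pairs decaying to the unordered state mode_a + mode_b."""
    product = BRANCHING[mode_a] * BRANCHING[mode_b]
    # Two different modes can be assigned to the two Higgs bosons in two ways.
    return product if mode_a == mode_b else 2.0 * product


def ranked_final_states():
    """All unordered pairs of the listed decay modes, most frequent first."""
    pairs = combinations_with_replacement(BRANCHING, 2)
    table = [(a + "+" + b, pair_fraction(a, b)) for a, b in pairs]
    return sorted(table, key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    for name, fraction in ranked_final_states():
        print(f"{name:24s} {fraction:.5f}")
```

With these inputs, five decay modes already give fifteen distinct final states. Both Higgses decaying to b-quarks accounts for about 34% of all pairs, while bb-gamma-gamma is only about 0.27%: a steep price in rate, paid in exchange for the clean photon signature.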

The results of the CMS search can be summarized in the graph shown in this section. The horizontal axis is the value of kappa_lambda that one assumes to be the one nature chose; the Standard Model bets that this is equal to 1.0. The red parabola shows instead what would happen to the rate of Higgs pairs if kappa_lambda were different from 1.0. Any value below -2.7 or above 8.6 is excluded at 95% confidence level, something which can be observed by locating the intersections of the black line (the CMS result, which limits the rate of Higgs pair production) with the theoretical prediction.
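Why a parabola? The rate of Higgs pairs is the square of a sum of two contributions, only one of which is proportional to the self coupling, so it ends up being a quadratic function of the self-coupling parameter. Reading off the excluded values then amounts to solving a quadratic equation. The sketch below shows the idea under two loud assumptions: the coefficients and the upper limit are hypothetical numbers chosen for illustration (not the CMS ones), and the limit is treated as a flat line, while in the real analysis it changes with the assumed value of the coupling.

```python
import math


def rate_ratio(kappa, a, b, c):
    """Higgs-pair rate relative to the Standard Model, as a parabola in kappa."""
    return a * kappa**2 + b * kappa + c


def allowed_interval(limit, a, b, c):
    """Values of kappa for which the predicted rate stays below the upper limit.

    Solves a*kappa^2 + b*kappa + c = limit for an upward-opening parabola.
    Returns (low, high), or None if the prediction is above the limit everywhere.
    """
    discriminant = b * b - 4.0 * a * (c - limit)
    if a <= 0 or discriminant < 0:
        return None
    root = math.sqrt(discriminant)
    return ((-b - root) / (2.0 * a), (-b + root) / (2.0 * a))


# Hypothetical coefficients, chosen only so that the ratio equals 1 at kappa = 1.
A, B, C = 0.36, -1.62, 2.26
# Hypothetical flat upper limit on the rate, in units of the Standard Model rate.
LIMIT = 10.0

if __name__ == "__main__":
    interval = allowed_interval(LIMIT, A, B, C)
    print("allowed kappa range:", interval)
```

Everything outside the returned interval predicts more Higgs pairs than the limit allows, and is therefore excluded; a tighter limit shrinks the interval toward the bottom of the parabola.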

As you see, the limits do not exclude that the self coupling of the Higgs is different from the Standard Model prediction (which you get if kappa_lambda is 1.0); but new physics theories which predict values outside the reported interval have indeed been disfavoured by the data. This game will intensify and become even more interesting in the next few years, as more data is poured into the grinding analysis tools. So stay tuned! For now, you can get more information on this analysis in the CMS preprint.
