Another paradigm shift still awaits us, though: it involves realizing that today we can aim for the holistic optimization of the complex detector systems we use in particle physics experiments. Building particle detectors is a subtle art, and some of my colleagues would probably recoil in disgust if one floated the idea that a machine could inform the optimal design of their instruments. With that in mind, we must tread more gently, and speak instead of "human in the middle" schemes and of providing tools that can assist the hand of the expert designer.

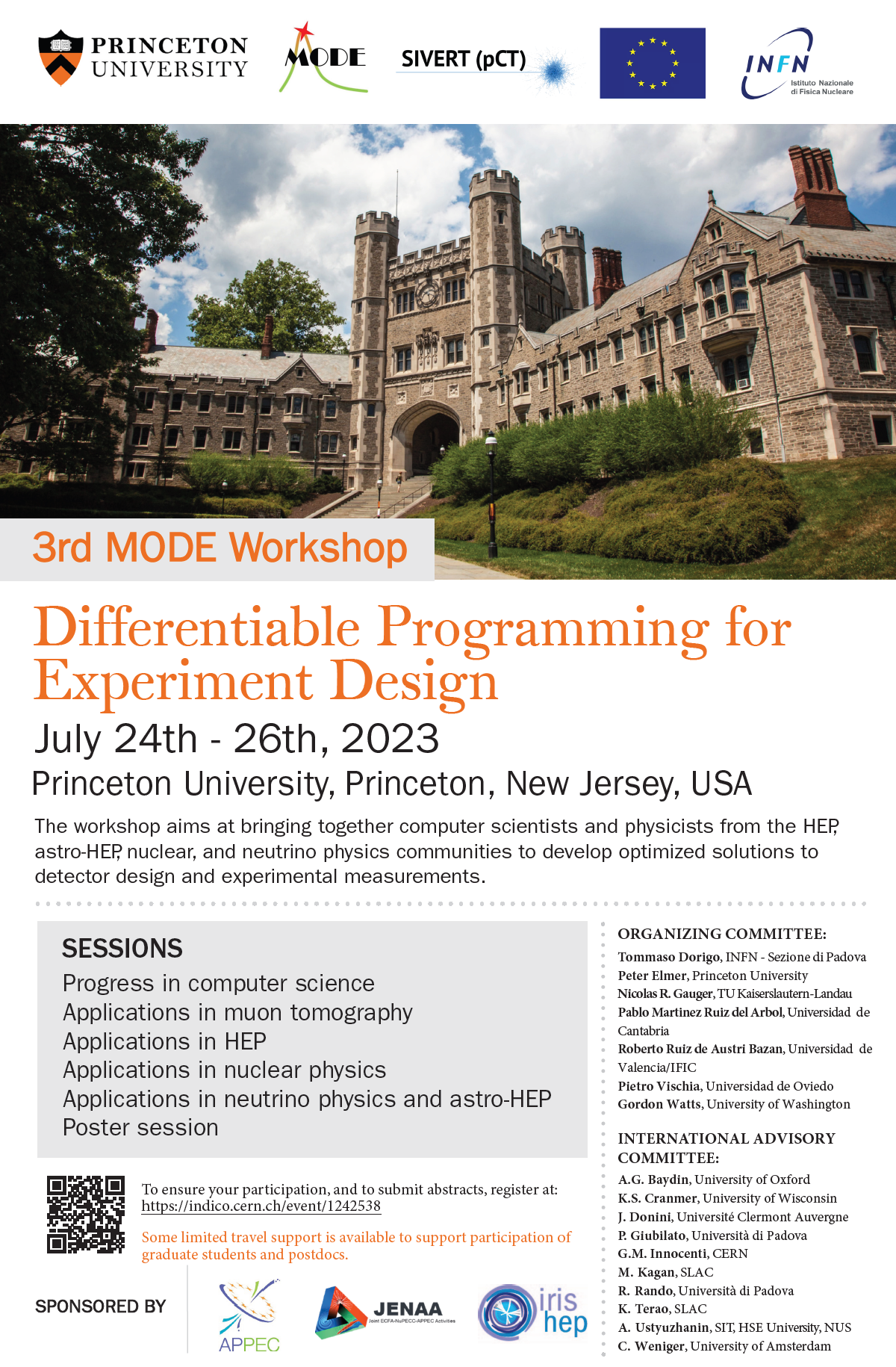

Either way, the co-design of software and hardware has become a hot topic over the past few years in fundamental science as well, especially since I founded the MODE collaboration, a group that today counts 40 participants from 25 institutions on three continents. Since yesterday, MODE has been running a workshop in Princeton to discuss recent developments in the end-to-end optimization of experiments. This is the third workshop in the series, and so far I have been very pleased by the quality and content of the presentations (mine included ;-).

Here is the workshop poster:

And below is a picture of some of the participants in front of the venue (the neuroscience institute that is hosting us).
