Another goal is to understand which new ideas from the world of machine learning could find ideal applications in the typical use cases of research in fundamental physics. Here I wish to mention, in random order, a few interesting things I have heard at the workshop so far. I will rarely make direct reference to specific talks, to encourage you to dig into the pdf files available here.

Joe Lykken's Introduction

Of interest, to start with, was the short introduction that Fermilab deputy director Joe Lykken gave yesterday morning. He rightly pointed out that physicists have always excelled at taking brand-new technologies and applying them to their difficult research problems, and that this can and should continue with the recent advances in machine learning. He also encouraged the audience to think hard, and "outside the box", about novel ways to apply this new technology, asking questions such as "why can't I do X?"

Joe gave a good example of the above template question: why can't we use machine learning tools to produce a "fast simulation" of our detectors that is actually better than full detector simulation at that task, so that we can throw those old-fashioned, Geant-based tools into the garbage can? Of course, that would be a big advance for experimental particle physics research, as one of the hard limitations nowadays is the CPU time needed to generate the billions of simulated events our studies require.

Common themes

Several speakers yesterday and today discussed how to discriminate quark-originated jets from gluon-originated ones. What am I talking about? Jets are collimated streams of particles that are produced when a quark or a gluon (which we could collectively call "partons", the constituents of the proton) is kicked out of a proton by an energetic collision. The parton fragments into a number of particles that collectively share the total momentum the parton possessed at the moment of its emission from the hard collision.
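The momentum-sharing picture can be made concrete: a jet's four-momentum is reconstructed as the vector sum of its constituents' four-momenta, and each constituent carries a fraction of the total. The sketch below uses a hypothetical, hand-made jet of three massless particles (not real or simulated data) and hypothetical helper names.

```python
import math

def jet_four_momentum(constituents):
    """Vector sum of constituent four-momenta, each given as (E, px, py, pz) in GeV."""
    return tuple(sum(c[k] for c in constituents) for k in range(4))

def pt_and_mass(p4):
    """Transverse momentum and invariant mass of a four-vector (E, px, py, pz)."""
    E, px, py, pz = p4
    pt = math.hypot(px, py)
    m2 = E**2 - (px**2 + py**2 + pz**2)
    return pt, math.sqrt(max(m2, 0.0))  # guard against tiny negative values from rounding

# Hypothetical toy jet: three massless particles travelling roughly along x.
constituents = [
    (50.0, 50.0, 0.0, 0.0),
    (30.0, 30.0, 0.0, 0.0),
    (13.0, 12.0, 5.0, 0.0),
]

jet = jet_four_momentum(constituents)
pt, mass = pt_and_mass(jet)
# Energy fraction carried by each constituent; the fractions sum to one.
fractions = [c[0] / jet[0] for c in constituents]
print(jet, round(pt, 2), round(mass, 2), fractions)
```

Note that the jet acquires a mass of about 12.6 GeV even though every constituent is massless, purely from the opening angles between them.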

Being able to discriminate quarks (the constituents of matter) from gluons (the carriers of the strong force that binds quarks together inside the proton) is very useful when we analyze the energetic collisions at the LHC, because it helps us distinguish interesting physical processes (such as the production and decay of Higgs bosons) from uninteresting reactions that involve only quarks and gluons.

So a lot of effort has gone into this problem, which is notoriously difficult. Surprisingly, it was also the earliest application of neural networks in particle physics I am aware of: back in 1989 Bruce Denby and a few collaborators working at the CDF experiment tried (with modest success - but they were pioneers!) to distinguish quark jets from gluon jets in the proton-antiproton collisions that the Tevatron delivered in the core of that glorious detector.

Nowadays a number of machine learning techniques have been applied to quark-gluon discrimination, and the surprising thing is that the problem appears to be solved, in the sense that the discrimination power achieved by the best techniques has saturated. Those algorithms appear to be close to hitting the ceiling of the maximum theoretically attainable performance, so maybe we should move on to some other topic, however cool this one is!
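The "ceiling" has a precise meaning: by the Neyman-Pearson lemma, no discriminant can beat the likelihood ratio of the two classes. The toy sketch below assumes, purely for illustration, a single abstract observable that is Gaussian-distributed for "quark-like" and "gluon-like" jets with made-up means and widths; it compares the ROC area (AUC) of the optimal discriminant with that of a deliberately smeared one. The numbers are invented and say nothing about real jets.

```python
import numpy as np
from math import erf, sqrt

def phi_cdf(z):
    """Standard normal cumulative distribution function."""
    return 0.5 * (1.0 + erf(z / sqrt(2.0)))

def auc(scores_sig, scores_bkg):
    """ROC area: probability that a random signal score exceeds a random background score.

    Rank-based (Mann-Whitney) estimate; assumes no tied scores, as with continuous toy data.
    """
    all_scores = np.concatenate([scores_sig, scores_bkg])
    ranks = all_scores.argsort().argsort() + 1  # ranks 1..N in ascending score order
    n_s, n_b = len(scores_sig), len(scores_bkg)
    return (ranks[:n_s].sum() - n_s * (n_s + 1) / 2) / (n_s * n_b)

rng = np.random.default_rng(seed=1)
n = 100_000
# Hypothetical toy: one abstract observable x with unit-width Gaussians for both classes.
x_sig = rng.normal(1.0, 1.0, n)  # "quark-like" jets
x_bkg = rng.normal(0.0, 1.0, n)  # "gluon-like" jets

# With equal widths the likelihood ratio exp(x - 1/2) is monotonic in x,
# so ranking jets by x itself is the optimal (Neyman-Pearson) discriminant.
auc_optimal = auc(x_sig, x_bkg)
# A degraded discriminant: the same observable smeared by extra unit-width noise.
auc_noisy = auc(x_sig + rng.normal(0.0, 1.0, n), x_bkg + rng.normal(0.0, 1.0, n))

# Analytic values: AUC = Phi(delta_mu / sqrt(var_sig + var_bkg)).
auc_ceiling = phi_cdf(1.0 / sqrt(2.0))
auc_noisy_expected = phi_cdf(1.0 / sqrt(4.0))
print(round(auc_optimal, 3), round(auc_ceiling, 3), round(auc_noisy, 3), round(auc_noisy_expected, 3))
```

No discriminant built from x alone can beat the analytic ceiling in this toy.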

Boosted object tagging

A number of speakers dealt with the problem of identifying the decays of heavy particles (such as W, Z, and Higgs bosons, and top quarks) when these are strongly boosted and end up appearing as a single "fat" jet of hadrons in the detector. The technology of this "boosted jet tagging" has made great strides over the past few years, often employing techniques imported from the world of image processing, such as convolutional neural networks or autoencoders.
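A common way to turn a jet into an "image" for a convolutional network is to bin its constituents in the (η, φ) plane around the jet axis, each pixel holding the summed transverse momentum. The NumPy sketch below assumes a hypothetical list of constituents already expressed relative to the jet axis and an arbitrary 16×16 grid spanning ±0.8; real taggers make their own choices and usually add centring, rotation and normalisation steps.

```python
import numpy as np

def jet_image(deta, dphi, pt, n_pix=16, half_width=0.8):
    """Bin constituents into an n_pix x n_pix image of summed pT.

    deta, dphi: constituent coordinates relative to the jet axis.
    Constituents outside +-half_width are dropped by the histogram.
    """
    edges = np.linspace(-half_width, half_width, n_pix + 1)
    image, _, _ = np.histogram2d(deta, dphi, bins=[edges, edges], weights=pt)
    # Normalise to unit total pT so the network sees the shape, not the overall scale.
    total = image.sum()
    return image / total if total > 0 else image

# Hypothetical toy two-prong jet, e.g. a boosted W -> qq' with two hard subjets.
rng = np.random.default_rng(0)
deta = np.concatenate([rng.normal(-0.2, 0.03, 20), rng.normal(0.25, 0.03, 15)])
dphi = np.concatenate([rng.normal(0.0, 0.03, 20), rng.normal(0.1, 0.03, 15)])
pt = rng.exponential(5.0, deta.size)

img = jet_image(deta, dphi, pt)
print(img.shape, np.count_nonzero(img), "of", img.size, "pixels are non-empty")
```

Most of the 256 pixels stay empty, which is the sparsity problem discussed in the next paragraph.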

A problem here is that the jet images we get from our detector elements are more complex and sparser than typical 2D pictures, so people have looked into variable-length representations of the input data. Another issue is how to represent the data in a way that remains invariant under permutations of the inputs (the individual signals in the detector). A number of interesting ideas were discussed on this topic, including the use of graph theory (in a seminar by Risi Kondor, which however did not consider the particle physics use case specifically).
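One common answer to both problems is to treat a jet as an unordered, variable-length set of particles and to pool per-particle features with a symmetric operation such as a sum (the "Deep Sets" construction; graph networks generalise the idea). The sketch below uses random, untrained weights, a hypothetical choice of three features per particle and arbitrary layer sizes; it only demonstrates that the output ignores particle ordering and accepts any number of particles.

```python
import numpy as np

rng = np.random.default_rng(42)
# Untrained, randomly initialised weights; sizes are arbitrary choices for illustration.
W_phi = rng.normal(size=(3, 8))  # per-particle map: 3 features -> 8 latent values
w_f = rng.normal(size=8)         # map from the pooled latent vector to a single score

def set_score(particles):
    """Permutation-invariant score for an (n_particles, 3) array of features."""
    latent = np.maximum(particles @ W_phi, 0.0)  # ReLU applied to each particle independently
    pooled = latent.sum(axis=0)                  # symmetric pooling: ordering does not matter
    return float(pooled @ w_f)

jet = rng.normal(size=(12, 3))           # a hypothetical jet with 12 particles
shuffled = jet[rng.permutation(len(jet))]
small_jet = rng.normal(size=(5, 3))      # a different hypothetical jet with only 5 particles

print(set_score(jet), set_score(shuffled), set_score(small_jet))
```

The first two scores agree up to floating-point rounding, and the same function handles the five-particle jet without any padding.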

Scraping the barrel of parton tagging: s vs d

A talk I enjoyed listening to was the one by Yuichiro Nakai, who took on the problem of discriminating strange-quark-originated jets from down-quark-originated ones. Why would one bother trying to achieve s-quark vs d-quark separation? Well, the speaker gave a couple of examples of where such a tool could be put to use, yet to me this looks like the kind of question that gets the Clinton answer: "because I could".

In fact, with jet tagging algorithms we have basically explored everything else: top-quark tagging (in boosted jets), b-quark tagging (ad nauseam), charm-quark tagging (a number of tools now do this), and gluon vs light-quark tagging. We also do jet-charge tagging of up-type versus down-type quark jets, so what is really left is distinguishing down from strange.

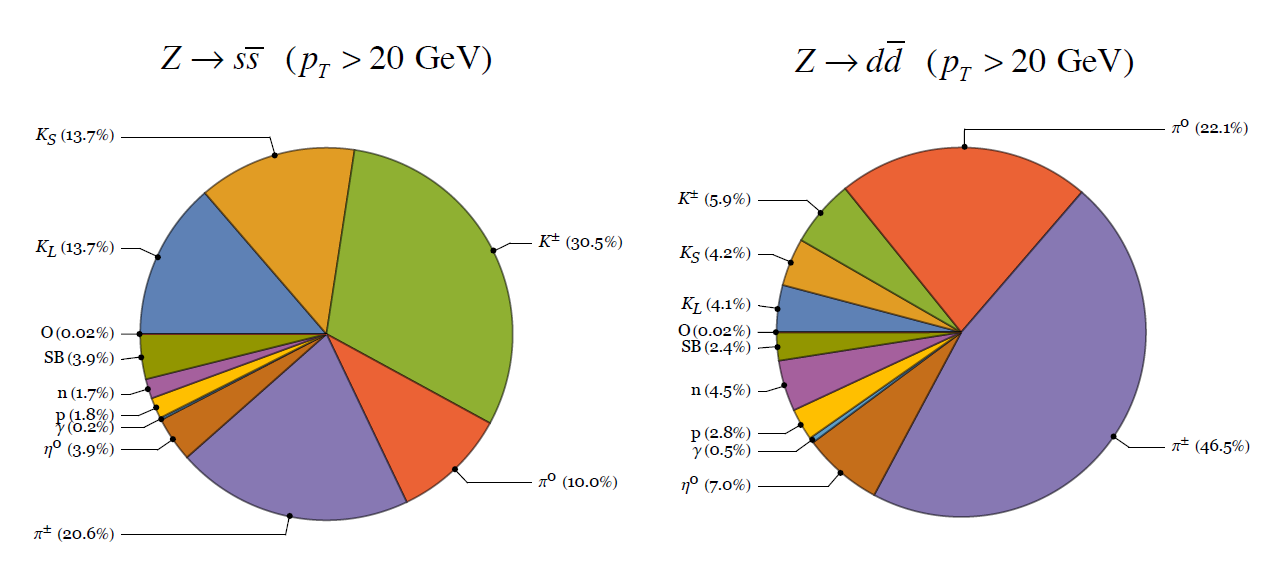

Now, if you take on such a **very** hard problem, you don't expect to get hugely successful results. In fact, even a perfect detector -one which allowed you to "see" exactly the full particle content of each jet, with perfect resolution and all - would be unable to provide a very strong discrimination of strange-quark-jets versus down-quark-jets. The results of Yuichiro however unearth a number of interesting things about s-jets. For instance, their leading particle composition (where by "leading" we mean the one with larger momentum). You can see what that is in the diagram below.

(Above, pie charts of the leading particle composition of strange jets (left) and down jets (right) originated from Z decays).
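Extracting a leading-particle composition like the one in the pie charts is simple once each jet is available as a list of particles: pick the highest-momentum particle and tally its species. In the sketch below the PDG Monte Carlo codes are standard, but the jets are hypothetical and hand-made, and the function name is illustrative; the output does not reproduce the talk's numbers.

```python
from collections import Counter

# Standard PDG Monte Carlo particle codes; charge-conjugate states are mapped together via abs().
NAMES = {211: "pi+-", 321: "K+-", 310: "K0_S", 130: "K0_L",
         2212: "p", 2112: "n", 3122: "Lambda", 22: "gamma"}

def leading_composition(jets):
    """Fraction of jets whose highest-momentum particle is of each species.

    Each jet is a list of (pdg_id, momentum) pairs.
    """
    counts = Counter()
    for jet in jets:
        pdg_id, _ = max(jet, key=lambda particle: particle[1])  # leading = largest momentum
        counts[NAMES.get(abs(pdg_id), "other")] += 1
    return {name: n / len(jets) for name, n in counts.items()}

# Hypothetical toy jets, NOT simulated data: (PDG id, momentum in GeV).
toy_jets = [
    [(321, 25.0), (211, 10.0), (-211, 4.0)],
    [(211, 18.0), (-321, 12.0), (22, 3.0)],
    [(-321, 30.0), (310, 8.0)],
    [(2212, 15.0), (-211, 9.0)],
]
print(leading_composition(toy_jets))
```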

Mesmerizing graphics

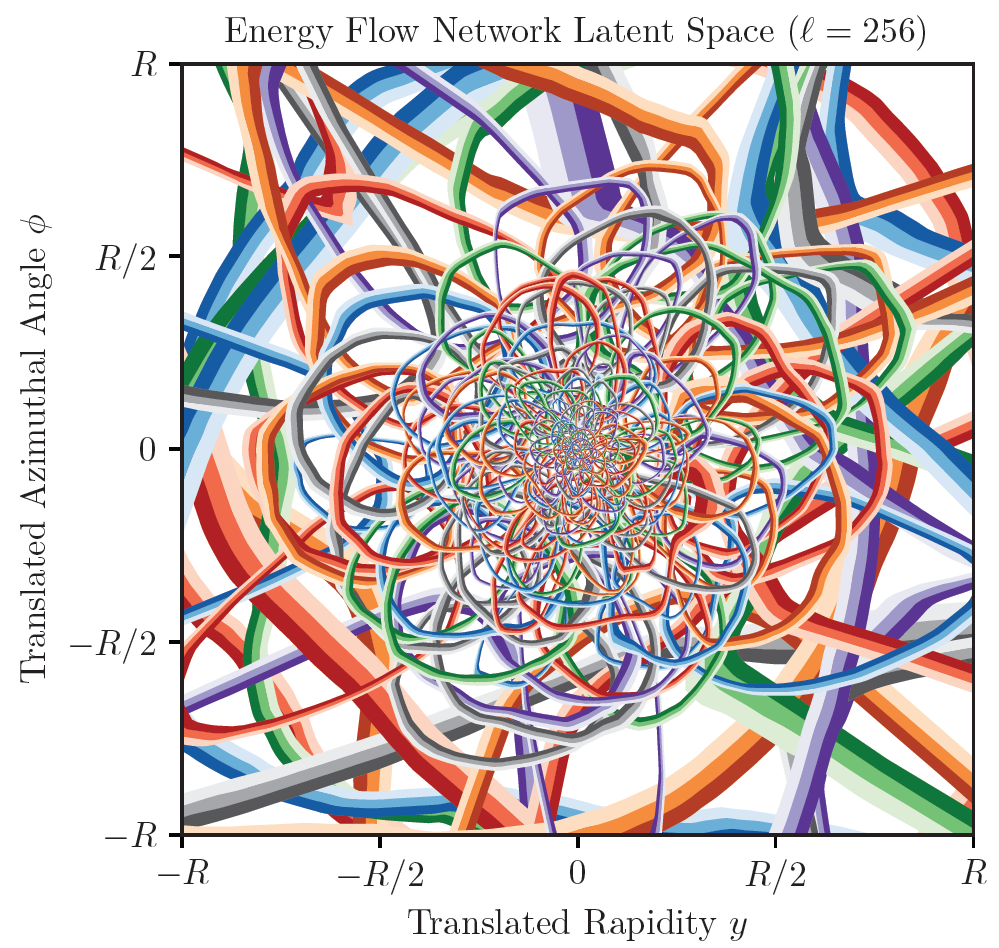

I will end this partial survey of a selection of topics from the workshop with a graph from Patrick Komiske III, which I have a hard time explaining but which is simply too much off in the awesomeness scale to not attach here. The topic is again quark vs gluon discrimination by using "energy flow networks" - ones that know about the detailed physics of collinear and soft singularities in the process of gluon emission. The technique is applied to q vs g discrimination using filters that learn the features of 2D-standardized jet images, and visualized by plotting different coloured contours of the "activation regions" overlaid to one another. The result is mesmerizing:
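For the curious, in the formulation by Komiske, Metodiev and Thaler an energy flow network computes an observable of the form F(Σ_i z_i Φ(y_i, φ_i)), where z_i is each particle's momentum fraction and Φ is a learned per-particle filter of its angular position; roughly speaking, the contours in the plot show where the components of Φ are active. Because energy enters only linearly, as a weight, the output is unchanged by adding a zero-energy particle or by splitting a particle into two collinear halves. The NumPy sketch below uses random, untrained weights and arbitrary layer sizes purely to check those two properties; it is not the authors' implementation (which lives in their EnergyFlow Python package), and all names in it are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(7)
# Random, untrained weights; layer sizes are arbitrary for illustration.
W1, b1 = rng.normal(size=(2, 16)), rng.normal(size=16)  # filter: (y, phi) -> 16 hidden units
W2 = rng.normal(size=(16, 4))                           # filter: 16 hidden -> 4 latent "filters"
w_F = rng.normal(size=4)                                # F: latent vector -> scalar output

def phi_filter(angles):
    """Per-particle filter that sees angular position only; angles has shape (n, 2)."""
    return np.maximum(np.maximum(angles @ W1 + b1, 0.0) @ W2, 0.0)

def efn(z, angles):
    """Energy-flow-network-style observable F(sum_i z_i * Phi(y_i, phi_i))."""
    latent = (z[:, None] * phi_filter(angles)).sum(axis=0)  # energy enters linearly, as a weight
    return float(latent @ w_F)

# Hypothetical toy jet: momentum fractions and (y, phi) relative to the jet axis.
z = np.array([0.5, 0.3, 0.2])
angles = np.array([[0.0, 0.0], [0.1, -0.05], [-0.2, 0.15]])
out = efn(z, angles)

# Soft emission: add a zero-energy particle at an arbitrary position.
out_soft = efn(np.append(z, 0.0), np.vstack([angles, [[0.3, 0.3]]]))
# Collinear splitting: replace the first particle by two halves at the same position.
out_split = efn(np.array([0.25, 0.25, 0.3, 0.2]), np.vstack([angles[:1], angles]))
print(out, out_soft, out_split)
```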

Again, I cannot but encourage the interested reader to plunge into the slides of these interesting talks!

---

Tommaso Dorigo is an experimental particle physicist who works for the INFN at the University of Padova, and collaborates with the CMS experiment at the CERN LHC. He coordinates the European network AMVA4NewPhysics as well as research in accelerator-based physics for INFN-Padova, and is an editor of the journal Reviews in Physics. In 2016 Dorigo published the book “Anomaly! Collider physics and the quest for new phenomena at Fermilab”. You can get a copy of the book on Amazon.
