Just as six years ago, when the Higgs boson discovery was hot off the press and everybody wanted to hear the details, I have been invited there to hold two public lectures. The first will be held in the Aula Magna of the university, and its title will be "Science and Society 2.0: Elementary Particles and Social Change". I will debate the topic in the company of prof. Massimiano Bucchi, author of several books on the sociology of communication. The second will be a true "lesson", with blackboard and all, on the street - well, if you can call the beautiful setting of piazza Mantegna a street. It will be titled "Qualcosa non torna. Errore o scoperta?" (the English translation is the title of this post).

[By the way, there is a third event I am taking part in during the days of the Festival - it is called "ScienceGround" and will last the full duration of the festival, with interviews of science authors and a science fair, themed on data science. I have sponsored that event with funds from my two EU networks, and will discuss it in another article.]

While the contents of the discussion with Massimiano Bucchi are deliberately left loosely specified, and so cannot really be discussed in advance, I can offer below a transcript of my street lecture, which I wrote down to help myself remember what I want to say. What I will actually say will most likely be different (I have 30-45 minutes to fill, so I will go into more detail on the topics than the writeup does), but I would like to offer you this text in the hope that you may provide constructive criticism of it.

Since I wrote the text in Italian and I am very busy these days, I have relied on Google Translate to produce an English text. I very roughly changed what really did not make any sense, but please forgive the occasional syntactical blunder below: it is not my doing!

---------------------------------------------------------------------------

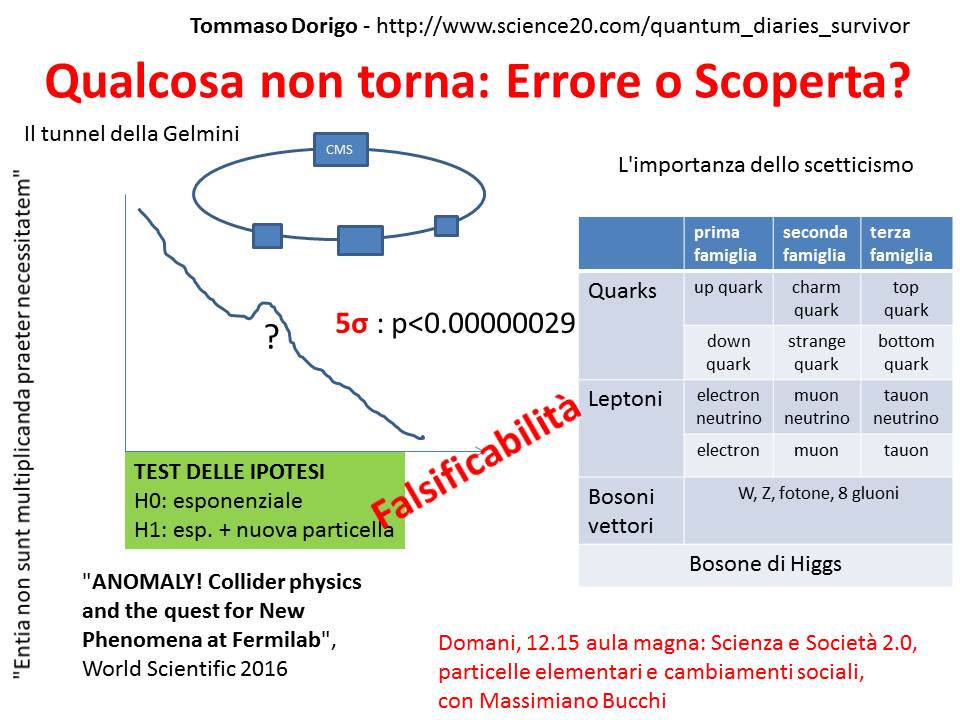

(Below, the sketch of what I will draw on the blackboard as I give my lecture)

First of all, I thank you for being here today, to talk about real and fictitious scientific discoveries; or rather, try to explain how in the practice of scientific research - at least in my field, fundamental physics - we proceed with extreme humility through a mechanism of continuous verification of hypotheses, of questioning one's own theories, and of strict discipline in interpretation of the data. Unfortunately, all this today is not part of the common perception of what scientific research is. Scientists today feel under constant attack, questioned, marginalized, when not blatantly ignored by civil society and politics; and this is a problem and it represents a risk of drift towards irrationality.

My name is Tommaso Dorigo. I am an experimental physicist of elementary particles; I work for the Italian National Institute of Nuclear Physics, and I participate in the CMS experiment at CERN in Geneva, where among other things I contributed to the discovery, six years ago, of the Higgs boson. For those interested, I have a blog where I talk about physics research at particle accelerators, www.science20.com/quantum_diaries_survivor, and I wrote a book on the subject of today, "Anomaly!".

So, what is behind a scientific discovery in fundamental physics? What allows physicists to say "Eureka!", I found it? At the base of our work there is a fundamental pillar, the falsifiability of hypotheses. What is falsifiability? If we observe a physical phenomenon, we can imagine a cause-effect mechanism that generated it. We have thus formulated a hypothesis, a theory that is apparently able to explain the observed phenomenon.

Let me give a silly example: if one evening I look at the sky and see a bright streak light up and then vanish, I can imagine that it is an artificial satellite, like "Iridium", that for a brief moment has reflected the sunlight towards me. My hypothesis is falsifiable, because I can later verify whether one of those satellites actually passed over my head. If, once I consult the coordinates of the satellites in orbit, I cannot find a match, I must abandon my hypothesis and contemplate others - it could have been a meteor, or some other object. My hypothesis was falsifiable, and it was therefore useful in order to proceed, by deduction and through a process of elimination, towards a more precise understanding of the phenomenon.

But suppose instead that I hypothesized that it was an angel who directed for a brief moment a flash of heavenly light in my direction. Well, this hypothesis is not falsifiable, because even admitting that angels exist, there is no searchable catalog of angels that reports their trajectories and their schedules. You well understand that there is a fundamental difference between the two hypotheses of Iridium satellite and angel: only the first is fruitful for science, as it allows one to progress in understanding phenomena; the second, not being verifiable, remains sterile - I must passively accept the idea that there are angels flying around in the evening, and that they have fun throwing beams of light around.

In the fourteenth century a philosopher, the monk William of Ockham, formulated a famous "lex parsimoniae". According to his thinking, in the investigation of the world we must look for the most "economical" explanations of the phenomena. The economy consists in not calling into play fictitious and undemonstrable entities; the explanation that uses known objects or phenomena is probably the correct one. "Entia non sunt multiplicanda praeter necessitatem": entities must not be multiplied beyond necessity. Why call into play an angel when I can attribute the luminous streak, as in the first hypothesis, to a meteor or an artificial satellite?

Well, modern science works just like that: it formulates falsifiable hypotheses, and proceeds with scrupulousness to verify them one by one, discarding those that are not verified by subsequent experiments. In the end you can formulate a theory, and use it as a starting point to investigate further phenomena. From time to time something new is observed, which the theory is unable to explain. The theory must be reviewed, or extended to include the new phenomenon. In this way, by successive approximations, we can find out how the world is made.

So let me tell you how to discover a new fundamental particle, and how we physicists avoid fooling ourselves by falling into the trap of being too enthusiastic about data suggestive of exotic interpretations. At CERN in Geneva there is the LHC, a gigantic proton accelerator, in a 27-kilometer long tunnel dug 100 meters underground. The protons are injected in two parallel rings and orbit in opposite directions; their kinetic energy is progressively increased by electric fields, and finally they are made to collide at the center of the CMS detector and of three others.

CMS is like a digital camera, just a little bigger than what you have in your cell phones: it weighs as much as a warship and records, millions of times per second, the ephemeral traces of the particles produced in the collisions. Each "event" - as we call the collisions - is then analyzed on the computer and reconstructed; the particles produced are identified and their energy and direction are precisely measured.

In the end, we build histograms where we collectively study the properties of the set of collected events. Thanks to decades of studies and experiments, we have constructed an extremely precise theory, the "Standard Model", which describes matter as made up of six quarks and six leptons, and the forces that hold matter together as the result of the exchange of other particles, the "vectors" of the interactions: the photon, the W and Z particles, and the gluons. The theory allows us to calculate what we should observe when we study the properties of events.

Through detailed comparisons between this prediction and what is observed in the real data, we can verify if everything checks out, or if there is something strange, an anomaly.

Take for example a histogram of the energy of photon pairs collected by the experiment (technically it is the mass that a particle would have if it produced the two photons in its decay). Photons are the quanta of radiation, the particles that transmit the electromagnetic force. At the end of 2015, there was enormous interest in the scientific community in these histograms, built with data collected by ATLAS and CMS, the two main experiments of the LHC.

Why? Because something did not check out: the theory predicted that this distribution should be an approximately exponential curve, decreasing with the energy of the photon pairs; but the data instead showed an accumulation at a very precise energy: a "bump". This can happen when, on top of all the events in which two unrelated photons are produced by known physical processes (well described by the Standard Model), a new elementary particle is produced from time to time, which then immediately decays into two photons.

Since the particle has a very precise mass, it generates events that accumulate all around the same value in the histogram. To evaluate whether we can say "Eureka!" we use the statistical technique called "hypothesis testing". The "null" hypothesis is that the data all come from known physical processes, well described by the current theory; in this case the "true" distribution, from which the collected histogram is a random draw, is exponential, and the bump is fictitious, a statistical fluctuation. The alternative hypothesis is that there is a new particle.
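To make the idea of testing the null hypothesis a little more concrete, here is a minimal Python sketch of the simplest possible version: a single histogram bin treated as a counting experiment. It is an illustration only, not the procedure actually used by ATLAS or CMS; the function name `poisson_tail` and the numbers (10 expected background events, 20 observed) are invented for the example.

```python
import math

def poisson_tail(n_obs: int, mean: float) -> float:
    """Probability of counting n_obs or more events when the
    background-only (null) hypothesis predicts `mean` events."""
    term = math.exp(-mean)  # P(N = 0)
    below = 0.0             # running sum of P(N = 0) ... P(N = n_obs - 1)
    for k in range(n_obs):
        below += term
        term *= mean / (k + 1)  # P(N = k + 1) obtained from P(N = k)
    # Fine for a toy example; very small tails would need a more careful sum.
    return 1.0 - below

# Hypothetical numbers, chosen only for illustration.
expected_background = 10.0
observed = 20

p_value = poisson_tail(observed, expected_background)
print(f"Chance of seeing {observed} or more events from background alone: {p_value:.2e}")
```

A small result says that the background alone rarely produces such an excess; it does not by itself say that a new particle exists.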

I will spare you the details, although I am available to go into as much detail as you want; here it is enough to say that the statistical test provides a number: the probability of observing data like those found, or even "stranger", if the basic theory is valid. If this probability is measured to be less than one part in 3 million (the so-called "five sigma"), physicists feel entitled to publish the "observation" of a new particle.
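The link between "five sigma" and a probability of about one in 3 million can be checked in a few lines of Python. The sketch below assumes the one-sided Gaussian tail convention, which is the customary one in particle physics; the function name `p_value_from_sigma` is made up for this example.

```python
import math

def p_value_from_sigma(z: float) -> float:
    """One-sided probability that a Gaussian variable fluctuates
    more than z standard deviations above its mean."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))

for z in (3.0, 5.0):
    p = p_value_from_sigma(z)
    print(f"{z:.0f} sigma: p = {p:.3g}, roughly 1 in {1.0 / p:,.0f}")
```

With this convention the five-sigma probability comes out slightly below one in 3 million, consistent with the threshold quoted above.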

Clearly, years of study will then be needed to understand more, but tentatively the theory is updated to include that new entity. In the case of the two-photon bump of 2015, the probability of a fluctuation was higher, and in fact later data showed that there was no new particle. False alarm, then; and note that the physicists had no reason to be ashamed of a discovery declared and then withdrawn: there was no claim in the first place, because no publication was produced. This is the normal work of continuous verification of the theory, falsifiability at work. Of course, however, the data were public, and journalists from all over the world still had fun announcing new scenarios and overhyping the observed anomaly.

It would be nice to talk about this somewhat perverse mechanism in the communication of science - we will do it tomorrow with Massimiano Bucchi in the "aula magna", at 12.15, where I hope to see you. So, I have described how a discovery takes place, in theory. But it happens that these experiments are gigantic, and take 20 years to build, and another 20 to exploit by collecting data. CMS is a collaboration of 5000 people, including physicists, engineers, technicians and students. You can well imagine how difficult it is to leave the physicists who work there their research autonomy (which is however fundamental!) and at the same time to speak with a common and shared voice.

There are many of us studying the data, and it often happens that John, who is looking at data of a certain type in search of a particular new particle predicted by some new theory, finds anomalous data. Perhaps this is the long-awaited discovery? At that point John certainly cannot publish his observation, even if he is dying to! No: John's analysis must go through a very strict internal verification process, during which anyone among his 5,000 colleagues can nitpick at the study, find inconsistencies, ask to modify details, and criticize this or that particular technique.

Physicists are among the most skeptical people on this earth: and with a bit of malice, one could say that this is all the more true when they discuss a phenomenon they did not themselves discover! This process of internal digestion is painful but precious, because it allows the collaboration to be unanimous if in the end it decides to publish a new discovery. There cannot be a minority report.

The price to pay, however, is huge: it is for John, who can find himself having to work for six months, a year, two years to verify every comma of his analysis, without the certainty of being able to publish it at the end. And for the experiment as a whole, which through what could also be called a sort of self-censorship sometimes avoids publishing results that are controversial or not perfectly understood, to prevent the risk of jeopardizing one's reputation.

Perhaps you will remember the case of "superluminal neutrinos". In 2011 the OPERA experiment published the measurement of the velocity of neutrinos fired from CERN and detected in the Gran Sasso underground laboratory. The neutrinos traveled through the rock, but the then minister Gelmini spoke of a tunnel that connected the two facilities ... Anyway: from the observation of 20 neutrinos and an accurate timing of their route it appeared clear that they traveled faster than the speed of light in vacuum. Newspapers around the world echoed the news: "Einstein was wrong!"

The international scientific community remained very skeptical about the veracity of the discovery, and soon more detailed studies and a new batch of data made it possible to ascertain that the effect was due to a glitch in the hardware that transmitted the detector's electronic signals: 60 nanoseconds of delay in the timing signal caused an erroneously superluminal measurement of the velocity of the neutrinos.
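To get a feeling for how small the effect was, one can compare the 60 nanoseconds with the total flight time of the neutrinos. The Python sketch below is a back-of-the-envelope estimate; the baseline of roughly 730 km between CERN and Gran Sasso is an assumption added here for illustration and does not appear in the lecture text.

```python
# Speed of light in vacuum (exact by definition of the metre).
C_M_PER_S = 299_792_458.0

# ASSUMPTION: approximate CERN - Gran Sasso distance, not stated in the lecture.
BASELINE_M = 730e3

# Timing shift quoted in the lecture.
TIMING_SHIFT_S = 60e-9

# Time light would need to cover the baseline.
light_time_s = BASELINE_M / C_M_PER_S

# If the neutrinos appear to arrive 60 ns early, the apparent speed is
# baseline / (light_time - shift); its fractional excess over c follows.
fractional_excess = TIMING_SHIFT_S / (light_time_s - TIMING_SHIFT_S)

print(f"Light travel time: {light_time_s * 1e3:.3f} ms")
print(f"Apparent (v - c) / c: {fractional_excess:.2e}")
```

Under that assumption, a 60 ns shift corresponds to a speed excess of a few parts in 100,000, which shows how precisely the timing chain had to be understood.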

Unfortunately, although the OPERA scientists were quite honest and followed their protocols, including declaring a discovery only if the probability under the "null hypothesis" was less than one in 3 million (it was measured to be less than one in 100 million), errors happen and can affect even the most scrupulous of analyses. It is only through a process of continuous verification that science progresses. However, a sour taste remains in the mouth, because the publication of discoveries that are later withdrawn, although it is part of this continuous trial and error process, generates a loss of confidence in scientific research in those who do not understand how it works.

Scientists today have a greater responsibility than before, because their discoveries, genuine or to be retracted, reach without a filter - and often without adequate translation and interpretation - a civil society that remains unprepared to interpret them for what they are: not absolute truths, that if disavowed become a condemnation of the scientists as false and unreliable, but continuous small steps forward in the understanding of the world, each subject to verification and falsifiability.

What remains is, for the great scientific collaborations, a tendency towards self-censorship and a hard life for the scientists who work there; and for civil society, a feeling that science is discredited, the tendency to question any statement in spite of the consensus of the scientific community, and a drift towards the idea that "one is worth one" and that the opinion of anyone is worth as much as the conviction of the scientific world - with devastating repercussions on the difficult path of humanity towards a better world: see the surreal debates on issues that are absolutely not controversial for scientists, such as the effectiveness and importance of mass vaccination, the hoax of chemtrails from airplanes, the ineffectiveness of homeopathy, the terrible reality of global warming.

Tommaso Dorigo is an experimental particle physicist who works for the INFN at the University of Padova, and collaborates with the CMS experiment at the CERN LHC. He coordinates the European network AMVA4NewPhysics as well as research in accelerator-based physics for INFN-Padova, and is an editor of the journal Reviews in Physics. In 2016 Dorigo published the book “Anomaly! Collider physics and the quest for new phenomena at Fermilab”. You can get a copy of the book on Amazon.
