This article is a brief explanation of how errors can be handled in computer code. The basic idea is that if an error arises then it should be obvious, and easily corrected.

The concept of error-handling and correction is relevant to the suggestion in my series, A Science Of Human Language, that what we see as the grammar of a language is simply an error-handling component of language. I suggest that the function of the grammatical aspects of language is this: if an error arises then it is made consciously or subconsciously obvious, and is easily corrected.

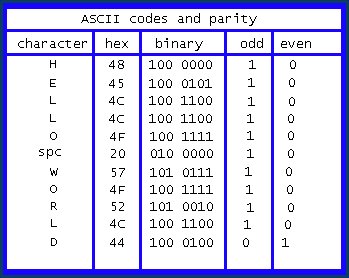

The ASCII code

ASCII, the American Standard Code for Information Interchange, pronounced ass-key, is a set of numeric codes for representing text. Computers use binary numbers, most commonly grouped into bytes of 8 bits, giving a range of values from 0000 0000 to 1111 1111, or 0 to 255 in decimal. The ASCII codes use only 7 bits, giving 128 values in a range from 0 to 127. This leaves one unused bit position in a standard computer byte of eight bits.

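The unused bit can be used for error detection. The procedure is to count the ones in a code and to set the spare bit so that the total number of ones, spare bit included, is always even (even parity) or always odd (odd parity), as agreed in advance by sender and receiver. At the receiving end of a communications link the ones are counted again. If the count has the wrong parity, a bit was corrupted in transit and a request is made to re-send the code. A single parity bit catches any odd number of flipped bits, but an even number of flips cancels out and goes unnoticed.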
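The parity procedure can be sketched in a few lines of Python. This is only an illustration: it assumes even parity and places the parity bit in the top (eighth) bit position, and the function names `add_even_parity` and `check_even_parity` are made up for this example.

```python
def add_even_parity(code: int) -> int:
    """Pack a 7-bit ASCII code and an even-parity bit into one 8-bit byte."""
    if not 0 <= code <= 127:
        raise ValueError("not a 7-bit ASCII code")
    ones = bin(code).count("1")      # count the ones in the 7-bit code
    parity_bit = ones % 2            # 1 only when the count is odd
    return (parity_bit << 7) | code  # assumption: parity goes in the top bit


def check_even_parity(byte: int) -> bool:
    """Return True when the 8-bit byte contains an even number of ones."""
    return bin(byte & 0xFF).count("1") % 2 == 0


sent = add_even_parity(ord("C"))     # 'C' is 100 0011: three ones, so parity bit is 1
damaged = sent ^ 0b00000100          # simulate one bit flipped on the line

print(check_even_parity(sent))       # True: accept the byte
print(check_even_parity(damaged))    # False: ask for a re-send
```

Note that the receiver learns only that something went wrong, not which bit is at fault, which is why the remedy is a re-send.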

More complex codes, which add several check bits rather than one, allow the receiving computer not only to detect an error but to locate and correct it automatically, without a re-send.
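One well-known code of this kind is the Hamming(7,4) code, which protects 4 data bits with 3 check bits and can correct any single flipped bit. The article does not name a particular code, so this is offered only as an illustrative sketch; the function names `hamming_encode` and `hamming_decode` are hypothetical.

```python
def hamming_encode(d):
    """Encode 4 data bits as a 7-bit codeword laid out as p1 p2 d1 p3 d2 d3 d4."""
    d1, d2, d3, d4 = d
    p1 = d1 ^ d2 ^ d4   # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4   # covers positions 2, 3, 6, 7
    p3 = d2 ^ d3 ^ d4   # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p3, d2, d3, d4]


def hamming_decode(codeword):
    """Correct up to one flipped bit, then return the 4 data bits."""
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    position = s1 + 2 * s2 + 4 * s3   # 0 means no error, else the 1-based bad position
    if position:
        c[position - 1] ^= 1          # flip the faulty bit back
    return [c[2], c[4], c[5], c[6]]


data = [1, 0, 1, 1]
sent = hamming_encode(data)
damaged = list(sent)
damaged[4] ^= 1                       # simulate one bit flipped on the line

print(hamming_decode(damaged) == data)   # True: the error was corrected silently
```

The three check results, read together as a binary number, point directly at the position of the damaged bit, so the receiver can repair it on its own. The correction is reliable only when at most one bit per codeword is flipped.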

In the case of human language, the error-handler is the grammar. When language users conform to the norms of word and sentence building, of spelling and pronunciation, of intonation and of conversation, then errors of reading or hearing are made prominent and are easily corrected. Most often, the language-handling parts of the brain correct the error, so that the hearer or reader is entirely unaware that there ever was any error.

Thatz Y U can reed this evn wiv orl of teh miss takes.
