Eleven years ago, DARPA predicted that a physics-induced train wreck was coming straight at the computer chip industry: the limits of what electricity and existing materials can do. Even then, quantum computers were touted as the answer, with black-box magic bridging the gap between existing technology and that vision.

Short of magic, the argument went, computers would have to start getting bigger again if performance was going to continue to increase. In many ways that has happened: supercomputers are now mostly giant racks of ordinary CPUs. But the market also lowered its expectations. People are content with handheld phones and tablets that are suitable for playing Angry Birds and watching YouTube videos.

With millions of closely packed transistor components at near-atomic size, chip designers finally have to ask whether we have reached the limits to computation.

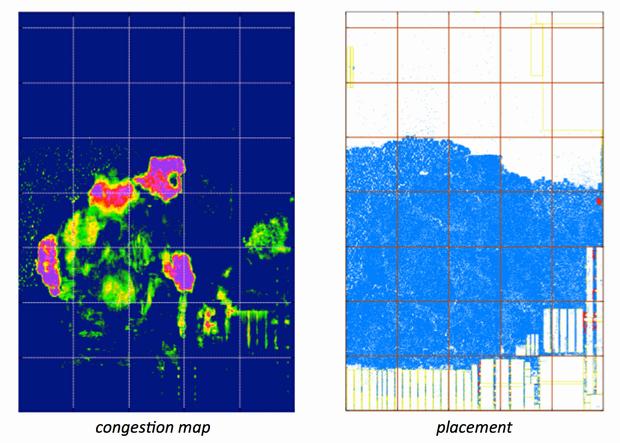

The image on the right shows the physical placement of a large integrated circuit with logic gates in blue and 'packing peanuts' (spacers) in red. The 'heat maps' on the left show wiring congestion -- green and yellow show sparse connectivity, but red and purple show insufficient routing resources. Routing congestion is one of the manifestations of physical limits to computation. The progression of images illustrates how computer-aided design tools address routing congestion by revising circuit placement. Credit: Myung-Chul Kim, Jin Hu, Igor L. Markov (University of Michigan)

Writing in Nature, Igor Markov of the University of Michigan reviews limiting factors in the development of computing systems to help determine what is achievable, identifying "loose" limits and viable opportunities for advancements through the use of emerging technologies.

The article summarizes and examines limitations in the areas of manufacturing and engineering, design and validation, power and heat, time and space, and information and computational complexity.

"What are these limits, and are some of them negotiable? On which assumptions are they based? How can they be overcome?" asks Markov. "Given the wealth of knowledge about limits to computation and complicated relations between such limits, it is important to measure both dominant and emerging technologies against them."

Limits related to materials and manufacturing are immediately perceptible. In a material layer ten atoms thick, missing one atom due to imprecise manufacturing changes electrical parameters by ten percent or more. Shrinking designs of this scale further inevitably leads to quantum physics and associated limits.

Limits related to engineering are dependent upon design decisions, technical abilities and the ability to validate designs. While very real, these limits are difficult to quantify. However, once the premises of a limit are understood, obstacles to improvement can potentially be eliminated. One such breakthrough has been in writing software to automatically find, diagnose and fix bugs in hardware designs.

Limits related to power and energy have been studied for many years, but only recently have chip designers found ways to improve the energy consumption of processors by temporarily turning off parts of the chip. There are many other clever tricks for saving energy during computation. But moving forward, silicon chips will not maintain the pace of improvement without radical changes. Atomic physics suggests intriguing possibilities but these are far beyond modern engineering capabilities.

Limits relating to time and space can be felt in practice. The speed of light, while a very large number, limits how fast data can travel. A signal traveling through copper wires and silicon transistors can no longer traverse a chip in one clock cycle. A formula limiting parallel computation in terms of device size, communication speed and the number of available dimensions has been known for more than 20 years, but it has become important only recently, now that transistors are faster than interconnections. This is why alternatives to conventional wires are being developed, but in the meantime mathematical optimization can be used to reduce the length of wires by rearranging transistors and other components.
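To get a feel for the speed-of-light limit, the short Python sketch below computes how far a signal could travel in one clock period if it moved at the speed of light in vacuum. The clock rates and the `velocity_fraction` parameter are illustrative assumptions, not figures from Markov's article, and the result is only an upper bound: real on-chip wires are slowed further by resistance and capacitance.

```python
# Speed of light in vacuum, exact by SI definition.
C_VACUUM_M_PER_S = 299_792_458


def distance_per_cycle_cm(clock_hz, velocity_fraction=1.0):
    """Upper bound on the distance (in cm) a signal covers in one clock period.

    velocity_fraction is the signal speed as a fraction of c. 1.0 is the
    vacuum limit; any smaller value is an illustrative assumption.
    """
    period_s = 1.0 / clock_hz
    return C_VACUUM_M_PER_S * velocity_fraction * period_s * 100.0


# Hypothetical clock rates, chosen only for illustration.
for ghz in (1, 3, 5):
    bound_cm = distance_per_cycle_cm(ghz * 1e9)
    print(f"{ghz} GHz: at most {bound_cm:.2f} cm per cycle at vacuum light speed")
```

At 3 GHz the bound is about 10 cm per cycle, which sounds generous next to a chip a couple of centimeters across. The practical limit is much tighter because signals in long, thin on-chip wires propagate far more slowly than light in vacuum.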

Several key limits related to information and computational complexity have been reached by modern computers. Some categories of computational tasks are conjectured to be so difficult to solve that no proposed technology, not even quantum computing, promises a consistent advantage. But studying each task individually often helps reformulate it for more efficient computation.

When a specific limit is approached and obstructs progress, understanding the assumptions made is key to circumventing it. Chip scaling will continue for the next few years, but each step forward will meet serious obstacles, some too powerful to circumvent.

Advanced techniques such as 'structured placement,' shown here and developed by Markov's group, are currently being used to wring out optimizations in chip layout. Different circuit modules on an integrated circuit are shown in different colors. Algorithms for placement optimize both the locations and the shapes of modules; some nearby modules can be blended when this reduces the length of the connecting wires. Credit: Jin Hu, Myung-Chul Kim, Igor L. Markov (University of Michigan)
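The idea of shortening wires by rearranging components can be illustrated with a toy Python sketch. It is not the structured-placement method from Markov's group. It uses half-perimeter wirelength, a common estimate in placement work, and a brute-force search that is feasible only for a handful of cells. The cell names, slot coordinates and nets are all hypothetical.

```python
from itertools import permutations


def hpwl(net, positions):
    """Half-perimeter wirelength: width plus height of the net's bounding box."""
    xs = [positions[cell][0] for cell in net]
    ys = [positions[cell][1] for cell in net]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def total_wirelength(nets, positions):
    """Sum of the half-perimeter estimates over all nets."""
    return sum(hpwl(net, positions) for net in nets)


def best_placement(cells, slots, nets):
    """Try every assignment of cells to slots and keep the cheapest one."""
    best_cost, best_positions = None, None
    for assignment in permutations(slots, len(cells)):
        positions = dict(zip(cells, assignment))
        cost = total_wirelength(nets, positions)
        if best_cost is None or cost < best_cost:
            best_cost, best_positions = cost, positions
    return best_cost, best_positions


# Hypothetical example: four cells in a single row of four slots.
cells = ["a", "b", "c", "d"]
slots = [(0, 0), (1, 0), (2, 0), (3, 0)]
nets = [("a", "b"), ("b", "c"), ("c", "d")]  # each net connects two cells

initial = {"a": (0, 0), "b": (3, 0), "c": (1, 0), "d": (2, 0)}
print("initial wirelength:", total_wirelength(nets, initial))

cost, placement = best_placement(cells, slots, nets)
print("best wirelength:", cost)
print("best placement:", placement)
```

Exhaustive search grows factorially with the number of cells, so production tools rely on far more scalable optimization methods. The sketch only shows why moving connected components next to each other reduces total wire length.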

What about breakthrough technologies? New techniques and materials can be helpful in several ways and can potentially be "game changers" with respect to traditional limits. For example, carbon nanotube transistors provide greater drive strength and can potentially reduce delay, decrease energy consumption and shrink the footprint of an overall circuit. On the other hand, fundamental limits, sometimes not initially anticipated, tend to obstruct new and emerging technologies, so it is important to understand them before promising a new revolution in power, performance and other factors.

"Understanding these important limits," says Markov, "will help us to bet on the right new techniques and technologies."
