The search tested the hypothesis that a massive fourth-generation quark was produced in the collisions. The quark was assumed to be heavy -otherwise previous searches would have found it already- and to behave similarly to the sixth quark, the top, which is by now a well-known animal of the particle zoo.

Top quarks have been sought by the CDF experiment since the late eighties, and studied ever since their discovery in 1995. A brother of the top quark would hardly have escaped detection if its mass had been similar to that of the top quark, but a significantly heavier body would have been hidden by its smaller production rate.

The reason for the small rate is that the heavier a particle is, the harder it is to produce at a hadron collider. The production process transforms the kinetic energy of the colliding quarks or gluons contained in the proton and antiproton into mass, and quarks and gluons carrying a large enough fraction of the beam energy are increasingly hard to find.
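To put a number on "large enough energy", here is a minimal sketch of the kinematic threshold. It assumes the Tevatron Run II centre-of-mass energy of 1.96 TeV and a top mass of about 172.5 GeV; neither value is quoted in this article, and the function name is just illustrative. To make a quark-antiquark pair of mass m each, the two colliding partons must share at least 2m of energy, so the product of their momentum fractions x1 and x2 must satisfy x1*x2 >= (2m)^2 / s.

```python
# Minimum share of the collision energy needed to pair-produce a heavy quark.
SQRT_S_GEV = 1960.0  # assumed: Tevatron Run II proton-antiproton energy

def min_parton_energy_fraction(quark_mass_gev, sqrt_s_gev=SQRT_S_GEV):
    """Return (sqrt(x1*x2), x1*x2) at threshold for producing a quark pair."""
    threshold = 2.0 * quark_mass_gev / sqrt_s_gev
    return threshold, threshold ** 2

# 172.5 GeV is an approximate top mass (assumption); 450 GeV is the t' hypothesis.
for name, mass in [("top", 172.5), ("t' (450 GeV)", 450.0)]:
    root_tau, tau = min_parton_energy_fraction(mass)
    print(f"{name}: sqrt(x1*x2) >= {root_tau:.3f}, x1*x2 >= {tau:.3f}")
```

A 450-GeV pair needs partons carrying, on average, close to half of the beam momentum each, against less than a fifth for top pairs; partons that energetic are rare inside a proton, which is where the smaller rate comes from.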

The search I reported on in 2008 found an intriguing excess of events compatible with the decay of a 450-GeV t' quark. The significance of that excess was merely two point something standard deviations -roughly the chance that you walk into a casino and win 35 times your stake with a single bet on a number at roulette. But it was interesting, and exciting.
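The roulette comparison can be checked with a few lines. This is a sketch under two assumptions of mine: that the significance is read as a two-sided Gaussian probability, and that the wheel is a European one with 37 pockets.

```python
import math

def two_sided_p_value(n_sigma):
    """Chance of a Gaussian fluctuation at least n_sigma from the mean, either side."""
    return math.erfc(n_sigma / math.sqrt(2.0))

# Assumption: European wheel, 37 pockets, a single number pays 35 to 1.
roulette_single_number = 1.0 / 37.0

for n_sigma in (2.0, 2.2, 2.5):
    print(f"{n_sigma:.1f} sigma -> p = {two_sided_p_value(n_sigma):.4f}")
print(f"single-number roulette bet -> p = {roulette_single_number:.4f}")
```

Under these assumptions a 2.2-sigma effect corresponds to a probability a bit below 3%, which is indeed close to the 1-in-37 chance of hitting your number.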

So much so, that I could not wait for more data to be thrown in, to see whether the excess would disappear -a sign that it was due to a background fluctuation- or become more solid -a sign that it might be due to a new particle signal!... or to a systematic underestimation of backgrounds.

Now the analysis has been carried out, with as much as 4.6 inverse femtobarns worth of proton-antiproton collisions: exactly twice as much data as that used in the previous search. And the result is...

No, I am not going to give it away so easily. You will have to deliberately scroll down to satiate your curiosity. The more polite among you will instead stay with me for a minute more. Their prize will be to learn a couple of details about the new analysis.

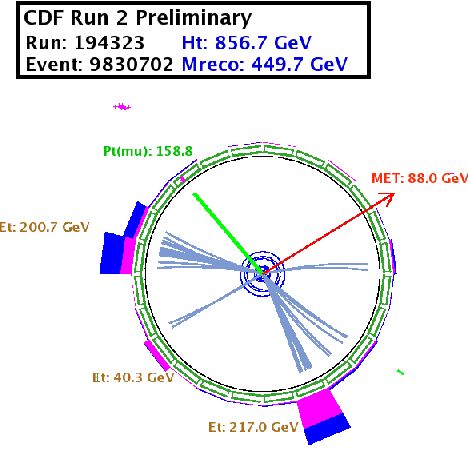

The analysis has not changed significantly from the previous instantiation - a good idea, given the need to investigate the early fluctuation at 450 GeV (on the right you get to see a t' candidate seen by CDF -it belongs to the dataset searched in 2008). The heavy t' quark is assumed to behave as any other standard-model quark, i.e. to decay via weak interactions through a charged-current W boson. For heavy objects, whose mass is larger than the sum of the masses of the W boson and the daughter quark, the emitted W boson is a real one, i.e. it carries its regular 80.4 GeV of mass -if we skip any discussion of its natural width, that is. That would be a useful but derailing explanation which I will leave to some other article.

If t' quarks are produced by strong interactions just like any other known quark, we should observe pairs of them: in other words, if they are standard model quarks they must carry a unit of "T'-ness" which strong interactions cannot produce out of the blue. So t' -anti-t' quark pairs are produced together, such that the final T'-ness of the system is still zero (+1 for the quark, and -1 for the antiquark).

The above observations drive CDF to focus on a very specific final state: the same one that proved successful in discovering, and later collecting and studying, the top-quark-pair samples produced at the Tevatron. This final state includes the signal of a leptonically-decaying W boson, a high-momentum electron or muon, which provides sufficient characterization to allow the efficient triggering of the events.

Two words about data collection

I think that many among you do not fully appreciate the importance of the trigger in a hadron collider experiment, so I will spend a paragraph or two describing what is going on there. You must bear in mind that the Tevatron produces collisions three million times per second in the core of the CDF detector, and there is simply no way to read out and store at such a rate the information collected by the hundreds of thousands of electronic channels to which the sensitive detecting elements report their findings. Instead, the trigger makes a choice: it selects the very few events deserving to be kept and later studied offline.

Of course, this is a critical decision! Among three million interactions per second, only about 100 (a hundred, yes, you read correctly) are saved; the rest are lost forever. And the fact that the decision is taken "on the fly" by hardware processors should send a shiver down your spine: there is no turning back. But that is exactly what is done by CDF; and D0, and CMS, and ATLAS for that matter!
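The arithmetic behind that decision fits in a few lines. The two rates are the ones quoted above; the variable names are mine.

```python
collision_rate_hz = 3_000_000  # collisions per second, as quoted in the text
saved_rate_hz = 100            # events per second written to storage

rejection_factor = collision_rate_hz // saved_rate_hz
kept_fraction = saved_rate_hz / collision_rate_hz

print(f"one event kept out of every {rejection_factor:,}")
print(f"fraction kept: {kept_fraction:.2e}")
```

In other words, the trigger throws away all but one collision in thirty thousand, and it has to pick the right one.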

To collect events containing a leptonically-decaying W boson -ones among which hide top pair events, as well as Higgs events- an electron or muon of high momentum must be observed by the online data acquisition system. In a matter of a microsecond or so the event is flagged as potentially good by the "first level" trigger; in a matter of some twenty microseconds it is reconstructed in more detail by the "second level" trigger, to decide whether the lepton signal is a genuine one; and finally, in a few milliseconds it is handled by software algorithms performing a full reconstruction at the "third level" trigger.

Every time I think about the working of the data acquisition system of CDF my mind cannot help picturing a grand concert. Particles hit each other at light speed in the core of the detector; other particles are created and emitted in all directions; they leave electronic signals in the active devices. These signals travel through meters of cables, get interpreted by hardware boards, the information is collated and signals are sent to other boards that enable the collection of more detailed information by the detector components. Then the more complete event information goes to other electronic boards; and so on, until the distilled result of a single lucky collision chosen among thirty thousand gets written to a storage medium. And all this goes on at a lightning rate.

It is, in earnest, mind-boggling. And I am sorry if my description was incomplete and approximate: the more you know about the system, the lower your jaw is bound to fall.

The analysis, finally

Okay, I think I have done my duty to science outreach well enough above. Now let me tell you in short about the analysis -if I did it in more detail, I fear I would not do a much better job than the relevant public web page anyway.

The selection is the one typically used to collect top-quark pairs: a well-identified, high-momentum electron or muon must be present together with significant missing transverse energy. The latter is the result of the escape of an energetic neutrino, which the leptonic W decay produced together with the triggering charged lepton.

Four hadronic jets are also required. These nominally correspond to the four energetic quarks emitted by t'-anti-t' pairs: two from the other W boson, which must therefore have decayed hadronically; and two from the down-type quarks that the t' quarks must have turned into. Mind you: these are not necessarily b-quarks, since we assume we know nothing of the way these fourth-generation up-type quarks couple to b, s, or d-quarks. Unlike top quarks, which decay to a b-quark 99.9% of the time, t' quarks might in principle all yield down quarks!

The above should tell you that b-tagging is of course not used, as is instead the case in most top quark searches. Standard model backgrounds are thus larger than in the typical top quark analyses (b-tagging is a powerful background rejecting tool), but not overly so. They are due to real top quark pairs -of course!, since yesterday's signal is today's background- and to W production events accompanied by radiation of additional jets.

A further small background is due to non-W events: ones for which the leptonic W signature was faked; this is rare, since you must get both a jet passing as an electron or muon, and a large imbalance in the detected energy in the calorimeters, which mimics the effect of an escaping neutrino.

The authors reconstruct the putative mass of the new heavy quark with a fit technique which is identical to that of the regular top mass measurement, save for the lack of any b-tagging information. This complicates matters, because if one does not have b-tags to indicate which jets come from the W decay and which ones do not, there are many different ways (12, if you are curious) to assign jets to the partons originating in the decay chain t't'bar -> W q W q -> (lnq)(qqq).
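If you wonder where the 12 comes from, here is a small counting sketch. It is my own illustration, not the authors' fitting code: four jets are assigned to the quark from the leptonic-side t', the quark from the hadronic-side t', and the two quarks from the hadronic W, and swapping the two W jets does not give a new solution.

```python
from itertools import permutations

def jet_parton_assignments(jets):
    """Enumerate the distinct ways to assign four jets to the decay partons.

    Roles: quark from the leptonic-side t', quark from the hadronic-side t',
    and the two quarks from the hadronic W. The two W jets are stored as an
    unordered pair, since exchanging them yields the same reconstructed W.
    """
    seen = set()
    for q_lep, q_had, w_a, w_b in permutations(jets, 4):
        seen.add((q_lep, q_had, frozenset((w_a, w_b))))
    return sorted(seen, key=lambda a: (a[0], a[1]))

# Jet labels are placeholders for the four selected jets.
assignments = jet_parton_assignments(["j1", "j2", "j3", "j4"])
print(len(assignments))  # 4! / 2 = 12
for q_lep, q_had, w_pair in assignments:
    print(q_lep, q_had, sorted(w_pair))
```

With b-tags one could discard the assignments that put a tagged jet in the W; without them, all 12 have to be considered.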

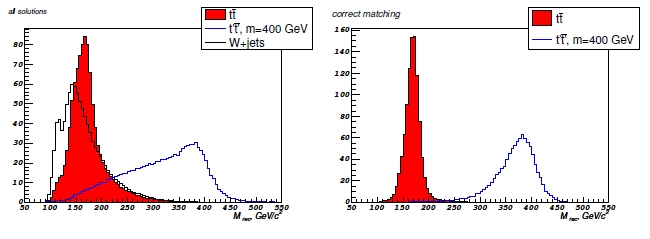

The figure shows how the fit reconstructs the mass of t' events (blue histogram, where the t' mass is simulated at 400 GeV), top events (red histogram -it clusters at about the true top mass as it should) and the main background (empty black histogram). On the top left panel you see all 12 solutions together; on the right you see the fit solution with the correct matching of the jets to final state partons (which we know since these are simulated events!). Reality is what you get on the left, but even there, there is still plenty of discrimination between top quarks and a heavier partner.

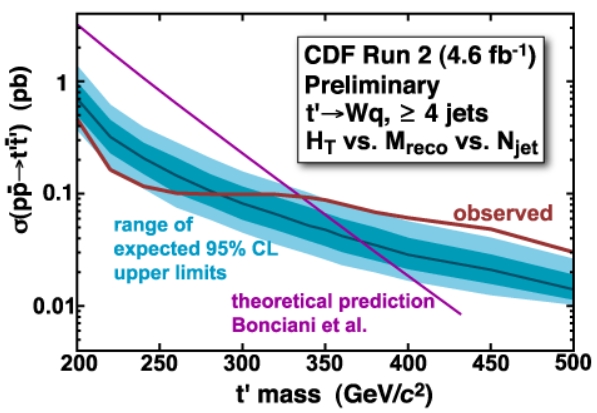

The reconstructed quark mass is then used together with another discriminating variable: the so-called Ht, which is the sum of transverse energies of all objects in the event, including the inferred neutrino. The t' mass and the Ht are fit together using a likelihood technique. This allows one to extract an upper limit on the cross section for the new physics process. It is summarized in the limit figure.
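As a minimal sketch of the Ht definition just given (the function name and the toy event are mine, and the numbers are invented for illustration, not taken from CDF data):

```python
def scalar_ht(lepton_et, jet_ets, missing_et):
    """Sum of transverse energies (GeV): charged lepton, jets, inferred neutrino."""
    return lepton_et + sum(jet_ets) + missing_et

# Toy event, invented for illustration only (not a CDF event).
toy_ht = scalar_ht(lepton_et=60.0, jet_ets=[120.0, 95.0, 70.0, 45.0], missing_et=80.0)
print(f"Ht = {toy_ht:.1f} GeV")
```

The heavier the parent quarks, the more energy their decay products carry away transversely, so Ht tends to be larger for a t' pair than for a top pair or for W plus jets.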

In the figure you see the t' mass on the horizontal axis. As a function of it, the purple curve describes the theoretical guess for the rate of t' production at the Tevatron, in picobarns (one picobarn is about a hundredth of a billionth of the total collision rate). As you see, the predicted rate decreases with the hypothetical particle mass, an effect of the rarity of more energetic collisions, as I mentioned above.
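To get a feeling for what a picobarn means in practice, here is a rough sketch. The number of events produced is the cross section times the integrated luminosity; the 4.6 inverse femtobarns is the dataset quoted above, while the total inelastic proton-antiproton cross section of about 60 millibarns is an assumption of mine, used only to check the "hundredth of a billionth" figure.

```python
PB_INV_PER_FB_INV = 1000.0  # 1 fb^-1 = 1000 pb^-1

def expected_events(cross_section_pb, integrated_lumi_fb_inv):
    """Events produced = cross section x integrated luminosity (before any selection)."""
    return cross_section_pb * integrated_lumi_fb_inv * PB_INV_PER_FB_INV

produced = expected_events(cross_section_pb=1.0, integrated_lumi_fb_inv=4.6)
print(f"1 pb over 4.6 fb^-1 -> about {produced:.0f} events produced")

# Assumption: total inelastic p-pbar cross section of roughly 60 millibarns.
TOTAL_CROSS_SECTION_PB = 60e-3 * 1e12  # 1 barn = 1e12 picobarns
fraction_of_total = 1.0 / TOTAL_CROSS_SECTION_PB
print(f"1 pb / total = {fraction_of_total:.1e}")
```

These are events produced, not events selected: trigger and selection efficiencies, which this sketch ignores, reduce the count considerably.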

Next is the blue band: it describes the rate limit that CDF expected to set, given the analysis and the amount of data it analyzed, and it spans from minus two to plus two standard deviations -95% of the expected outcomes, in other words. The red curve shows instead the actual limit computed with the data actually observed. The crossing between the purple and red lines indicates that all t' masses below 335 GeV are excluded: if the t' had been that light, its predicted rate would exceed the upper limit set by the red curve. Instead, for masses above that value, the data cannot exclude the t'.

But wait, that is not all. The data does not just "not exclude" a t' heavier than 335 GeV: if you look closely, you see that the red curve departs significantly from the blue band. The observed limit, for a 450 GeV t', is significantly weaker than expected! What is going on?

What is going on is that there is still an excess -a two-sigmaish one, apparently- of t'-like quarks with a mass of about 450 GeV, with respect to the standard model expectation. So the same effect seen with half the data, and a quite similar analysis, remains!

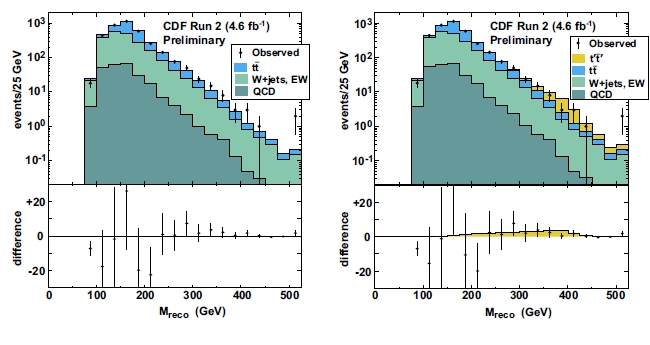

Let us look at the mass distributions for the data and the sum of backgrounds, shown in the figure. The black points are data, and the three main backgrounds are shown with different blue shadings. On the left the data is interpreted as the sum of backgrounds only; on the right, a 450 GeV t' is thrown in to see how well it fits the data. The lower parts of the figure show the difference between data and sums of backgrounds: judge for yourself if the yellow t' contribution is needed or not...

So, to conclude, the doubling of the data has not yielded a conclusive answer to the question raised by the result of the 2008 analysis: a 450-GeV t'-like quark is not excluded. And the rate of similar events in CDF is higher than expectations by over two standard deviations. One more fluctuation to keep an eye on. Or rather, one fluctuation you cannot yet take your eyes off!

It only remains for me to congratulate the main authors of this new CDF analysis (J. Conway, D. Cox, R. Erbacher, W. Johnson, A. Ivanov, T. Schwarz, and A. Lister), and I hope they will three-peat it when they get 7 or 8 inverse femtobarns of available collisions to analyze... Way to go, folks!

For more detailed reading on the analysis, please consult the relevant public document recently produced, which contains a nice introduction to the theoretical motivation for the search.
