It is the case of B physics analysts in the DZERO experiment, for instance, who published yesterday the signal of a nice new resonance: one that everybody knew would be there, but which only ATLAS had so far cared to look for.

Chi_b states are particles composed of a bottom-antibottom quark pair, just like the Upsilon particles observed by Lederman in 1977. Unlike in the Upsilons, however, the quarks in chi_b particles are in a P-wave configuration, that is, they carry one unit of relative orbital angular momentum. This makes a big difference, because these particles cannot then decay directly into lepton pairs as the Upsilons do. The Upsilons are readily observed in their Y->μμ decay: they have one unit of spin, which can be carried away by a virtual photon; the virtual photon then materializes into the lepton pair. So chi_b states are less easy to detect.

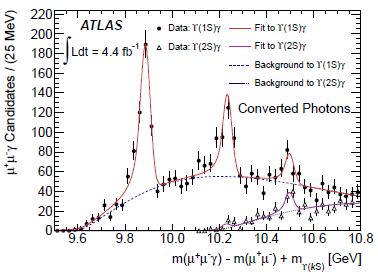

One way out is to search for radiative decays of a chi_b state into a Upsilon and a photon. We can detect these resonances via their radiative decays because they are heavier than the corresponding Upsilon states they decay into; otherwise energy conservation would forbid the decay. Such a search was recently performed by the ATLAS collaboration at the CERN LHC. They searched for muon pairs with an invariant mass consistent with the Y(1S) or Y(2S), attached a photon candidate to the pair, and computed the total three-body mass. Together with lower-mass resonances they saw a bump at 10539 ± 4 ± 8 MeV, which they interpreted as the chi_b(3P), a particle not yet catalogued in the Review of Particle Properties: new ground to claim for ATLAS. One such three-body mass spectrum is shown on the right: you can clearly see the three separate states, the chi_b(1P), chi_b(2P), and newly found chi_b(3P). I wrote about that analysis last December here, shortly after the ATLAS discovery.
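For readers who want to see what "computing the three-body mass" amounts to, here is a minimal sketch in Python. It is not the ATLAS code. The helper names (`four_vector`, `invariant_mass`, `toy_decay`) are made up for illustration, the Y(1S) and muon masses are rounded PDG values that the text above does not quote, and the toy decay is an idealized parent at rest with no detector effects.

```python
import math

MUON_MASS = 105.658       # MeV, rounded PDG value (assumption, not from the post)
UPSILON_1S_MASS = 9460.3  # MeV, rounded PDG value (assumption, not from the post)

def four_vector(px, py, pz, mass):
    """Build (E, px, py, pz) in MeV from a momentum and a mass."""
    energy = math.sqrt(px**2 + py**2 + pz**2 + mass**2)
    return (energy, px, py, pz)

def invariant_mass(*particles):
    """Invariant mass of the sum of any number of four-vectors."""
    e, px, py, pz = (sum(p[i] for p in particles) for i in range(4))
    return math.sqrt(max(e**2 - px**2 - py**2 - pz**2, 0.0))

def toy_decay(m_parent, m_upsilon=UPSILON_1S_MASS):
    """Hypothetical parent at rest -> Upsilon(-> mu mu) + photon, illustration only."""
    if m_parent <= m_upsilon:
        raise ValueError("decay forbidden by energy conservation")
    # Photon energy in the parent rest frame (two-body kinematics).
    k = (m_parent**2 - m_upsilon**2) / (2.0 * m_parent)
    # Muon momentum component transverse to the photon direction,
    # chosen so that the muon pair has exactly the Upsilon mass.
    a = math.sqrt(m_upsilon**2 / 4.0 - MUON_MASS**2)
    mu_plus = four_vector(a, 0.0, -k / 2.0, MUON_MASS)
    mu_minus = four_vector(-a, 0.0, -k / 2.0, MUON_MASS)
    photon = four_vector(0.0, 0.0, k, 0.0)
    return mu_plus, mu_minus, photon

mu_plus, mu_minus, photon = toy_decay(10539.0)
print(round(invariant_mass(mu_plus, mu_minus), 1))          # 9460.3, the dimuon mass
print(round(invariant_mass(mu_plus, mu_minus, photon), 1))  # 10539.0, the three-body mass
```

In real data the muon and photon momenta are measured with finite resolution, so the three-body mass of true chi_b decays spreads into a bump of finite width sitting on top of random combinations.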

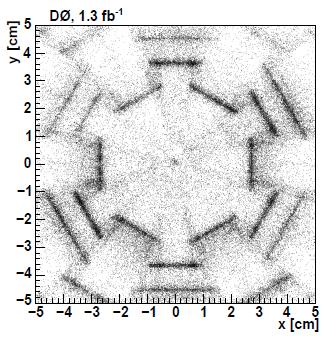

Now DZERO has just done a similar search, and confirmed the ATLAS finding. To search for μμγ combinations, DZERO chose to rely solely on photon candidates reconstructed as electron-positron pairs, because the photon emitted in the radiative decay of chi_b particles is usually too soft to be detected and measured effectively in the DZERO calorimeter. Photons that interact with nuclei of the material in the DZERO silicon tracker, instead, may convert into electron-positron pairs, which are then well reconstructed by the tracking algorithms. The figure on the right shows the reconstructed vertex position of a sample of electron-positron pairs in DZERO, in the plane transverse to the beam direction. The structure of the DZERO silicon tracker is revealed by the high density of points at the locations of conversion vertices.

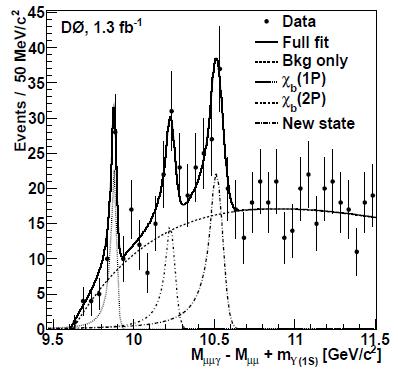

The three-body mass spectrum found by DZERO in 1.3 inverse femtobarns of 2 TeV proton-antiproton collisions is shown on the right. The three states also seen by ATLAS are clearly observable over the predicted background. DZERO can thus confirm the ATLAS finding, measuring a mass m(chi_b(3P)) = 10551 ± 14 ± 17 MeV, in good agreement with the previous determination. Note that DZERO has a worse mass resolution, so the three states they see are less well separated in the mass distribution. Nevertheless, the significance of the 3P state is above 5 standard deviations, so this particle can safely be archived as a confirmed state.
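To put a number on "good agreement", one can do a quick back-of-the-envelope check like the sketch below. It assumes that the two uncertainties quoted for each experiment are statistical and systematic, in that order, and that everything is uncorrelated between ATLAS and DZERO, so all four can be added in quadrature. Neither assumption is stated in the text above, and the function name `mass_pull` is mine.

```python
import math

def mass_pull(value_a, stat_a, syst_a, value_b, stat_b, syst_b):
    """Difference between two measurements in units of its combined uncertainty.

    Assumes all four uncertainties are uncorrelated and Gaussian,
    so they can be summed in quadrature.
    """
    sigma = math.sqrt(stat_a**2 + syst_a**2 + stat_b**2 + syst_b**2)
    return (value_a - value_b) / sigma

# Masses in MeV as quoted in the text: DZERO first, then ATLAS.
pull = mass_pull(10551.0, 14.0, 17.0, 10539.0, 4.0, 8.0)
print(f"{pull:.2f} standard deviations")  # prints "0.50 standard deviations"
```

A difference of about half a standard deviation is what one expects to see routinely between two measurements of the same quantity.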
