Mind you, the Standard Model is only an effective theory. This much has to be set straight: much like Newtonian mechanics, the Standard Model is incomplete and only works in a certain range of applications, but there it performs exceedingly well! We still use Newtonian mechanics to build bridges, but when we send satellites above the atmosphere we need to include the relativistic effects that modify our GPS calculations. In the same way, we expect to keep using the Standard Model to compute particle reactions even after a new theory extending it has been proven correct; but there will be a new realm of applications where the SM fails.

In fact, the term "effective theory" implies that the theory does work, but it also says that it is an approximation. I like the term, which is so wittily contradictory and concise. The Standard Model is one hell of an effective theory! And while I know I will one day see it fall, and I desperately hope it will be sooner rather than later (because only by finding a breach will we be able to know what lies behind it), my sceptical soul always feels unsatisfied by this or that three-sigma effect: while many colleagues attach a lot of weight to them, I always see their potential flaws, and even when the results are fool-proof, I still know how much more likely a fluctuation is than a true signal of new physics. I cannot help it: I always stick to Ockham's way of thinking. If you can explain it away with known sources, it is not due to an unknown agent.

"Entia non sunt multiplicanda praeter necessitatem" - William of Ockham

Meaning that Ockham's razor comes a-slashing at hypothetical entities, unless they are strongly called for as the most economical explanation. William of Ockham was the first true minimalist.

...But let us leave Philosophy to onanists and get back to Physics. So, what drives me to rejoice today is the observed evaporation of one of the very few "outstanding" anomalies that exist in subnuclear physics these days, one which was indicated as a clear smoking-gun signal of physics beyond the Standard Model (at least, in its currently accepted form).

The result, which was made public by the DZERO collaboration one week ago, is extremely similar to another result recently produced by its companion at the Tevatron collider, the CDF collaboration. The two analyses studied the phenomenon called "flavor oscillation" of particles called "B-sub-s", or B_s mesons.

The parameters governing the phenomenon can in fact be measured in hadronic collisions (with a lot of trouble, but they can), and a departure of their values from the very precise Standard Model expectation would be immediate proof that something in the description of the underlying physics must be changed. Not a radical, revolutionary change, but a sizable one: smaller than the direct observation of supersymmetric particles would be, but larger than the already established discovery of neutrino masses.

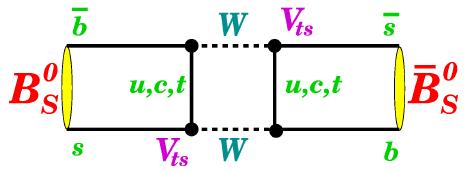

Now, not only does the pictured process happen: it is actually happening at a mind-boggling frequency, high enough that a

The frequency of the oscillation between the two states is an important parameter of the process, but it is by no means the only one. The two parameters that matter most here are actually the difference in decay width (the inverse of the lifetime) between the two possible quantum states of the

CP is the combined operation of replacing a particle with its antiparticle (which, among other things, flips its electric charge) and taking the mirror image of the result. If CP were an exact symmetry of Nature, what you obtain by doing that to a particle would behave exactly like the original. However, this equivalence is not perfect, and we then speak of a "violation" of CP symmetry.

Now, I do not expect you to understand what I am talking about here, but for the purpose of this article it does not really matter: the physics of flavor oscillations and CP violation is absolutely fascinating, but it is one of those things one cannot grasp without at least a basic knowledge of quantum mechanics. So, rather than trying my best to make do without those basics, I will go directly to the point. If you want to know how the light and heavy eigenstates make up the flavor ones, how the matrix describing the evolution and decay of these states contains off-diagonal parameters, and what this all means for the phenomenology, you are advised to look elsewhere. This article, for a change, stays at a more basic level.

So these particles oscillate, and then decay. We can determine whether they have oscillated or not (and thus measure their oscillation frequency) by finding which particles they decay into as a function of the distance they traveled, which we measure directly from the charged tracks of their decay products. When they decay, we can also study the angular distribution of their disintegration products, and from it the CP properties of the final state.
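For readers who like to see the arithmetic, here is a minimal Python sketch of the two steps just described, under simplifying assumptions of my own: CP violation is neglected, the production flavor is assumed perfectly known, and the numerical inputs (a B_s mass of about 5.367 GeV and an oscillation frequency of about 17.77 per picosecond, the value CDF reported in 2006) are approximate and only illustrative. The proper decay time follows from the flight distance as t = L·m/(p·c) (at a hadron collider one would use the transverse decay length and transverse momentum), and the fraction of mesons found in their original flavor then oscillates as cos(Δm t), damped by cosh(ΔΓ t/2) when the two states have different decay widths.

```python
import math

C_MM_PER_PS = 0.299792458  # speed of light, in millimetres per picosecond

# Approximate, illustrative inputs (not taken from the analyses discussed here)
BS_MASS_GEV = 5.367        # B_s meson mass
DELTA_M_S_PER_PS = 17.77   # B_s oscillation frequency, in ps^-1


def proper_time_ps(decay_length_mm: float, momentum_gev: float,
                   mass_gev: float = BS_MASS_GEV) -> float:
    """Proper decay time t = L * m / (p * c), from the measured flight distance."""
    return decay_length_mm * mass_gev / (momentum_gev * C_MM_PER_PS)


def mixing_asymmetry(t_ps: float, delta_m: float = DELTA_M_S_PER_PS,
                     delta_gamma: float = 0.0) -> float:
    """(N_unmixed - N_mixed) / (N_unmixed + N_mixed) at proper time t.

    With CP violation neglected, the unmixed and mixed rates are proportional to
    exp(-Gamma t) * [cosh(dGamma t / 2) +/- cos(dm t)]; the exponential cancels.
    """
    return math.cos(delta_m * t_ps) / math.cosh(0.5 * delta_gamma * t_ps)


def unmixed_fraction(t_ps: float, delta_m: float = DELTA_M_S_PER_PS,
                     delta_gamma: float = 0.0) -> float:
    """Fraction of mesons still in their production flavor at proper time t."""
    return 0.5 * (1.0 + mixing_asymmetry(t_ps, delta_m, delta_gamma))


if __name__ == "__main__":
    # A hypothetical candidate: 0.3 mm of flight with 10 GeV of momentum
    t = proper_time_ps(0.3, 10.0)
    print(f"proper time: {t:.3f} ps")
    print(f"probability of not having oscillated: {unmixed_fraction(t):.3f}")
```

With a frequency this high, a meson flips flavor several times within one lifetime, which is why the time resolution of the detector matters so much in these measurements.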

There are two crucial parameters measurable in the decay of

The Standard Model allows us to compute the contribution to these parameters from the exchange of known particles in the diagram already discussed above, and in other similar ones. So if we measure them well enough, we may expose the presence of unknown new bodies that contribute to the oscillation of

The hypothesis is not so far-fetched after all. A heavy fourth-generation quark, call it t', would give a contribution to the phase, departing sizably from the Standard Model prediction. In their publication, CDF explain this in some detail, so let me quote from it:

"[...] the Belle and BABAR collaborations have observed an asymmetry between direct CP asymmetries of charged and neutral [...] decays [...] it is difficult to explain this discrepancy without some source of new physics [...] if a new source of physics is indeed present in these transitions it may be enough to cause the different CP asymmetries that have been observed. [...] While there are surely a number of possible sources of new physics that might give rise to such discrepancies, George Hou predicted the presence of a t' quark with mass between 300 and 1,000 GeV in order to explain the Belle result and predicted a priori the observation of a large CP-violating phase in B_s → J/Psi phi decays [...]. Another result of interest in the context of these measurements is the excess observed at 350 GeV in the recent t' search at CDF using 2.3 fb-1 of data [...]. In this direct search for a fourth generation up-type quark, a significance of less than [...] is obtained for the discrepancy between the data and the predicted backgrounds, so that the effect, while intriguing, is presently consistent with a statistical fluctuation."

Intriguing, is it not? CDF uncharacteristically throws the stone, but then repents and hides its hand: we see something unexplained (but on which the jury is still out) in Belle and BaBar; this points to something observable at the Tevatron: a large phase of CP violation in the

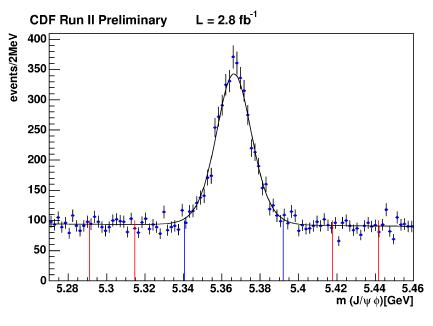

Leaving such rhetorical mastery aside, let us come to the measurements. I will not deal with how these ephemeral quantities are extracted from the large datasets analyzed by CDF and DZERO; suffice it to say that the angular distribution of [Ok, ok, a bit of additional detail for the few really nosy ones among my seventy-six readers: the Tevatron produces b-quarks in particle-antiparticle pairs; by identifying whether one of them is a b-quark or an anti-b-quark when it is produced, we infer that the other, at production, was the complementary one. This is needed because, crucially (since this is what creates the quantum interference effects we observe), both B_s and anti-B_s decay to the same final state of J/Psi and phi: this one time, we cannot use the decay products to infer the nature of the particle!

There exist several methods to "tag" whether the (anti-)B_s contains a b-quark or an anti-b-quark (same-side tagging), or to identify the nature of the other (anti-)b-quark produced together with the one yielding the (anti-)B_s (opposite-side tagging). Here, "side" has a spatial meaning: when the b-quark pair is produced, the two quarks typically leave the interaction point in opposite directions, which then define the two sides of the event.]
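The bookkeeping of opposite-side tagging can be sketched in a few lines of Python. This is my own illustration, not the experiments' code: it uses the standard convention that the B_s contains an anti-b quark (and the anti-B_s a b quark), and it adds two standard notions the text does not mention. The mistag rate w is the fraction of wrong tags, which dilutes any measured flavor asymmetry by the factor D = 1 - 2w; the effective tagging power εD² tells how much statistical power survives tagging. The efficiencies and mistag rates used below are hypothetical.

```python
def signal_flavor_from_opposite_side(opposite_quark: str) -> str:
    """b-quarks are produced in b / anti-b pairs, so the signal side carries
    the complement of the opposite-side tag. Convention: B_s0 = (s, anti-b)."""
    if opposite_quark == "b":
        return "B_s0"        # the signal side was an anti-b quark
    if opposite_quark == "anti-b":
        return "anti-B_s0"   # the signal side was a b quark
    raise ValueError(f"unknown opposite-side tag: {opposite_quark!r}")


def dilution(mistag_rate: float) -> float:
    """A fraction w of wrong tags shrinks asymmetries by D = 1 - 2w."""
    return 1.0 - 2.0 * mistag_rate


def observed_asymmetry(true_asymmetry: float, mistag_rate: float) -> float:
    # Wrong tags move events between the "unmixed" and "mixed" samples
    return dilution(mistag_rate) * true_asymmetry


def effective_tagging_power(efficiency: float, mistag_rate: float) -> float:
    """epsilon * D^2: the equivalent fraction of perfectly tagged events."""
    return efficiency * dilution(mistag_rate) ** 2


if __name__ == "__main__":
    print(signal_flavor_from_opposite_side("b"))  # B_s0
    # Hypothetical tagger: tags 50% of events, wrong 30% of the time
    print(effective_tagging_power(0.5, 0.3))
```

A tag that is right only half of the time (w = 0.5) carries no information at all, which the dilution factor makes explicit.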

In March 2008 the situation with the measurement of

"With the procedure we followed to combine the available data, we obtain evidence for NP at more than. [...] We are eager to see updated measurements using larger data sets from both the Tevatron experiments in order to strengthen the present evidence, waiting for the advent of LHCb for a high-precision measurement of the NP phase".

The article was greeted with a good dose of scepticism by the community. In CDF, we knew that the exercise carried out by the theorists for their paper was not a precise one: they had taken the results of CDF and DZERO and combined them without the necessary information, since DZERO had not released everything required for a careful combination. Some back-and-forth ensued, and then people moved on.

Then CDF, and finally, last week, DZERO updated their measurements by doing exactly what the theorists were anxious to see done: they added statistics, each analyzing a total of 2.8 inverse femtobarns of data.
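Why does adding statistics help? A rough rule, sketched below in Python under the assumption that systematic uncertainties are negligible and that the selection efficiency stays constant, is that the number of selected events grows linearly with the integrated luminosity, while the statistical uncertainty shrinks like one over the square root of the number of events. The cross-section, efficiency and earlier dataset size in the example are hypothetical placeholders, not numbers from the CDF or DZERO analyses.

```python
import math

PB_INV_PER_FB_INV = 1000.0  # 1 fb^-1 = 1000 pb^-1


def expected_events(cross_section_pb: float, lumi_fb_inv: float,
                    efficiency: float) -> float:
    """N = cross-section * integrated luminosity * selection efficiency."""
    return cross_section_pb * lumi_fb_inv * PB_INV_PER_FB_INV * efficiency


def scaled_stat_uncertainty(sigma_old: float, lumi_old_fb_inv: float,
                            lumi_new_fb_inv: float) -> float:
    """Statistical error scales as 1/sqrt(N), and N is proportional to luminosity."""
    return sigma_old * math.sqrt(lumi_old_fb_inv / lumi_new_fb_inv)


if __name__ == "__main__":
    # Hypothetical: a measurement with uncertainty 1.0 on 1.4 fb^-1, redone on 2.8 fb^-1
    print(scaled_stat_uncertainty(1.0, 1.4, 2.8))  # about 0.71
```

Doubling the data therefore buys only a factor of about 1.4 in precision, which is why these measurements improve slowly.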

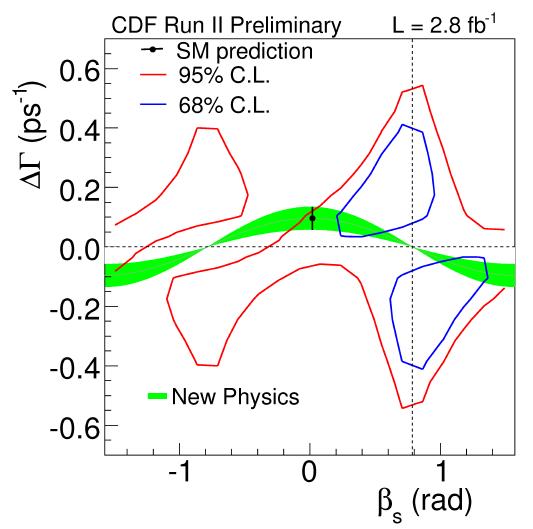

The CDF result is shown in the plot above. The plane of the two parameters is divided by iso-probability contours. The tighter contours, in blue, enclose the one-standard-deviation region around the measured values; the red contours enclose the two-standard-deviation region. The Standard Model prediction for the two parameters is shown by the black point with error bar in the center: you can see that it lies away from the most probable value, but is still well within the two-sigma region. The green band, by the way, shows the region allowed for new-physics values of the two parameters.
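To make the notion of a "one-sigma" or "two-sigma" region in a two-parameter plane concrete, here is a small Python sketch. It assumes, for illustration only, that the likelihood is a two-dimensional Gaussian, which the real B_s likelihoods need not be. Under that assumption the quantity -2Δln L follows a χ² distribution with two degrees of freedom, whose cumulative distribution is 1 - exp(-x/2); inverting it gives the thresholds for 68.27% and 95.45% coverage (about 2.30 and 6.18), and a point such as a theory prediction can be classified by its squared Mahalanobis distance from the best fit. The best-fit values, uncertainties and correlation in the example are hypothetical.

```python
import math


def delta_chi2_threshold_2d(coverage: float) -> float:
    """Invert the chi^2 CDF for 2 degrees of freedom, F(x) = 1 - exp(-x/2)."""
    return -2.0 * math.log(1.0 - coverage)


def mahalanobis_sq(point, center, sigma_x, sigma_y, rho):
    """Squared distance of `point` from `center` for a correlated 2D Gaussian."""
    dx = (point[0] - center[0]) / sigma_x
    dy = (point[1] - center[1]) / sigma_y
    return (dx * dx - 2.0 * rho * dx * dy + dy * dy) / (1.0 - rho * rho)


def region(point, center, sigma_x, sigma_y, rho):
    """Classify a point against the 1-sigma and 2-sigma (2D) contours."""
    d2 = mahalanobis_sq(point, center, sigma_x, sigma_y, rho)
    if d2 <= delta_chi2_threshold_2d(0.6827):
        return "inside 1 sigma"
    if d2 <= delta_chi2_threshold_2d(0.9545):
        return "between 1 and 2 sigma"
    return "outside 2 sigma"


if __name__ == "__main__":
    # Hypothetical best fit and uncertainties, NOT the CDF numbers
    best_fit = (0.5, 0.15)
    sm_point = (0.0, 0.1)
    print(region(sm_point, best_fit, sigma_x=0.25, sigma_y=0.06, rho=0.0))
    # between 1 and 2 sigma
```

Note that the two-dimensional thresholds are larger than the familiar one-dimensional ones (1 and 4), since a region in a plane must enclose the same probability while spreading over two directions.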

The CDF results left everybody somewhat wanting: they still showed some discrepancy with the Standard Model prediction, at the level of 1.5 standard deviations, equivalent to a 7% probability of observing a discrepancy at least this large if the SM were right. Seven percent! That is comparable to the chance of winning a bet on three numbers at roulette (a so-called "street bet", made by placing a chip at the edge of a row). Would you attach to such an occurrence the significance needed to claim that a fantastically accurate theory, which has withstood thirty-five years of scrutiny, belongs in the trash bin?

Of course not; and yet, the result seemed to be exactly what one would expect to see if a new particle were circulating in the loop in

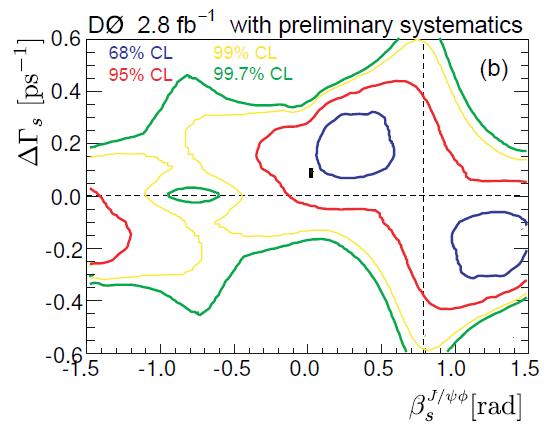

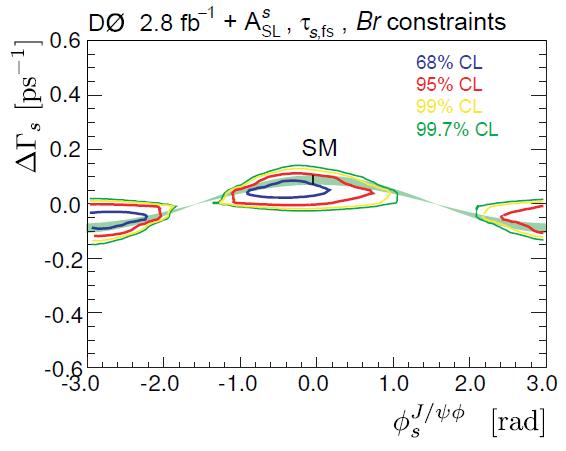

But now DZERO comes in, and finds a 10% probability that the Standard Model would produce a discrepancy at least as large as the observed one. The DZERO result is shown in the accompanying plot, whose meaning should be easy to guess by now.
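The correspondence between the probabilities and the "standard deviations" quoted in this article (1.5 sigma and 7% for CDF, 10% for DZERO) can be checked with a Gaussian tail integral. In the minimal Python sketch below, which is my own illustration, the 7% figure matches a one-sided tail (the two-sided probability for 1.5 sigma would be about 13%), and a one-sided 10% corresponds to roughly 1.28 standard deviations. For comparison, a street bet at roulette wins with probability 3/37, about 8.1%, on a single-zero wheel, or 3/38, about 7.9%, on an American double-zero wheel.

```python
import math


def one_sided_p(z: float) -> float:
    """Probability that a standard Gaussian fluctuates above +z."""
    return 0.5 * math.erfc(z / math.sqrt(2.0))


def two_sided_p(z: float) -> float:
    """Probability of a fluctuation beyond +z or below -z."""
    return math.erfc(z / math.sqrt(2.0))


def z_from_one_sided_p(p: float, lo: float = 0.0, hi: float = 10.0) -> float:
    """Invert one_sided_p by bisection (it decreases monotonically in z)."""
    for _ in range(100):
        mid = 0.5 * (lo + hi)
        if one_sided_p(mid) > p:
            lo = mid  # tail still too large: the significance is higher
        else:
            hi = mid
    return 0.5 * (lo + hi)


STREET_BET_SINGLE_ZERO = 3 / 37
STREET_BET_DOUBLE_ZERO = 3 / 38

if __name__ == "__main__":
    print(f"1.5 sigma, one-sided: {one_sided_p(1.5):.3f}")  # 0.067
    print(f"1.5 sigma, two-sided: {two_sided_p(1.5):.3f}")  # 0.134
    print(f"10% one-sided -> {z_from_one_sided_p(0.10):.2f} sigma")
    print(f"street bet: {STREET_BET_SINGLE_ZERO:.3f} / {STREET_BET_DOUBLE_ZERO:.3f}")
```

Python's standard library also offers `statistics.NormalDist().inv_cdf`, which could replace the hand-written bisection; the explicit loop just keeps the logic visible.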

Now, ten percent is really not worth commenting on! It is still lower than 50%, true; and if combined with the CDF measurement, especially in a quick-and-dirty fashion, it can still make for some discrepancy. But it is not a notitia criminis anymore. However, DZERO did more than just measure the parameter space allowed by their data: they went on to combine it not with their competitors' result, but with other information from B-physics measurements available in the literature: lifetimes, asymmetries, branching fractions. This brings in some model dependence, but the final result is rather astonishing: the allowed region of the parameter space shrinks quite sizably!
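Why does bringing in more information shrink the allowed region? The simplest version of the argument, sketched below in Python, is the inverse-variance weighted average of independent Gaussian measurements of a single quantity: the combined uncertainty is always smaller than that of each input. This is a deliberate simplification, not DZERO's procedure, which combined two-dimensional likelihoods with other B-physics constraints and, as noted above, brings in some model dependence. The input numbers are hypothetical.

```python
import math
from typing import Iterable, Tuple


def combine(measurements: Iterable[Tuple[float, float]]) -> Tuple[float, float]:
    """Inverse-variance weighted mean of independent (value, sigma) measurements."""
    data = list(measurements)  # allow any iterable, consumed only once
    weights = [1.0 / sigma ** 2 for _, sigma in data]
    total_weight = sum(weights)
    mean = sum(w * value for w, (value, _) in zip(weights, data)) / total_weight
    return mean, math.sqrt(1.0 / total_weight)


if __name__ == "__main__":
    # Hypothetical: one direct measurement plus one indirect constraint
    print(combine([(1.0, 0.5), (2.0, 0.5)]))  # mean 1.5, sigma about 0.354
```

The formula only holds for independent, Gaussian inputs; correlated or strongly non-Gaussian likelihoods, like those in the B_s plane, require combining the full likelihoods instead.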

In the plot above, you can see the "trust all" result of DZERO, which includes all known constraints. The Standard Model still lies between the 1- and 2-sigma contours, but the green band, which represents the relationship expected between

The above plot, to me, is enough to make the
