In fact, one of the explicit goals of the CDF and DZERO experiments for Run II of the Tevatron, which lasted from 2002 to 2010, was to study extensively the top quark both as a standard model object, subjected as it is to electroweak interactions which can be studied and compared to predictions, and as a portal to new physics of various kinds.

In the field of precision measurements, CDF and DZERO have produced very precise determinations of the top mass and the top pair production cross section, plus a few less precise but still quite interesting measurements of other properties, such as production asymmetries, spin, charge, production and decay mechanisms, rare decays, et cetera. Everything agrees with the standard model calculations, except maybe the production asymmetry, which appears at odds with predictions by over two standard deviations. The effect is clearly systematic, having been observed in both CDF and DZERO data.

In the field of searches for new physics, the top quark has also been central to the Tevatron investigations. To the search for departures from standard model predictions in the measurement of the properties already discussed one should add the search for particles decaying into top quarks, the search for particles produced in top quark decays, and a host of searches (mostly for supersymmetric signatures) where top quark pairs constituted not a signal but a nasty background, whose production needed to be known very accurately.

In particular, the search for resonances decaying into top quark pairs is one of the new physics searches on which CDF and DZERO have been focusing their attention lately, for a good reason: the observed asymmetry in the production of top pairs could indeed signal their production by some unknown mechanism in addition to the well-studied ones. A particle which could decay to top quark pairs and produce such a pattern is a new gauge boson Z', heavy enough to have escaped previous searches and to produce two top quarks in its disintegration, and also one unwilling to decay to electron or muon pairs (otherwise we would have discovered it already).

One such search appeared yesterday on the arXiv, and it is by CDF. I will be pardoned if I call this the CDF III collaboration: a few months ago the experiment revamped its author list to cut the dead branches, significantly clearing the list of people like me, who obviously do not belong there any longer (in fact, a couple of months ago I myself decided to remove my name rather than trying to find excuses for continuing to appear).

So you can well imagine the joy of being able to write about a CDF publication without the fear of incurring the wrath of collaborators angry because of the diffusion of internal information, or of being blamed for criticizing a result of a collaboration I am no longer part of! Indeed, I will mention one or two things I do not like about the analysis while I describe it.

The search is based on the full Run II luminosity, as the title itself clearly declares. Upon reading the fine print, this equates to 9.45 inverse femtobarns of collisions: not the 12/fb delivered during Run II by the Tevatron, but rather 79% of it. Of course one expects a difference between delivered and integrated luminosity: the latter is the data collected when the detector was fully operational, and which is labeled "good for physics".

Having been on weekly data-taking shifts over a dozen times in the last decade, as scientific coordinator in the CDF control room, I know well that the fraction of data which is good for physics is usually 90% or even a bit less: this is actually a robust design feature of the data-taking system of the experiment, for reasons which I explained elsewhere. But 79% seems a rather low number: I was expecting CDF would be able to surpass the 10/fb mark of analyzable data. Anyway, arguably the difference between 9.45 and something above 10/fb is going to have no appreciable impact on the physics one can extract from the final CDF dataset.

The search strategy involves the selection of a sample of data contributed in significant fraction by top quark decays, without however the explicit reconstruction of top quark signals. CDF takes events with a lepton, missing transverse energy (signalling the escape of an energetic neutrino from W decay), and three or four hadronic jets (at least one of them b-tagged), and computes the invariant mass of all objects observed in the final state, including additional jets if these are present.
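As a rough illustration of the observable described above, here is a minimal Python sketch of the invariant mass of everything in the final state. The function name and the `(E, px, py, pz)` tuple layout are assumptions made for this example, not anything taken from the CDF analysis code; the neutrino would enter as one more four-vector once its longitudinal momentum has been estimated.

```python
import math

def invariant_mass(four_vectors):
    """Invariant mass (GeV) of a set of (E, px, py, pz) four-vectors.

    The list is meant to hold the lepton, the neutrino hypothesis and
    all selected jets, including any additional ones.
    """
    e = sum(v[0] for v in four_vectors)
    px = sum(v[1] for v in four_vectors)
    py = sum(v[2] for v in four_vectors)
    pz = sum(v[3] for v in four_vectors)
    m2 = e * e - (px * px + py * py + pz * pz)
    # Guard against tiny negative values from floating-point rounding.
    return math.sqrt(max(m2, 0.0))
```

For instance, two massless back-to-back objects of 50 GeV each, `[(50, 50, 0, 0), (50, -50, 0, 0)]`, give a mass of 100 GeV.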

I found that choice a bit odd: the explanation in the paper is that by doing so they remain sensitive to decays that do not include top quarks, but admittedly there is no reference to models that would predict a resonance decaying to a W boson and multiple hadronic jets, one or two of them containing b-quarks. What one has to pay for this added "versatility" of the search is a lower resolution in the invariant mass of the decaying object: a kinematic fit using the mass of the top quark as a constant input would constrain the mass of the resonance more precisely, with added sensitivity.

The W boson mass is instead still used, as in most top quark-related searches and measurements in lepton+jets topologies, when reconstructing a hypothesis for the longitudinal component of the neutrino momentum. Remember, protons and antiprotons collide at roughly 1 TeV of energy each, but what produces the actual hard collision are quarks that carry an unknown fraction of the protons' momenta along the longitudinal direction: this prevents us from measuring the longitudinal component of the missing energy. Still, if one takes just the transverse components of the missing energy and attributes them to the neutrino, then the hypothesis that the charged lepton and the neutrino come from the W decay allows one to cook up a second-degree equation where the unknown is the neutrino Pz component.
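The second-degree equation can be sketched in a few lines of Python. This is only an illustration under stated assumptions: lepton and neutrino are treated as massless, the W mass is fixed at about 80.4 GeV, and the function and variable names are invented for the example. When the discriminant is negative the sketch returns the real part of the roots, which is one common convention and not necessarily what CDF does.

```python
import math

M_W = 80.4  # GeV, approximate W boson mass used as the constraint

def neutrino_pz_solutions(lep, met_x, met_y, m_w=M_W):
    """Solve the W-mass constraint for the neutrino pz.

    lep is the charged-lepton momentum (px, py, pz) in GeV; met_x and
    met_y are the missing transverse energy components, attributed to
    the neutrino. Returns the two real roots, or the single real part
    when the discriminant is negative.
    """
    lx, ly, lz = lep
    pt2_l = lx * lx + ly * ly          # lepton transverse momentum squared
    e2_l = pt2_l + lz * lz             # lepton energy squared (massless)
    pt2_nu = met_x * met_x + met_y * met_y
    mu = 0.5 * m_w * m_w + lx * met_x + ly * met_y
    a = mu * lz / pt2_l
    disc = a * a - (e2_l * pt2_nu - mu * mu) / pt2_l
    if disc < 0.0:
        return [a]
    root = math.sqrt(disc)
    return [a - root, a + root]
```

With two real roots one still has to choose between them (or keep both hypotheses); the sketch leaves that choice to the caller.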

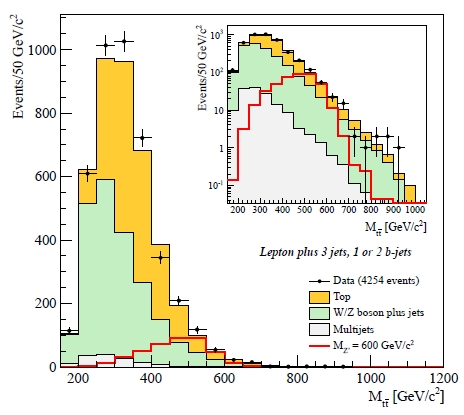

The selected data amount to 4254 events with three jets and 3049 with four jets. The top-pair background constitutes 43% of the former, and 78% of the latter. Other backgrounds are contributed by W+jets production and QCD multijet production (where the lepton and neutrino are either fakes or result from the decay of bottom quarks). The mass distribution of the events is shown in the figure below. The data is shown as black points with statistical error bars, and the three main backgrounds are stacked in two shades of grey and yellow (the top quark component). The hypothetical contribution from a signal of a Z' boson of 600 GeV is overlaid in red. Below, the W+three-jet data:

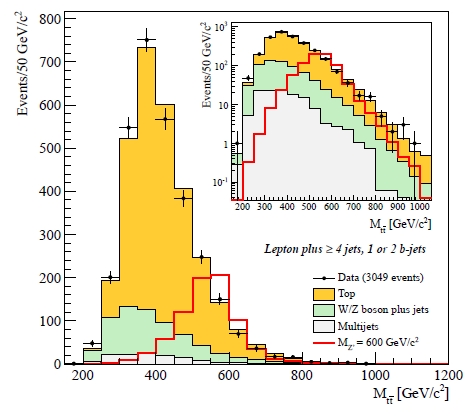

And below, the W+four-jet data:

These figures make it clear that a 600 GeV Z' would have stuck out head and shoulders in the dataset. In fact, the CDF paper underlines carefully that there is good sensitivity to a Z' below 750 GeV, better than that of the LHC experiments, due to the peculiarity of the production of such an object (quark-antiquark annihilation) and the hypothesis that it does not decay into leptons. The LHC experiments would still exclude the predicted cross section of such a "low-mass" new particle with their datasets, but that does not need to be stressed: ATLAS and CMS are nowadays focusing rather on the very high end of the energy range allowed by the 8 TeV collisions of the LHC.

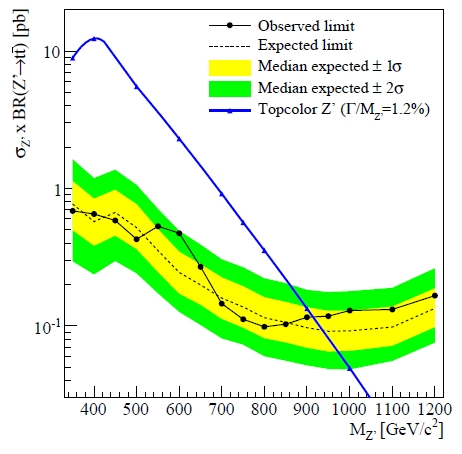

The absence of an observed signal is turned, as customary, into an upper limit on the production cross section of a Z' boson as a function of the hypothetical particle mass. Here a Bayesian technique is used for the task, where systematic uncertainties are added as Gaussian priors. I was surprised to read that the modeling of these "nuisance parameters" (ones coming from imperfect knowledge of final state radiation, jet energy scale, b-tagging efficiency, PDFs, fraction of heavy flavors in the W+jets background, QCD background normalization), most of which are positive-definite quantities, is performed with a Gaussian function rather than with a log-normal or a Gamma distribution. This forces CDF to truncate the Gaussian for negative values. A detail, but I consider it an imperfection (in CMS we nowadays cause a lot of pain to our analysts if they dare do such a thing), albeit not a very significant one as far as the result is concerned.
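To make the point about priors concrete, here is a small Python sketch contrasting a Gaussian prior truncated at zero with a log-normal one for a positive quantity. Everything here is hypothetical and for illustration only: the function names are invented, and the log-normal width is set with the convention kappa = 1 + relative uncertainty, which is an assumption of this example rather than a description of the CDF or CMS machinery.

```python
import math
import random

def gaussian_negative_fraction(rel_unc):
    """Fraction of a Gaussian(1, rel_unc) prior lying below zero."""
    return 0.5 * math.erfc(1.0 / (rel_unc * math.sqrt(2.0)))

def sample_truncated_gaussian(nominal, rel_unc, rng):
    """Draw from Gaussian(nominal, rel_unc * nominal), rejecting values <= 0."""
    while True:
        x = rng.gauss(nominal, rel_unc * nominal)
        if x > 0.0:
            return x

def sample_lognormal(nominal, rel_unc, rng):
    """Draw from a log-normal with median `nominal`.

    Assumed convention: multiplicative width kappa = 1 + rel_unc, so the
    sample is positive by construction and no truncation is needed.
    """
    return nominal * math.exp(rng.gauss(0.0, math.log(1.0 + rel_unc)))
```

For small relative uncertainties the truncation removes a negligible part of the Gaussian, which is consistent with the remark that the choice matters little for the result; with a 50% uncertainty, about 2.3% of the Gaussian lies below zero.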

In the end CDF can exclude Z' bosons of the leptophobic kind with masses below 915 GeV, at 95% confidence level. The graph showing the actual exclusion limit is the one below.

To conclude, this is the first article of the CDF collaboration which I can comment on "from the outside", and I have thus tried to be as critical as I could about the details of the analysis. Nonetheless, this is a nice new result and I congratulate my ex-colleagues for producing it, at a time when many if not most of them are already busy with other experiments.
