The Dutch translation of Ray Kurzweil's 2005 bestseller “The Singularity is Near: When Humans Transcend Biology” (the title says it all!) is about to be released, so this post on 'practical PAC' summarises my objections to the idea. The Singularity was a case study in my research, chosen to see whether I could analyse its claims with PAC. This post will probably not reveal anything new to people who follow this particular transhumanist ideal (enthusiasts and critics alike), but it was a good exercise for testing the PAC methodology.

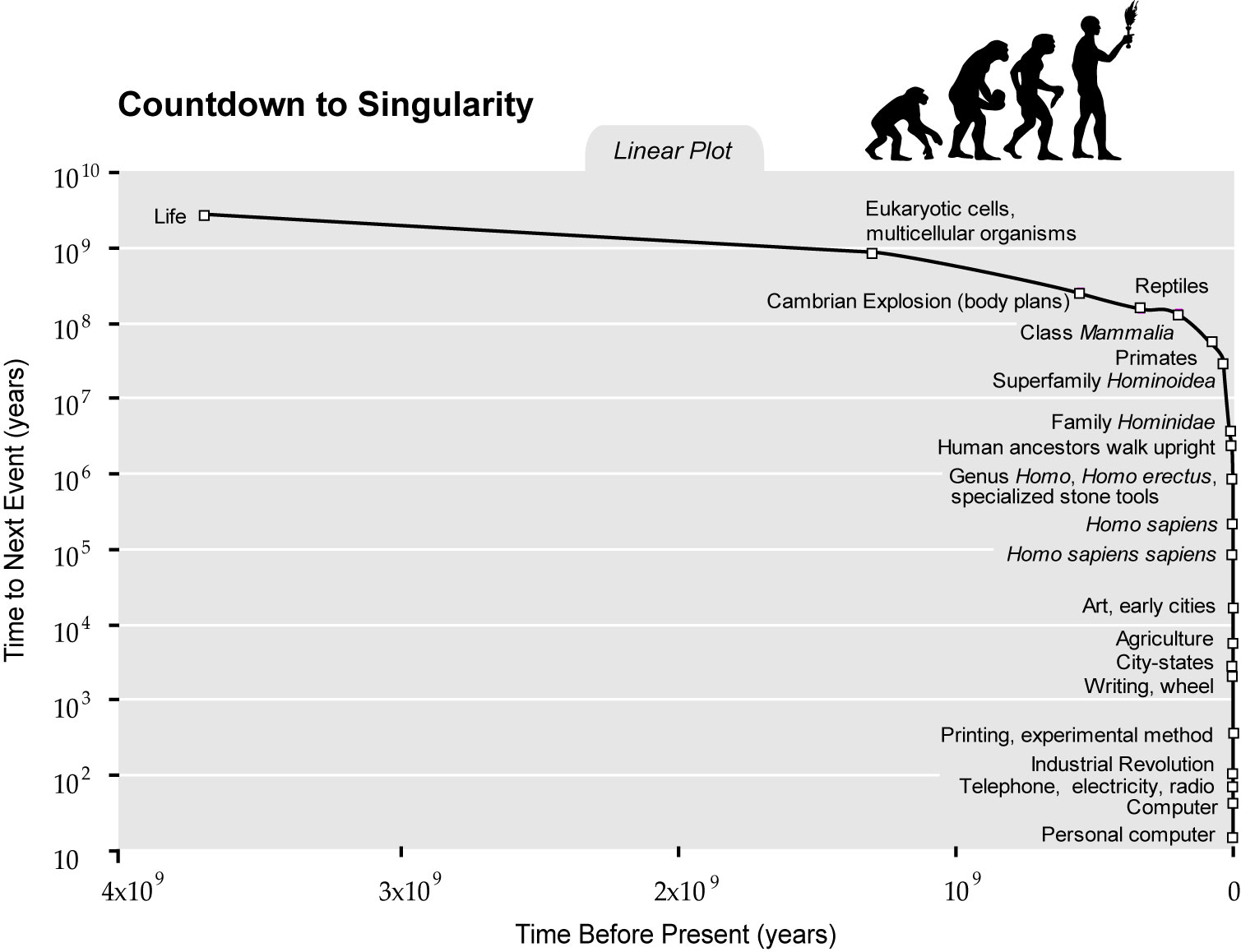

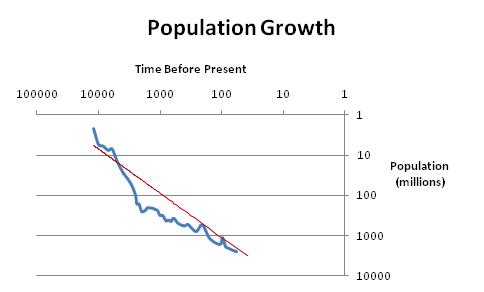

A quick recap: ‘Singularitarians’ (with a capital ‘S’) are people who believe that in a decade or three, technological intelligence will supersede biological intelligence because of the “Law of Accelerating Returns”. This ‘law’ claims that technological progress is increasing exponentially, with the result that at a certain point in time the increase blows off the charts and becomes infinite. This point has been argued with meticulous zeal by Ray Kurzweil, the well-known American entrepreneur and futurologist. One example graph from his 2005 book “The Singularity is Near”, which was, among other things, a New York Times bestseller, demonstrates his point (Kurzweil 2005):

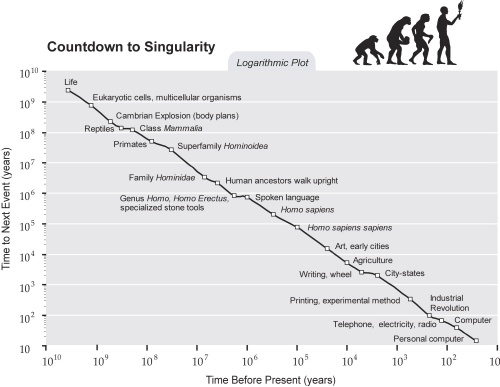

The first graph shows the ‘Countdown to Singularity’ in its most dramatic form, while the other graph shows the same data in a log-log plot. Either way, the ‘time to the next event’ shrinks faster and faster and will soon be measured in minutes and seconds rather than years or decades. These events correspond with ‘canonical milestones’ in the evolution of human intelligence, and because the more recent events correspond with technological innovations, the claim made by Kurzweil (and by other Singularitarians besides him) is that the evolution of technological ‘intelligence’ will take over from biological intelligence. A new ‘epoch’ of human existence will arrive in which we transcend our current limitations and become hyper-intelligent beings. Kurzweil presents a plethora of similar graphs in his book to support this claim.
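To get a feel for why the log-log version looks so orderly, here is a minimal Python sketch. It does not use Kurzweil's data: the milestone dates are made up, and the names `milestone_times` and `loglog_slope` are my own illustrative helpers. The only assumption is that each milestone lies a fixed fraction closer to the present than the one before it.

```python
import math

def milestone_times(first=1e10, ratio=0.1, count=10):
    """Hypothetical milestones, in years before present.

    Each one is a fixed fraction `ratio` of the previous one. This is an
    assumed, made-up series, not Kurzweil's list of canonical milestones.
    """
    return [first * ratio ** k for k in range(count)]

def loglog_slope(xs, ys):
    """Least-squares slope of log10(y) plotted against log10(x)."""
    lx = [math.log10(x) for x in xs]
    ly = [math.log10(y) for y in ys]
    mean_x = sum(lx) / len(lx)
    mean_y = sum(ly) / len(ly)
    num = sum((a - mean_x) * (b - mean_y) for a, b in zip(lx, ly))
    den = sum((a - mean_x) ** 2 for a in lx)
    return num / den

times = milestone_times()
# 'Time to the next event', paired with the date of the earlier event.
gaps = [earlier - later for earlier, later in zip(times, times[1:])]
slope = loglog_slope(times[:-1], gaps)
print(f"log-log slope: {slope:.3f}")
```

Under this assumption the slope comes out at essentially 1: any series of events that keeps shrinking by a constant factor draws a straight line on a log-log plot, whatever the events happen to be. The straight line alone therefore says little about the process behind it.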

Some Problems

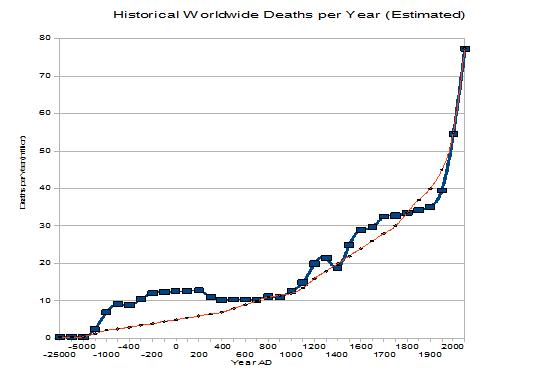

There are a few problems that I have with this line of reasoning. First, when presenting patterns like these exponential curves, one has to realise that they are all existential proofs which cannot be scientifically falsified. However many of these curves are presented, there is a chance that they all boil down to one underlying process that is driving the acceleration. To make my point, I will present one of my own below.

Of course we all know that this isn’t true, for there is another process that counters the crude death rate, namely population growth.

Graphs like these make it clear that one often needs a reference against which to compare the curve; the curve itself does not necessarily say that much.

In terms of PAC: The pattern is contextualised with another one.

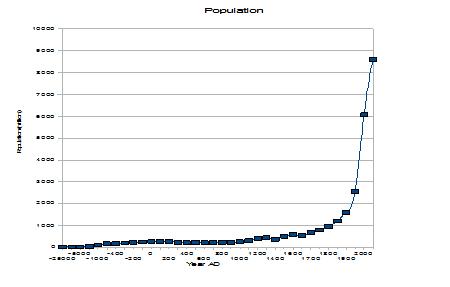

I have plotted the population growth over the last 10 000 years on the graph on the right-hand side as a log-log plot, and projected Kurzweil’s ‘events’ from the same time-scale over it to highlight the trend (the red line). Population size and ‘events’ are different quantities, so a direct comparison is not really possible. However, it is interesting to see that, roughly speaking, the trend in the events corresponds with the growth of the population.

This alternative explanation would suggest that the Singularity will not occur, as the exponential growth will level off when the world population stabilizes beyond 2050. In my previous posts I already used the same argument to explain why we need multiple perspectives (different contexts). There will probably be a lag in this development, as innovations are likely to follow the growth of certain groups in the population, such as scientists and inventors. Schooling, and better access for groups of people who currently do not have the means to participate in innovation, will probably delay this ‘cooling down’.
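The difference between the two readings can be sketched in a few lines of Python. This is only an illustration under assumed numbers: the growth rate, the starting value and the ceiling are arbitrary, and the logistic curve is my stand-in for ‘growth that follows a stabilizing population’, not a fitted model.

```python
import math

def exponential(t, start=1.0, rate=0.03):
    """Unbounded exponential growth (assumed, arbitrary parameters)."""
    return start * math.exp(rate * t)

def logistic(t, start=1.0, rate=0.03, ceiling=10.0):
    """Logistic growth: begins like the exponential, levels off at `ceiling`."""
    return ceiling / (1.0 + (ceiling / start - 1.0) * math.exp(-rate * t))

# Compare the two curves at a few points in (arbitrary) time.
for year in (0, 10, 50, 100, 200, 400):
    print(f"t={year:3d}  exponential={exponential(year):12.1f}  "
          f"logistic={logistic(year):6.2f}")
```

Early on the two curves are hard to tell apart, which is exactly the problem: data taken from the steep part of a saturating curve can look just like the start of a runaway one. Only a reference to the limiting process (here the assumed `ceiling`) separates them.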

Another problem I have with Kurzweil’s graph is the quantification of events. If I call the bouncing of an object an ‘event’ and plot a log-log graph with the number of bounces as a function of the mass of the object, then a number of experiments with objects ranging from heavy rocks to bouncing balls will undoubtedly show an exponential curve. Bouncing balls are made to…, well, bounce!

The same applies to Kurzweil’s events. If one starts with events from cosmology, moves on to biological events, then societal ones and lastly technological innovations, then one is bound to get an increase. If I were to include the ‘canonical milestones’ of an insect (say, every munch on a nice juicy leaf), the curve would already have blown off the charts millennia ago. This is a fundamental problem of isomorphism between a pattern and the phenomenon that the pattern tries to model. The isomorphism between ‘canonical milestones’ and ‘intelligence’ is highly uncertain. Therefore the correspondence between the number of transistors on a square millimeter of silicon and intelligence is just as circumstantial as, say, the food intake of an insect. These kinds of scaling tricks seem awfully close to being a form of circular reasoning.

An issue related to this is the way metaphors are used. ‘Singularity’ is a metaphor introduced by Vernor Vinge in the early Eighties to describe the point in time where the exponential curve becomes infinite. This metaphor is borrowed from the astrophysics of black holes. In itself there’s nothing wrong with this, but on Kurzweil’s website some of the discussions about the Singularity have gone past the metaphor and implicitly suggest an equivalence. It is a bit like saying “the sun is like a burning furnace” and then starting to wonder how to get it installed in your kitchen.

My last problem with Kurzweil’s graphs deals with the correspondence between the canonical milestones and ‘intelligence’. We are currently facing the problem that we really do not know what exactly ‘intelligence’ is. This word falls in the same category as ‘life’, ‘consciousness’ or ‘empowerment’, which all have a strong intuitive ring to them, but are very hard to define in exact terms. Such terms are often package deals embodied in a network of meanings and associations.

As an example, it might be more correct to consider the ‘intelligence’ of an agent as being relative to the complexity of its environment. Some definitions of rationality in (software) agents, for instance, are framed in terms of the agents’ ability to achieve certain goals in their environment. Exaggerating the consequences, this means that the more complex the environment, the more stupid the agents become!

The problem with these terms is that they are biologically, socially and ethically contextualized. Scientists can use these words for their own endeavors, provided that they are strictly defined in the contexts of their research goals. Any claim that technological innovations correspond with biological or human intelligence can never be substantiated, because these concepts are socially constructed and embodied.

This brings me to my major concern, which has little to do with Kurzweil’s singularity.

A Slippery Slope

I am an old-school techie and I have always been intimately aware of the importance of careful analysis and modest claims. This has little to do with the “scientist’s pessimism” that Kurzweil accuses the scientific community of, because even these modest claims, once proven, can often have significant consequences. A mathematical proof that a certain model behaves as expected is always an achievement. In my professional career in high-power robotics and industrial production machines, I have also witnessed that this careful attitude contributes significantly to the trust that people put in techno-science and technology.

In the current media age, more and more pop-scientists are presenting themselves to the public through popular scientific books and media performances. On the hunt for lucrative grants they commit themselves to projects that promise to solve AKPU: All Known Problems in the Universe. Many of these good people, and Kurzweil is certainly one of them, take care to stress that they are engaging in a more or less speculative vision of the future, or that they indulge in science fiction. This is important, for speculation envisions new paths of scientific progress and tickles our imagination and enthusiasm. Imagination drives novelty and marks paths of new enterprise.

However, on many an occasion it is very unclear in which context scientists make their claims. With the current state of our technology, Kurzweil’s claims that technological intelligence can be compared with human or biological intelligence cannot be justified scientifically, because they cannot be validated. Now Kurzweil is not claiming scientific correctness, but the glowing praise printed in his book does show that the media and the public are, at least partially, treating him as a scientific authority. This authority is amplified by the many physicists, mathematicians and other scientists who are engaging in this transhumanist project of the future.

More and more often, scientists are making claims about technological developments that target human existence. Singularitarian claims are still, maybe paradoxically enough, rather harmless: they will be either proven or disproven in the near future. Some other transhumanist groups, however, are claiming to cure diseases or undesired social behavior, or conversely promise to ‘empower’ people, based on current (gene or nano-) technology that as yet cannot fulfill these promises, if there is any correspondence at all between the technology they base their claims on and what they target. In their communications, the role which they assume (visionary, scientist or dreamer) is extremely vague, but implicitly they invoke their status as scientists to stress their authority on the matter. With this, they manipulate the public’s trust in ways that worry me. Trust, as an old Dutch saying goes, comes on foot and leaves on horseback. But especially when human health and well-being are concerned, betrayal of this trust can cause enormous emotional damage on the path that the fleeing rider leaves behind.
