But for academics, this need not be so. In fact, the other day I saw a funny cartoon on social media that stressed exactly that point. Academics use vacation time to catch up on important activities they were completely unable to handle until then because of the piling up of urgent matters (urgent but not important, that is). So summer is the perfect time to finish that paper that has been lingering on Overleaf, to prepare the course for the next semester, or to write a new grant application. Sadly, this is not an exaggeration: for many of us, vacations turn out to be an extension of our working weeks.

The problem, as I see it, is that we love what we do, so we just cannot detach completely from our research. Spending two weeks doing nothing is also dreadful because we live in a competitive environment, in terms of career advancement opportunities, grants, and invitations to give keynote talks or to chair important committees. All these things happen if we do more than our colleagues, or at least that is how many perceive it to work.

I have to say I am no exception to the above, but I do not consider myself a victim, nor do I think I have some form of work-related OCD. In fact, I never spend as much as half a day away from my laptop, vacations included. But I do reduce my engagement with emails and with colleagues, students, and institutions. What does not decrease is one simple activity: writing code.

At the moment I am finalizing a software program that has grown to over 4000 lines of unstructured, rather untidily written C++ code. The program is a challenge I posed to myself, as I had been advocating a rather computer-intensive use of differentiable programming to model complex systems and to perform the simultaneous co-design optimization of detectors and of the reconstruction and inference methods that extract information from their data. This is a concept I am exploring with some 40 colleagues within the MODE collaboration (https://mode-collaboration.github.io), a group I created to prove that detectors can be optimized end-to-end together with the algorithms that, at the end of the day, perform the information extraction. These ideas are neither new nor original: co-design is ubiquitous in market-driven sectors. But in fundamental science we are slow to pick up new trends and make them our own, for several reasons.

The program I have been putting together over the past few months (with a hiatus from March to May, when I visited northern Sweden for a sort of sabbatical focused on neuromorphic computing, but that is another story) models the SWGO detector array and the reconstruction of cosmic-ray showers from the particles detected on the ground by the Cherenkov tanks that make up the basic units of the array.

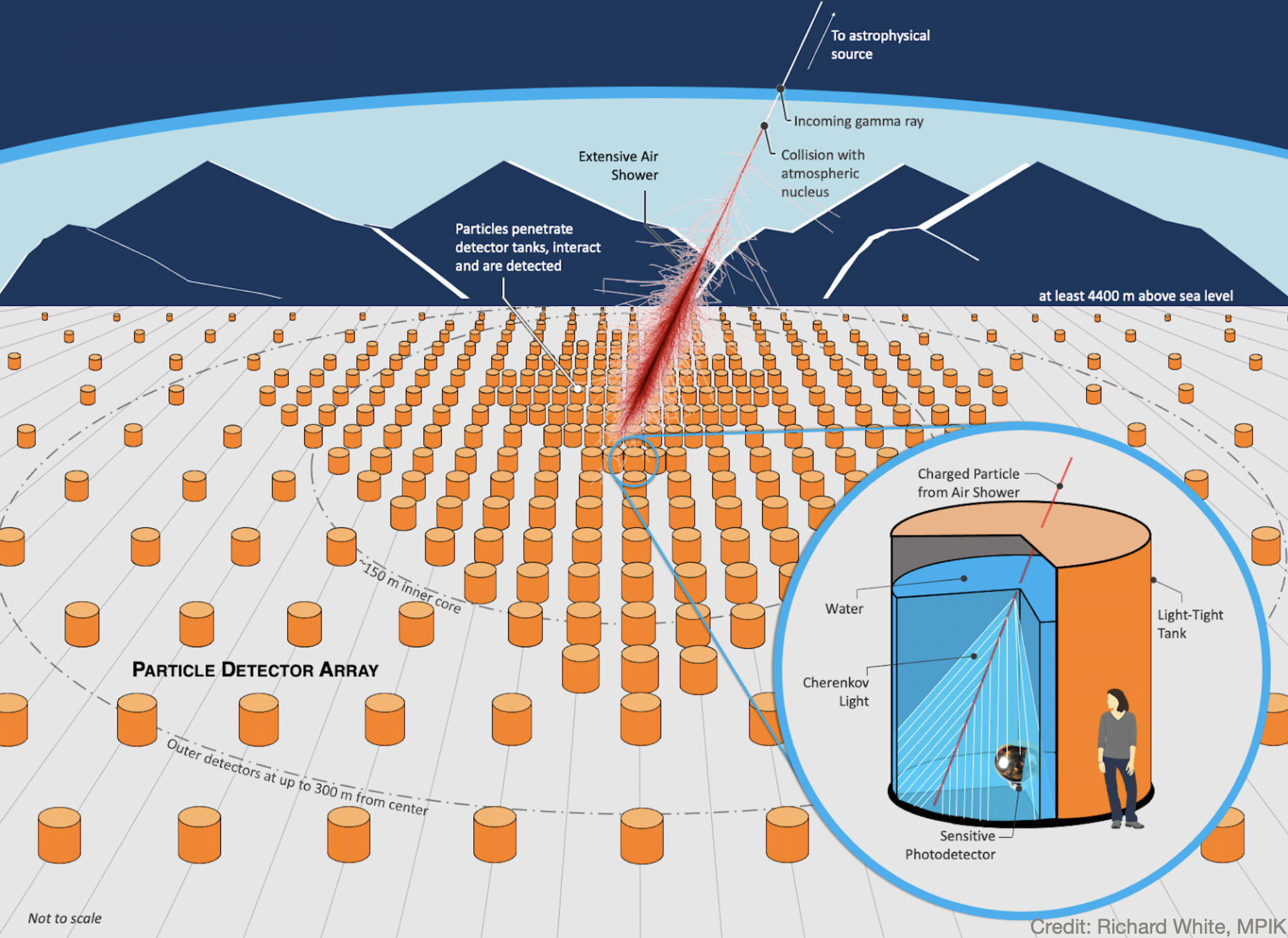

[Above, a schematic of SWGO, taken from the experiment's web site]

You should think of SWGO as a field of 6000 or so water-filled tanks, each 3 meters tall, containing photomultiplier detectors that identify electrons and photons and distinguish those particles from muons. The array occupies about 1 square kilometer of ground at high altitude (it will possibly be built in the Chilean mountains near Atacama), because at 4800 m above sea level the secondary particles generated in the showers of very energetic cosmic rays reach the ground before the atmosphere has damped them. This signal allows a precise reconstruction of the incident cosmic ray's direction and energy, which in turn opens up many possible studies and discoveries in astrophysics.

The problem of finding the optimal layout of those 6000 tanks is equivalent to finding a point in a space of 11997 dimensions (determining the x and y location of each tank). The question is less trivial than one might imagine: despite the apparent symmetries of the problem (showers produce a conical distribution of secondary particles, and they come in from all directions in the sky), there are distinct length scales (e.g., the typical spread of the particles on the ground) that condition the optimal detector placement.

The software I have produced solves the problem by brute force. It generates proton and gamma-ray showers, reconstructs them, and then determines how precisely the flux of gamma rays (the particles SWGO is most interested in measuring) can be measured, by constructing a utility function tied to the scientific value of the extractable information. The utility function can then be differentiated with respect to the position of each detector, which tells us in which direction each one should be moved to increase the utility. A gradient descent loop then converges to advantageous array layouts.

Easy to describe, right? But in fact it is the most complex computer program I have ever written, first of all because I computed all the derivatives of the relevant functions by hand, and there are hundreds of them. The chain rule of differentiation has become my best friend: I have filled literally a hundred pages with calculations. But the code does much more than that: it performs fits, computes expectation values, and simulates showers. I will soon post a detailed description of the software for my readers here.

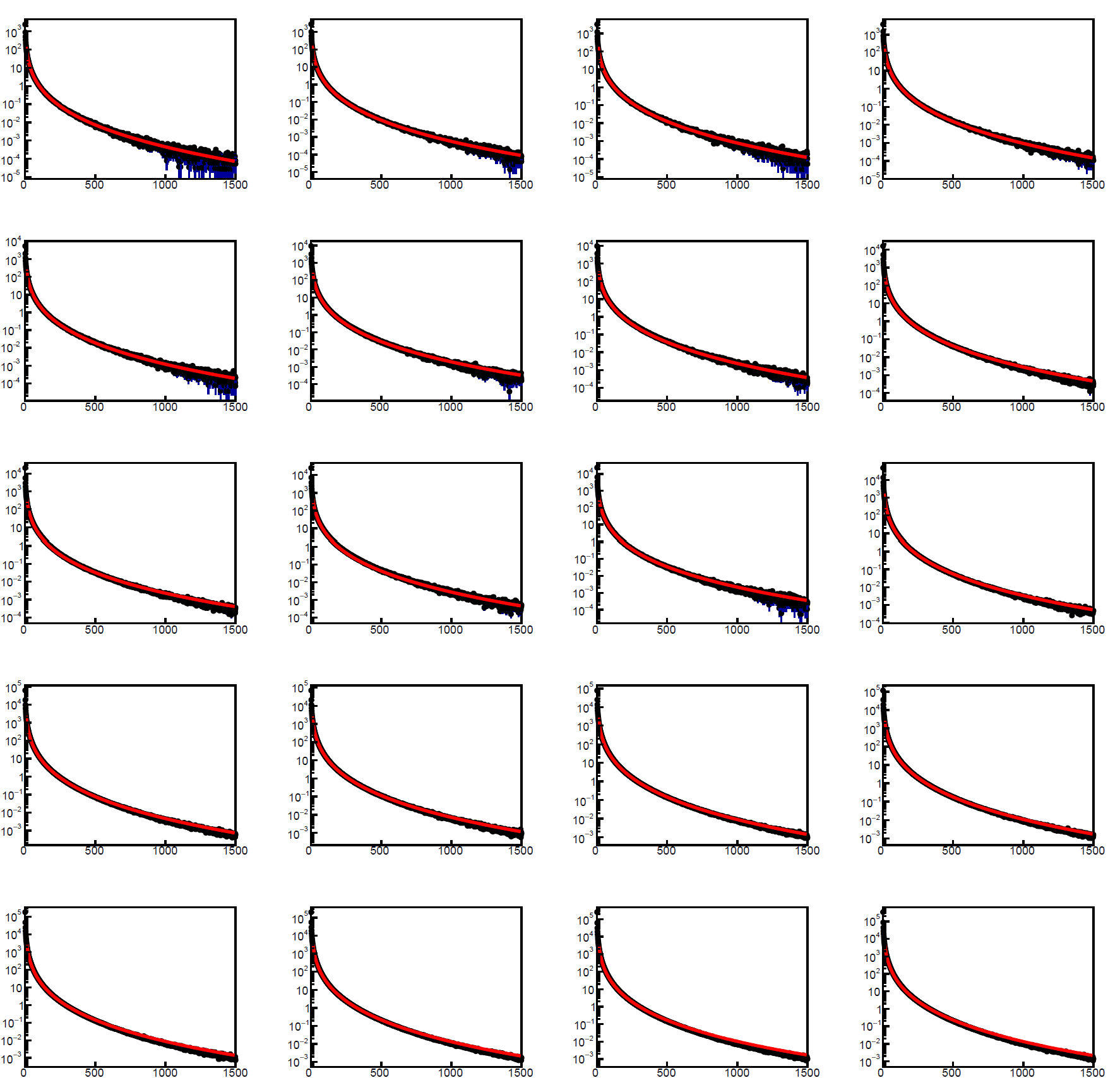

During the past three weeks I was supposed to be on vacation for the first 10 days, enjoying one of the best beaches of the southern Mediterranean, on the western coast of Crete, and then at a conference for the last 10. Technically I was there, but my mind was busy trying to solve a problem. I had been given a detailed simulation of particle showers, and I needed to extract from it a precise parametric model of the secondary particle densities on the ground, as a function of the secondary particle species and of the primary particle's species, energy, and incidence angle. It was much harder work than I had expected, and as I worked frantically to finish it before leaving for Crete, I soon realized the task would accompany me on vacation.

Rather funnily, I finally solved the parametric modeling problem _this morning_, when I could at last hit "run" on my laptop as my flight to Athens, heading back home, was taking off! Here is a sample of the distributions of secondary particles, duly fitted with parametric forms (which themselves depend on parameters fitted as functions of the energy and angles of the primary particles, so that in the end one has a completely differentiable model to play with).

Yes, my wife is a bit annoyed that I spend time coding at all hours, but in the end she understands: it is really an important component of my well-being and happiness!
