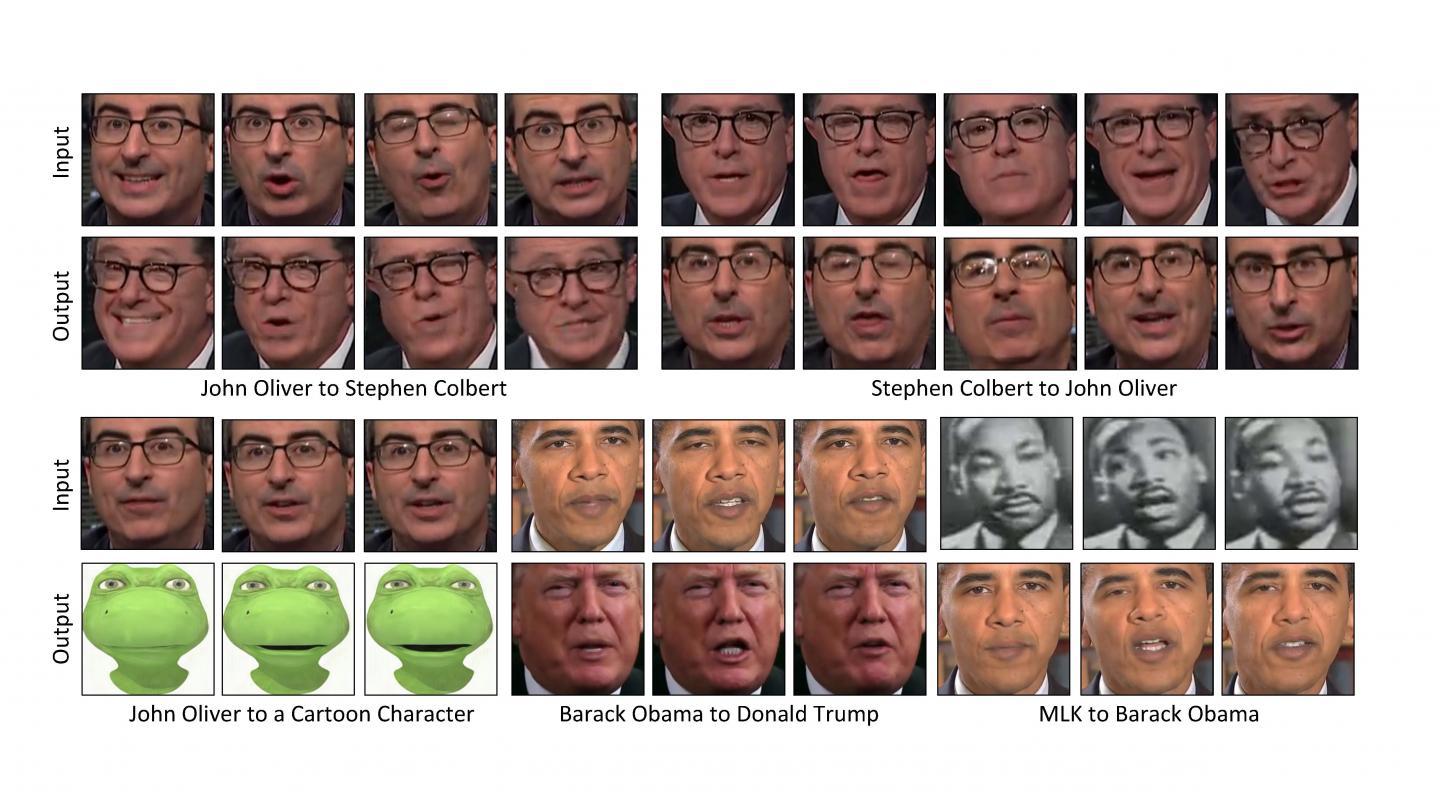

All very positive, but that is not what will happen. What will happen instead is "deep fakes." Just as John Oliver's facial expressions can be transferred onto a cartoon character or Stephen Colbert, as in the researchers' demonstration, Oliver's creative team could go beyond suggesting conspiracies by scientists and non-profits against his activist supporters and manufacture video in which scientists actually appear to conspire for corporate overlords, even though nothing of the kind happened in either case.

The technology uses a class of algorithms called generative adversarial networks (GANs). In a GAN, two models are created: a discriminator that learns to detect what is consistent with the style of one image or video, and a generator that learns how to create images or videos that match a certain style. When the two work competitively -- the generator trying to trick the discriminator and the discriminator scoring the effectiveness of the generator -- the system eventually learns how content can be transformed into a certain style.
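To make the tug-of-war concrete, here is a minimal sketch of the two competing objectives. It assumes the textbook cross-entropy form of the GAN losses, which the article does not specify, and the function names and sample scores are hypothetical. The discriminator's outputs are treated as plain probabilities that a sample is real.

```python
import math

EPS = 1e-12  # guards against log(0)

def discriminator_loss(d_real, d_fake):
    """Cross-entropy: real samples should score near 1, generated ones near 0."""
    real_term = -sum(math.log(p + EPS) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p + EPS) for p in d_fake) / len(d_fake)
    return real_term + fake_term

def generator_loss(d_fake):
    """The generator is rewarded when the discriminator scores its output as real."""
    return -sum(math.log(p + EPS) for p in d_fake) / len(d_fake)

# Hypothetical discriminator scores for generated samples.
early_generator = generator_loss([0.1, 0.2])   # fakes are easily spotted
later_generator = generator_loss([0.8, 0.9])   # fakes mostly fool the discriminator

sharp_discriminator = discriminator_loss([0.9, 0.9], [0.1, 0.1])
confused_discriminator = discriminator_loss([0.5, 0.5], [0.5, 0.5])
```

The generator's loss falls as its fakes score higher, while the discriminator's loss falls as it separates real from fake more cleanly. In training the two losses are minimized in alternation, which is the competition described above.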

Credit: Carnegie Mellon University

A variant, called cycle-GAN, completes the loop, much like translating English speech into Spanish and then the Spanish back into English and then evaluating whether the twice-translated speech still makes sense. Using cycle-GAN to analyze the spatial characteristics of images has proven effective in transforming one image into the style of another.
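The round trip can be illustrated with a toy sketch. Here a "frame" is just a short list of numbers and the two translators are hand-written functions rather than learned networks. All names are hypothetical, and the L1 distance is an assumed choice of error measure.

```python
def l1_distance(a, b):
    """Mean absolute difference between two equally sized frames."""
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)

def cycle_loss(frame, forward, backward):
    """Translate to the other style, translate back, and compare with the start."""
    return l1_distance(backward(forward(frame)), frame)

# Stand-ins for the two learned translators (hypothetical).
def to_style_y(frame):
    return [2.0 * v + 1.0 for v in frame]

def back_to_x_good(frame):
    return [(v - 1.0) / 2.0 for v in frame]   # exactly undoes to_style_y

def back_to_x_bad(frame):
    return [v / 2.0 for v in frame]           # forgets the +1 offset

frame = [0.0, 0.5, 1.0]
good_cycle = cycle_loss(frame, to_style_y, back_to_x_good)
bad_cycle = cycle_loss(frame, to_style_y, back_to_x_bad)
```

A pair of translators that survives the round trip scores zero, while a pair that loses information along the way is penalized. That penalty plays the role of checking whether the twice-translated speech still makes sense.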

That spatial method still leaves something to be desired for video, with unwanted artifacts and imperfections cropping up in the full cycle of translations. To mitigate the problem, the researchers developed a technique, called Recycle-GAN, that incorporates not only spatial, but temporal information. This additional information, accounting for changes over time, further constrains the process and produces better results.
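One way to picture the temporal constraint is the sketch below. It is an illustration of the general idea only, not the researchers' implementation: frames are single numbers, the translators are hand-written, and the temporal predictor is assumed to be simple linear extrapolation from the two previous frames. All names are hypothetical.

```python
def predict_next(past_frames):
    """Assumed temporal predictor: linear extrapolation from the last two frames."""
    return 2.0 * past_frames[-1] - past_frames[-2]

def recycle_loss(video_x, to_y, to_x, predictor):
    """Translate past frames to style Y, predict the next Y frame,
    translate that prediction back, and compare with the true next frame."""
    total = 0.0
    count = 0
    for t in range(2, len(video_x)):
        past_y = [to_y(f) for f in video_x[t - 2:t]]
        predicted_y = predictor(past_y)
        reconstructed_x = to_x(predicted_y)
        total += (reconstructed_x - video_x[t]) ** 2
        count += 1
    return total / count

# Stand-ins for the learned translators (hypothetical).
def to_style_y(frame):
    return 2.0 * frame + 1.0

def back_to_x_good(frame):
    return (frame - 1.0) / 2.0

def back_to_x_bad(frame):
    return frame / 2.0

video = [0.0, 1.0, 2.0, 3.0]  # a toy clip with steady motion
consistent = recycle_loss(video, to_style_y, back_to_x_good, predict_next)
inconsistent = recycle_loss(video, to_style_y, back_to_x_bad, predict_next)
```

Because the comparison is made against the next frame rather than the current one, the translators are scored on whether the motion survives the trip, not only on whether each still image does.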

The researchers showed that Recycle-GAN can be used to transform video of Oliver into what appears to be fellow comedian Stephen Colbert and back into Oliver, or to transform video of Oliver's face into a cartoon character. Recycle-GAN allows not only facial expressions to be copied, but also the movements and cadence of the performance.

The method was presented today at ECCV 2018, the European Conference on Computer Vision, in Munich.
