In the recent past, whenever I had the occasion to be listened to by detector developers, I have been preaching the importance of considering the rapid evolution of AI and the potential it may have for extracting information from the basic physics processes we wish to be sensitive to. In a field such as high-energy particle physics, where the delay between conceiving an instrument and collecting data with it can be 10 or 20 years, this rapid evolution means we should be careful not to design instruments based solely on today's pattern recognition and data reduction capabilities, lest our children operate sub-optimal experiments in the future.

The above ideas were among the reasons why I founded the MODE collaboration, leveraging my connections with a few leading experts in particle physics and computer science. MODE comprises researchers from 20 institutions on three continents, and fills a small niche of advanced studies which are for the time being mostly ignored by the big developers of particle detection instruments. Not to worry - these changes of perspective require time.

All the while, we are starting to demonstrate the power of deep learning and differentiable programming in the full optimization of the complex experiments we want to build. Our approach is to start with small and mid-scale apparatus, where the time from coding to solutions can be relatively short; the idea is that by producing solutions to easy use cases we are building the expertise and a library of tools that will hopefully one day allow us to tackle bigger challenges, such as the full optimization of the design of a collider detector.

Calorimeters of the past and of the future

However, today I wish to discuss something related but more specific. The project I have been toying with, and which I am trying to get in motion, involves granular calorimeters. Calorimeters are instruments where energetic particles interact strongly or electromagnetically with dense matter, progressively losing their energy in a way that allows that energy to be measured. Typically, one relies on the multiplication effect due to the progressive production of more and more secondary particles as an energetic primary undergoes more and more collisions. The proportionality between the number of secondaries and the incident energy is exploited to infer the latter from the former.
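The proportionality mentioned above can be illustrated with the textbook Heitler toy model of a cascade, in which every particle splits in two and shares its energy equally until the energy per particle would drop below a critical value. The sketch below is only that simplified picture: the function name and the critical energy value are assumptions made for illustration, and real showers (hadronic ones in particular) fluctuate strongly and are studied with dedicated simulation tools.

```python
def heitler_shower(e0_mev, critical_energy_mev):
    """Toy Heitler cascade: every particle splits in two, sharing its energy
    equally, until the energy per particle would fall below the critical energy.

    Returns (number_of_generations, number_of_particles_at_shower_maximum).
    """
    generations = 0
    n_particles = 1
    energy_per_particle = e0_mev
    while energy_per_particle / 2.0 >= critical_energy_mev:
        energy_per_particle /= 2.0
        n_particles *= 2
        generations += 1
    return generations, n_particles


if __name__ == "__main__":
    CRITICAL_ENERGY_MEV = 10.0  # hypothetical value, for illustration only
    for e0 in (1_280.0, 2_560.0, 5_120.0):
        gens, n = heitler_shower(e0, CRITICAL_ENERGY_MEV)
        print(f"E0 = {e0:7.0f} MeV -> {gens} generations, {n} particles")
```

In this toy, doubling the incident energy doubles the number of particles at shower maximum, which is the proportionality a calorimeter exploits.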

Calorimeters have been built for decades with more or less the same construction principles - layers of dense matter alternating with layers of detection elements, or homogeneous dense matter that both produces the interactions and records them. The segmentation of the detection elements was only considered important for specific tasks - such as discriminating the energy deposits of two nearby photons emitted by the decay of the Higgs boson, which led CMS to build its wondrous electromagnetic calorimeter with 80,000 3x3 cm^2 sized lead tungstate cells. Otherwise, the focus for particle colliders was more on a high precision of the total energy measurement than on the detailed inner structure of the showers.

The above paradigm was overturned almost overnight when in the early 2000s it was shown that there was important information waiting to be mined in the fine-grained structure of the hadronic showers that take place when an energetic quark is kicked out of its parent proton, and produces a narrow stream of particles that collectively impinge on the calorimeter. The sub-structure of this hadron jet was shown to allow the extraction of a rather clean signal from the decay to pairs or triplets of "sub-jets" of heavy particles such as W and Z bosons, top quarks, and Higgs bosons - the four most massive elementary particles we know of.

A second reason to be interested in high granularity of calorimeters came to the fore almost simultaneously with the first, when the "particle flow" jet reconstruction technique saved CMS from trailing ATLAS in overall performance. CMS had invested a lot in its tracking and electromagnetic calorimeter, but its hadron calorimeter had strong budget constraints and ended up being sub-optimal with respect to what ATLAS had. What saved the day was the development of a software technique that combined the points of strength of CMS (its large magnetic field and B-field integral, if you want me to be specific, combined with its excellent tracking resolution) to help the inference from hadron calorimeter signals. Particle flow is today a compelling method of exploiting high-granularity information, and it clearly indicates the way forward in the development of calorimeters of the future for large HEP endeavours.

What high granularity calorimeters may offer in the future

I am convinced that there is more information to extract from highly segmented calorimeters than what we have so far been able to mine. Protons, kaons, and pions have different properties, and when they hit the nuclei of dense materials they exhibit those properties in the rate of the collisions (higher for protons, which are larger than pions and kaons) and, in a probabilistic sense, in the secondary particles that are generated: e.g., kaons contain strange quarks, and this property is conserved in the interaction. The question is whether these different physical properties can inform a deep learning algorithm capable of reading them out from the messy, highly stochastic environment, provided that sufficiently fine-grained information on the energy deposition of all involved particles - incident primary, nuclear remnants, delayed response from the latter, number and behaviour of secondaries - is extractable with suitable electronics.

Now that you sort of know what the general ideas and underpinnings of this field of studies are, I can offer you a peek into the preliminary text I submitted yesterday. I will spare you a description of the general initiative this is aimed at. Let us just say that if the reviewers of this proposal find it interesting, it will become a more full-fledged document complete with budget plan and all, and it may then receive support to help my team bring answers to the questions we have laid on the table.

Of course, putting this text online is a rather unconventional move - intellectual property issues, exploitation of ideas, and similar concepts typically advise researchers to keep such texts confidential, at least until a response from the funding bodies is received. On the other hand, I am in favour of open science and I want to be coherent: if anybody who is interested in these topics and finds them worth researching wants to exploit the ideas below, they are welcome - but I would advise them to contact me and team up.

Two other factors allow me to share this document: it is (almost) entirely of my making; and the proposal itself is not a formal one, in the sense that it is only a preliminary step toward a more concrete, formal process of funding application. In any case, I will remark here that I do hope I am not annoying anybody by putting the text online; and if that happens I apologize in advance, but point out that I mean well: progress in fundamental science, I am convinced, requires us to work together and be driven by common interests rather than private benefits. This is also very much along the lines of the kind of open science across borders that the USERN organization supports. Being the President of USERN, I have to be consistent with that!

The proposal: "FULL EXPLOITATION OF INFORMATION CONTENT IN HIGHLY GRANULAR CALORIMETERS"

T. Dorigo, March 31 2023

Background

High granularity in calorimeters offers a significant increase in the performance of the information extraction procedures from particle interactions with dense media. At particle colliders its benefits stem from a two-pronged revolution that took place at the start of this century. The first prong was brought about by boosted jet tagging, when it was recognized that the signal of hadronically-decaying heavy particles (W, Z, H, top, and others hypothesized in new physics models) could be successfully extracted from backgrounds if sub-jets could be identified within wide jet cones. The second prong came from the success of particle flow techniques, which were instrumental in, e.g., improving the energy resolution of hadronic jets in CMS beyond the non-state-of-the-art baseline performance of its hadron calorimeter. Both boosted jet tagging and particle flow reconstruction rely on accessing fine-grained information on the structure of hadron showers.

Other observations of the benefit of high granularity for future HEP developments include a recent demonstration [1] that fine-grained hadron calorimeters allow the measurement of the energy of multi-TeV muons from the pattern of radiative deposits (to a 20% relative resolution that does not degrade with muon energy), making this technique an obvious substitute for magnetic bending, which becomes impractical above a few TeV.

Jointly with, and independently from, the open hardware question of how far granularity can be pushed with existing or future available technologies, there remains an open question of how useful it would be, from an information extraction standpoint, to arbitrarily increase it. In principle fine granularity calorimeters offer more than what we currently exploit them for. The nuclear interactions that a proton, a pion, or a kaon undergo differ in cross section as well as in outcome, and this corresponds to information which until today we have never even attempted to extract. Deep learning (DL) algorithms may today allow it, if only in probabilistic terms that are still going to be highly useful for particle flow reconstruction and artificial intelligence (AI)-based pattern recognition. But can it be done?

1. Particle ID from nuclear interactions

One of the goals of this project is to answer the above-mentioned broad, far-reaching question, by providing specific quantification of the following:

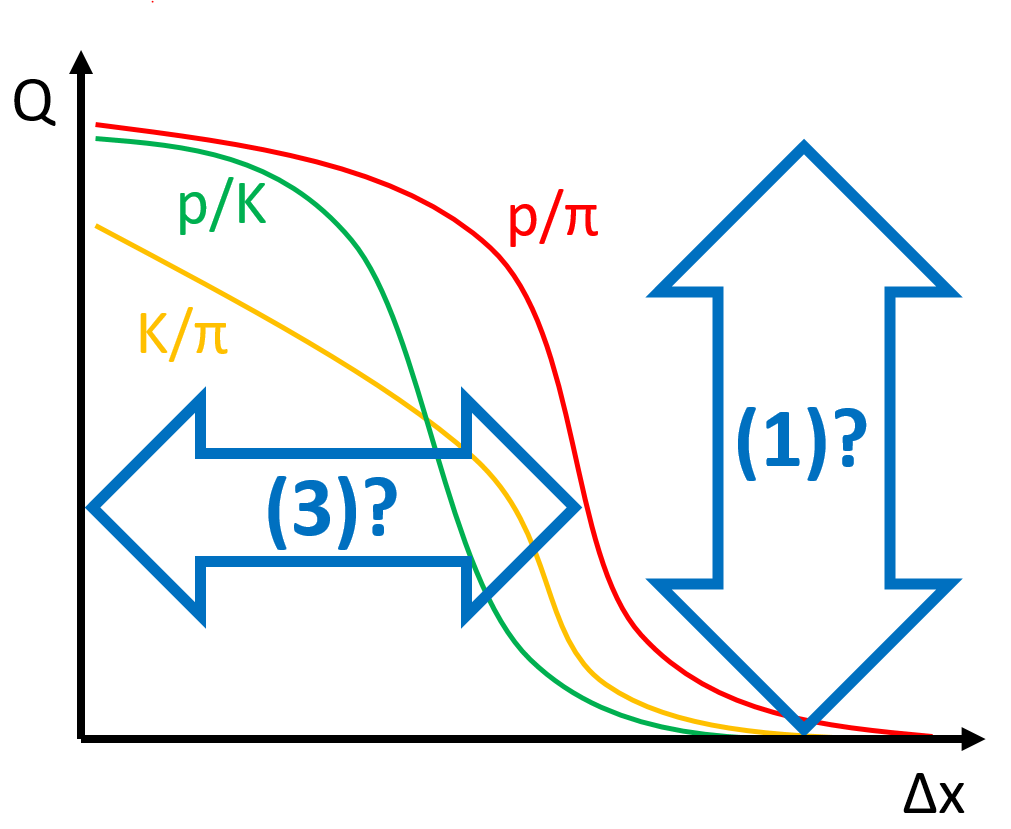

(1) What are the ultimate particle identification capabilities of an arbitrarily granular hadron calorimeter? In more quantitative terms, what is the highest achievable Bayes factor Q of hypothesis testing for discriminating a proton from a charged kaon or pion, assuming no limit on the smallness dx of individually read out cells?

(2) By how much would that information improve the performance of hadronic jet reconstruction in specific benchmarks of interest (e.g., H->bb) for a future FCC hadron collider or a muon collider?

(3) How does the above particle ID capability (in terms of Q) degrade when the cell size dx is increased, and for what cell size does it eventually get lost, in conceivable setups?

(4) How much additional information gain is available by exploiting timing information in this high-granularity setup, and what are the potential performances on strange-quark tagging in a future collider by combining the time-of-flight discrimination with the spatial one?

If we find out that (1) corresponds to valuable information (see Fig. 1 below), and that (2) corresponds to a significant gain for future instruments, the answer to (3) becomes the gauge with which to measure whether our technology can be exploited for the task, and (4) is then to be studied as the necessary complement, given recent developments in ultra-high time resolution.

The studies needed to satisfactorily address the above questions require, in a first phase, the deployment of large DL models trained and tested on large simulated datasets; and the prototyping and test-beam operation of a small-scale demonstrator if the simulations demonstrate potential for hadron ID separation for cell sizes that are - or that may become in the near future - technologically feasible.

Figure 1: Hypothetical discrimination power (measured by a quality factor Q) of hadron species as a function of cell size dx. See the text for detail.
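To make the dependence of a discrimination measure on the cell size dx concrete, here is a deliberately crude toy, not the study proposed above. It assumes that the only observable is the cell in which the first nuclear interaction occurs, that this depth is exponentially distributed, and that two hadron species differ only in their interaction length. The two interaction lengths, the calorimeter depth, and all function names are assumptions chosen for illustration. As a proxy for Q the sketch uses the expected log-likelihood ratio per particle (for two simple hypotheses the likelihood ratio coincides with the Bayes factor).

```python
import math


def binned_first_interaction_pmf(lam_cm, depth_cm, dx_cm):
    """Probability that the first interaction falls in each cell of size dx_cm,
    plus a last entry for 'no interaction within depth_cm' (punch-through)."""
    n_cells = int(round(depth_cm / dx_cm))
    edges = [i * dx_cm for i in range(n_cells + 1)]
    pmf = [math.exp(-lo / lam_cm) - math.exp(-hi / lam_cm)
           for lo, hi in zip(edges[:-1], edges[1:])]
    pmf.append(math.exp(-depth_cm / lam_cm))
    return pmf


def expected_log_lr(lam_true_cm, lam_alt_cm, depth_cm, dx_cm):
    """Expected log-likelihood ratio (in nats) per particle, i.e. the
    Kullback-Leibler divergence between the two binned distributions."""
    p = binned_first_interaction_pmf(lam_true_cm, depth_cm, dx_cm)
    q = binned_first_interaction_pmf(lam_alt_cm, depth_cm, dx_cm)
    return sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0.0)


if __name__ == "__main__":
    # Hypothetical numbers, for illustration only.
    LAMBDA_A_CM, LAMBDA_B_CM, DEPTH_CM = 17.0, 20.0, 160.0
    for dx in (1.0, 2.0, 4.0, 8.0, 16.0, 32.0, 160.0):
        value = expected_log_lr(LAMBDA_A_CM, LAMBDA_B_CM, DEPTH_CM, dx)
        print(f"dx = {dx:6.1f} cm -> expected log-LR = {value:.6f} nats")
```

Because each coarser segmentation here is obtained by merging finer cells, the expected log-likelihood ratio can only decrease as dx grows. A realistic answer would of course depend on the full shower pattern rather than on a single depth, which is what the DL studies are meant to quantify.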

2. Integration of neuromorphic computing

In parallel to the above investigation, we wish to investigate the possibility of integrating edge computing elements based on neuromorphic processors in the readout of the device. Neuromorphic computing (NC) is a new computing paradigm that exploits brain-inspired time-encoded signal processing to allow for highly energy-efficient, highly parallelizable, and de-centralized information processing and machine learning on the edge. While NC typically targets applications where extremely low power consumption and computing at the data-generating end are required, we speculate that the potential of localized preprocessing and extraction of information within the core of a highly granular calorimeter could be quite significant. A calorimeter made of millions of individual cells, whose complete readout poses significant challenges, may strongly benefit from a localized, ultra-fast, and ultra-low-power pre-processing of the information at the highest spatial resolution, before higher-level primitives are transferred to the back-end for reconstruction. In addition, the possible timing information that new generation detectors are starting to enable would find a perfect match with the time-encoded specific capabilities of time-constant-modified NC hardware processors based on, e.g., state-of-the-art CMOS, nanowire memristor networks, and photonic and opto-electronic technologies [2].
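As a minimal illustration of what "time-encoded" processing means, the sketch below implements a textbook leaky integrate-and-fire neuron driven by a constant input, integrated with a simple Euler step. A larger input (think of a larger signal in a cell) makes the neuron fire earlier, so the information is carried by the spike time. This is an assumption-laden toy: the function name, the time constant, the threshold, and the units are hypothetical and do not describe any of the hardware technologies cited above.

```python
def lif_first_spike_time(input_level, tau_ms=10.0, threshold=1.0,
                         dt_ms=0.1, t_max_ms=100.0):
    """Leaky integrate-and-fire neuron with constant input.

    Integrates dv/dt = (input_level - v) / tau with Euler steps and returns
    the time (in ms) of the first threshold crossing, or None if the neuron
    does not fire within t_max_ms.
    """
    v = 0.0
    n_steps = int(round(t_max_ms / dt_ms))
    for step in range(1, n_steps + 1):
        v += dt_ms * (input_level - v) / tau_ms
        if v >= threshold:
            return step * dt_ms
    return None


if __name__ == "__main__":
    for level in (0.5, 1.5, 2.0, 3.0):  # arbitrary units, hypothetical values
        print(f"input {level:.1f} -> first spike at {lif_first_spike_time(level)} ms")
```

Inputs below threshold never produce a spike, which is one way such a scheme can suppress small signals locally before anything is read out.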

The work plan in this case involves:

(1) Demonstration of the unsupervised learning of specific patterns in macro-cells, through in-situ neuromorphic processing of full-granularity information, emulated on digital processors;

(2) Study of possible designs and hardware implementations that incorporate that functionality, and their effectiveness in terms of latency, information extraction, power consumption, and heat generation with respect to ordinary digital computing solutions;

(3) (Long-term, after the end of this project) prototyping, if the solutions developed in (1) and (2) prove effective.

In this case, the lack of an existing hardware solution to the problem of increasing the speed of neuromorphic computing, which in typical non-HEP applications is not its crucial element, demands a wide-ranging parallel study of photonic and opto-electronic solutions for the emulation of neuronal decoding of signals which are natively encoded as light pulses in conceivable homogeneous calorimeter elements [3].

3. Hybridization of tracking and calorimetry

A long-standing paradigm in detector design for HEP can be summarized as "track first, destroy later". Without exception, particle tracking has relied on low material density to avoid as much as possible the degrading effect of nuclear interactions; and conversely, calorimetry has exploited dense materials for efficient energy conversion in limited volumes. However, the advent of DL questions the validity of that paradigm, as today's neural networks can make sense of the complex patterns resulting from nuclear interactions. It therefore appears highly desirable to investigate the possibility of trading off some of the undeniable benefits of light-weight tracking (in terms of resolution and low background) for a better reconstruction of the identity and development of hadronic jets.

The above-mentioned hypothesized possibility of discriminating the identity of different particles based on their behavior in traversing matter invites a study of the performance gains and losses of a combination of a state-of-the-art tracker followed by a fine-grained calorimeter, when the densities of the former and the latter do not abruptly change at their interface, but rather vary with continuity from the first to the second. Beyond the possibility of particle identification, a hybridization of tracking and calorimetry brings in a natural impedance matching with the state of the art of particle flow reconstruction, in the sense that it potentially provides the algorithms with a larger and more coherent amount of information about the behavior of individual particles and their interaction history within showers.

The study of the above subject is again reliant on the deployment of highly specialized DL models, and in fact it requires extrapolating the capabilities of these algorithms to the time when such a detector could become operational. It could be articulated as follows:

(1) Study ultimate performances, on specific high-level benchmarks (e.g., precision of the extraction of a H->bb signal in specific FCC or muon collider setups), of an idealized state-of-the-art tracker plus calorimeter (e.g. starting with an existing design, such as the CMS central detector), with a developed DL reconstruction.

(2) Consider increasingly hybrid scenarios when the outermost layers of the tracker are progressively embedded in the calorimeter, gauging the performance on low-level primitives (single-particle momentum resolution, fake rates) and high-level objectives.

Eventually, such a study should inform the one described in Sec. 1 above, to converge on a design of a future instrument capable of optimally exploiting the enhanced information extraction potential.

4. End-to-end optimization

The studies included in the above project will inform a full modeling by differentiable programming of the whole chain of procedures, from data collection to inference extraction, that allows one to directly connect the final utility function of an experiment with its design layout and technology choices, such that navigating the pipeline by stochastic gradient descent may allow a full realignment of design goals and implementation details. The MODE Collaboration has undertaken studies [4] and activities in this direction under the auspices of JENAA, being one of its Expressions of Interest. Demonstrations of the paradigm-changing benefit of such studies are underway on limited-complexity use cases (muon tomography, ground-based arrays for cosmic showers, etc.).
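The idea of connecting a utility function to a design parameter through gradients can be sketched on a one-parameter toy. The example below is not the MODE pipeline: it assumes a made-up loss for a sampling calorimeter with a fixed number of layers, in which a resolution penalty grows with the square root of the absorber thickness while a leakage penalty falls exponentially with the total depth. All constants and function names are hypothetical, the derivative is written by hand where a real pipeline would rely on automatic differentiation, and plain (not stochastic) gradient descent is used.

```python
import math

# All constants are hypothetical, chosen only so that the toy has a minimum.
STOCHASTIC_COEFF = 0.1        # penalty growing as sqrt(layer thickness)
LEAKAGE_COEFF = 1.0           # penalty for energy escaping from the back
N_LAYERS = 20                 # fixed number of absorber layers
INTERACTION_LENGTH_CM = 17.0  # assumed absorber interaction length


def loss(thickness_cm):
    """Toy utility to minimize as a function of the absorber thickness."""
    sampling = STOCHASTIC_COEFF * math.sqrt(thickness_cm)
    leakage = LEAKAGE_COEFF * math.exp(
        -N_LAYERS * thickness_cm / INTERACTION_LENGTH_CM)
    return sampling + leakage


def loss_gradient(thickness_cm):
    """Hand-written derivative of loss() with respect to the thickness."""
    d_sampling = STOCHASTIC_COEFF / (2.0 * math.sqrt(thickness_cm))
    d_leakage = -(LEAKAGE_COEFF * N_LAYERS / INTERACTION_LENGTH_CM) * math.exp(
        -N_LAYERS * thickness_cm / INTERACTION_LENGTH_CM)
    return d_sampling + d_leakage


def optimize_thickness(start_cm=1.0, learning_rate=2.0, n_steps=500):
    """Plain gradient descent on the single design parameter."""
    t = start_cm
    for _ in range(n_steps):
        # Keep the thickness positive so that sqrt() stays defined.
        t = max(t - learning_rate * loss_gradient(t), 1e-6)
    return t


if __name__ == "__main__":
    best = optimize_thickness()
    print(f"thickness = {best:.3f} cm, loss = {loss(best):.4f}")
```

In a realistic case the loss would be the output of a differentiable model of the whole chain, and the single thickness would be replaced by many geometry and technology parameters.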

5. Summary

The lines of investigation outlined in Secs. 1, 2, 3, and 4 above are no doubt easy to classify as far-fetched. However, in the interest of making an earnest effort to exploit the potential of today's and tomorrow's AI in the design of our instruments, we believe they should be viewed as a necessary investment, well in line with the long-term plans of the EUSUPP.

[1] See J. Kieseler et al., EPJC 82, 79 (2022), https://doi.org/10.1140/epjc/s10052-022-09993-5.

[2] See https://iopscience.iop.org/article/10.1088/2634-4386/ac4a83.

[3] See https://www.nature.com/articles/s41566-020-00754-y.

[4] See the white paper in T. Dorigo et al., arXiv:2203.13818, https://doi.org/10.48550/arXiv.2203.13818.

OBJECTIVES

O1 Demonstrate ultimate information content of hadronic showers initiated by different hadrons, proving their a-priori potential discrimination in highly-segmented detectors.

O2 Quantify gain that information extractable from calorimeter-based particle identification within showers produces in high-level objectives at future facilities: hadronic decays of Higgs bosons at FCC and muon collider; energy resolution for hadronic jets at multi-TeV energies.

O3 Determine potential use and benefits of neuromorphic computing in local processing of hit information, and deploy models of information processing and extraction in those scenarios.

O4 Prove AI potential in information extraction from hybridized tracking+calorimetry, quantifying gains and potential shortcomings of varying-density setups.

O5 Develop end-to-end models for the full optimization of design of a combination of tracker and calorimeter for a benchmark collider scenario.

DELIVERABLES

D1 Article describing hadron separation and curves of ultimate proton/pion, proton/kaon, kaon/pion discrimination power as a function of calorimeter cell size (see Fig. 1) (Month 24).

D2 Article discussing feasibility of in-situ neuromorphic processing of signals in fine-grained calorimeter, and presenting assessed resulting efficiency in extracting high-level information without information loss (in terms of particle ID, energy resolution, timing) (Month 36).

MILESTONES

M1 Demonstrate hadron identification in fine-grained calorimeter (Month 18).

M2 Demonstrate unsupervised identification and neuronal selectivity for localized nuclear interaction identification and reconstruction in fine-grained calorimeter, via neuromorphic processing of cell-level signals (Month 30).
