Nanotechnology keeps promising that it will be centrally important and powerful in the near future. That near future is always “just around the corner,” much like the breakthrough in fundamental physics: if we just had one more super particle collider, you know, just this last one again. We may dismiss such hype, but rejecting it wholesale overlooks that nanotechnology poses globally existential risks that are unknowable yet also unavoidable, like those of no other technology.

We cannot discuss unintended consequences in detail here; there are many ‘unknowable unknowns’, such as the long-term side effects of waste water treatment nanoparticles, which promote the transfer of multidrug-resistance genes up to 200-fold,[2] thus speeding up the evolution of ‘super-bugs’. Nanotechnology is a volatile substrate for unpredictable evolution that accelerates and potentially leaves humans behind; self-assembly of nano-structures is intensely pursued.

A problem more relevant here is that, in spite of the dangers, nanotechnology has little external oversight and an often unscientific culture that is hostile to criticism. It effectively lacks important regulatory mechanisms such as proper peer review and reproducibility checks.

It lacks awareness of statistical methods such as error calculation, which are central in fields such as medicine or sociology that impact humans directly, as nano-science promises to do. Such accusations need to be backed up, which is made very difficult today. In connection with the flawed detection of gravitational waves by Joseph Weber in the late 1960s, Collins wrote:[3]

“In physics, the literature is sufficiently open to allow some papers that have no credibility with the mainstream to be published. This normally causes no problem within ‘core-groups’ of scientists, because the orthodox interpretation is widely understood. There can still be trouble, however, from those who have not been socialized into the core-group’s interpretative framework.”

Collins’ analysis is aware that the “orthodox interpretation” is not necessarily true, but it is still a naïve and optimistic analysis based on a romantic ‘core-group’ concept (= “scientists actively working in the respective field”). Outside of these groups, there are ‘scientifically literate commentators’, and further out still are policy makers and funding agencies. All papers that have passed peer review look more or less equal; policy makers pick according to their agenda. Those outside, according to Collins, cannot recognize the faulty science. This assumes that those inside can!

Collins’ analysis does not reflect the reality of self-interested peer review today, and the core-group concept is misleading. The pressures of short-term survival (temporary positions), funding, and so on have displaced identification with shared scientific interpretations. Science is business, especially in nanotechnology.

One difference is the size of projects. Gravitational wave detectors are ‘Big Science’. The Laser Interferometer Gravitational-Wave Observatory (LIGO) has two separate, four kilometer long arms, and needs collaborations of scientists and non-scientific support staff. Collins’ “trouble” may threaten a core-group’s interest: Why fund such expensive projects if competitors offer cheaper means? Weber’s resonance bars came for free compared with LIGO, whose initial costs exceeded $300 million.

In nanotechnology, there are no large and expensive projects such as the Large Hadron Collider (LHC) in particle physics. The tacitly agreed upon consensus is that nanotechnology as such should get as much funding as possible. Flawed science being involved hardly matters as long as the numerous small projects go on and produce many small results that ensure further publications and funding.

There are no big questions comparable to gravitational waves that bend space-time; no core-group with a ‘socialized interpretative framework’ beyond business alliances. Any single researcher usually has numerous projects whose funding must be ensured via the overall output of the respective laboratory. These aims are best served with good news all around. Ethical or environmental concerns or internal fights in the community are bad news that endangers public enthusiasm and funding. Critical papers are extremely difficult to publish, while the publish-or-perish culture subjects us to unprecedented pressure to publish fast and plenty.

Compared with other exact sciences, reproducibility in nanotechnology is poor. Each particular result is of little importance. If something cannot be reproduced, it is more economical to explore something different. It may be that one’s own lab is unable to reproduce a result for odd reasons. A student may not work an apparatus in the same wrong way as the student involved in the original work.

Reproduction at times happens inherently when new results are based on previous ones, but often this is merely “error propagation.” In any case, irreproducible results are seldom discredited. Too many novel materials cannot be synthesized with the published methods. In high energy particle physics, reproduction of discoveries, such as that of the top quark, is important. In nanotechnology, reproduction is dangerous in light of publish-or-perish. Articles are rejected for not reporting novelties. Publishing negative results is impossible.

Criticism is also basically impossible. Every sub-field is so specialized that the peer reviewers will most likely include scientists affiliated with the original researcher’s claim. All you would effectively be able to prove is your failure to reproduce a result. You will not have published enough, and you will have made sworn enemies.

Publishing pressure affects all sciences, but the nature of nanotechnology renders the corrupting influence extreme. Although string theory is driven by publish-or-perish like few other fields, such theoretical work can be checked. Data in experimental as well as theoretical (numerical simulation) nanotechnology are taken on trust already between the principal investigator (PI) and students or postdoctoral workers. The PI is a writer or just a manager, selected by the environment according to the ability to ‘sell’ and be social. In such a ‘scientific community’, criticism hardly arises anymore; again this is evolution by selection, a fundamental ‘conspiracy’ so effective that no conspiracy is necessary or could ever be equally stable.

For example, the memristor predicted on grounds of fundamental physical symmetries has not been found,[4] and this was obvious to many after the claimed memristor discovery of 2008. However, criticism cannot be properly published, say in journals close to the field. Well-connected researchers were allowed to complain that they did not get the same attention for their own devices, but this only distracts from the fact that the devices are not the hypothesized (and thus “missing”) memristors.

“Memristor,” much like “nano,” is such a catchy label, and proves so successful in popular media as well as in getting high impact factor articles published, that all researchers’ interests are best served by jumping onto the bandwagon and somehow relating their own work to the memristor label. The memristor case is one of many examples.

The lack of critical papers and the self-interested peer review, which mainly checks whether the reviewers’ papers are cited, are of course matched by much fraudulent and shoddy work; it is far easier to get nonsense published than the slightest criticism. Questionable publications can be found in endless supply simply by doing basic statistical analysis of published data.

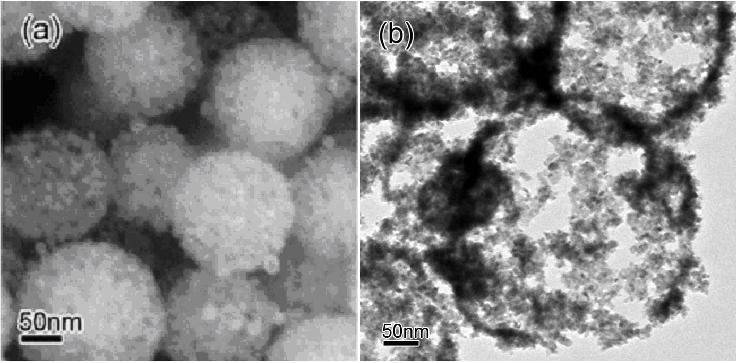

What can we practically do without committing career suicide? One aspect that can be improved easily is statistics. Statistics often reveals unexpected errors. This important aspect of the scientific method is as central to modern medicine and particle physics as their own specific theories, and it is accepted as crucial in such mature fields. In nanotechnology, however, statistics is reduced to the width of a statistical distribution, which is perceived as an ugly “error” that may undermine the claim of superior control and the publishing of “nice” looking images, although the perceived beauty of a nano-structure seldom optimizes desired properties (see Figure). Ironically, many researchers discard most of their data this way! They do not understand science deeply enough to appreciate a fundamental fact: the shape of a distribution is not just an ‘error’ (standard deviation) but data carrying more information than the average value; in fact, the average often falls out from the behavior of the width alone!

Figure: Pd nano shells, (a) scanning electron microscope image of complete spheres and (b) tunneling electron microscope image of incomplete shells. In catalysis, the ugliest compounds with the broadest size distributions are often the most active. The incomplete shells outperform their nicer looking, more narrowly size dispersed counterparts.

Widths and distribution shapes contain information about the processes that led to them.[5, 6] The statistics of partially random processes can predict the resulting statistical distributions. Statistics often reveals surprisingly large systematic errors, which can be much bigger[7] than the already often surprisingly large shift caused by neglecting widths.
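As a minimal illustration of how a partially random process fixes the shape of the resulting distribution, the sketch below simulates droplets that each undergo many independent, rarely successful pickup attempts. The setup (number of attempts, success probability, and all function names) is assumed purely for illustration; it is not the model of refs. [5, 6]. The counts approach a Poisson distribution, for which the variance equals the mean, so in this case the width alone already gives the average.

```python
import math
import random
from collections import Counter


def simulate_pickups(n_droplets, n_attempts, p_success, seed=0):
    """Return the number of captured particles for each droplet.

    Each droplet makes n_attempts independent attempts, each succeeding
    with probability p_success (hypothetical toy parameters).
    """
    rng = random.Random(seed)
    return [
        sum(1 for _ in range(n_attempts) if rng.random() < p_success)
        for _ in range(n_droplets)
    ]


def mean_and_variance(values):
    """Sample mean and unbiased sample variance."""
    n = len(values)
    mean = sum(values) / n
    variance = sum((v - mean) ** 2 for v in values) / (n - 1)
    return mean, variance


def poisson_pmf(k, lam):
    """Poisson probability of observing k events at mean lam."""
    return math.exp(-lam) * lam ** k / math.factorial(k)


# Assumed toy values: many attempts, small success probability.
counts = simulate_pickups(n_droplets=5000, n_attempts=200, p_success=0.01)
mean, variance = mean_and_variance(counts)
histogram = Counter(counts)

print(f"mean = {mean:.3f}, variance = {variance:.3f}")
for k in sorted(histogram):
    observed = histogram[k] / len(counts)
    predicted = poisson_pmf(k, mean)
    print(f"k = {k:2d}  observed {observed:.4f}  Poisson {predicted:.4f}")
```

If the measured variance-to-mean ratio of such counts deviates clearly from one, the simple independent-pickup picture is incomplete, which is exactly the kind of information an average alone cannot provide.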

But not even this latter, most basic problem is widely appreciated: The average or maximum/modal values are usually misleading and should enter calculations only with correction formulas.[8] The good news is: Given today’s computing power, we often do not need to understand proper error propagation, because the computer can store the entire distribution, calculate with it, and simulate distributions. It has never been easier to see how, for example, a neglected long tail of a statistical distribution of sizes can make a growth process look like a fractionation (the opposite of growth) instead.
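A small numerical sketch of why the plain average misleads: if a property scales with particle volume, that is, with the cube of the diameter, then inserting the mean diameter underestimates the mean volume whenever the size distribution has a width. The log-normal size distribution, its parameters, and the function names below are assumptions chosen for illustration. For this particular case the correction factor is known in closed form, exp(3σ²), and the simulated distribution reproduces it without any error propagation done by hand.

```python
import math
import random


def sample_diameters(n, mu, sigma, seed=1):
    """Draw n diameters from a log-normal distribution (assumed shape)."""
    rng = random.Random(seed)
    return [rng.lognormvariate(mu, sigma) for _ in range(n)]


def volume(diameter):
    """Volume of a sphere with the given diameter."""
    return math.pi * diameter ** 3 / 6.0


# Assumed toy parameters: median diameter 10 nm, log-width 0.4.
mu, sigma = math.log(10.0), 0.4
diameters = sample_diameters(200_000, mu, sigma)

mean_diameter = sum(diameters) / len(diameters)

# Naive estimate: plug the average diameter into the formula.
naive_volume = volume(mean_diameter)

# Full estimate: apply the formula to every particle, then average.
full_mean_volume = sum(volume(d) for d in diameters) / len(diameters)

ratio = full_mean_volume / naive_volume
expected_ratio = math.exp(3.0 * sigma ** 2)  # closed-form log-normal correction

print(f"naive volume from mean diameter: {naive_volume:.1f} nm^3")
print(f"mean volume from distribution:   {full_mean_volume:.1f} nm^3")
print(f"simulated correction factor:     {ratio:.3f}")
print(f"closed-form correction factor:   {expected_ratio:.3f}")
```

With a log-width of 0.4 the closed-form factor is about 1.6, so neglecting the width would already shift a volume-dependent result by roughly 60 percent. For distributions without a convenient formula, the same per-particle averaging still works.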

Once the data are in the computer, statistical analysis can extract more than just averages and regression lines, and this is good news especially for researchers short on cash or staff. Once the statistics are in order, statistical and systematic errors are understood separately, and something more has usually been discovered along the way. The main problem left is: The data have to enter the computer. This can be eyesight destroying unless the computer does most of the data entry automatically. This is why I developed image recognition methods for nanotechnology.

--------------------

This article continues the series “Adapting As Nano Approaches Biological Complexity: Witnessing Human-AI Integration Critically”, where the status of all this as suppressed information has already been discussed. I allow myself to actually publish the most interesting and critical parts here (edited). If citing, please nevertheless cite [1] in order to support the author.

Previous posts were “Complexity And Optimization: Lost In Design Space” and “Magic Of Complexity With Catalysts Social Or Metallic”.

I will finish next week or so with “A flexible, evolving approach to computing.”

------------------------

[1] S. Vongehr et al., Adapting Nanotech Research as Nano-Micro Hybrids Approach Biological Complexity. J. Mater. Sci. Technol., 2016, 32(5), 387-401. doi:10.1016/j.jmst.2016.01.003

[2] Z. G. Qiu, Y. M. Yu, Z. L. Chen, M. Jin, D. Yang, Z. G. Zhao, J. F. Wang et al., Nanoalumina promotes the horizontal transfer of multiresistance genes mediated by plasmids across genera. PNAS, 2012, 109, 4944-4949.

[3] H. M. Collins, Tantalus and the aliens: Publications, audiences, and the search for gravitational waves. Soc. Stud. Sci., 1999, 29, 163-197.

[4] S. Vongehr et al., The Missing Memristor has Not been Found. Sci. Rep., 2015, 5, 11657.

[5] S. Vongehr et al., On the Apparently Fixed Dispersion of Size Distributions. J. Comp. Theo. Nanosci., 2011, 8, 598-602.

[6] S. Vongehr and V. V. Kresin, Unusual pickup statistics of high-spin alkali agglomerates on helium nanodroplets. J. Chem. Phys., 2003, 119, 11124-11129.

[7] S. Vongehr et al., Promoting Statistics of Distributions in Nanoscience: The Case of Improving Yield Strength Estimations from Ultrasound Scission. J. Phys. Chem. C, 2012, 116, 18533-18537.

[8] S. Vongehr et al., Collision statistics of clusters: from Poisson model to Poisson mixtures. Chin. Phys. B, 2010, 19, 023602-1 - 023602-9.
