In my article Digging Beneath the Surface of Grammar, I wrote:

It may be noted in passing that Searle's Chinese Room argument fails to convince because, amongst other defects of logic, for every possible natural language input there are infinitely many possible outputs. A machine capable of conversing in a convincing, human-like manner cannot be based on a book of look-up rules, however large that book might be. The output of a machine capable of passing (or, even better, administering) a Turing test must be designed around something more than a list, however large, of look-up rules.

I recently covered the Chinese Room Argument in Thinking Machines and The Semantic Quagmire. In this article I cover it again, but from a slightly different perspective and with a greater focus on the fundamentals of intelligence. I have separated the material into two articles because, combined, it would be far too long for a blog post.

The Intelligence in the Chinese Room

The publication of John Searle's Chinese Room Argument (Searle 1980a; 1984, pp. 38f; 1988; 1989a; 1990a) has spawned a multitude of arguments for and against the notion that a computer might think: that it might one day have some sort of mind, or be intelligent in any human-like way.

In the whole field of artificial intelligence (AI), and more specifically in computational linguistics, the Chinese Room Argument (CRA) is perhaps the most influential and widely cited publication. The CRA has its supporters and its critics. Perhaps its most serious failing is that it does not adequately address the root AI question, "Can machines think?" (Turing 1950, p. 433). Once the elements of argumentum ad hominem are stripped away, along with the way it appears to address the issues by dividing AI into subsets, there is apparently very little left to discuss.

The core argument of the CRA

Given:

(a) It is theoretically possible to build a Chinese room (CR).

(b) To the outside observers, the room appears to understand Chinese.

(c) Searle, the human operator, does not understand Chinese.

(d) Intelligence is required to understand a language.

(e) The only possible intelligence in the room resides in Searle.

it follows:

(f) The room is not using any intelligence in its "understanding" of Chinese.

In order to have any sort of rational debate about the CR, axioms (a), (b), (c) and (d) must be accepted. This leaves only axiom (e) and the conclusion (f) as valid topics of debate.

On the surface, axioms (c), (d) and (e) appear to combine into an unbreakable chain of logic:

Intelligence is needed to understand language,

and

Searle does not understand Chinese,

hence:

It cannot be his intelligence at work in the room.

There is no other intelligence in the room,

hence:

The CR is not "really" intelligent,

q.e.d.
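The chain of logic above can be checked mechanically. The sketch below is one possible propositional reading of it, not Searle's own formalisation: the variables I, S and C and the "hidden premise" H (that if Searle's intelligence is at work, he must understand Chinese, a converse reading of (d)) are assumptions introduced for illustration. A brute-force truth table tests whether (f) follows.

```python
from itertools import product

# One possible propositional reading of the chain (an assumption, not Searle's):
#   I: some intelligence is at work in the room
#   S: Searle's intelligence is at work in the room
#   C: Searle understands Chinese
def implies(p, q):
    return (not p) or q

def is_valid(premises, conclusion):
    """True if the conclusion holds in every assignment that satisfies all premises."""
    for I, S, C in product([False, True], repeat=3):
        world = {"I": I, "S": S, "C": C}
        if all(p(world) for p in premises) and not conclusion(world):
            return False  # counterexample found
    return True

premise_c = lambda w: not w["C"]               # (c) Searle does not understand Chinese
premise_e = lambda w: implies(w["I"], w["S"])  # (e) the only intelligence is Searle's
hidden_h = lambda w: implies(w["S"], w["C"])   # H (assumed): his intelligence at work => he understands
conclusion_f = lambda w: not w["I"]            # (f) no intelligence is at work in the room

print(is_valid([premise_c, premise_e, hidden_h], conclusion_f))  # True
print(is_valid([premise_c, premise_e], conclusion_f))            # False
```

Without H, the counterexample is exactly the situation argued for later in this article: Searle's intelligence is at work (I and S true) while he still does not understand Chinese (C false).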

The CRA appears to defy common sense. We assume that the ability to use natural language is a classic mark of intelligence, yet in the CR that intelligence can only be ascribed to Searle, who does not understand Chinese. There is a way out of this impasse, but first we must examine, at its most fundamental level, what it is that we call "intelligence".

What is Fundamental Intelligence?

We do not ascribe intelligence to entirely inanimate objects. Nobody has ever observed a stone voluntarily moving out of the way of a sledge-hammer. Once we move into the realm of living things, the picture is different. Even a plant has the "intelligence" to send its roots downwards and its shoots upwards. It does not matter that this is "only" a chemical response to a stimulus. In all living creatures, every response to every stimulus is biochemical at its most basic level. Stimulus-response (S-R) behaviours can combine into behaviours so complex that they seem to defy analysis.
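As a toy illustration of simple S-R behaviours joining together, the sketch below chains hypothetical rules so that each response serves as the stimulus for the next. The rule names are invented for illustration and make no claim about real plant biology.

```python
# Hypothetical S-R rules: each response becomes the stimulus for the next rule.
SR_RULES = {
    "gravity": "root grows down",
    "root grows down": "root meets moist soil",
    "root meets moist soil": "root absorbs water",
    "light": "shoot grows up",
}

def chain(stimulus, rules, max_steps=10):
    """Follow S-R rules from an initial stimulus until no rule applies."""
    behaviour = []
    # max_steps guards against rules that loop back on themselves
    while stimulus in rules and len(behaviour) < max_steps:
        stimulus = rules[stimulus]  # the response becomes the next stimulus
        behaviour.append(stimulus)
    return behaviour

print(chain("gravity", SR_RULES))
print(chain("light", SR_RULES))
```

Each rule is trivial on its own; the three-step root behaviour only appears when the rules are chained.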

On the surface, even the simplest single S-R behaviour appears incapable of further simplification. Turning once more to the realm of computers, Turing machines and binary logic, however, we find a way to break it down.

The Turing machine is the classic minimal model of a computing machine. To operate at all, it must be able to distinguish between two states, which we humans prefer to visualise as zeros and ones. It does not matter how the data is encoded: chemical, biochemical, electronic or mechanical, it is all one to the mathematical model. What matters is that there is a detector.

Put another way, the simplest operation that any plant, creature or computer can perform is to determine that x is not y, that 1 is not 0. If there is a most fundamental "particle of intelligence", this is it: detection, the ability or capacity to detect something or some state.

At a minimum, a plant or animal needs to be able to determine, for its very survival, whether or not a chemical is a nutrient. The simplest virus replicates by putting together the fundamental building blocks of its own special form of life. It could not do that if it could not tell chemical x from chemical y.

In much the same way, the most basic Turing machine cannot operate unless it can detect a zero condition and perform action x; the absence of a zero condition leads it to perform action y. There is no need for two states, merely a state and the absence of that state. The notion of states 1 and 0 is a useful model that helps us humans comprehend the machine, but it is not essential to its working.
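A minimal sketch of that single detector, assuming hypothetical names (`detector`, `step`): the machine tests for only one condition, here the symbol "0", and every other input, whatever it happens to be, counts simply as the absence of that condition.

```python
def detector(symbol, target="0"):
    """The most basic detector: is the target condition present or not?"""
    return symbol == target

def step(symbol):
    # Action x when the zero condition is detected; action y on its absence.
    # The machine never needs to know what a non-zero symbol actually is.
    return "action x" if detector(symbol) else "action y"

for symbol in ["0", "1", "#", " "]:
    print(repr(symbol), "->", step(symbol))
```

Note that "1" is not a second state the machine recognises; it is handled exactly like "#" or a blank, as "not 0".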

This model of intelligence cannot account for more than the most basic forms of life; indeed, it applies equally to the growth of a crystal. However, only a very simple enhancement is needed to account for the intelligence in all higher forms of life, including mankind. The fundamental particle of intelligence is the ability to apply a 'Goldilocks test', a simple go/no-go gauge. There may be too much of x, too little of x, or 'just right'. By focusing on the 'just right' condition and excluding all others, a simple mechanism can determine whether or not a condition is satisfied.
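The go/no-go gauge can be sketched as a single range check. The bounds `low` and `high` are assumptions standing in for whatever 'just right' means for a given organism or machine.

```python
def goldilocks(value, low, high):
    """Go/no-go gauge: True only when the value is 'just right' (low <= value <= high).

    Too little and too much are not distinguished; both are simply 'no-go'.
    """
    return low <= value <= high

# Hypothetical example: a window of 18 to 30 units counts as 'just right'.
for reading in [10, 18, 25, 40]:
    print(reading, "go" if goldilocks(reading, 18, 30) else "no-go")
```

The design choice worth noticing is what the gauge throws away: a reading of 10 and a reading of 40 produce the same answer.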

The exact nature of the difference between x and y is irrelevant. There will always be a zone of uncertainty, a limit of discriminability, and on either side of it lie two infinitely large zones of indifference: within each, the degree of x (or of not-x) is irrelevant. Mere true-false detection is therefore an indifference operation. The next step up in intelligence, the capacity to grade or sort things that are in some way similar, is a difference operation.
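The two kinds of operation can be contrasted in a few lines, assuming numeric readings and a hypothetical threshold of 0.5. The single threshold idealises away the zone of uncertainty described above.

```python
def indifference(samples, threshold):
    """True/false detection: only the side of the threshold matters, not the degree."""
    return [s >= threshold for s in samples]

def difference(samples):
    """Grading: similar things are ordered by how much of x each one has."""
    return sorted(samples)

brightness = [0.7, 0.1, 0.9, 0.4]      # hypothetical readings of x
print(indifference(brightness, 0.5))   # [True, False, True, False]
print(difference(brightness))          # [0.1, 0.4, 0.7, 0.9]
```

The indifference operation treats 0.7 and 0.9 as identical; the difference operation is what tells them apart.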

The simplest level of intelligence reacts to, say, the presence or absence of light. The next stage is the ability to react to, for example, the brightness of light. This requires either a basic comparator made of two basic detectors, or a memory unit made of a single detector and a reference or datum. Fundamental intelligence is a blend of indifference and difference operations: it enables a living creature or a computer to sort things into categories, or sets.
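The two routes to a comparator can be sketched side by side, assuming numeric brightness readings: `comparator` reads two detectors at once, while the hypothetical `MemoryUnit` compares one detector's reading with a stored datum. `categorise` then shows the blend of operations sorting readings into sets.

```python
def comparator(a, b):
    """Two detectors read side by side: 1 if a is stronger, -1 if weaker, 0 if equal."""
    return (a > b) - (a < b)

class MemoryUnit:
    """One detector plus a stored reference (datum) to compare readings against."""

    def __init__(self, datum):
        self.datum = datum

    def compare(self, reading):
        return comparator(reading, self.datum)

def categorise(readings, memory):
    """Grade each reading against the datum (difference), then bin it into a set."""
    names = {-1: "dimmer", 0: "same", 1: "brighter"}
    sets = {name: [] for name in names.values()}
    for r in readings:
        sets[names[memory.compare(r)]].append(r)
    return sets

print(categorise([3, 5, 8, 5, 1], MemoryUnit(datum=5)))
# {'dimmer': [3, 1], 'same': [5, 5], 'brighter': [8]}
```

Within each set the exact values no longer matter, which is the indifference half of the blend.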

The notion of comparators and sets can be applied to language. Words can be sorted syntactically into grammatical classes, or sets. They can also be sorted semantically, as in a thesaurus. Whether a sorting algorithm is based on syntax, on semantics or even on some as-yet-undiscovered rule of human grammar, it must rely on some form of pattern matching.
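A toy sketch of both kinds of sorting, assuming a hypothetical mini-lexicon: crude suffix patterns stand in for grammatical classes, and a tiny hand-made dictionary stands in for a thesaurus. Real grammatical classification is far harder; the point is only that both sorts reduce to pattern matching.

```python
import re

# Hypothetical suffix patterns standing in for grammatical classes.
SYNTAX_PATTERNS = [
    ("adverb", re.compile(r"\w+ly$")),
    ("verb (-ing form)", re.compile(r"\w+ing$")),
    ("noun (abstract)", re.compile(r"\w+(ness|tion)$")),
]

# Hypothetical thesaurus: each word maps to a semantic set.
THESAURUS = {
    "happiness": "emotion", "joy": "emotion",
    "running": "motion", "walking": "motion",
}

def syntactic_set(word):
    """Return the first grammatical class whose pattern the word matches."""
    for label, pattern in SYNTAX_PATTERNS:
        if pattern.match(word):
            return label
    return "unclassified"

def semantic_set(word):
    """Look the word up in the toy thesaurus."""
    return THESAURUS.get(word, "unclassified")

for w in ["quickly", "running", "happiness", "joy"]:
    print(w, "|", syntactic_set(w), "|", semantic_set(w))
```

"joy" matches no syntactic pattern but does match a semantic set, which shows that the two kinds of sorting are independent of each other.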

Pattern matching in the Chinese Room

Here, then, is the flaw in the Chinese Room Argument. Reading is a process of comparing dots and lines using the comparator circuits in the eye-brain-memory system. The shapes are compared with stored patterns and sorted into sets. Sets compound into supersets until the conscious mind is able to recognise a pattern. When Searle looks at the cards bearing the Chinese characters, he is comparing them with illustrations in a pattern-book. This is intelligence in operation. He follows a set of instructions: again, intelligence in operation. He selects cards for output, once more by matching patterns. Intelligence is essential even to menial work.
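Searle's routine in the room can be sketched as pure pattern matching. The pattern-book below is hypothetical and tiny, and exact string equality stands in for the visual comparison of character shapes.

```python
# Hypothetical pattern-book: input card -> output card.
# Searle compares shapes only; he never needs to know what they mean.
PATTERN_BOOK = {
    "你好": "你好！",
    "你会说中文吗？": "会，一点点。",
}

def chinese_room(card, book=PATTERN_BOOK):
    """Compare the incoming card with each pattern; return the matching output card."""
    for pattern, reply in book.items():
        if card == pattern:   # shape comparison
            return reply      # select the matching output card
    return None               # no pattern matched: the room falls silent

print(chinese_room("你好"))
print(chinese_room("今天天气怎么样？"))
```

Every step in the loop is a comparison, which is the point made above. An input that is not in the book gets no reply at all, echoing the opening point that no book of look-up rules, however large, can cover every possible input.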

If Searle were unconscious, the room would cease to function. The fact that Searle is not personally conscious of using his intelligence to operate the room is no proof that the room is not intelligent. Rather, the fact that the whole CR process is entirely dependent on Searle's being in a state of awareness is proof that the external observers are right in assuming the presence of an intelligence in the Chinese Room.

The room is a pattern-matching machine. It reacts intelligently to the observers, as if aware of their presence and their needs. It converses with them in Chinese. By any rational standard, it is intelligent. Any machine able to perform enough pattern-matching operations per second to converse in a natural human language will be intelligent in a human-like way, q.e.d. One may even say that a human being is a highly complex pattern-detection system.

Artificial intelligence is just a matter of degree

The proper question is not "Can a machine think?" since, having the capacity to compare states, it obviously does. The proper question, and the one currently being addressed, is: "How do we improve a machine so that it thinks more like us?"

That question will be answered when we can program computers to pass the Turing test readily. Perhaps one day, in a reflexive Turing test, computers will outperform humans as judges of such competitions. Such a capacity follows as a corollary of Isaac Asimov's famous Three Laws of Robotics: since a robot must obey a human in preference to another robot, it must have the intelligence to tell the two apart.

The greatest problem in making a computer understand natural language is freedom of speech. Any utterance can trigger any other utterance at all, or no utterance. The rules of conversation are only a guide. The joy of language is that we can break the rules with impunity. As Lewis Carroll's Humpty Dumpty famously put it, a word means just what we choose it to mean.

The inventiveness of language

By suitable manipulation, any word at all can mean anything at all. We can also invent new words at will. The most basic rules of natural language dialogue are these:

1. Make any word mean anything.

2. Invent any word to mean anything.

provided that:

3. Both parties to the exchange continue to understand each other.

Continued understanding is ensured by the deep interdependence of words, together with conventions of use. These are conventions of syntax, of semantics and of application, constrained by context; together they make up what I call the rules of conformity of natural language.

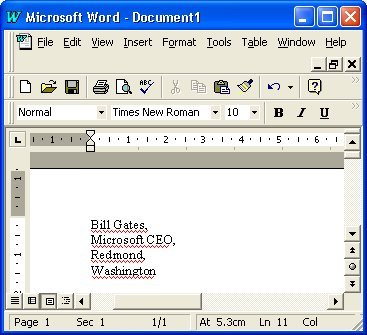

Current computer programs are fairly moronic. The word processor that underlines my name in red is a good example. I do not need to persuade the computer that "Lockerby" means "Lokie's byre". I do, however, need it to accept that the word is a name, a convenient label for a particular sub-group of human beings. Once we begin to understand how language really works, we can write word-processing code that flags only what a human reader might also find objectionable.
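A minimal sketch of the kind of checker described above, assuming a hypothetical tiny word list and one crude heuristic: an unknown word that is capitalised in mid-sentence is accepted as a name instead of being flagged. This illustrates the idea; it is not how any particular word processor works.

```python
import re

# Hypothetical word list; a real checker would load a full dictionary.
DICTIONARY = {"my", "name", "is", "and", "i", "live", "in", "a", "byre"}

def flag_words(text, dictionary=DICTIONARY):
    """Return unknown words, but accept capitalised unknowns mid-sentence as names."""
    flagged = []
    sentence_start = True
    for token in re.findall(r"[A-Za-z']+|[.!?]", text):
        if token in {".", "!", "?"}:
            sentence_start = True  # the next word begins a new sentence
            continue
        known = token.lower() in dictionary
        looks_like_name = token[0].isupper() and not sentence_start
        if not known and not looks_like_name:
            flagged.append(token)
        sentence_start = False
    return flagged

print(flag_words("My name is Lockerby and I live in a byer."))
```

Here only the misspelling "byer" is flagged, while "Lockerby" is accepted as a name. A name at the very start of a sentence is still flagged, so the heuristic only goes part of the way towards flagging just what a human would object to.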

Further reading:

The Chinese Room Argument

The Turing Test

I Think, But Who Am I?

Related Articles in my blog:

Intelligence Made Simple

A Journey to the Centre of the Universe

Digging Beneath the Surface of Grammar

Thinking Machines and The Semantic Quagmire
