---

My ATLAS colleagues will have to pardon me for the slightly sensationalistic title of this article, but indeed the question is one which many inside and outside CERN are asking themselves upon looking into the new public material of ATLAS Higgs boson searches using the 2011 dataset in conjunction with the first part of 2012 data.

My two pence: relax. This is normal business - if we had to get excited at every slight disagreement between our measurements and our expectations, we'd be sick with Priapism (sorry ladies for this gender-specific pun).

The fact is, we love to speculate. We'd love it if we ended up discovering that what we announced last July as THE Higgs boson were in fact two, or more, distinct states. It would be like discovering a vein of diamonds while digging in a gold mine. So observing that the Higgs peak in the diphoton final state of ATLAS sits over two GeV away from the peak of the H->ZZ signal is music to our ears. Yet we need to keep our feet on the ground.

So first of all let me give you the basic facts.

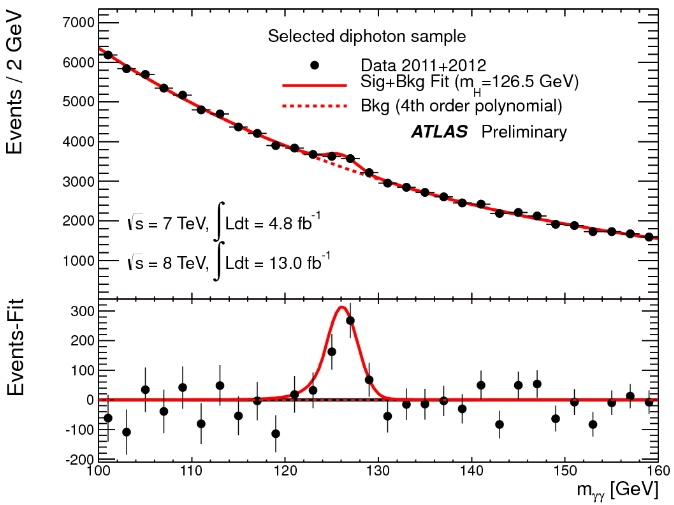

ATLAS has a very nice signal of H->γγ in a total of 17.8/fb of collision data. You can check it out in the figure on the right, which shows the combined spectrum of all their diphoton signal categories on top, and the background-shape-subtracted data on the bottom part, showing a very clear bump at 126.5 GeV. You can make no mistake here: that is a new resonance for sure.

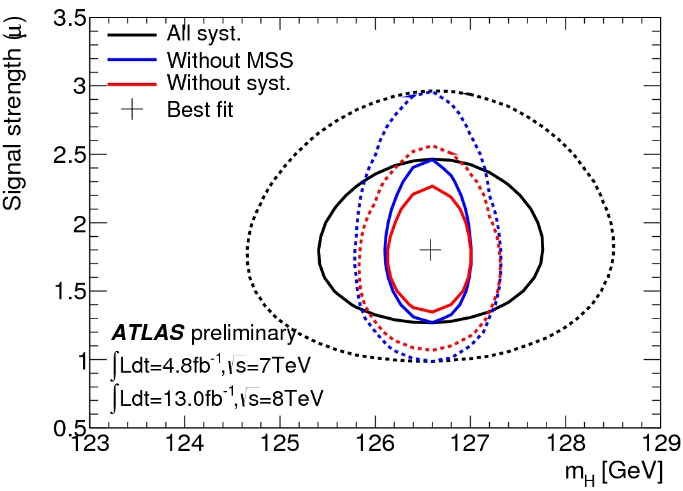

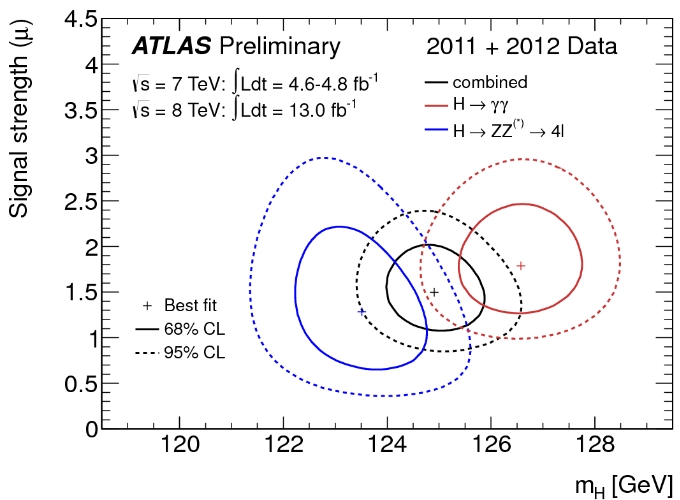

They use the signal for a measurement of mass and cross section, and they obtain the result shown in the figure below: the one- and two-sigma contours show that the mass is in the neighbourhood of 126.5 GeV. The cross section of these final states measured by ATLAS is slightly higher than the standard model calculation, sitting at μ=1.8 (1.0 is the standard model prediction). That the ATLAS signal is stronger than expected is no news of course; the compatibility with the standard model rate is at the level of 5%, so I am not impressed by this.

(If you need more detail on what the graph shows: the full line in black shows the full measurement and the one-sigma contour around the best-fit values of mass and signal strength; the black dashes show the 95% CL contour. The blue and red companion ovals show what happens if the fit does not account for the systematic uncertainties due to the energy scale and to other sources.)

BONUS TRACK: an added side note on the greedy bump bias

I should note that there is an interesting effect to consider when fitting a small signal on top of a large background, if you do not know the true signal mass. Any fitter will try to maximize the signal, in order to make best use of the signal shape parameters, by "searching" for fluctuations right or left of the main peak, adapting the mass parameter accordingly. By doing that, the rate measurement is potentially upward biased.

I studied the topic a long time ago in the context of investigations of a weird bump in the dimuon mass distribution that CDF used to have at 7.2 GeV, and produced an internal CDF note on the subject. Later, three years ago, I wrote two detailed posts on this site on the matter. What I found is that in general, the bias in signal strength is a universal function of the ratio between signal and square root of background under the signal. This could be guessed from first principles, but what is interesting is that one can look back at the plots I produced with pseudoexperiments a dozen years ago, and guesstimate the size of the potential rate bias in the H->γγ fit.

The matter is complicated by the fact that these H->γγ results are actually the combination of eight different channels, each having a different value of signal to noise. Nevertheless I tried some eyeballing of the relevant distributions. It appears that the s/sqrt(b) values in the eight ATLAS categories are not too different from each other (as far as is relevant to our purpose here): in a five-bin window spanning the signal region I observe s/sqrt(b) values ranging from 0.7 to 1.5, give or take a cow or two [No, the last sentence cannot possibly make sense to you if you are not Italian].

Anyway, for similar situations my toy studies indicated biases of the order of 10% in the signal strength extracted by the fits. I wonder if ATLAS corrects for this bias or not - I know that the bias has been recognized (although they now call it by a different name from the one I originally gave it), so they might well be doing it already.
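For readers who want to see the greedy bump bias at work, here is a minimal toy in Python. It is only a sketch under loud assumptions, not the pseudoexperiment study mentioned above and not anything ATLAS does: the signal sits in a single bin, the background level is taken as known, Poisson counts are approximated by Gaussians, and the "fit" simply picks the bin with the largest excess inside a five-bin window. The function name `toy_rate_bias` and all its parameters are made up for illustration.

```python
import math
import random


def toy_rate_bias(s_over_sqrt_b, background=400.0, half_window=2,
                  n_trials=20000, seed=1):
    """Return (fixed_ratio, floating_ratio): mean fitted signal / true signal.

    Toy assumptions (all hypothetical): single-bin signal, known flat
    background, Gaussian approximation to Poisson counts.
    """
    rng = random.Random(seed)
    signal = s_over_sqrt_b * math.sqrt(background)
    n_bins = 2 * half_window + 1  # window centred on the true mass bin
    fixed_sum = 0.0
    floating_sum = 0.0
    for _ in range(n_trials):
        excess = []
        for i in range(n_bins):
            # Only the central bin contains signal.
            mean = background + (signal if i == half_window else 0.0)
            count = rng.gauss(mean, math.sqrt(mean))
            excess.append(count - background)
        # "Known mass" fit: read the excess in the true bin.
        fixed_sum += excess[half_window]
        # "Floating mass" fit: greedily take the largest excess in the window.
        floating_sum += max(excess)
    return fixed_sum / n_trials / signal, floating_sum / n_trials / signal


for ratio in (1.0, 3.0):
    fixed, floating = toy_rate_bias(ratio)
    print(f"s/sqrt(b)={ratio}: fixed={fixed:.3f}, floating={floating:.3f}")
```

By construction the floating-mass estimate can never come out below the fixed-mass one, and the excess should shrink as s/sqrt(b) grows. The actual numbers depend entirely on these simplifications, so they should not be compared with the 10% quoted above.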

Back to business

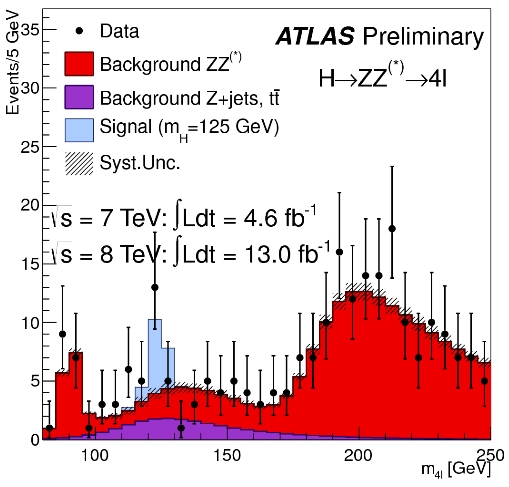

Now let us instead turn to the H->ZZ->four lepton decay mode. Here, the signal to noise ratio is much larger (indeed the four-lepton final state is dubbed the "golden" decay mode for Higgs searches), but the number of Higgs boson candidates you can find in any dataset is smaller. Although the branching fraction of Higgs bosons to ZZ* pairs for a 125-GeV Higgs is one order of magnitude larger than the branching fraction to photon pairs, in the former case you have to multiply that by the branching fraction of Z bosons to electron or muon pairs, each of which is a mere 3.3%, and you have to do so once for each of the two Z bosons! So the golden mode is really rare, and this is reflected in the paucity of signal events in the graph on the right. A clear signal is visible here too, however: it is evidenced by the blue histogram (the expected Higgs contribution for a Higgs mass of 125 GeV).
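The arithmetic behind "really rare" can be spelled out in a few lines. This is a back-of-the-envelope sketch using only the numbers quoted above: 3.3% for each of Z->ee and Z->μμ, and a factor of ten (assumed here as the meaning of "one order of magnitude") between the ZZ* and γγ branching fractions. The function names are invented for illustration, and detector acceptance and efficiency are ignored.

```python
def four_lepton_fraction(br_z_to_one_flavour=0.033):
    """Fraction of ZZ pairs in which both Z bosons decay to ee or μμ."""
    br_z_to_leptons = 2 * br_z_to_one_flavour  # electron pair or muon pair
    return br_z_to_leptons ** 2  # both Z bosons must decay this way


def four_lepton_to_diphoton_ratio(zz_over_gammagamma=10.0,
                                  br_z_to_one_flavour=0.033):
    """Rate of H->ZZ*->4l relative to H->γγ, before any detector effects."""
    return zz_over_gammagamma * four_lepton_fraction(br_z_to_one_flavour)


print(f"fraction of ZZ* pairs giving four leptons: {four_lepton_fraction():.4f}")
print(f"four-lepton rate relative to diphoton: {four_lepton_to_diphoton_ratio():.3f}")
```

With these inputs only about 0.4% of ZZ* pairs end up as four electrons or muons, so before any detector efficiency the four-lepton mode yields roughly 4% as many decays as the diphoton mode.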

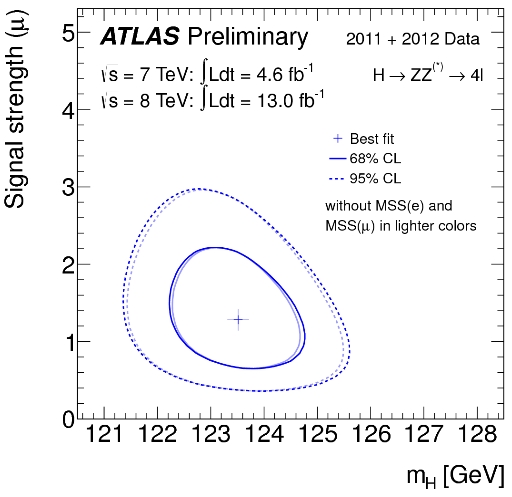


And now let us see (left) what the one- and two-sigma contours of the profile likelihood fit to the above signal look like, for mass M and signal strength μ (the latter, on the vertical axis, is the signal rate in units of the expected standard model signal, as usual). Here the signal strength is in better agreement with the standard model prediction, but the best-fit mass (123.5 GeV) is significantly lower than the 126.5 GeV fitted in the gamma-gamma mode! What is going on?

Indeed, what is going on is the question everybody is asking. However, consider this. The effect is only seen by ATLAS: the latest published results by CMS show consistency between the mass measurements in the diphoton and di-Z final states, and CMS has quite similar sensitivity to Higgs bosons. CMS sees signals in the 125.5 GeV ballpark, which is right in the middle of the "two" ATLAS signals. Have a look at the two ATLAS mass measurements compared one to the other, in the figure below.

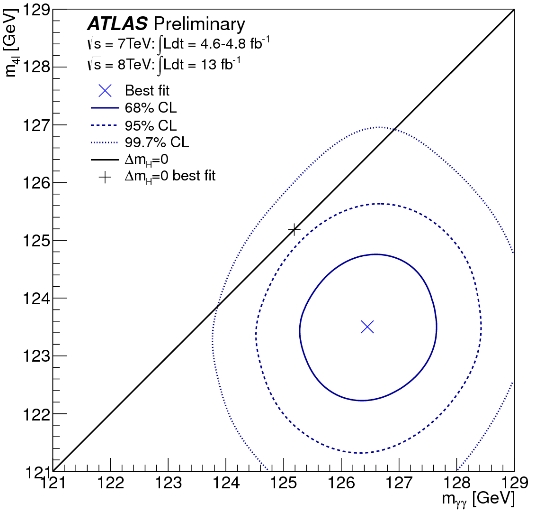

And maybe most interesting is the figure below, which shows the two mass measurements one against the other, with a diagonal line acting as "hypothesis zero" (that the two objects are indeed one and the same, such that the mass is the same and thus x=y): you can see that the two mass measurements are discrepant at a level of just over two standard deviations.
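As a rough guide to what "just over two standard deviations" means, here is a naive sketch that treats the two masses as independent Gaussian measurements. That is an assumption, and not how the ATLAS comparison is actually done (that uses the full likelihood). No mass uncertainties are quoted in this post, so the example only inverts the relation: given the 3 GeV gap between the 126.5 and 123.5 GeV best fits and a two-sigma tension, what combined uncertainty does that imply? The function names are hypothetical.

```python
import math


def pull(m1, sigma1, m2, sigma2):
    """Tension, in standard deviations, between two independent Gaussian
    measurements. The sigmas would have to be taken from the ATLAS notes."""
    return abs(m1 - m2) / math.hypot(sigma1, sigma2)


def implied_combined_sigma(m1, m2, n_sigma):
    """Combined uncertainty (in quadrature) that gives an n_sigma tension."""
    return abs(m1 - m2) / n_sigma


# Best-fit masses quoted in the text, in GeV.
print(implied_combined_sigma(126.5, 123.5, 2.0))  # 1.5
```

In this simplified picture a tension of a bit more than two sigma corresponds to a combined uncertainty of a bit less than 1.5 GeV on the mass difference.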

So, to answer the question, one idea is of course that some miscalibration systematics are affecting either or both mass measurements in ATLAS. However, I am sure this has been beaten to death by the experimenters before making the present results public.

Another idea is that the gamma-gamma signal contains some unexpected background which somehow shifts the best-fit mass to higher values, also contributing to the anomalously high signal rate. However, this does not hold much water either - if you look at the various mass histograms produced by ATLAS (there is a bunch here) you do not see anything that strikes you as suspicious in the background distributions.

Then there is the possibility of a statistical fluctuation. I think this is the most likely explanation, and I am willing to bet $100 with as many as five takers that the two measurements will be reconciled with each other once more data are added, and that no observation of a double state will be made. This however might take three years to sort out, given the impending shutdown of the LHC.

Finally, you might instead want to believe that we are indeed looking at the first hint of new physics, be it Supersymmetry or some other model producing multiple Higgs-like particles. Very exciting, but I just do not buy that.

Time will tell! So if you have some extra cash to throw away, consider taking the bet...
